PhD Thesis, The Open University

Recommended publications

The Origins of the Underline as Visual Representation of the Hyperlink on the Web: A Case Study in Skeuomorphism

Citation: Romano, John J. 2016. The Origins of the Underline as Visual Representation of the Hyperlink on the Web: A Case Study in Skeuomorphism. Master's thesis, Harvard Extension School. A thesis in the field of Visual Arts for the degree of Master of Liberal Arts in Extension Studies, Harvard University, November 2016.

Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:33797379

Terms of use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA

Abstract: This thesis investigates the process by which the underline came to be used as the default signifier of hyperlinks on the World Wide Web. Created in 1990 by Tim Berners-Lee, the web quickly became the most used hypertext system in the world, and most browsers default to indicating hyperlinks with an underline. To answer the question of why the underline was chosen over competing demarcation techniques, the thesis applies the methods of history of technology and sociology of technology. Before the invention of the web, the underline, also known as the vinculum, was used in many contexts in writing systems; collecting entities together to form a whole and ascribing additional meaning to the content.

MTS4CC Elementary Stream Compliance Checker Tutorials 001-1415-00

This document applies to software version 1.0 and above. www.tektronix.com

Copyright © Tektronix. All rights reserved. Licensed software products are owned by Tektronix or its subsidiaries or suppliers, and are protected by national copyright laws and international treaty provisions. Tektronix products are covered by U.S. and foreign patents, issued and pending. Information in this publication supersedes that in all previously published material. Specifications and price change privileges reserved. TEKTRONIX and TEK are registered trademarks of Tektronix, Inc.

Contacting Tektronix: Tektronix, Inc., 14200 SW Karl Braun Drive, P.O. Box 500, Beaverton, OR 97077, USA. For product information, sales, service, and technical support: in North America, call 1-800-833-9200; worldwide, visit www.tektronix.com to find contacts in your area.

Table of Contents: Getting Started; Basic Functions; How to Begin a Tutorial; Tutorial 1: H.263 Standards Compliance and Motion Vectors; Procedure; Conclusion; Tutorial 2: MPEG-4 Compliance; Procedure; Conclusions; Tutorial 3: MP4 Compliance Basics

Sharing Files and Folders in WTC and Workspaces: Workspaces v1.3x User Guide

GlobalSCAPE, Inc. (GSB). Corporate Headquarters Address: 4500 Lockhill-Selma Road, Suite 150, San Antonio, TX (USA) 78249. Sales: (210) 308-8267. Sales (Toll Free): (800) 290-5054. Technical Support: (210) 366-3993. Web Support: http://www.globalscape.com/support/

© 2008-2017 GlobalSCAPE, Inc. All Rights Reserved. August 2, 2017.

Table of Contents: How Do I Share Files?; WTC Administration; Enabling User Access to the Web Transfer Client; Localization (Language) Settings; WTC Error Messages in EFT; Disable CRC; Disabling "Update Your Browser" Prompts; Terms and ...

Effects of Scroll Bar Orientation and Item Justification in Using List Box Widgets

G. Michael Barnes, California State University Northridge, 18111 Nordhoff Street, Northridge, CA 91330-8281 USA, +1 818 677 2299. Erik Kellener, Hollywood Online Inc., 1620 26th St., #370S, Santa Monica, CA 90404, +1 310 586 2020.

Abstract: List boxes are a common user interface component in graphical user interfaces. In practice, most list boxes use right-oriented scroll bars to control left-justified text items. A two-way interaction hypothesis favoring the use of a scroll bar orientation consistent with list box item justification was obtained for speed of use and user preference. Item selection was faster with a scroll bar orientation consistent with list item justification. Subjects preferred left-oriented scroll bars with left-justified items and right-oriented scroll bars with right-justified items. These results support a design principle of locality for user interface controls and controlled objects.

Keywords: List widgets, scroll bar, graphical user interface design, usability study

Introduction: This electronic publication is an updated statistical analysis of Erik Kellener’s unpublished master’s thesis, “Are GUI Ambidexterous,” completed at California State University Northridge, CA, 1996. List boxes are used in many graphical user interfaces (GUI) today. Whether it’s a desktop P.C., a personal digital assistant (PDA) or an information kiosk at the grocery store, list boxes are integrated into most GUIs. The list box GUI component is usually present in an interface that asks a user to make a selection from a list of items. The size of the list of items can vary significantly; however, the screen area required by a list box is usually fixed.

Dealing with Document Size Limits

Introduction

The Electronic Case Filing system will not accept PDF documents larger than ten megabytes (MB). If the document size is less than 10 MB, it can be filed electronically just as it is. If it is larger than 10 MB, it will need to be divided into two or more documents, with each document being less than 10 MB.

Word Processing Documents

Documents created with a word processing program (such as WordPerfect or Microsoft Word) and correctly converted to PDF will generally be smaller than a scanned document. Because of variances in software, usage, and content, it is difficult to estimate the number of pages that would constitute 10 MB. (Note: See “Verifying File Size” below and, for larger documents, see “Splitting PDF Documents into Multiple Documents” below.)

Scanned Documents

Although the judges’ Filing Preferences indicate a preference for conversion of documents rather than scanning, it will be necessary to scan some documents for filing, e.g., evidentiary attachments must be scanned.

Here are some things to remember:
• Documents scanned to PDF are generally much larger than those converted through a word processor.
• While embedded fonts may be necessary for special situations, e.g., trademark, they will increase the file size.
• If graphs or color photos are included, just a few pages can easily exceed the 10 MB limit.

Here are some guidelines:
• The court’s standard scanner resolution is 300 dots per inch (DPI). Avoid using higher resolutions as this will create much larger file sizes.
• Normally, the output should be set to black and white.
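The 10 MB rule described in this excerpt can be checked before filing with a few lines of Python. This is only a minimal sketch under stated assumptions: the names `LIMIT_BYTES`, `min_parts`, and `check_pdf` are hypothetical, one megabyte is taken to be 1024 × 1024 bytes (the excerpt does not say which definition the filing system uses), and a file of exactly 10 MB is conservatively treated as needing a split. The part count is a lower bound only, since splitting a PDF by pages does not divide its bytes evenly.

```python
import os

# Assumption: 1 MB = 1024 * 1024 bytes; the excerpt does not define "MB".
LIMIT_BYTES = 10 * 1024 * 1024


def min_parts(size_bytes: int, limit_bytes: int = LIMIT_BYTES) -> int:
    """Lower bound on the number of parts so each part can be under the limit.

    With n parts, the average part size is size_bytes / n, which is strictly
    below limit_bytes exactly when n > size_bytes / limit_bytes.
    """
    if size_bytes < 0 or limit_bytes <= 0:
        raise ValueError("size must be >= 0 and limit must be > 0")
    return size_bytes // limit_bytes + 1


def check_pdf(path: str) -> tuple[int, int]:
    """Return (size in bytes, minimum number of parts) for a file on disk."""
    size = os.path.getsize(path)
    return size, min_parts(size)
```

A result of 1 from `min_parts` means the document is under the limit and needs no split; any larger value is the fewest documents it would have to be divided into, and the real number may be higher once page boundaries are taken into account.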

M-Explorer User's Guide

Table of Contents: Chapter 1 Using This Guide; Introduction; Key Concepts; Chapter Organization; Online Help; Manual Conventions; Chapter 2 Introduction to M-Explorer; Introduction; Key Concepts; System Component Interaction; OPC Data Access Servers; M-Inspector; M-Password

Thomson Reuters Spreadsheet Link User Guide

MN-212. Date of issue: 13 July 2011.

Legal Information: © Thomson Reuters 2011. All Rights Reserved. Thomson Reuters disclaims any and all liability arising from the use of this document and does not guarantee that any information contained herein is accurate or complete. This document contains information proprietary to Thomson Reuters and may not be reproduced, transmitted, or distributed in whole or part without the express written permission of Thomson Reuters.

Contents: About this Document; Intended Readership; In this Document; Feedback; Chapter 1 Thomson Reuters Spreadsheet Link; Chapter 2 Template Library; View Templates (Template Library)

How to Create and Maintain a Table of Contents

Version 0.2. First edition: January 2004. First English edition: January 2004.

Contents: Overview; About this guide; Conventions used in this guide; Copyright and trademark information; Feedback; Acknowledgments; Modifications and updates; Creating a table of contents; Opening Writer's table of contents feature; Using the Index/Table tab; Setting ...

ALGE DisplayStudio Manual

Table of Contents: 1 General; 2 Getting started; 2.1 Main Window; 2.2 Tree view for project navigation; 3 Lists; 3.1 List Builder; 3.1.1 Text panel; 3.1.2 Animation; 3.1.3 List compiling; 4 Animations and wipes; 4.1 Adding animation/wipe to the project; 4.2 Animation/wipe editing

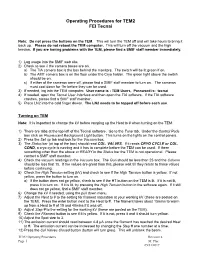

Operating Procedures for TEM2 FEI Tecnai

Note: Do not press the buttons on the TEM. This will turn the TEM off and will take hours to bring it back up. Please do not reboot the TEM computer. This will turn off the vacuum and the high tension. If you are having problems with the TEM, please find a SMIF staff member immediately.

1) Log usage into the SMIF web site.
2) Check to see if the camera boxes are on.
   a) The TIA camera box is the box behind the monitors. The switch will be lit green if on.
   b) The AMT camera box is on the floor under the Cryo holder. The green light above the switch should be on.
   c) If either of the cameras was off, please find a SMIF staff member to turn it on. The cameras must cool down for 1 hr before they can be used.
3) If needed, log into the TEM computer. User name: TEM Users. Password: tecnai
4) If needed, open the Tecnai User Interface and then open the TIA software. If the TIA software crashes, please find a SMIF staff member.
5) Place LN2 into the cold finger dewar. The LN2 needs to be topped off before each use.

Turning on TEM

Note: It is important to change the kV before ramping up the Heat to # when turning on the TEM.

1) There are tabs at the top left of the Tecnai software. Go to the Tune tab. Under the Control Pads box, click on the Fluorescent Background Light button. This turns on the lights on the control panels.

John Athayde and Bruce Williams: The Rails View

What readers are saying about The Rails View

This is a must-read for Rails developers looking to juice up their skills for a world of web apps that increasingly includes mobile browsers and a lot more JavaScript.
➤ Yehuda Katz, Driving force behind Rails 3.0 and Co-founder, Tilde

In the past several years, I’ve been privileged to work with some of the world’s leading Rails developers. If asked to name the best view-layer Rails developer I’ve met, I’d have a hard time picking between two names: Bruce Williams and John Athayde. This book is a rare opportunity to look into the minds of two of the leading experts on an area that receives far too little attention. Read, apply, and reread.
➤ Chad Fowler, VP Engineering, LivingSocial

Finally! An authoritative and up-to-date guide to everything view-related in Rails 3. If you’re stabbing in the dark when putting together your Rails apps’ views, The Rails View provides a big confidence boost and shows how to get things done the right way.
➤ Peter Cooper, Editor, Ruby Inside and Ruby Weekly

The Rails view layer has always been a morass, but this book reins it in with details of how to build views as software, not just as markup. This book represents the wisdom gained from years’ worth of building maintainable interfaces by two of the best and brightest minds in our business. I have been writing Ruby code for over a decade and Rails code since its inception, and out of all the Ruby books I’ve read, I value this one the most.

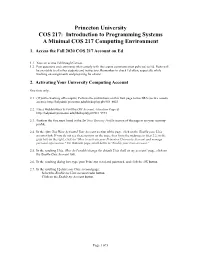

Princeton University COS 217: Introduction to Programming Systems. A Minimal COS 217 Computing Environment

1. Access the Fall 2020 COS 217 Account on Ed
1.1. You can access Ed through Canvas.
1.2. Post questions and comments (that comply with the course communication policies) to Ed. Posts will be available to all other students and instructors. Remember to check Ed often, especially while working on assignments and preparing for exams.

2. Activating Your University Computing Account
One time only…
2.1. (If you're working off-campus) Perform the instructions on this web page to use SRA (secure remote access): http://helpdesk.princeton.edu/kb/display.plx?ID=6023
2.2. Use a Web browser to visit the OIT Account Activation Page at http://helpdesk.princeton.edu/kb/display.plx?ID=9973
2.3. Perform the five steps listed in the Set Your Security Profile section of the page to set your security profile.
2.4. In the After You Have Activated Your Account section of the page, click on the Enable your Unix account link. If you do not see these options on the page, then from the webpage in Step 2.2, in the gray box on the right, click on "How to activate your Princeton University Account and manage personal information." On that new page, scroll down to "Enable your Unix account."
2.5. In the resulting Unix: How do I enable/change the default Unix shell on my account? page, click on the Enable Unix Account link.
2.6. In the resulting dialog box, type your Princeton netid and password, and click the OK button.