Multiple Entity Reconciliation

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Sydney Program Guide

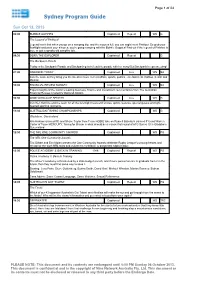

Page 1 of 24 Sydney Program Guide Sun Oct 13, 2013 06:00 BUBBLE GUPPIES Captioned Repeat WS G The Legend of Pinkfoot! Legend has it that when you go on a camping trip, and the moon is full, you just might meet Pinkfoot. So grab your flashlight and pack your s’mores; you’re going camping with the Bubble Guppies! Find out if the legend of Pinkfoot is true, or just a spooky old campfire tale. 06:30 DORA THE EXPLORER Captioned Repeat G The Backpack Parade Today is the Backpack Parade and Backpack gets to lead the parade with her song! But Backpack keeps sneezing! 07:00 WEEKEND TODAY Captioned Live WS NA Join the team as they bring you the latest in news, current affairs, sports, politics, entertainment, fashion, health and lifestyle. 10:00 FINANCIAL REVIEW SUNDAY Captioned Live WS NA Expert insights of the nation’s leading business, finance and investment commentators from The Australian Financial Review, hosted by Deborah Knight. 10:30 WIDE WORLD OF SPORTS Captioned Live WS G Join Ken Sutcliffe and the team for all the overnight news and scores, sports features, special guests and light- hearted sporting moments. 11:30 AUSTRALIAN FISHING CHAMPIONSHIPS Captioned WS G Gladstone, Queensland Kris Hickson (ranked #3) and Shane Taylor from Team HOBIE take on Russell Babekuhl (ranked #1) and Warren Carter of Team MERCURY, fishing for Bream in what should be a classic first round of AFC Series 10 in Gladstone, Queensland 12:00 THE NRL ONE COMMUNITY AWARDS Captioned WS PG The NRL One Community Awards Tim Gilbert and Erin Molan present the One Community Awards celebrate Rugby League’s unsung heroes and recognise the work NRL stars and volunteers contribute to grassroots rugby league. -

Lavalantula Full Movie in Italian Free Download Hd 720P

Lavalantula 1 / 4 La prima cosa che viene in mente quando vedi una creatura con grandi ragni di lava e Steve Guttenberg è ovviamente B-movie. E proprio così, ma va detto che il 2015 & quot; Lavalantula & quot; è in realtà una delle migliori caratteristiche delle creature là fuori e uno dei più divertenti film di ragno da molto tempo. Certo, il concetto è inverosimile. Ma, ehi, un terremoto colpisce Los Angeles, scatenando screpolature e inizia l'attività vulcanica, e in mezzo al caos sputa ragni giganti che si scaricano dalle profondità della terra per devastare Los Angeles. Certo, non c'è più nessun concetto generico e B- movie di questo. Ma aspetta, & quot; Lavalantula & quot; è molto, molto divertente ed è un orologio divertente. Ho visto molte caratteristiche di una creatura, la maggior parte delle quali ha effetti speciali discutibili o risibili. Tuttavia, va detto che gli effetti in & quot; Lavalantula & quot; erano in realtà sorprendentemente buoni. I ragni sembravano abbastanza belli e realistici, oltre che realistici come possono essere i giganteschi ragni che respirano la lava. E questa è una delle cose più importanti che ha reso il film guardabile e godibile. Steve Guttenberg in realtà mi ha sorpreso con la sua interpretazione in questo film, visto che è la prima volta che lo vedo in un ruolo quello non era solamente basato su elementi di commedia.E per gli appassionati di cinema di lunga data, quindi era ovviamente un piacere avere & quot; Police Academy & quot; le star Michael Winslow, Leslie Easterbrook e Marion Ramsey si aggiungono a Steve Guttenberg. -

Barnett Responds ·Positively to Evaluation

l'Jal'cb 20, 1986 University of Missouri· St. Louis Issue, 541 .Barnett Responds ·Positively To Evaluation Speaking specifically about 21st century. " I won't be on campus that much Steven Brawley Barnett said that by June 1 she pus philosophy provides a ' managing editor will be on board. philosophical overview that helps UMSL, Barnett said this multicam " I believe this campus can between now and then because'I still "Giving your presence give peo people have a clear perception of pus theme will be benefiMI. become a role model for what other have things to finish in New York" Chancellor-elect Marguerite ple an indication you really are com what it is the university is doing," "I think this campus is poised to urban universities should be doing," she said. ' Ross Barnett responded optimis ming and that your enthusiasm she said. become a crucial part of the she said. tically to the recommendations hasn't diminished," she said. She believes that this will also economic development in St. She said that the UMSL campus ~made to evaluate the UM system. Barnett said the multi campus carryover into her role as Louis." has' the talented faculty that can She will return to St. Louis on At the March Board of Curators theme of the report su bmitted to the chancellor. She also added that the campus make this possible. April 17, to partiCipate in the Chan meeting on campus, Barnett made curators, by the Committee to "It reinforces the efforts of the can become a" good neighbor" to the Between now and June 1, Barnett cellor's Report to the Community her first official appearance at Improve the University of Missouri chancellors and the president in public school system and that it can said she will continue to read piles being given by interim Chancellor lUMSL since it was announced she was very favol'able. -

Eastern Progress 1985-1986 Eastern Progress

Eastern Kentucky University Encompass Eastern Progress 1985-1986 Eastern Progress 3-13-1986 Eastern Progress - 13 Mar 1986 Eastern Kentucky University Follow this and additional works at: http://encompass.eku.edu/progress_1985-86 Recommended Citation Eastern Kentucky University, "Eastern Progress - 13 Mar 1986" (1986). Eastern Progress 1985-1986. Paper 24. http://encompass.eku.edu/progress_1985-86/24 This News Article is brought to you for free and open access by the Eastern Progress at Encompass. It has been accepted for inclusion in Eastern Progress 1985-1986 by an authorized administrator of Encompass. For more information, please contact [email protected]. Vol. 64/No. 24 Laboratory Publication of the Deportment of Mots Communication* March 13. 1986 Eastern Kentucky University, Richmond, Ky. 40475 Investigation to examine student death By Alan White ding to Ron Harrell, director of Editor public information. An investigation is continuing in- Harrell said Dr. Skip Daugherty, to the death of a university student director of Student Activities and who died early Saturday after he at- Organizations, and Troy Johnson, tended a function at the Sigma assistant director, are conducting Alpha Kpsilon fraternity house. the review. Michael Jose Dailey. 19. who was Dailey, a graduate of Erlanger taken to Pattie A. Clay Hospital by Lloyd High School and a sophomore members of the SAE fraternity transfer student from Northern after he became ill. was pronounc- Kentucky University, was a ed dead at 1 a.m. Saturday by marketing major. Madison County Coroner Embry Dailey bad participated in soccer Curry. and tennis n high school. He was According to Samuel Dailey, the also in toe marching band. -

Lottery Pays Off ^ F M T S T » FOOTHILL COLLEGE by SHELLEY SIEGEL Than Allotted for School Years Yqtm MMO& a Wise CHOICE* the Foothill/De Anza Com 1978-1984 Combined

Volume 28, Number 18 Los Altos Hills, CA 94022 March 21, 1986 The Foothill College Lottery pays off ^ f m t s t » FOOTHILL COLLEGE By SHELLEY SIEGEL than allotted for school years YQtM MMO& A Wise CHOICE* The Foothill/De Anza Com 1978-1984 combined. Between munity College District has 1984-1986 there has been a announced the receipt of a gradual increase in funds for whopping $1,147,845.04 check equipment such as engineering deposited to its general budget. devices and musical and athletic The check, which arrived on equipment. Feb. 7, is the first installment of Clements added that the lot a series of payments made to tery money is basically up for public schools from the Cali grabs for instructional purposes fornia State Lottery. It was or short-term projects. The the seventh largest payment to school plans to hire full-time the 70 community college dis tutors and aides, to upgrade tricts in the state. Originally, the “Writing Across the Curricu the district had expected to re lum” program, continue grounds ceive only $700,075. maintenance, and purchase any The payoff is based on resi new equipment on Campus that dent and non-resident ADA is needed. Use of the money will (average daily attendance). Ac depend on the district’s priori cording to Mary Heeney, Direc ties. “Short-term projects as tor of Business Services, the opposed to on-going projects $2,200 alotted by the state for will be the main use of lottery each full-time student per year funds because it is nearly impos will remain the same. -

PRODUCTION GUIDE by Linda Gorman and John Timmins

• • PRODUCTION GUIDE by Linda Gorman and John Timmins STREET LEGAL ter Dan Sissons asst.props. Robert Stelmach set dec. Lesley Beale const.coord. Stuart BCTV Series of 6 x l·hour episodes shooting May to Ennis d.o.p. Bob Ennis camp.op. Curt Petersen he following is a list of films in production (actually before c ~mera s ) (604) 420-2288 October 1986. To be telecast in the 1986-87 1st asst.cam . Joel Ranson 2nd asst.cam. and In negotiation In Canada. Needless to say, the films which are ZIGZAG season . Pi lot was titled Shellgame. exec.p. Gary Kennedy sd.mix. Martin Fossum boom Maryke McEwen p. Bonita Siegel, Duncan John Harling gaffer Barry Reid best boy Fred J. still in the project stage are subject to changes. A thi rd category,ln TV series. On e-half hours of comed y magazine T Lamb d. Alan Erlich , Mort Ran sen , Randy Brad- Boyd gennle op. John Helme key grip David style for pre-teens. Shooting sporadically from Pre-Production, will be used to indicate films which are in active pre-pro shaw sc. Williarn Deverell , Marc Strange, Ian Humphreys best boy Ben Rusi head ward. duction, having set a date for the beginning of princi pal photography and May to September. p.ld. Ross Sullivan sc. Ross Sullivan , Bill Reiter, Erick Dacommun. Adams, larry Gaynor. Ian Sutherland, Judith Jane Grose ward.asst. Christina McQuarrie, being engaged in casting and crewing. Films are li sted by the name of the Thompson , Don Tru ckey d.o.p. -

A Wchm of Flacvch* in a Low

2H - MANCHFSTKR HKKAU). Wednesday. Nov 21. 19^3^ MANCHESTER FOCUS SPORTS WEATHER Guard at llling Here’s the score Vvhalers explode Fair, cold tonight; rakes in plaudits on holiday music to rip Penguins milder Saturday ... page 3 ... page 9 ;.. page 17 ... page 2 Manchester, Conn. — A City of Village Charm Friday. Nov. 23. 1984 — Single copy: 25<P New^alks planned in January By Ira Allen United Press International • •;. V . f , SANTA BAHHAHA. Calif With the advenl of new ' ' • ' /-' •'*.' t ^ arms talks, a key presidential aide said today there was promise of a sustained IVS. Soviet dialogue after the near Cold Wai ini|)asse of President Reagan's first four years in the White House. Robert McF'arlane, Reagan's natilinal seeurity adviser, said "the pace of the dialogue has picked up considerably" since the Sept 28 meeting between Reagan and Soviet Foreign Minister Andrei (iro . niyko. and that there is ".some proini.se " tlie pace can be sustained Appearing on Ihe CBS "Morning News " program. McFarlane said, "Tlie meeting w ith tbi' president and foreign minister Gromyko provided a eeitain clearing of Ihe air and sipce that lime ... Hie pace of Ihe dialogue has picked up eonsiderably and we liope we ('an sustain it in private channels and there is some promise ol that,” he said. McFarlane annouiu'ed Thursday that Sei relary of State George Shultz and firomyko would meet in Geneva. Switzerland, ,lan. 7 and 8 to discuss an agenda for future aniis ('onlrol talks "with Ihe objective of reac'liing mulnally a('('eplable agree ments on tbe whole range ol (pies'lions coni'erning nuclear and outer space arms.'.' Although Moscow said lhe.se would be new talks and not a resumption of Ihe sli'ategi(' "irms and intermediate-range missile negotiations they walked . -

Newsletter 01/16 DIGITAL EDITION Nr

ISSN 1610-2606 ISSN 1610-2606 newsletter 01/16 DIGITAL EDITION Nr. 353 - Januar 2016 Michael J. Fox Christopher Lloyd LASER HOTLINE - Inh. Dipl.-Ing. (FH) Wolfram Hannemann, MBKS - Talstr. 11 - 70825 K o r n t a l Fon: 0711-832188 - Fax: 0711-8380518 - E-Mail: [email protected] - Web: www.laserhotline.de Newsletter 01/16 (Nr. 353) Januar 2016 editorial Hallo Laserdisc- und DVD-Fans, Als Bonusmaterial gibt es den Trailer Blu-ray überhaupt am Markt durchsetzt liebe Filmfreunde! zum Film sowie die Ansprache des Re- bleibt abzuwarten. Angesichts der rela- gisseurs anlässlich der Premiere in der tiv hohen Heimkino-Investitionen Herzlich willkommen zu unserem ersten Karlsruher Schauburg. Zu haben ist die könnte der 4K Blu-ray ein bescheide- Newsletter im Jahre 2016 – der eigent- schön verpackte BD-R versandkosten- nes Nischen-Dasein blühen. Immerhin lich schon im Dezember letzten Jahres frei direkt bei uns zum Preis von EUR gibt es zumindest in den USA breite hätte erscheinen sollen. Dieses Mal 10,-. Also nichts wie ran! Unterstützung durch einige Holly- kam uns leider unsere eigene Blu-ray in wood-Majors, so dass bereits 2016 mit die Quere: THINK BIG hat alle geplan- Nach dieser guten Nachricht müssen einer relativ hohen Anzahl von Veröf- ten Termine über den Haufen geworfen. wir leider auch noch eine schlechte fentlichungen gerechnet werden darf. Das Mastering der Blu-ray hatte sich Nachricht loswerden. Wieder einmal Wann der Startschuss in Deutschland etwas schwieriger gestaltet als geplant hat DHL seine Kosten nach oben korri- fällt ist noch ungewiss. Aber keine und beinhaltete zudem sogenannte giert, so dass wir unsere Versands- Bange: wir halten Sie natürlich mit un- “Last Minute Changes”, die uns ziem- pesen anpassen mussten. -

Auf Dvd & Blu-Ray

AUF DVD & BLU-RAY EINE SCHWARZE COMIC-GESCHICHTE EINES DAS WAR BADASS! WEIHNACHTS- BLUTBADES... ICH LIEBE ES. TWITCHFILM.COM AINTITCOOLNEWS.COM NEUER FESTLICHER HORROR IST GEBOREN! FRIGHTFEST ELFEN-ZOMBIES, EIN DÄMONISCHER KRAMPUS... UND WILLIAM SHATNER... ALLES WAS EIN GESCHENK UNTER DEM BAUM BRAUCHT. HOLLYWOOD REPORTER ...GRUSELIG UND BLUTIGER SPASS. HADDONFIELD HORRROR Ꭿ XMAS Story (Copperheart) Productions Incorporated. aCHS130x210.indd 1 11.11.15 13:44 VORWORT Für alle Gegner der weihnachtlich verordneten Besinnlichkeit, für alle Vermeider adventsbedingter Familienzusammenkünfte, für alle Hasser des Shopping-Terrors oder einfach als adrenalinhaltiges Mittel gegen den Winterblues präsentiert Fantasy Filmfest erstmalig die WHITE NIGHTS. Dabei freuen wir uns, euch wie angekündigt ein paar Highlights aus der internationalen herbstlichen Festivalsaison vorstellen zu können. Fast alle Filme feierten Premiere auf dem renommierten Toronto Filmfest, gewannen Preise beim Fantastic Fest Austin oder in San Sebastián. Ein bisschen konnten wir in der Oldie-Kiste stöbern und Schauspiel-Veteranen wie Kurt Russel (BONE TOMAHAWK) und Steve Guttenberg (LAVALANTULA) zu neuem Glanz verhelfen, Seite an Seite mit angesagten Jungstars wie Emma Roberts und Kiernan Shipka (FEBRUARY). Und dank dem äußerst verstörenden Horrortrip BASKIN ist die Türkei als Produktionsland erstmalig auf einer Fantasy Filmfest-Veranstaltung vertreten. Doch auch wenn Grauen, Blut und Schocks vordergründig die Oberhand zu haben scheinen, so gilt es auch wie immer poetisch visionäre Geschichten und Stile zu entdecken. Lasst euch also ein auf eine Entdeckungsreise der besonderen Art mit lästigem Spinnengetier, beängstigenden Höllenritts, Infizierten und Serien- killern, mit verhängnisvollen Hochzeitsfeiern, gespensterhaft verlassenen Internaten, dunklen Wäldern und teuflischen Winden. In diesem Sinn ein Frohes Fest! 
Euer Fantasy Filmfest-Team » For everyone who fears the festive spirit, dreads the family meetings and hates all the Christmas shopping. -

The BG News March 21, 1986

Bowling Green State University ScholarWorks@BGSU BG News (Student Newspaper) University Publications 3-21-1986 The BG News March 21, 1986 Bowling Green State University Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation Bowling Green State University, "The BG News March 21, 1986" (1986). BG News (Student Newspaper). 4507. https://scholarworks.bgsu.edu/bg-news/4507 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. Tumblers to defend MAC title, see page 4 THE BG NEWS Vol. 68 Issue 100 Bowling Green, Ohio Friday, March 21,1986 House defeats contra aid, 222-210 WASHINGTON (AP) - A sharply di- us victory," he said. House Speaker request last year, but later - after Ni- their doorstep." he said. "And one day O'Neill: "Today, you're wrong, you're vided House, on a 222-210 vote yester- Thomas O'Neill, D-Mass., who ted the caraguan leader Daniel Ortega paid a in the not too distant future that aware- wrong, you're wrong... A month from day, defeated President Reagan's plan opposition, promised an April 15 vote in visit to Moscow - approved $27 million ness will come home to the House of now will be too late because the com- to send $100 million in military aid to the House. in non-lethal aid. -

Police Academy 2 Cast

Police academy 2 cast Police Academy 2: Their First Assignment () cast and crew credits, including actors, actresses, directors, writers and more. Full Cast & Crew: Police Academy 2: Their First Assignment (). Cast (46). Steve Guttenberg. Carey Mahoney. Bubba Smith. Hightower. David Graf. Meet the cast and learn more about the stars of Police Academy 2: Their First Assignment with exclusive news, pictures, videos and more at films Full Cast of Police Academy 2: Their First Assignment Actors/Actresses Julie Brown Clueless, A Goofy Movie, Police Academy 2: Their First Assignment. 12 Lucy Lee Flippin is listed (or ranked) 12 on the list Full Cast of Tim Kazurinsky Police Academy 2: Their First Assignment, Police Academy 3: Back in Training. Read more >>> ?ges&keyword=police+academy+2+cast Police academy 2 cast Police academy 2 cast later confides to Mahoney. Police Academy 2: Their First Assignment () The first of six sequels to Police Academy, this sequel is even dumber Show More Cast. The international success of the first movie in the Police Academy series meant that new director Jerry Paris wasn't going to mess with a winning formula. Take a look below to find out where the cast of Police Academy is now. But here's the thing: Season 2 is just around the corner and you don't. See the full list of Police Academy 2: Their First Assignment cast and crew including cast. crew. David Graf as Officer Eugene Tackleberry. Bruce Mahler as. · Best Of Police Academy 4 - Duration: Hummerinloukku , views · Michael Winslow. Police Academy 2: Their First Assignment - Cast & Crew. -

Press Notes for the Short Animated Film Called Herman, the Legal Labrador

These are the press notes for the short animated film called Herman, The Legal Labrador Cute doggie. Loyal pet. World class defence lawyer. NAKEDFELLA PRODUCTIONS, with assistance from NEXT WAVE, presents “HERMAN, THE LEGAL LABRADOR” written, directed & animated by DAVID BLUMENSTEIN starring SHAUN MICALLEF ADAM WAJNBERG KATRINA MATHERS LOC HUU NGHE BRIAN MILLERSHIP SANTO CILAURO ADRIAN CALEAR DUFF THOMAS PULLAR and JIM KALOGIRATOS as “SCHIFF, THE LEGAL BULLDOG” music by DAN SULLIVAN DAVID BLUMENSTEIN NICK IVES JESSE SAMULENOK BEN SULLIVAN LENNY VOLKOV producer JEREMY PARKER production manager JACOB ZHIVOV creative consultant ADAM WAJNBERG unit publicist TIM WILSON post-production supervisor CHRIS DEA executive producer JEREMY PARKER Herman, The Legal Labrador Director’s Statement I have formulated an idea for a film which would stun the world, and which I will one day direct: POLICE ACADEMY VIII: COP KILLA Carey Mahoney (Steve Guttenberg)’s life is in a slump. His career in the Metropolitan Police Force has stalled, and in his mid-forties he remains a sergeant without hope of advancement. His cocksure strut and wacky antics have grown tired, his romantic dalliances are becoming less frequent... and he has been saddled with the unenviable task of playing nursemaid to the wholly senile Commandant Lassard (George Gaynes), retired, who lives with Mahoney in his small apartment, and whose every trip to the toilet becomes an adventure in mopping. It's just one more blow when Mahoney receives word that his former brother-in-arms, Tackleberry, has been killed in the line of duty. Unable to make it to the funeral, Mahoney commemorates it in his own way, at home, sobbing into a bottle of Jack.