A Formal Study of Free Software Distributions Jaap Boender



Recommended publications


The GNOME Census: Who Writes GNOME?

The GNOME Census: Who writes GNOME?
Dave Neary & Vanessa David, Neary Consulting
© Neary Consulting 2010: Some rights reserved

Table of Contents
Introduction
  What is GNOME?
  Project governance
  Why survey GNOME?
  Scope and methodology
  Tools and Observations on Data Quality
Results and analysis
  GNOME Project size
  The Long Tail
  Effects of commercialisation
  Who does the work?
  Who maintains GNOME?
Conclusions
References
Appendix 1: Modules included in survey

Introduction
What -

Misc Thesisdb Bythesissuperv

Honors Theses 2006 to August 2020

These records are for reference only and should not be used for an official record or count by major or thesis advisor. Contact the Honors office for official records.

| Student | Honors Year of Graduation | Student's Honors Major | Thesis Title (with link to Digital Commons where available) | Thesis Supervisor | Thesis Supervisor's Department |
|---|---|---|---|---|---|
| Matthew Cesca | 2010 | Accounting | Accounting for Intangible Assets: Analysis of Policy Changes and Current Reporting | Biggs, Stanley | Accounting |
| Rebecca Curtis | 2014 | Accounting | Breaking the Barrier- An Examination into the Current State of Professional Skepticism | Biggs, Stanley | Accounting |
| Helen Gunn | 2011 | Accounting | Implementation of IFRS Worldwide: Lessons Learned and Strategies for Success | Biggs, Stanley | Accounting |
| Jonathan Lukianuk | 2012 | Accounting | The Impact of Disallowing the LIFO Inventory Method | Biggs, Stanley | Accounting |
| Charles Price | 2019 | Accounting | The Impact of Blockchain Technology on the Audit Process | Brown, Stephen | Accounting |
| Rebecca Harms | 2013 | Accounting | An Examination of Rollforward Differences in Tax Reserves | Dunbar, Amy | Accounting |
| Anne Jensen | 2013 | Accounting | An Examination of Microsoft and Hewlett Packard Tax Avoidance Strategies and Related Financial Statement Disclosures | Dunbar, Amy | Accounting |
| Audrey Manning | 2012 | Accounting | Measuring Tax Aggressiveness after FIN 48: The Effect of Multinational Status, Multinational Size, and Disclosures | Dunbar, Amy | Accounting |
| Chelsey Nalaboff | 2015 | Accounting | Tax Inversions: Comparing Corporate Characteristics of Inverted Firms | Dunbar, Amy | Accounting |
| Jeffrey Peterson | 2018 | Accounting | The Tax Implications of Owning a Professional Sports Franchise | Dunbar, Amy | Accounting |
| Brittany Rogan | 2015 | Accounting | A Creative Fix: The Persistent Inversion Problem | Dunbar, Amy | Accounting |
| Szwakob Alexander | 2015D | Accounting | Foreign Account Tax Compliance Act: The Most Revolutionary Piece of Tax Legislation Since the Introduction of the Income Tax | Dunbar, Amy | Accounting |
| Prasant Venimadhavan | 2011 | Accounting | A Proposal Against Book-Tax Conformity in the U.S. | | |

-

Build Your Own PrimTux-Lubuntu-18.04

BUILD YOUR OWN PRIMTUX-LUBUNTU-18.04

PREREQUISITES:
• red: to be typed in the terminal of the chrooted system (the system being built)
• green: to be typed in the terminal of the host system
• Replace USER with your user name and « Répertoire_de_l’iso_décompressée_de_Lubuntu-18.04 » (the directory of the extracted Lubuntu 18.04 ISO) with your own.
• All changes made from the host file manager are done as root.
• Tested on Ubuntu 18.04 and 18.10

ALLOW HALF A DAY:

1. Setting up the environment:
• In the host environment: sudo apt-get install squashfs-tools schroot genisoimage
• Download and then extract the Lubuntu 18.04 ISO
• Create a squashfs directory in /home/USER
• Mount filesystem.squashfs: sudo mount -t squashfs -o loop filesystem.squashfs /media/USER
• Copy the mounted filesystem.squashfs into /squashfs: sudo cp -av /media/USER/. squashfs
• Copy /run/systemd/resolve/stub-resolv.conf into the /run/systemd/resolve/ of the /home/USER/squashfs directory

2. Adding the source repositories:
sudo chroot /home/USER/squashfs
apt-get update
add-apt-repository ppa:jclic/master
add-apt-repository ppa:webupd8team/java
wget -O – https://depot.primtux.fr/repo/debs/key/PrimTux.gpg.key | apt-key add –
wget -q – https://depot.primtux.fr/repo/debs/key/PrimTux.gpg.key | apt-key add –
• Delete PrimTux.gpg.key and -- from the root of the /home/USER/squashfs directory using the file manager
• Create the empty document primtux.list in /etc/apt/sources.list.d and write in it:
deb https://depot.primtux.fr/repo/debs PrimTux2-Stretch64 main
deb https://depot.primtux.fr/repo/debs PrimTux4-amd64 main
apt-get update

3. -

2020 Spring Product Guide Final-Min

2020 SPRING INTEGRATOR PRODUCT GUIDE

COME SWIM WITH THE BIG FISH
November 9 – 11, 2020 | Cleveland, OH | Huntington Convention Center

Take control of your business success. Gain insights from the integration industry elite this November at Total Tech Summit. This uniquely powerful by invitation-only event drives extraordinary progress in the integration industry. Total Tech Summit is where the industry elite gather. Total Tech Summit helps to grow and improve your company through:
› Educational, deep-dive sessions from the industry elite
› 1-on-1 and boardroom meetings with new vendors that can take your offerings from better to best
› Networking at every turn. Connect with both integrators in your industry and beyond to help give you fresh ideas and business strategies
› Complimentary flights, hotel, registration, and meals to help you focus on what matters most

“Gathering of professionals that are non-competition to collaborate on ideas to grow business in profits, procurement, strategies, staffing and general business practices is a wealth of knowledge at the cost of a couple days’ time and to also have the added benefit of meeting one on one with manufacturers that you may not have reached out to.”
- John Rudolph, Vice President, PCD

To learn more and to apply to join the best in the industry, please visit www.totaltechsummit.com

Table of Contents
Audio
Control/Networking/Energy Management
Home Enhancements (central vacuum, wire and cable, tools, testers, furniture)
Security
Video
-

Monitoring File Events

MONITORING FILE EVENTS

Some applications need to be able to monitor files or directories in order to determine whether events have occurred for the monitored objects. For example, a graphical file manager needs to be able to determine when files are added or removed from the directory that is currently being displayed, or a daemon may want to monitor its configuration file in order to know if the file has been changed.

Starting with kernel 2.6.13, Linux provides the inotify mechanism, which allows an application to monitor file events. This chapter describes the use of inotify. The inotify mechanism replaces an older mechanism, dnotify, which provided a subset of the functionality of inotify. We describe dnotify briefly at the end of this chapter, focusing on why inotify is better.

The inotify and dnotify mechanisms are Linux-specific. (A few other systems provide similar mechanisms. For example, the BSDs provide the kqueue API.)

A few libraries provide an API that is more abstract and portable than inotify and dnotify. The use of these libraries may be preferable for some applications. Some of these libraries employ inotify or dnotify, on systems where they are available. Two such libraries are FAM (File Alteration Monitor, http://oss.sgi.com/projects/fam/) and Gamin (http://www.gnome.org/~veillard/gamin/).

19.1 Overview

The key steps in the use of the inotify API are as follows:
1. The application uses inotify_init() to create an inotify instance. This system call returns a file descriptor that is used to refer to the inotify instance in later operations. -
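The excerpt above stops after inotify_init(). As a minimal sketch of how that first step fits into a complete round trip, the following Python code calls the C library through ctypes. It is not the chapter's own example: the helper name watch_once, the choice of IN_CREATE and IN_DELETE events, and the 4096-byte read buffer are assumptions made for illustration, and the follow-on calls (inotify_add_watch() and a read() on the descriptor) come from the inotify(7) manual page rather than from the text shown here. It runs only on Linux.

```python
import ctypes
import ctypes.util
import os
import struct

# Event bits as defined in <sys/inotify.h>.
IN_CREATE = 0x00000100
IN_DELETE = 0x00000200

# Load the C library; fall back to the usual glibc name if lookup fails.
_libc = ctypes.CDLL(ctypes.util.find_library("c") or "libc.so.6", use_errno=True)
_libc.inotify_add_watch.argtypes = [ctypes.c_int, ctypes.c_char_p, ctypes.c_uint32]

# Fixed-size part of struct inotify_event: int wd; uint32_t mask, cookie, len.
_HEADER = struct.Struct("iIII")


def _check(result):
    """Raise OSError from errno when a libc call returns a negative value."""
    if result < 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))
    return result


def watch_once(directory, action):
    """Hypothetical helper: watch `directory`, run `action`, return one batch
    of (mask, name) events. Blocks until at least one event is available."""
    # Step 1 from the text: create an inotify instance and get its descriptor.
    fd = _check(_libc.inotify_init())
    try:
        # Register interest in files being created in or deleted from the directory.
        _check(_libc.inotify_add_watch(fd, os.fsencode(directory), IN_CREATE | IN_DELETE))
        action()
        # Reading the descriptor returns one or more packed inotify_event records.
        data = os.read(fd, 4096)
    finally:
        os.close(fd)

    events = []
    offset = 0
    while offset < len(data):
        _wd, mask, _cookie, name_len = _HEADER.unpack_from(data, offset)
        offset += _HEADER.size
        # The name field is NUL-padded to name_len bytes.
        name = data[offset:offset + name_len].rstrip(b"\0").decode()
        offset += name_len
        events.append((mask, name))
    return events
```

A caller would pass a directory and a callable that touches it, for example `watch_once("/tmp/demo", lambda: open("/tmp/demo/a.txt", "w").close())`, and then test each returned mask against IN_CREATE or IN_DELETE. A long-running monitor would keep the descriptor open and read from it in a loop instead of closing it after one batch.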

Foot Prints Feel the Freedom of Fedora!

The Fedora Project: Foot Prints
Feel The Freedom of Fedora!
Rahul Sundaram
Sundaram@fedoraproject.org
Free (as in speech and beer) Advice 101: Keep it interactive!

Credit: Based on previous Fedora presentations from Red Hat and various community members. Using the age old wisdom and Indian, Free software tradition of standing on the shoulders of giants.

Who the heck is Rahul? (my favorite part of this presentation)
✔ Self elected Fedora project monkey and noisemaker
✔ Fedora Project Board Member
✔ Fedora Ambassadors steering committee member.
✔ Fedora Ambassador for India.
✔ Editor for Fedora weekly reports.
✔ Fedora Websites, Documentation and Bug Triaging projects volunteer and miscellaneous few grunt work.

Agenda
● Red Hat Linux to Fedora & RHEL - Why?
● What is Fedora?
● What is the Fedora Project?
● Who is behind the Fedora Project?
● Primary Principles.
● What are the Fedora projects?
● Features, Future – Fedora Core 5 ...

The beginning: Red Hat Linux 1994-2003
● Released about every 6 months
● More stable “.2” releases about every 18 months
● Rapid innovation
● Problems with retail channel sales model
● Impossible to support long-term
● Community Participation:
● Upstream Projects
● Beta Team / Bug Reporting

The big split: Fedora and RHEL
Red Hat had two separate, irreconcilable goals:
● To innovate rapidly. To provide stability for the long-term
● Red Hat Enterprise Linux (RHEL)
● Stable and supported for 7 years plus. A platform for 3rd party standardization
● Free as in speech
● Fedora Project / Fedora Core
● Rapid releases of Fedora Core, every 6 months
● Space to innovate. Fedora Core in the tradition of Red Hat Linux (“FC1 == RHL10”) Free as in speech, free as in beer, free as in community support
● Built and sponsored by Red Hat
● ...with increased community contributions. -

Greg Kroah-Hartman github.com/gregkh/presentation-kdbus

kdbus: IPC for the modern world
Greg Kroah-Hartman
github.com/gregkh/presentation-kdbus

Interprocess Communication
● signal
● synchronization
● communication

[Figure: overview of IPC facilities, from The Linux Programming Interface, Michael Kerrisk, page 878]
signal: standard signals, realtime
synchronization: POSIX semaphore (named, unnamed), futex, eventfd, System V semaphore, “record” lock, file lock, threads (mutex, condition variables, barrier, read/write lock)
communication, data transfer: pipe, FIFO, stream socket, pseudoterminal; message: POSIX message queue, System V message queue
communication, shared memory: System V shared memory, POSIX shared memory, memory mapping (anonymous mapping, mapped file)

Android
● ashmem
● pmem
● binder

ashmem
● POSIX shared memory for the lazy
● Uses virtual memory
● Can discard segments under pressure
● Unknown future

pmem
● shares memory between kernel and user
● uses physically contiguous memory
● GPUs
● Unknown future

binder
● IPC bus for Android system
● Like D-Bus, but “different”
● Came from system without SysV types
● Works on object / message level
● Needs large userspace library
● NEVER use outside an Android system

binder
● File descriptor passing
● Used for Intents and application separation
● Good for small messages
● Not for streams of data
● NEVER use outside an Android system

QNX message passing
● Tight coupling to microkernel
● Send message and control, to another process
● Used to build complex messages -

Indicators for Missing Maintainership in Collaborative Open Source Projects

TECHNISCHE UNIVERSITÄT CAROLO-WILHELMINA ZU BRAUNSCHWEIG
Studienarbeit
Indicators for Missing Maintainership in Collaborative Open Source Projects
Andre Klapper
February 04, 2013
Institute of Software Engineering and Automotive Informatics
Prof. Dr.-Ing. Ina Schaefer
Supervisor: Michael Dukaczewski

Affidavit
Hereby I, Andre Klapper, declare that I wrote the present thesis without any assistance from third parties and without any sources other than those indicated in the thesis itself.
Braunschweig / Prague, February 04, 2013

Abstract
The thesis provides an attempt to use freely accessible metadata in order to identify missing maintainership in free and open source software projects by querying various data sources and rating the gathered information. GNOME and Apache are used as case studies.

License
This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license.

Keywords
Maintenance, Activity, Open Source, Free Software, Metrics, Metadata, DOAP

Contents
List of Tables
1 Introduction
1.1 Problem and Motivation
1.2 Objective
1.3 Outline
2 Theoretical Background
2.1 Reasons for Inactivity
2.2 Problems Caused by Inactivity
2.3 Ways to Pass Maintainership
3 Data Sources in Projects
3.1 Identification and Accessibility
3.2 Potential Sources and their Exploitability
3.2.1 Code Repositories
3.2.2 Mailing Lists
3.2.3 IRC Chat
3.2.4 Wikis
3.2.5 Issue Tracking Systems
3.2.6 Forums
3.2.7 Releases
3.2.8 Patch Review
3.2.9 Social Media
3.2.10 Other Sources
-

Gnome-Session Gzip Less 2.14888718342 Lzop Lib64gpgme-Devel

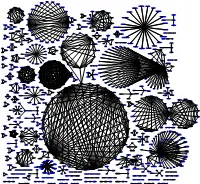

[Figure: weighted package dependency graph. Node labels include lib64xcb-* and lib64boost_*1.42.0 libraries, python-twisted and related Python packages, policykit, consolekit, hal, hal-info, pciutils, usbutils, cryptsetup and acl; edges carry numeric weights ranging from 0 to about 4.7.] -

Performance Tuning Guide

Red Hat Enterprise Linux 7 Performance Tuning Guide
Optimizing subsystem throughput in Red Hat Enterprise Linux 7

Laura Bailey, Red Hat Customer Content Services
Charlie Boyle, Red Hat Customer Content Services
Red Hat Subject Matter Experts
Edited by Milan Navrátil, Red Hat Customer Content Services

Legal Notice
Copyright © 2016 Red Hat, Inc. and others. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Red Hat Enterprise Linux 7 7.8 Release Notes

Red Hat Enterprise Linux 7 7.8 Release Notes
Release Notes for Red Hat Enterprise Linux 7.8
Last Updated: 2021-03-02

Legal Notice
Copyright © 2021 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/ . In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. Node.js ® is an official trademark of Joyent. Red Hat is not formally related to or endorsed by the official Joyent Node.js open source or commercial project.