Walking in the Shadow: a New Perspective on Descent Directions for Constrained Minimization

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Announces: the SHADOW Composed and Conducted By

Announces: THE SHADOW
Composed and Conducted by JERRY GOLDSMITH
Intrada Special Collection Volume ISC 204

Universal Pictures' 1994 The Shadow allowed composer Jerry Goldsmith to put his imprimatur on the darkly gothic phase of the superhero genre that developed in the 1990s. Writing for a large orchestra, Goldsmith created a score that found the perfect mix between heroism and menace and resulted in one of his longest scores. Goldsmith’s main title music introduces many of the score’s core elements: an unnerving pitch bend, played by synthesizers; a grandly powerful brass melody for the Shadow; and a bouncing electronic figure that will become a key rhythmic device throughout the score. Goldsmith’s classic sounding Shadow theme and the score’s large scale orchestrations complemented the film’s period setting and lavish look, while the composer’s trademark electronics helped better position the film for a contemporary audience.

When the original soundtrack for The Shadow was released in 1994, it presented only a fraction of Jerry Goldsmith’s hour-and-twenty-minute score, reducing the composer’s wealth of action material to two cues and barely hinting at the complete score’s range and scope. In particular, Goldsmith’s elegant and haunting love theme—one of his best of the ’90s—plays only briefly at the very end of the album. This premiere presentation of the complete score represents one of the most substantial restorations of a Goldsmith soundtrack, illuminated by the fact that just 30 minutes of the full 85-minute score appeared on the original 1994 soundtrack album.

1 Living Beside the Shadow of Death by Grace Lukach It's Hard to Say

Living Beside The Shadow of Death
By Grace Lukach

It’s hard to say when my Grandpa really began to die. Complications from an elective back surgery in 2001 forced him to spend two hundred non-consecutive days of the next year institutionalized—either in the hospital, nursing home, or in-house rehabilitation center. On three separate occasions he was put on a ventilator and for nearly nineteen of those two hundred days he relied on this machine to live. It seemed as though he were dying, but somehow, after all those months of suffering, he recovered his strength and returned home. He had escaped the clutches of death. And though he never again walked independently and would dream of physical activities as simple as driving, he was able to share monthly meals with old co-workers, attend weekly mass, and watch his children—and their children—grow up for six more years. Because of modern medicine, my Grandpa experienced six more years of being alive.

But then my Grandpa fell and broke his hip. Back in the hospital, he was intubated and extubated and intubated again. A permanent pacemaker was implanted as well as a dialysis port, a tracheotomy, and a feeding tube. He was on and off the ventilator. Death was the enemy and everyone was fighting with every bit of energy they could muster, but nothing could keep him alive this time. After four months in the hospital, my Grandpa finally died.

My Grandma’s journey to death is much clearer. She was a fighter who had regained full mobility after suffering a debilitating stroke that paralyzed her right side at the age of fifty-four.

GAME DEVELOPERS a One-Of-A-Kind Game Concept, an Instantly Recognizable Character, a Clever Phrase—These Are All a Game Developer’s Most Valuable Assets

HOLLYWOOD >> REVIEWS ALIAS MAYA 6 * RTZEN RT/SHADER ISSUE AUGUST 2004 THE LEADING GAME INDUSTRY MAGAZINE

>> SIGGRAPH 2004 SNEAK PEEK: DISCREET 3DS MAX 7
>> DEVELOPER DEFENSE: LEGAL TOOLS TO PROTECT YOUR I.P.
>> FAST RADIOSITY: SPEEDING UP LIGHTMAPS WITH PIXEL SHADERS
POSTMORTEM: THE CINEMATIC EFFECT OF ZOMBIE STUDIOS’ SHADOW OPS: RED MERCURY

CONTENTS AUGUST 2004 VOLUME 11, NUMBER 7

FEATURES

14 COPYRIGHT: THE BIG GUN FOR GAME DEVELOPERS
A one-of-a-kind game concept, an instantly recognizable character, a clever phrase—these are all a game developer’s most valuable assets. To protect such intangible properties from pirates, you’ll need to bring out the big gun—copyright. Here’s some free advice from a lawyer. By S. Gregory Boyd

20 FAST RADIOSITY: USING PIXEL SHADERS
With the latest advances in hardware, GPU, and graphics technology, it’s time to take another look at lightmapping, the divine art of illuminating a digital environment. By Brian Ramage

30 FROM BUNGIE TO WIDELOAD, SEROPIAN’S BEAT GOES ON
A decade ago, Alexander Seropian founded a one-man company called Bungie, the studio that would eventually give us MYTH, ONI, and HALO. Now, after his departure from Bungie, he’s trying to repeat history by starting a new studio: Wideload Games.

POSTMORTEM

34 THE CINEMATIC EFFECT OF ZOMBIE STUDIOS’ SHADOW OPS: RED MERCURY
How do you give a player that vicarious presence in an imaginary environment—that “you-are-there” feeling that a good movie often gives? Zombie’s answer was to adopt many of the standard movie production

Light & Shadows

LIGHT & SHADOWS

SHADOWS

BIG IDEAS
• Shadows are created when light is blocked.
• Shadows change shape and size, depending on the location of the light and the object.

WHAT YOU’LL NEED
• The Shadows worksheet
• Light Blox
• A piece of plain white paper
• A pencil or pen
• A mirror stand

RELATED PRODUCTS
• Light Blox

Very young children think of shadows as actual objects. But by grade school, most kids will understand that a shadow is a phenomenon caused by blocking light. Most, however, will not be able to articulate the relationship between the location of the light and the size and shape of the shadow. This exploration will give them a chance to develop an intuitive sense of light and shadow.

Set up each group of students with one Light Blox with the slit caps removed, and the worksheet for Activity 5: Shadows. Have students complete the worksheets and then hold a classroom conversation that incorporates students’ findings and covers the main discussion points.

You can extend the exploration by inviting students to make predictions about what they think will happen before they explore on their own.

MAIN DISCUSSION POINTS
• A shadow “grows” in the same direction as the light travels. If you point the light from left to right, the shadow appears to the right of the object. If you point the light from right to left, the shadow appears to the left of the object.
• A shadow gets bigger as the light moves further from the object - just like the light got “bigger” as it moved further from the wall in activity 1.

What the Shadows Know

What the Shadows Know: The Crime-Fighting Hero the Shadow and His Haunting of Late-1950s Literature
Erik Mortenson

During the Depression era of the 1930s and the war years of the 1940s, millions of Americans sought escape from the tumultuous times in pulp magazines, comic books, and radio programs. In the face of mob violence, joblessness, war, and social upheaval, masked crusaders provided a much needed source of security where good triumphed over evil and wrongs were made right. Heroes such as Doc Savage, the Flash, Wonder Woman, Green Lantern, Captain America, and Superman were always there to save the day, making the world seem fair and in order. This imaginative world not only was an escape from less cheery realities but also ended up providing nostalgic memories of childhood for many writers of the early Cold War years.

But not all crime fighters presented such an optimistic outlook. The Shadow, who began life in a 1931 pulp magazine but eventually crossed over into radio, was an ambiguous sort of crime fighter. Called “the Shadow” because he moved undetected in these dark spaces, his name provided a hint to his divided character. Although he clearly defended the interests of the average citizen, the Shadow also satisfied the demand for a vigilante justice. His diabolical laughter is perhaps the best sign of his ambiguity. One assumes that it is directed at his adversaries, but its vengeful and spiteful nature strikes fear into victims, as well as victimizers. He was a tour guide to the underworld, providing his fans with a taste of the shady, clandestine lives of the criminals he pursued.

The Invincible Shiwan Khan

THE INVINCIBLE SHIWAN KHAN
Maxwell Grant

Table of Contents
CHAPTER I. SPELL OF THE PAST
CHAPTER II. DEATH'S CHOICE
CHAPTER III. THE MASTER SPEAKS
CHAPTER IV. THREADS TO CRIME
CHAPTER V. FROM SIX TO SEVEN
CHAPTER VI. THE BRONZE KNIFE
CHAPTER VII. THE SECOND SUICIDE
CHAPTER VIII. QUEST OF MISSING MEN
CHAPTER IX. THE LONE TRAIL
CHAPTER X. PATH OF DARKNESS

The Shadow of an Invisible Cathedral

THE SHADOW OF AN INVISIBLE CATHEDRAL

Change how a person conceives of space in his or her mind, I reflected, and you transform how they experience it in the world.

I was in a state of near panic when the phone rang. Broke and unemployed, I was startled to recognize the voice of Berkeley’s architecture department director as she told me that a senior faculty member had died during the final days of summer and the school wanted me to teach her graduate seminar, Theories of Architectural Space. Frightened by the prospect of teaching a class for which I was grossly ill-prepared, yet desperate for work, I quickly reshaped the course in my mind into something I felt qualified to teach. “Sure I can do it,” I told the director after an awkward silence, “no problem.”

My life at that point was falling apart. I had just completed my Ph.D. in architectural history and theory, and I was already weary of architecture as both a discipline and a means of employment. Unable to afford Berkeley rents, I had moved to a rundown Oakland apartment by a highway overpass. There weren’t any jobs, and I spent most of my days fantasizing an alternative life for myself as a successful novelist. Teaching was my only chance of supporting myself, so I used my love of fiction writing to jump-start my interest in architecture and molded the course into a seven-week graduate seminar I re-titled Spatial Narratives. Rather than presenting a survey of discursive touchstones on space, as the deceased professor had, I would have the students read short stories and excerpts from novels in which buildings figured prominently.

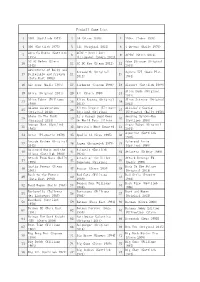

PINBALL NVRAM GAME LIST This List Was Created to Make It Easier for Customers to Figure out What Type of NVRAM They Need for Each Machine

PINBALL NVRAM GAME LIST

This list was created to make it easier for customers to figure out what type of NVRAM they need for each machine. Please consult the product pages at www.pinitech.com for each type of NVRAM for further information on difficulty of installation, any jumper changes necessary on your board(s), a diagram showing location of the RAM being replaced & more.

*NOTE: This list is meant as quick reference only. On Williams WPC and Sega/Stern Whitestar games you should check the RAM currently in your machine since either a 6264 or 62256 may have been used from the factory. On Williams System 11 games you should check that the chip at U25 is 24-pin (6116). See additional diagrams & notes at http://www.pinitech.com/products/cat_memory.php for assistance in locating the RAM on your board(s).

PLUG-AND-PLAY (NO SOLDERING)

Games below already have an IC socket installed on the boards from the factory and are as easy as removing the old RAM and installing the NVRAM (then resetting scores/settings per the manual).

• BALLY 6803 → 6116 NVRAM
• SEGA/STERN WHITESTAR → 6264 OR 62256 NVRAM (check IC at U212, see website)
• DATA EAST → 6264 NVRAM (except Laser War)
• CLASSIC BALLY → 5101 NVRAM
• CLASSIC STERN → 5101 NVRAM (later Stern MPU-200 games use MPU-200 NVRAM)
• ZACCARIA GENERATION 1 → 5101 NVRAM

**NOT** PLUG-AND-PLAY (SOLDERING REQUIRED)

The games below did not have an IC socket installed on the boards. This means the existing RAM needs to be removed from the board & an IC socket installed.

Excerpts from Hagakure (In the Shadow of Leaves)

Primary Source Document with Questions (DBQs)

EXCERPTS FROM HAGAKURE (IN THE SHADOW OF LEAVES)

Introduction

Hagakure (In the Shadow of Leaves) has come to be known as a foundational text of bushidō, the “way of the warrior.” Dictated between 1709 and 1716 by a retired samurai, Yamamoto Tsunetomo (1659-1719), to a young retainer, Tashirō Tsuramoto (1678-1748), Hagakure was less a rigorous philosophical exposition than the spirited reflections of a seasoned warrior. Although it became well known in the 1930s, when a young generation of nationalists embraced the supposed spirit of bushidō, Hagakure was not widely circulated in the Tokugawa period beyond Saga domain on the southern island of Kyushu, Yamamoto Tsunetomo’s home.

Selected Document Excerpts with Questions

From Sources of Japanese Tradition, edited by Wm. Theodore de Bary, Carol Gluck, and Arthur L. Tiedemann, 2nd ed., vol. 2 (New York: Columbia University Press, 2005), 476-478. © 2005 Columbia University Press. Reproduced with the permission of the publisher. All rights reserved.

Excerpts from Hagakure (In the Shadow of Leaves)

I have found that the Way of the samurai is death. This means that when you are compelled to choose between life and death, you must quickly choose death. There is nothing more to it than that. You just make up your mind and go forward. The idea that to die without accomplishing your purpose is undignified and meaningless, just dying like a dog, is the pretentious bushidō of the city slickers of Kyoto and Osaka. In a situation when you have to choose between life and death, there is no way to make sure that your purpose will be accomplished.

Private Constant Returns and Public Shadow Prices

LIBRARY OF THE MASSACHUSETTS INSTITUTE OF TECHNOLOGY

Working paper, Department of Economics

PRIVATE CONSTANT RETURNS AND PUBLIC SHADOW PRICES
P. Diamond and J. Mirrlees
Number 151, February 1975

Massachusetts Institute of Technology, 50 Memorial Drive, Cambridge, Mass. 02139

1. M.I.T. and Nuffield College, Oxford
2. Financial support by N.S.F. gratefully acknowledged

Digitized by the Internet Archive in 2011 with funding from MIT Libraries
http://www.archive.org/details/privateconstantr151diam

1. Introduction

Although there has been little analysis of the extent of its validity, a widely (but sometimes only implicitly) accepted proposition is that governments should generally select production plans which are on the frontiers of their production possibility sets. This implies the existence of a set of shadow prices which can be used for government production decisions. If private production is characterized by constant returns to scale and controlled by price taking profit maximizers and if the economy has optimal commodity taxes and no further distortions, then government production plans should generally be such that aggregate production (government plus private production) is on the frontier of the aggregate production possibility set [5]. It follows that the shadow prices associated with optimal government production are the market prices faced by private producers.

In this note we shall explore the situation where part of the economy exhibits constant returns to scale and is controlled by price taking profit maximizers.

Pinups and Pinball: the Sexualized Female Image in Pinball Artwork

Rochester Institute of Technology
RIT Scholar Works
Theses, 5-2016

Pinups and Pinball: The Sexualized Female Image in Pinball Artwork
Melissa A. Fanton [email protected]

Follow this and additional works at: https://scholarworks.rit.edu/theses

Recommended Citation
Fanton, Melissa A., "Pinups and Pinball: The Sexualized Female Image in Pinball Artwork" (2016). Thesis. Rochester Institute of Technology. Accessed from

This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected].

The Rochester Institute of Technology
College of Liberal Arts

A Thesis Submitted in Partial Fulfillment of the Bachelor of Science Degree in Museum Studies
History Department
by Melissa A. Fanton
May 2016

Contents
Abstract
List of Illustrations
Introduction
Historical Overview of the Development of Pinball Art
Visual Communication & Women and Gender Studies

Pinball Game List

Pinball Game List

1. 2001 (Gottlieb 1971)
2. 24 (Stern 2009)
3. 250cc (Inder 1992)
4. 300 (Gottlieb 1975)
5. 311 (Original 2012)
6. 4 Queens (Bally 1970)
7. Abra Ca Dabra (Gottlieb 1975)
8. ACDC - Devil Girl (Ultimate) (Stern 2012)
9. AC-DC (Stern 2012)
10. AC-DC Helen (Stern 2012)
11. AC-DC Pro (Stern 2012)
12. Adam Strange (Original 2015)
13. Adventures of Rocky and Bullwinkle and Friends (Data East 1993)
14. Aerosmith (Original 2015)
15. Agents 777 (Game Plan 1984)
16. Air Aces (Bally 1975)
17. Airborne (Capcom 1996)
18. Airport (Gottlieb 1969)
19. Akira (Original 2011)
20. Ali (Stern 1980)
21. Alien Dude (Original 2013)
22. Alien Poker (Williams 1980)
23. Alien Racing (Original 2013)
24. Alien Science (Original 2012)
25. Aliens Infestation (Original 2012)
26. Aliens Legacy (Ultimate Edition) (Original 2011)
27. Alladin's Castle (Ultimate) (Bally 1976)
28. Alone In The Dark (Original 2014)
29. Al's Garage Band Goes On World Tour (Alivin G. 1992)
30. Amazing Spider-Man (Gottlieb 1980)
31. Amazon Hunt (Gottlieb 1983)
32. America's Most Haunted
33. Angry Robot (Original 2015)
34. Antar (Playmatic 1979)
35. Apollo 13 (Sega 1995)
36. Aquarius (Gottlieb 1970)
37. Arcade Mayhem (Original 2012)
38. Aspen (Brunswick 1979)
39. Asteroid Annie (Gottlieb 1980)
40. Asteroid Annie and the Aliens (Gottlieb 1980)
41. Atlantis (Gottlieb 1975)
42. Atlantis (Midway 1989)
43. Attack From Mars (Bally 1995)
44. Attack of the Killer Tomatoes (Original 2014)
45. Attack Revenge FM (Bally 1999)
46. Austin Powers (Stern 2001)
47. Avatar (Stern 2010)
48. Back To The Future (Original 2013)
Back to the Future