Node Hardware Performance Convergence

Recommended publications

Historical Perspective and Further Reading 162.E1

2.21 Historical Perspective and Further Reading. This section surveys the history of instruction set architectures over time, and we give a short history of programming languages and compilers. ISAs include accumulator architectures, general-purpose register architectures, stack architectures, and a brief history of ARMv7 and the x86. We also review the controversial subjects of high-level-language computer architectures and reduced instruction set computer architectures. The history of programming languages includes Fortran, Lisp, Algol, C, Cobol, Pascal, Simula, Smalltalk, C++, and Java, and the history of compilers includes the key milestones and the pioneers who achieved them. Accumulator Architectures: Hardware was precious in the earliest stored-program computers. Consequently, computer pioneers could not afford the number of registers found in today’s architectures. In fact, these architectures had a single register for arithmetic instructions. Since all operations would accumulate in one register, it was called the accumulator, and this style of instruction set is given the same name. For example, EDSAC in 1949 had a single accumulator. The three-operand format of RISC-V suggests that a single register is at least two registers shy of our needs. Having the accumulator as both a source operand and the destination of the operation fills part of the shortfall, but it still leaves us one operand short. That final operand is found in memory. [Margin note: accumulator: Archaic term for register. On-line use of it as a synonym for “register” is a fairly reliable indication that the user has been around quite a ...]

Computer Organization EECC 550 Week 1 • Introduction: Modern Computer Design Levels, Components, Technology Trends, Register Transfer Notation (RTN)

Computer Organization EECC 550 Week 1 • Introduction: Modern Computer Design Levels, Components, Technology Trends, Register Transfer Notation (RTN). [Chapters 1, 2] • Instruction Set Architecture (ISA) Characteristics and Classifications: CISC Vs. RISC. [Chapter 2] Week 2 • MIPS: An Example RISC ISA. Syntax, Instruction Formats, Addressing Modes, Encoding & Examples. [Chapter 2] • Central Processor Unit (CPU) & Computer System Performance Measures. [Chapter 4] Week 3 • CPU Organization: Datapath & Control Unit Design. [Chapter 5] Week 4 – MIPS Single Cycle Datapath & Control Unit Design. – MIPS Multicycle Datapath and Finite State Machine Control Unit Design. Week 5 • Microprogrammed Control Unit Design. [Chapter 5] – Microprogramming Project Week 6 • Midterm Review and Midterm Exam Week 7 • CPU Pipelining. [Chapter 6] • The Memory Hierarchy: Cache Design & Performance. [Chapter 7] Week 8 • The Memory Hierarchy: Main & Virtual Memory. [Chapter 7] Week 9 • Input/Output Organization & System Performance Evaluation. [Chapter 8] Week 10 • Computer Arithmetic & ALU Design. [Chapter 3] If time permits. Week 11 • Final Exam. EECC550 - Shaaban #1 Lec # 1 Winter 2005 11-29-2005 Computing System History/Trends + Instruction Set Architecture (ISA) Fundamentals • Computing Element Choices: – Computing Element Programmability – Spatial vs. Temporal Computing – Main Processor Types/Applications • General Purpose Processor Generations • The Von Neumann Computer Model • CPU Organization (Design) • Recent Trends in Computer Design/performance • Hierarchy

486 COMMON SOCKET IMPLEMENTATION by J

486 COMMON SOCKET IMPLEMENTATION by J. Lombard, M. Lerman

1. Overview. This document provides detailed information regarding the differences in pinouts between SGS-THOMSON Microelectronics (ST) and Intel 486 PGA devices and specifies electrical connections that allow a single motherboard design to support all of the listed devices. This implementation is intended to be a guideline to eliminate conflicts due to pinout differences only. This common socket specification is intended to support the following devices: SGS-THOMSON ST486 DX2; CYRIX Cx486 DX2; SGS-THOMSON ST486 DX2V; SGS-THOMSON ST486 DX4V; SGS-THOMSON ST5x86; CYRIX Cx5x86; INTEL i486 DX2 SL & WB Enhanced; INTEL i486 DX4; AMD AM486 DX2 (Future SL & WB Enhanced); AMD AM486 DX4 (Future SL & WB Enhanced).

For additional information, please contact SGS-Thomson Microelectronics. Information in this document is subject to change without notification. The following documents were used as references: Intel486 Microprocessor Family, AM486 Microprocessor Family System Design Consideration. Any functions not disclosed in the referenced documents are NOT covered by the scope of this specification.

2. CPU Features. Each of the CPUs supported in this common socket specification is 486 bus compatible yet has a unique set of features that impacts the device pinout. Table 2-1 lists the differences in the CPU feature sets.

Table 2-1. CPU Enhanced Features

| CPU | Voltage | Write-back | SMM & power management | Core clock control | JTAG |
|---|---|---|---|---|---|
| ST486DX2, Cx486DX2 | 5 V | yes | yes | no | no |
| ST486DX2V, Future AM486DX2 | 3.45V, 5V I/O | yes | yes | no | no |
| ST486DX4V, Future AM486DX2 | 3.45V, 5V I/O | yes | yes | 2x, 3x | no |
| ST5x86, Cx5x86 | 3.45V, 5V I/O | yes | yes | 0.5x, 1x, 2x, 3x | yes |
| Intel i486DX/DX2 SL & WB Enh. | 5 V | yes | yes | no | yes |

MICROPROCESSOR REPORT the INSIDERS’ GUIDE to MICROPROCESSOR HARDWARE Slot Vs

VOLUME 12, NUMBER 1 JANUARY 26, 1998 MICROPROCESSOR REPORT THE INSIDERS’ GUIDE TO MICROPROCESSOR HARDWARE Slot vs. Socket Battle Heats Up: Intel Prepares for Transition as Competitors Boost Socket 7, by Michael Slater. The past year has brought a great deal of change to the x86 microprocessor market, with Intel, AMD, and Cyrix replacing virtually their entire product lines with new devices. But despite high hopes, AMD and Cyrix struggled in vain for profits. The financial contrast is stark: in 1997, Intel earned a record $6.9 billion in net profit, while AMD lost $21 million for the year and Cyrix lost $6 million in the six months before it was acquired by National. New entrant IDT added another competitor to the mix but hasn’t shipped enough products to become a significant force. [...] ship as many parts as they hoped, especially at the highest clock speeds where profits are much greater. The shift to 0.25-micron technology will be central to 1998’s CPU developments. Intel began shipping 0.25-micron processors in 3Q97; AMD followed late in 1997, IDT plans to join in by mid-98, and Cyrix expects to catch up in 3Q98. The more advanced process technology will cut power consumption, allowing sixth-generation CPUs to be used in notebook systems. The smaller die sizes will enable higher production volumes and make it possible to integrate an L2 cache on the CPU chip. The processors from Intel’s challengers have lagged in floating-point and MMX performance, which the vendors

Extensions to Instruction Sets

Extensions to Instruction Sets Ricardo Manuel Meira Ferrão Luis Departamento de Informática, Universidade do Minho 4710 - 057 Braga, Portugal Abstract. This communication analyses extensions to instruction sets in general purpose processors, together with their evolution and significant innovation. It begins with an overview of specific multimedia and digital signal processing requirements and then it focuses on feature detection to properly identify the adequate extension. A brief performance evaluation on two competitive processors closes the communication. 1 Introduction The purpose of this communication is to analyse extensions to instruction sets, describe the specific multimedia and digital signal processing (DSP) requirements of a processor, detail how to detect these extensions and verify if the processor supports them. To achieve this functionality an IA32 architecture example code will be demonstrated. To study the instruction sets supported by each processor, an analysis is performed to compare and distinguish each technology in terms of new extensions to instruction sets and architecture evolution, mapping a table that lists the instruction sets supported by each processor. To clearly identify these differences between architectures, tests are performed with an application example, determining comparable results of two competitive processors. In this communication, Section 2 gives an overview of multimedia and digital signal processing requirements, Section 3 explains the feature detection of these instructions, Section 4 details some of the extensions supported by IA32 based processors, Section 5 tests and describes the performance of each technology and analyses the results. Section 6 concludes this communication. 2 Multimedia and DSP Requirements Computer industries needed a way to improve their multimedia capabilities because of the demand for 3D graphics, video and other multimedia applications such as games.

Phaser 600 Color Printer User Manual

WELCOME! This is the home page of the Phaser 600 Color Printer User Manual. See Tips on using this guide, or go immediately to the Contents. Phaser® 600 Wide-Format Color Printer Tips on using this guide ■ Use the navigation buttons in Acrobat Reader to move through the document: [buttons numbered 1 to 6] 1 and 4 are the First Page and Last Page buttons. These buttons move to the first or last page of a document. 2 and 3 are the Previous Page and Next Page buttons. These buttons move the document backward or forward one page at a time. 5 and 6 are the Go Back and Go Forward buttons. These buttons let you retrace your steps through a document, moving to each page or view in the order visited. ■ For best results, use the Adobe Acrobat Reader version 2.1 to read this guide. Version 2.1 of the Acrobat Reader is supplied on your printer’s CD-ROM. ■ Click on the page numbers in the Contents on the following pages to open the topics you want to read. ■ You can click on the page numbers following keywords in the Index to open topics of interest. ■ You can also use the Bookmarks provided by the Acrobat Reader to navigate through this guide. If the Bookmarks are not already displayed at the left of the window, select Bookmarks and Page from the View menu. ■ If you have difficulty reading any small type or seeing the details in any of the illustrations, you can use the Acrobat Reader’s Magnification feature.

Communication Theory II

Microprocessor (COM 9323) Lecture 2: Review on Intel Family. Ahmed Elnakib, PhD, Assistant Professor, Mansoura University, Egypt. Feb 17th, 2016. Text Book/References. Textbook: 1. The Intel Microprocessors, Architecture, Programming and Interfacing, 8th edition, Barry B. Brey, Prentice Hall, 2009 2. Assembly Language for x86 processors, 6th edition, K. R. Irvine, Prentice Hall, 2011 References: 1. Computer Architecture: A Quantitative Approach, 5th edition, J. Hennessy, D. Patterson, Elsevier, 2012. 2. The 80x86 Family, Design, Programming and Interfacing, 3rd edition, Prentice Hall, 2002 3. The 80x86 IBM PC and Compatible Computers, Assembly Language, Design, and Interfacing, 4th edition, M.A. Mazidi and J.G. Mazidi, Prentice Hall, 2003 Lecture Objectives: 1. Provide an overview of the various 80X86 and Pentium family members 2. Define the contents of the memory system in the personal computer 3. Convert between binary, decimal, and hexadecimal numbers 4. Differentiate and represent numeric and alphabetic information as integers, floating-point, BCD, and ASCII data 5. Understand basic computer terminology (bit, byte, data, real memory system, protected mode memory system, Windows, DOS, I/O) Brief History of the Computers: o 1946: ENIAC (Electronic Numerical Integrator and Computer), a first-generation computer based on vacuum tube technology, came into use at the University of Pennsylvania. o 1970s: the entire CPU was put on a single chip (1971: Intel's first microprocessor, the 4004, with a 4-bit data bus, 2300 transistors, and 45 instructions). o Late 1970s: the Intel 8080/85 appeared with an 8-bit data bus and a 16-bit address bus and was used in everything from traffic light controllers to homemade computers (8085: 246 instruction set, RISC*). o 1981: the first PC was introduced by IBM with the Intel 8088 (CISC**: over 20,000 instructions) microprocessor. o Motorola emerged with the 6800.

Intel Prepares MMX CPU Wave After Weak 1996, AMD and Cyrix Ready 1997 Counterstrike

MICRODESIGN RESOURCES SPECIAL REPORT Intel Prepares MMX CPU Wave: After Weak 1996, AMD and Cyrix Ready 1997 Counterstrike, by Michael Slater. Nineteen ninety-six was a remarkably successful year for Intel and a difficult one for its competitors. It was a quiet year for Intel in terms of new products, but the company nevertheless increased both its market share and its gross margin, thanks in large part to weak performances from AMD and Cyrix. (See list on page 14 for a summary of the key events of 1996 and pointers to Microprocessor Report’s coverage.) This year promises to be far more turbulent. Not only is Intel introducing two major new product lines, P55C (officially, Pentium Processor with MMX Technology) and Klamath, but AMD and Cyrix will each launch their own next-generation processors, which will be more formidable competitors to Intel’s line. Intel is sure to do well despite the stepped-up challenge, but its dominance of the market is unlikely to be quite as complete in 1997 as in 1996. Intel Shifting into High Gear: In 1996, Intel’s microprocessor lineup changed little. After the introduction of the 150- and 166-MHz Pentiums in January, the only new products were the 200-MHz Pentium and 150-MHz Mobile Pentium. The P55C would have been a great kicker for fall sales, but Intel apparently didn’t have the part ready in time. Intel began producing P55C processors in the late fall but chose not to announce the part until January in order to minimize the impact on the Christmas selling season.

RISC-V Geneology

RISC-V Geneology Tony Chen David A. Patterson Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2016-6 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-6.html January 24, 2016 Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Introduction: RISC-V is an open instruction set designed along RISC principles developed originally at UC Berkeley and is now set to become an open industry standard under the governance of the RISC-V Foundation (www.riscv.org). Since the instruction set architecture (ISA) is unrestricted, organizations can share implementations as well as open source compilers and operating systems. Designed for use in custom systems on a chip, RISC-V consists of a base set of instructions called RV32I along with optional extensions for multiply and divide (RV32M), atomic operations (RV32A), single-precision floating point (RV32F), and double-precision floating point (RV32D). The base and these four extensions are collectively called RV32G. This report discusses the historical precedents of RV32G. We look at 18 prior instruction set architectures, chosen primarily from earlier UC Berkeley RISC architectures and major proprietary RISC instruction sets. Among the 122 instructions in RV32G: ● 6 instructions do not have precedents among the selected instruction sets, ● 98 instructions of the 116 with precedents appear in at least three different instruction sets.

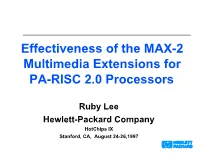

Effectiveness of the MAX-2 Multimedia Extensions for PA-RISC 2.0 Processors

Effectiveness of the MAX-2 Multimedia Extensions for PA-RISC 2.0 Processors. Ruby Lee, Hewlett-Packard Company. HotChips IX, Stanford, CA, August 24-26, 1997. Outline: Introduction; PA-RISC MAX-2 features and examples (Mix; Permute; Multiply with Shift&Add; Conditionals with Saturation Arith, e.g., Absolute Values); Performance Comparison with / without MAX-2. General-Purpose Workloads will include Increasing Amounts of Media Processing. [Figure: functionality vs. time (1980, 1990, 2000); labels: Computation Tool, Real-time Information Access Tool, Distributed Multimedia Communications Tool.] Multimedia Extensions for General-Purpose Processors: MAX-1 for HP PA-RISC (product Jan '94); VIS for Sun Sparc (H2 '95); MAX-2 for HP PA-RISC (product Mar '96); MMX for Intel x86 (chips Jan '97); MDMX for SGI MIPS-V (tbd); MVI for DEC Alpha (tbd). Ideally, different media streams map onto both the integer and floating-point datapaths of microprocessors. [Figure: PA-RISC 2.0 Processor Datapath; inputs: images, video, graphics, audio; blocks: GR, FP, ALU, SMU, FMAC, Mem.] Subword Parallelism in a General-Purpose Processor with Multimedia Extensions. [Figure: General Regs. feeding two partitionable 64-bit ALUs; 8 ops / cycle.] Subword Parallel MAX-2 Instructions in PA-RISC 2.0: Parallel Add (modulo or saturation); Parallel Subtract (modulo or saturation); Parallel Shift Right (1, 2 or 3 bits) and Add; Parallel Shift Left (1, 2 or 3 bits) and Add; Parallel Average; Parallel Shift Right (n bits); Parallel Shift Left (n bits); Mix; Permute. MAX-2 Leverages Existing Processing Resources. [Figure labels: INTEGER, FLOAT, General Regs.]

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture Andrew Waterman Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2016-1 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html January 3, 2016 Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Design of the RISC-V Instruction Set Architecture by Andrew Shell Waterman. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor David Patterson, Chair; Professor Krste Asanović; Associate Professor Per-Olof Persson. Spring 2016. Abstract: The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary.

IDT Winchip 2A Data Sheet

Preliminary Information January 1999 IDT WINCHIP 2A™ PROCESSOR DATA SHEET This is Version 1.0 of the IDT WinChip 2 version A Processor data sheet. The latest versions of this data sheet may be obtained from www.winchip.com © 1999 Integrated Device Technology, Inc. All Rights Reserved Integrated Device Technology, Inc. (IDT) reserves the right to make changes in its products without notice in order to improve design or performance characteristics. This publication neither states nor implies any representations or warranties of any kind, including but not limited to any implied warranty of merchantability or fitness for a particular purpose. No license, express or implied, to any intellectual property rights is granted by this document. IDT makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication or the information contained herein, and reserves the right to make changes at any time, without notice. IDT disclaims responsibility for any consequences resulting from the use of the information included herein. LIFE SUPPORT POLICY Integrated Device Technology's products are not authorized for use as components in life support or other medical devices or systems (hereinafter life support devices) unless a specific written agreement pertaining to such intended use is executed between the manufacturer and an officer of IDT. 1. Life support devices are devices which (a) are intended for surgical implant into the body or (b) support or sustain life and whose failure to perform, when properly used in accordance with instructions for use provided in the labeling, can be reasonably expected to result in a significant injury to the user.