Recommended publications

Security Assurance Requirements for Linux Application Container Deployments

NISTIR 8176: Security Assurance Requirements for Linux Application Container Deployments. Ramaswamy Chandramouli, Computer Security Division, Information Technology Laboratory. October 2017. This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176

U.S. Department of Commerce: Wilbur L. Ross, Jr., Secretary. National Institute of Standards and Technology: Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology.

National Institute of Standards and Technology Internal Report 8176, 37 pages (October 2017).

Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose.

There may be references in this publication to other publications currently under development by NIST in accordance with its assigned statutory responsibilities. The information in this publication, including concepts and methodologies, may be used by federal agencies even before the completion of such companion publications. Thus, until each publication is completed, current requirements, guidelines, and procedures, where they exist, remain operative. For planning and transition purposes, federal agencies may wish to closely follow the development of these new publications by NIST.

Flexible Lustre Management

Flexible Lustre management: Making less work for Admins. ORNL is managed by UT-Battelle for the US Department of Energy.

How do we know Lustre condition today
• Polling proc / sysfs files
  – The knocking on the door model
  – Parse stats, rpc info, etc. for performance deviations.
• Constant collection of debug logs
  – Heavy parsing for common problems.
• The death of a node
  – Have to examine kdumps and/or lustre dump

Origins of a new approach
• Requirements for Linux kernel integration.
  – No more proc usage
  – Migration to sysfs and debugfs
  – Used to configure your file system.
  – Started in Lustre 2.9 and still ongoing.
• Two ways to configure your file system.
  – On MGS server run lctl conf_param …
    • Directly accessed proc seq_files.
  – On MGS server run lctl set_param -P
    • Originally used an upcall to lctl for configuration
    • Introduced in Lustre 2.4 but was broken until Lustre 2.12 (LU-7004)
  – Configuring file system works transparently before and after sysfs migration.

Changes introduced with sysfs / debugfs migration
• sysfs has a one item per file rule.
• Complex proc files moved to debugfs
• Moving to debugfs introduced permission problems
  – Only debugging files should be there.
  – Both debugfs and procfs have scaling issues.
• Moving to sysfs introduced the ability to send uevents
  – Item of most interest from LUG 2018 Linux Lustre client talk.
  – Both lctl conf_param and lctl set_param -P use this approach
    • lctl conf_param can set sysfs attributes without uevents. See class_modify_config()
  – We get life cycle events for free
  – udev is now involved.

What do we get by using udev?
• Under the hood
  – uevents are collected by systemd and then processed by udev rules
  – /etc/udev/rules.d/99-lustre.rules
  – SUBSYSTEM=="lustre", ACTION=="change", ENV{PARAM}=="?*", RUN+="/usr/sbin/lctl set_param '$env{PARAM}=$env{SETTING}'"
• You can create your own udev rule
  – http://reactivated.net/writing_udev_rules.html
  – /lib/udev/rules.d/* for examples
  – Add udev_log="debug" to /etc/udev.conf if you have problems
• Using systemd for long tasks.
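The matching logic of the udev rule quoted above can be modelled in a few lines. This is only a sketch: the function name `lctl_command_for_uevent` and the sample parameter and value are illustrative assumptions, not part of Lustre or udev. It shows which uevents the rule would act on and what command line it would produce.

```python
# Hypothetical model of the quoted udev rule; not a Lustre or udev API.
LCTL = "/usr/sbin/lctl"  # path taken from the rule text


def lctl_command_for_uevent(env):
    """Return the argv the rule would run for this uevent, or None if it does not match."""
    if env.get("SUBSYSTEM") != "lustre":
        return None
    if env.get("ACTION") != "change":
        return None
    param = env.get("PARAM", "")
    if not param:  # ENV{PARAM}=="?*" only matches a non-empty value
        return None
    setting = env.get("SETTING", "")
    return [LCTL, "set_param", f"{param}={setting}"]


if __name__ == "__main__":
    # Example values are assumptions chosen for illustration.
    event = {
        "SUBSYSTEM": "lustre",
        "ACTION": "change",
        "PARAM": "osc.*.max_dirty_mb",
        "SETTING": "64",
    }
    print(lctl_command_for_uevent(event))
```

Returning an argument list instead of a shell string sidesteps the quoting that the rule itself has to handle with single quotes.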

Version 7.8-Systemd

Linux From Scratch, Version 7.8-systemd. Created by Gerard Beekmans. Edited by Douglas R. Reno. Copyright © 1999-2015, Gerard Beekmans. All rights reserved. This book is licensed under a Creative Commons License. Computer instructions may be extracted from the book under the MIT License. Linux® is a registered trademark of Linus Torvalds.

Table of Contents
Preface
i. Foreword
ii. Audience
iii. LFS Target Architectures
iv. LFS and Standards
v. Rationale for Packages in the Book
vi. Prerequisites

In Search of the Ideal Storage Configuration for Docker Containers

In Search of the Ideal Storage Configuration for Docker Containers. Vasily Tarasov¹, Lukas Rupprecht¹, Dimitris Skourtis¹, Amit Warke¹, Dean Hildebrand¹, Mohamed Mohamed¹, Nagapramod Mandagere¹, Wenji Li², Raju Rangaswami³, Ming Zhao². ¹IBM Research, Almaden; ²Arizona State University; ³Florida International University.

Abstract: Containers are a widely successful technology today popularized by Docker. Containers improve system utilization by increasing workload density. Docker containers enable seamless deployment of workloads across development, test, and production environments. Docker's unique approach to data management, which involves frequent snapshot creation and removal, presents a new set of exciting challenges for storage systems. At the same time, storage management for Docker containers has remained largely unexplored with a dizzying array of solution choices and configuration options. In this paper we unravel the multi-faceted nature of Docker storage and demonstrate its impact on system and workload performance. As we uncover new properties of the popular Docker storage drivers, this is a sobering reminder that widespread use of new technologies can often precede their careful evaluation.

[…] every running container. This would cause a great burden on the I/O subsystem and make container start time unacceptably high for many workloads. As a result, copy-on-write (CoW) storage and storage snapshots are popularly used and images are structured in layers. A layer consists of a set of files and layers with the same content can be shared across images, reducing the amount of storage required to run containers. With Docker, one can choose Aufs [6], Overlay2 [7], Btrfs [8], or device-mapper (dm) [9] as storage drivers which provide the required snapshotting and CoW capabilities for images. None of these solutions, however, were designed with Docker in mind and their effectiveness for Docker has not been

Multicore Operating-System Support for Mixed Criticality∗

Multicore Operating-System Support for Mixed Criticality∗. James H. Anderson, Sanjoy K. Baruah, and Björn B. Brandenburg. The University of North Carolina at Chapel Hill.

Abstract: Ongoing research is discussed on the development of operating-system support for enabling mixed-criticality workloads to be supported on multicore platforms. This work is motivated by avionics systems in which such workloads occur. In the mixed-criticality workload model that is considered, task execution costs may be determined using more-stringent methods at high criticality levels, and less-stringent methods at low criticality levels. The main focus of this research effort is devising mechanisms for providing “temporal isolation” across criticality levels: lower levels should not adversely “interfere” with higher levels.

1 Introduction

The evolution of computational frameworks for avionics […]

Different criticality levels provide different levels of assurance against failure. In current avionics designs, highly-critical software is kept physically separate from less-critical software. Moreover, no currently deployed aircraft uses multicore processors to host highly-critical tasks (more precisely, if multicore processors are used, and if such a processor hosts highly-critical applications, then all but one of its cores are turned off). These design decisions are largely driven by certification issues. For example, certifying highly-critical components becomes easier if potentially adverse interactions among components executing on different cores through shared hardware such as caches are simply “defined” not to occur. Unfortunately, hosting an overall workload as described here clearly wastes processing resources.

In this paper, we propose operating-system infrastructure that allows applications of different criticalities to be co-hosted on a multicore platform.

Scalability of VM Provisioning Systems

Scalability of VM Provisioning Systems. Mike Jones, Bill Arcand, Bill Bergeron, David Bestor, Chansup Byun, Lauren Milechin, Vijay Gadepally, Matt Hubbell, Jeremy Kepner, Pete Michaleas, Julie Mullen, Andy Prout, Tony Rosa, Siddharth Samsi, Charles Yee, Albert Reuther. Lincoln Laboratory Supercomputing Center, MIT Lincoln Laboratory, Lexington, MA, USA.

Abstract: Virtual machines and virtualized hardware have been around for over half a century. The commoditization of the x86 platform and its rapidly growing hardware capabilities have led to recent exponential growth in the use of virtualization both in the enterprise and high performance computing (HPC). The startup time of a virtualized environment is a key performance metric for high performance computing in which the runtime of any individual task is typically much shorter than the lifetime of a virtualized service in an enterprise context. In this paper, a methodology for accurately measuring the startup performance on an HPC system is described. The startup performance overhead of three of the most mature, widely deployed cloud management frameworks (OpenStack, OpenNebula, and Eucalyptus) is measured to determine their suitability for workloads typically seen in an HPC environment.

[…] developed a technique based on binary code substitution (binary translation) that enabled the execution of privileged (OS) instructions from virtual machines on x86 systems [16]. Another notable effort was the Xen project, which in 2003 used a jump table for choosing bare metal execution or virtual machine execution of privileged (OS) instructions [17]. Such projects prompted Intel and AMD to add the VT-x [19] and AMD-V [18] virtualization extensions to the x86 and x86-64 instruction sets in 2006, further pushing the performance and adoption of virtual machines.

Virtual machines have seen use in a variety of applications, but with the move to highly capable multicore CPUs, gigabit Ethernet network cards, and VM-aware x86/x86-64 operating

Resource Management: Linux Kernel Namespaces and Cgroups

Resource management: Linux kernel Namespaces and cgroups. Rami Rosen, Haifux, May 2013, www.haifux.org. http://ramirose.wix.com/ramirosen

TOC
● Network Namespace
● PID namespaces
● UTS namespace
● Mount namespace
● user namespaces
● cgroups
● Mounting cgroups
● links

Note: All code examples are from the for_3_10 branch of the cgroup git tree (3.9.0-rc1, April 2013).

General
The presentation deals with two Linux process resource management solutions: namespaces and cgroups. We will look at:
● Kernel implementation details.
  ● What was added/changed in brief.
● User space interface.
● Some working examples.
● Usage of namespaces and cgroups in other projects.
● Is process virtualization indeed lightweight compared to OS virtualization?
  ● Compared to VMWare/qemu/scaleMP or even to Xen/KVM.

Namespaces
● Namespaces: lightweight process virtualization.
  – Isolation: Enable a process (or several processes) to have different views of the system than other processes.
  – 1992: “The Use of Name Spaces in Plan 9”
  – http://www.cs.bell-labs.com/sys/doc/names.html
    ● Rob Pike et al, ACM SIGOPS European Workshop 1992.
  – Much like Zones in Solaris.
  – No hypervisor layer (as in OS virtualization like KVM, Xen)
  – Only one system call was added (setns())
  – Used in Checkpoint/Restart
● Developers: Eric W. Biederman, Pavel Emelyanov, Al Viro, Cyrill Gorcunov, more.

Namespaces (contd.)
There are currently 6 namespaces:
● mnt (mount points, filesystems)
● pid (processes)
● net (network stack)
● ipc (System V IPC)
● uts (hostname)
● user (UIDs)

Namespaces (contd.)
It was intended that there would be 10 namespaces; the following 4 namespaces are not implemented (yet):
● security namespace
● security keys namespace
● device namespace
● time namespace
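As a small illustration of the user space interface to the namespaces listed above: on reasonably recent Linux kernels, each entry under `/proc/<pid>/ns/` is a symbolic link whose target looks like `net:[4026531956]`, and two processes share a namespace when those inode numbers match. The sketch below parses such link targets; the helper names `parse_ns_link` and `namespaces_of` are assumptions made for this example and do not come from the slides.

```python
import os
import re

# Link targets under /proc/<pid>/ns look like "net:[4026531956]".
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a namespace link target into (kind, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link target: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def namespaces_of(pid="self"):
    """Map each entry in /proc/<pid>/ns to its namespace inode number (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            target = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry vanished or is not readable; skip it
        _kind, inode = parse_ns_link(target)
        result[name] = inode
    return result


if __name__ == "__main__":
    if os.path.isdir("/proc/self/ns"):
        for name, inode in namespaces_of().items():
            print(name, inode)
```

Comparing the result of `namespaces_of()` for two PIDs is a quick way to check whether a containerised process really sits in a different `net` or `pid` namespace than the shell that started it.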

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV)

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV). White Paper, August 2015. Order Number: 332860-001US.

Legal Lines and Disclaimers: You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting: http://www.intel.com/design/literature.htm. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at http://www.intel.com/ or from the OEM or retailer. Results have been estimated or simulated using internal Intel analysis or architecture simulation or modeling, and provided to you for informational purposes. Any differences in your system hardware, software or configuration may affect your actual performance. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Tests document performance of components on a particular test, in specific systems.

Containerization Introduction to Containers, Docker and Kubernetes

Containerization: Introduction to Containers, Docker and Kubernetes. EECS 768. Apoorv Ingle.

Containers
• Containers: lightweight VM or chroot on steroids
• Feels like a virtual machine
  • Get a shell
  • Install packages
  • Run applications
  • Run services
• But not really
  • Uses host kernel
  • Cannot boot OS
  • Does not need PID 1
  • Process visible to host machine
• VM vs Containers
• Container Anatomy
  • cgroup: limit the use of resources
  • namespace: limit what processes can see (hence use)
• cgroup
  • Resource metering and limiting
    • CPU
    • IO
    • Network
    • etc.
  • $ ls /sys/fs/cgroup
• Separate hierarchies for each resource subsystem (CPU, IO, etc.)
  • Each process belongs to exactly 1 node
  • Node is a group of processes
  • Share resource
• CPU cgroup
  • Keeps track of
    • user/system CPU
    • Usage per CPU
  • Can set weights
• CPUset cgroup
  • Reserve CPUs for specific applications
  • Avoids context switch overheads
  • Useful for non-uniform memory access (NUMA)
• Memory cgroup
  • Tracks pages used by each group
  • Pages can be shared across groups
  • Pages “charged” to a group
  • Shared pages “split the cost”
  • Set limits on usage
• Namespaces
  • Provides a view of the system to a process
  • Controls what a process can see
  • Multiple namespaces
    • pid
    • net
    • mnt
    • uts
    • ipc
    • user
• PID namespace
  • Processes within a PID namespace see only processes in the same namespace
  • Each PID namespace has its own numbering starting from 1
  • Namespace is killed when PID 1 goes away
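The statement that each process belongs to exactly one node per hierarchy can be checked from user space: Linux exposes a process's cgroup membership in `/proc/<pid>/cgroup`, one line per hierarchy in the form `hierarchy-ID:controller-list:cgroup-path`. The following is a minimal sketch that parses that format; the function name `parse_proc_cgroup` and the sample text in the comments are assumptions for illustration only.

```python
import os


def parse_proc_cgroup(text):
    """Parse the contents of /proc/<pid>/cgroup.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path".
    Returns a list of (hierarchy_id, [controllers], path) tuples.
    """
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split at most twice, since the path itself may contain colons.
        hierarchy_id, controllers, path = line.split(":", 2)
        names = controllers.split(",") if controllers else []
        entries.append((int(hierarchy_id), names, path))
    return entries


if __name__ == "__main__":
    proc_file = "/proc/self/cgroup"
    if os.path.exists(proc_file):
        with open(proc_file) as handle:
            for hierarchy_id, names, path in parse_proc_cgroup(handle.read()):
                print(hierarchy_id, ",".join(names) or "(none)", path)
```

On a cgroups v1 system the output has one line per mounted hierarchy; on a pure cgroups v2 system there is a single line with hierarchy ID 0 and an empty controller list.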

Architectural Decisions for LinuxONE Hypervisors

July 2019 Webcast: Virtualization options for Linux on IBM Z & LinuxONE
Richard Young, Executive IT Specialist, Virtualization and Linux, IBM Systems Lab Services
Wilhelm Mild, IBM Executive IT Architect for Mobile, IBM Z and Linux, IBM R&D Lab, Germany
© Copyright IBM Corporation 2018

Agenda
➢ Benefits of virtualization
• Available virtualization options
• Considerations for virtualization decisions
• Virtualization options for LinuxONE & Z
  • Firmware hypervisors
  • Software hypervisors
  • Software containers
• Firmware hypervisor decision guide
• Virtualization decision guide
• Summary

Why do we virtualize? What are the benefits of virtualization?
▪ Simplification: use of standardized images, virtualized hardware, and automated configuration of virtual infrastructure
▪ Migration: one of the first uses of virtualization; enables coexistence, phased upgrades and migrations. It can also simplify hardware upgrades by making changes transparent.
▪ Efficiency: reduced hardware footprints, better utilization of available hardware resources, and reduced time to delivery. Reuse of deprovisioned or relinquished resources.
▪ Resilience: run new versions and old versions in parallel, avoiding service downtime
▪ Cost savings: having fewer machines translates to lower costs in server hardware, networking, floor space, electricity, administration (perceived)
▪ To accommodate growth: virtualization allows the IT department to be more responsive to business growth, hopefully avoiding interruption

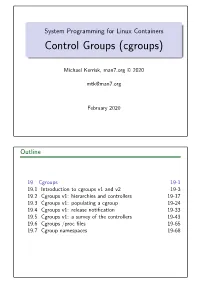

Control Groups (Cgroups)

System Programming for Linux Containers: Control Groups (cgroups). Michael Kerrisk, man7.org © 2020. February 2020.

Outline
19 Cgroups
19.1 Introduction to cgroups v1 and v2
19.2 Cgroups v1: hierarchies and controllers
19.3 Cgroups v1: populating a cgroup
19.4 Cgroups v1: release notification
19.5 Cgroups v1: a survey of the controllers
19.6 Cgroups /proc files
19.7 Cgroup namespaces

Goals
• Cgroups is a big topic
  • Many controllers
  • V1 versus V2 interfaces
• Our goal: understand fundamental semantics of cgroup filesystem and interfaces
• Useful from a programming perspective
  • How do I build container frameworks?
  • What else can I build with cgroups?
• And useful from a system engineering perspective
  • What’s going on underneath my container’s hood?

Focus
• We’ll focus on:
  • General principles of operation; goals of cgroups
  • The cgroup filesystem
  • Interacting with the cgroup filesystem using shell commands
  • Problems with cgroups v1, motivations for cgroups v2
  • Differences between cgroups v1 and v2
• We’ll look briefly at some of the controllers

Amazon WorkSpaces Administration Guide

Amazon WorkSpaces: Administration Guide. Copyright © Amazon Web Services, Inc. and/or its affiliates. All rights reserved. Amazon's trademarks and trade dress may not be used in connection with any product or service that is not Amazon's, in any manner that is likely to cause confusion among customers, or in any manner that disparages or discredits Amazon. All other trademarks not owned by Amazon are the property of their respective owners, who may or may not be affiliated with, connected to, or sponsored by Amazon.

Table of Contents
What is WorkSpaces?
  Features
  Architecture
  Access your WorkSpace
  Pricing
  How to get started
Basics: Installation