Package 'Tram'

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Free Tram Zone

Melbourne’s Free Tram Zone Look for the signage at tram stops to identify the boundaries of the zone. Stop 0 Stop 8 For more information visit ptv.vic.gov.au Peel Street VICTORIA ST Victoria Street & Victoria Street & Peel Street Carlton Gardens Stop 7 Melbourne Star Observation Wheel Queen Victoria The District Queen Victoria Market ST ELIZABETH Melbourne Museum Market & IMAX Cinema t S n o s WILLIAM ST WILLIAM l o DOCKLANDS DR h ic Stop 8 N Melbourne Flagstaff QUEEN ST Gardens Central Station Royal Exhibition Building St Vincent’s LA TROBE ST LA TROBE ST VIC. PDE Hospital SPENCER ST KING ST WILLIAM ST ELIZABETH ST ST SWANSTON RUSSELL ST EXHIBITION ST HARBOUR ESP HARBOUR Flagstaff Melbourne Stop 0 Station Central State Library Station VICTORIA HARBOUR WURUNDJERI WAY of Victoria Nicholson Street & Victoria Parade LONSDALE ST LONSDALE ST Stop 0 Parliament Station Parliament Station VICTORIA HARBOUR PROMENADE Nicholson Street Marvel Stadium Library at the Dock SPRING ST Parliament BOURKE ST BOURKE ST BOURKE ST House YARRA RIVER COLLINS ST Old Treasury Southern Building Cross Station KING ST WILLIAM ST ST MARKET QUEEN ST ELIZABETH ST ST SWANSTON RUSSELL ST EXHIBITION ST COLLINS ST SPENCER ST COLLINS ST COLLINS ST Stop 8 St Paul’s Cathedral Spring Street & Collins Street Fitzroy Gardens Immigration Treasury Museum Gardens WURUNDJERI WAY FLINDERS ST FLINDERS ST Stop 8 Spring Street SEA LIFE Melbourne & Flinders Street Aquarium YARRA RIVER Flinders Street Station Federation Square Stop 24 Stop Stop 3 Stop 6 Don’t touch on or off if Batman Park Flinders Street Federation Russell Street Eureka & Queensbridge Tower Square & Flinders Street you’re just travelling in the SkyDeck Street Arts Centre city’s Free Tram Zone. -

Interstate Commerce Commission Washington

INTERSTATE COMMERCE COMMISSION WASHINGTON REPORT NO. 3374 PACIFIC ELECTRIC RAILWAY COMPANY IN BE ACCIDENT AT LOS ANGELES, CALIF., ON OCTOBER 10, 1950 - 2 - Report No. 3374 SUMMARY Date: October 10, 1950 Railroad: Pacific Electric Lo cation: Los Angeles, Calif. Kind of accident: Rear-end collision Trains involved; Freight Passenger Train numbers: Extra 1611 North 2113 Engine numbers: Electric locomo tive 1611 Consists: 2 muitiple-uelt 10 cars, caboose passenger cars Estimated speeds: 10 m. p h, Standing ft Operation: Timetable and operating rules Tracks: Four; tangent; ] percent descending grade northward Weather: Dense fog Time: 6:11 a. m. Casualties: 50 injured Cause: Failure properly to control speed of the following train in accordance with flagman's instructions - 3 - INTERSTATE COMMERCE COMMISSION REPORT NO, 3374 IN THE MATTER OF MAKING ACCIDENT INVESTIGATION REPORTS UNDER THE ACCIDENT REPORTS ACT OF MAY 6, 1910. PACIFIC ELECTRIC RAILWAY COMPANY January 5, 1951 Accident at Los Angeles, Calif., on October 10, 1950, caused by failure properly to control the speed of the following train in accordance with flagman's instructions. 1 REPORT OF THE COMMISSION PATTERSON, Commissioner: On October 10, 1950, there was a rear-end collision between a freight train and a passenger train on the Pacific Electric Railway at Los Angeles, Calif., which resulted in the injury of 48 passengers and 2 employees. This accident was investigated in conjunction with a representative of the Railroad Commission of the State of California. 1 Under authority of section 17 (2) of the Interstate Com merce Act the above-entitled proceeding was referred by the Commission to Commissioner Patterson for consideration and disposition. -

Interstate Commerce Commission in Re

INTERSTATE COMMERCE COMMISSION IN RE INVESTIGATION OF AM" ACCIDENT WHICH OCCURRED ON THE DENVER & INTERURBAN RAILROAD, NEAR GLOBEVILLE, COLO , ON SEPTEMBER 6, 1920 November 17, 1920 To the Commission On September G, 1920, there was a hoad-end collision between two passenger tiams on the Denvei & Intemiban Railioad neai Globe ville, Colo , which lesulted m the death of 11 passengeis and 2 em ployees, and the injury of 209 passengers and 5 employees This accident was investigated jointly with the Public Utilities Commis sion of Coloiado, and as a result of this investigation I lespectfully submit the following lepoit The Dem ei & Interurban Railioacl is a bianch of the Coloiado & Southern Railway, the rules of which govern Denver & Interurban trains Donvei & Intemiban tiains aie opeiated between Denvei and Bouldei, 31 miles noith of Denvei, over the Denvei Tiamway Co's tiacks between Denvei and Globeville and ovei Denvei & In temiban tiacks between Globeville and Denver & Inteiuiban Junc tion, at which point the line blanches, one line extending to Louis ville Junction and the othei to Webb Junction, and between these two junctions and Bouldei the trains of the Denvei & Inteiuiban j.»,ailroad opeiate over the tiacks of the Colorado & Southern Kail- way From Marshall, between Louisville Junction and Bouldei, a bianch line .extends to Eldorado Spimgs, this is also used jointly by the tiams of the two laihoads On this line Denvei & Intel urban employees aie ordinarily re lieved by Denver Tiamway employees at Globeville, the tiamway employees -

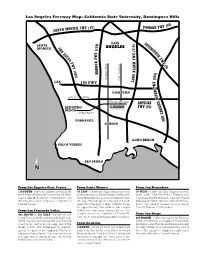

Freeway and Campus Combo

Los Angeles Freeway Map: California State University, Dominguez Hills 0) ) Y (1 ONICA FWY (10 POMONA FW SANTA M ) LOS I ) N 0 SANTA 0 T 1 E MONICA S ANGELES 1 R 1 A 7 ( S N ( T A Y D Y T I E E W G W F F O F W F R Y H W O C (5 Y B A ) R ( E 4 A B 0 ) H 5 G 5 ) 0 N 6 Avalon Blvd. ( Central Ave. O LAX L 105 FWY Y W W F F R R E COMPTON E V V I Artesia Blvd. I R R L Victoria Street L E ARTESIA E I I R REDONDO FWY (91) R B B A BEACH A G 190th Street G N N A A S TORRANCE S CARSON LONG BEACH PALOS VERDES SAN PEDRO N ➢ From Los Angeles Civic Center From Santa Monica From San Bernadino 110 SOUTH - Follow the Harbor Freeway (110) 10 EAST - Follow the Santa Monica Freeway 10 WEST - Follow the San Gabriel Freeway to the Artesia Freeway (91) east to Avalon Blvd. (10) east to the San Diego Freeway (405) south (605) south. Take the Artesia Freeway (91) Take Avalon Blvd. south to Victoria Street, turn toward Long Beach. Exit at the Vermont Avenue west toward Redondo Beach. Take the Central left. The entrance to campus is a right turn at off-ramp. Turn left (east) at the end of the off- Avenue exit and turn left; turn right onto Victoria Tamcliff Avenue. ramp onto 190th Street. -

From the 1832 Horse Pulled Tramway to 21Th Century Light Rail Transit/Light Metro Rail - a Short History of the Evolution in Pictures

From the 1832 Horse pulled Tramway to 21th Century Light Rail Transit/Light Metro Rail - a short History of the Evolution in Pictures By Dr. F.A. Wingler, September 2019 Animation of Light Rail Transit/ Light Metro Rail INTRODUCTION: Light Rail Transit (LRT) or Light Metro Rail (LMR) Systems operates with Light Rail Vehicles (LRV). Those Light Rail Vehicles run in urban region on Streets on reserved or unreserved rail tracks as City Trams, elevated as Right-of-Way Trams or Underground as Metros, and they can run also suburban and interurban on dedicated or reserved rail tracks or on main railway lines as Commuter Rail. The invest costs for LRT/LMR are less than for Metro Rail, the diversity is higher and the adjustment to local conditions and environment is less complicated. Whereas Metro Rail serves only certain corridors, LRT/LRM can be installed with dense and branched networks to serve wider areas. 1 In India the new buzzword for LRT/LMR is “METROLIGHT” or “METROLITE”. The Indian Central Government proposes to run light urban metro rail ‘Metrolight’ or Metrolite” for smaller towns of various states. These transits will operate in places, where the density of people is not so high and a lower ridership is expected. The Light Rail Vehicles will have three coaches, and the speed will be not much more than 25 kmph. The Metrolight will run along the ground as well as above on elevated structures. Metrolight will also work as a metro feeder system. Its cost is less compared to the metro rail installations. -

Trolleybuses: Applicability of UN Regulation No

Submitted by the expert from OICA Informal document GRSG-110-08-Rev.1 (110th GRSG, 26-29 April 2016, agenda item 2(a)) Trolleybuses: Applicability of UN Regulation No. 100 (Electric Power Train Vehicle) vs. UN Regulation No. 107 Annex 12 (Construction of M2/M3 Vehicles) for Electrical Safety 1. At 110th session of GRSG Belgium proposes to amend UN R107 annex 12 by deleting the requirements for trolleybuses (see GRSG/2016/05) and transfer the requirements into UN R100 (see GRSP/2016/07), which will be on the agenda of upcoming GRSP session in May 2016. 2. Due to the design of a trolleybus and stated in UN Regulation No. 107, trolleybuses are dual- mode vehicles. They can operate either: (a) in trolley mode, when connected to the overhead contact line (OCL), or (b) in bus mode when not connected to the OCL. When not connected to the OCL, they can also be (c) in charging mode, where they are stationary and plugged into the power grid for battery charging. 3. The basic principles of the design of the electric powertrain of the trolleybus and the connection to the OCL is based on international standards developed for trams and trains and is implemented and well accepted in the market worldwide. 4. Due to the fact that the trolleybus is used on public roads the trolleybus has to fulfil the regulations under the umbrella of the UNECE regulatory framework due to the existing national regulations (e.g. European frame work directive). 5. Therefore the annex 12 in UN R107 was amended to align the additional safety prescriptions for trolleybuses with the corresponding electrical standards. -

SAN GABRIEL VALLEY Sb 1 Funding Subregional Overview Reducing Safer Emissions Fact Sheet Roads

YOUR STATE TRANSPORTATION DOLLARS AT WORK IN SAN GABRIEL VALLEY sb 1 funding subregional overview Reducing Safer Emissions Fact Sheet Roads Filling More > More safety improvements and Potholes expanding bike and pedestrian networks along the Glendora Urban Train and Greenway, Pasadena, Alhambra, Baldwin Park and Rosemead Funding for cities and unincorporated areas to: > More electric buses and expanded > Repair potholes and sidewalks bus routes for Arcadia, Claremont and Foothill Transit LA COUNTY > Install upgraded traffic signals and pedestrian lights > More active transportation projects State Investment to keep schools and students safer > Repave local streets in the Pasadena Unified School > Improve pedestrian and bike District $1 BILLION safety, and upgrade bus shelters > New safety and highway improvements for the SR-57/-60 PER YEAR Confluence and the SR-71 gap conversion projects Smoother Commutes Stretching your Measure M > Fixing overpasses and roads on Dollars the SR-60 freight corridor for truck safety > Improving traffic flow on the I-210, > Extending the Metro Gold Line from I-10 and SR-134, and repaving and Azusa to Montclair in partnership re-striping highways with San Bernardino County > Critical safety-enhancing grade Transportation Authority separations in Montebello and > Building a 17.3 mile dedicated Bus Industry/Rowland Heights Rapid Transit route that creates a > Operational and station connection between San Fernando improvements on the Metrolink Valley and San Gabriel Valley commuter rail system > Implementing “complete streets” > Funding for the Freeway Service pedestrian, road and bike safety Patrol to relieve congestion on improvements along Temple Av highways between Walnut and Pomona SAN GABRIEL VALLEY SUBREGION The state is investing approximately $1 billion per year in transportation funding in LA County from the new gas taxes and fees authorized by Senate Bill 1 (SB 1). -

Los Angeles Transportation Transit History – South LA

Los Angeles Transportation Transit History – South LA Matthew Barrett Metro Transportation Research Library, Archive & Public Records - metro.net/library Transportation Research Library & Archive • Originally the library of the Los • Transportation research library for Angeles Railway (1895-1945), employees, consultants, students, and intended to serve as both academics, other government public outreach and an agencies and the general public. employee resource. • Partner of the National • Repository of federally funded Transportation Library, member of transportation research starting Transportation Knowledge in 1971. Networks, and affiliate of the National Academies’ Transportation • Began computer cataloging into Research Board (TRB). OCLC’s World Catalog using Library of Congress Subject • Largest transit operator-owned Headings and honoring library, forth largest transportation interlibrary loan requests from library collection after U.C. outside institutions in 1978. Berkeley, Northwestern University and the U.S. DOT’s Volpe Center. • Archive of Los Angeles transit history from 1873-present. • Member of Getty/USC’s L.A. as Subject forum. Accessing the Library • Online: metro.net/library – Library Catalog librarycat.metro.net – Daily aggregated transportation news headlines: headlines.metroprimaryresources.info – Highlights of current and historical documents in our collection: metroprimaryresources.info – Photos: flickr.com/metrolibraryarchive – Film/Video: youtube/metrolibrarian – Social Media: facebook, twitter, tumblr, google+, -

Googledrive: an Interface to Google Drive

Package ‘googledrive’ July 8, 2021 Title An Interface to Google Drive Version 2.0.0 Description Manage Google Drive files from R. License MIT + file LICENSE URL https://googledrive.tidyverse.org, https://github.com/tidyverse/googledrive BugReports https://github.com/tidyverse/googledrive/issues Depends R (>= 3.3) Imports cli (>= 3.0.0), gargle (>= 1.2.0), glue (>= 1.4.2), httr, jsonlite, lifecycle, magrittr, pillar, purrr (>= 0.2.3), rlang (>= 0.4.9), tibble (>= 2.0.0), utils, uuid, vctrs (>= 0.3.0), withr Suggests covr, curl, downlit, dplyr (>= 1.0.0), knitr, mockr, rmarkdown, roxygen2, sodium, spelling, testthat (>= 3.0.0) VignetteBuilder knitr Config/Needs/website pkgdown, tidyverse, r-lib/downlit, tidyverse/tidytemplate Config/testthat/edition 3 Encoding UTF-8 Language en-US RoxygenNote 7.1.1.9001 NeedsCompilation no Author Lucy D'Agostino McGowan [aut], Jennifer Bryan [aut, cre] (<https://orcid.org/0000-0002-6983-2759>), RStudio [cph, fnd] Maintainer Jennifer Bryan <[email protected]> Repository CRAN Date/Publication 2021-07-08 09:10:06 UTC 1 2 R topics documented: R topics documented: as_dribble . .3 as_id . .4 as_shared_drive . .5 dribble . .6 dribble-checks . .6 drive_about . .7 drive_auth . .8 drive_auth_configure . 11 drive_browse . 12 drive_cp . 13 drive_create . 15 drive_deauth . 17 drive_download . 18 drive_empty_trash . 20 drive_endpoints . 20 drive_examples . 21 drive_extension . 22 drive_fields . 23 drive_find . 24 drive_get . 27 drive_has_token . 30 drive_link . 30 drive_ls . 31 drive_mime_type . 32 drive_mkdir . 33 drive_mv . 35 drive_publish . 37 drive_put . 38 drive_read_string . 40 drive_rename . 41 drive_reveal . 42 drive_rm . 45 drive_share . 46 drive_token . 48 drive_trash . 49 drive_update . 50 drive_upload . 51 drive_user . 54 googledrive-configuration . 55 request_generate . 57 request_make . 58 shared_drives . -

The Neighborly Substation the Neighborly Substation Electricity, Zoning, and Urban Design

MANHATTAN INSTITUTE CENTER FORTHE RETHINKING DEVELOPMENT NEIGHBORLY SUBstATION Hope Cohen 2008 er B ecem D THE NEIGHBORLY SUBstATION THE NEIGHBORLY SUBstATION Electricity, Zoning, and Urban Design Hope Cohen Deputy Director Center for Rethinking Development Manhattan Institute In 1879, the remarkable thing about Edison’s new lightbulb was that it didn’t burst into flames as soon as it was lit. That disposed of the first key problem of the electrical age: how to confine and tame electricity to the point where it could be usefully integrated into offices, homes, and every corner of daily life. Edison then designed and built six twenty-seven-ton, hundred-kilowatt “Jumbo” Engine-Driven Dynamos, deployed them in lower Manhattan, and the rest is history. “We will make electric light so cheap,” Edison promised, “that only the rich will be able to burn candles.” There was more taming to come first, however. An electrical fire caused by faulty wiring seriously FOREWORD damaged the library at one of Edison’s early installations—J. P. Morgan’s Madison Avenue brownstone. Fast-forward to the massive blackout of August 2003. Batteries and standby generators kicked in to keep trading alive on the New York Stock Exchange and the NASDAQ. But the Amex failed to open—it had backup generators for the trading-floor computers but depended on Consolidated Edison to cool them, so that they wouldn’t melt into puddles of silicon. Banks kept their ATM-control computers running at their central offices, but most of the ATMs themselves went dead. Cell-phone service deteriorated fast, because soaring call volumes quickly drained the cell- tower backup batteries. -

Santa Monica Smiley Sand Tram

Santa Monica Smiley Sand Tram TRANSFORMING BEACH PARKING, TRANSPORTATION AND ACCESS Prepared by Santa Monica Pier Restoration Corporation, February 2006 Concept History In the fall of 2004 a long-term event was staged in the 1550 parking lot occupying over 70% of the lot. The event producer was required to implement shuttle service from the south beach parking lots up to the Pier. A local business man offered the use of an open air propane powered tram and on October 5, 2004 at 8pm, the tram was dropped off in the 2030 Barnard Way parking lot for an initial trial run. The tram was an instant hit. Tram use in Santa Monica is not a new concept. A Santa Monica ordinance adopted in 1971 allows for the operation of trams along Ocean Front Walk and electric trams were operated between Santa Monica and Venice throughout the 1920’s. Electric trams took passengers between the Venice and Santa Monica Piers. - 1920 In 2004, due to pedestrian traffic concerns, Ocean Front Walk was not utilized for the tram. Instead, the official route for the tram was established along the streets running one block east of the beach. This route required extensive traffic management and, while providing effective transportation, provided limited access to the world-class beach. At a late-night brainstorming session on how to improve the route SMPD Sergeant Greg Smiley suggested that the tram run on the sand. With the emerging vision of the “Beach Tram”, the Santa Monica Pier Restoration Corporation began the process of research, prototype design and consideration of the issues this concept would raise. -

The Urban Rail Development Handbook

DEVELOPMENT THE “ The Urban Rail Development Handbook offers both planners and political decision makers a comprehensive view of one of the largest, if not the largest, investment a city can undertake: an urban rail system. The handbook properly recognizes that urban rail is only one part of a hierarchically integrated transport system, and it provides practical guidance on how urban rail projects can be implemented and operated RAIL URBAN THE URBAN RAIL in a multimodal way that maximizes benefits far beyond mobility. The handbook is a must-read for any person involved in the planning and decision making for an urban rail line.” —Arturo Ardila-Gómez, Global Lead, Urban Mobility and Lead Transport Economist, World Bank DEVELOPMENT “ The Urban Rail Development Handbook tackles the social and technical challenges of planning, designing, financing, procuring, constructing, and operating rail projects in urban areas. It is a great complement HANDBOOK to more technical publications on rail technology, infrastructure, and project delivery. This handbook provides practical advice for delivering urban megaprojects, taking account of their social, institutional, and economic context.” —Martha Lawrence, Lead, Railway Community of Practice and Senior Railway Specialist, World Bank HANDBOOK “ Among the many options a city can consider to improve access to opportunities and mobility, urban rail stands out by its potential impact, as well as its high cost. Getting it right is a complex and multifaceted challenge that this handbook addresses beautifully through an in-depth and practical sharing of hard lessons learned in planning, implementing, and operating such urban rail lines, while ensuring their transformational role for urban development.” —Gerald Ollivier, Lead, Transit-Oriented Development Community of Practice, World Bank “ Public transport, as the backbone of mobility in cities, supports more inclusive communities, economic development, higher standards of living and health, and active lifestyles of inhabitants, while improving air quality and liveability.