5 Adversarial Search

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Minimax TD-Learning with Neural Nets in a Markov Game

Minimax TD-Learning with Neural Nets in a Markov Game Fredrik A. Dahl and Ole Martin Halck Norwegian Defence Research Establishment (FFI) P.O. Box 25, NO-2027 Kjeller, Norway {Fredrik-A.Dahl, Ole-Martin.Halck}@ffi.no Abstract. A minimax version of temporal difference learning (minimax TD- learning) is given, similar to minimax Q-learning. The algorithm is used to train a neural net to play Campaign, a two-player zero-sum game with imperfect information of the Markov game class. Two different evaluation criteria for evaluating game-playing agents are used, and their relation to game theory is shown. Also practical aspects of linear programming and fictitious play used for solving matrix games are discussed. 1 Introduction An important challenge to artificial intelligence (AI) in general, and machine learning in particular, is the development of agents that handle uncertainty in a rational way. This is particularly true when the uncertainty is connected with the behavior of other agents. Game theory is the branch of mathematics that deals with these problems, and indeed games have always been an important arena for testing and developing AI. However, almost all of this effort has gone into deterministic games like chess, go, othello and checkers. Although these are complex problem domains, uncertainty is not their major challenge. With the successful application of temporal difference learning, as defined by Sutton [1], to the dice game of backgammon by Tesauro [2], random games were included as a standard testing ground. But even backgammon features perfect information, which implies that both players always have the same information about the state of the game. -

Adversarial Search

Adversarial Search In which we examine the problems that arise when we try to plan ahead in a world where other agents are planning against us. Outline 1. Games 2. Optimal Decisions in Games 3. Alpha-Beta Pruning 4. Imperfect, Real-Time Decisions 5. Games that include an Element of Chance 6. State-of-the-Art Game Programs 7. Summary 2 Search Strategies for Games • Difference to general search problems deterministic random – Imperfect Information: opponent not deterministic perfect Checkers, Backgammon, – Time: approximate algorithms information Chess, Go Monopoly incomplete Bridge, Poker, ? information Scrabble • Early fundamental results – Algorithm for perfect game von Neumann (1944) • Our terminology: – Approximation through – deterministic, fully accessible evaluation information Zuse (1945), Shannon (1950) Games 3 Games as Search Problems • Justification: Games are • Games as playground for search problems with an serious research opponent • How can we determine the • Imperfection through actions best next step/action? of opponent: possible results... – Cutting branches („pruning“) • Games hard to solve; – Evaluation functions for exhaustive: approximation of utility – Average branching factor function chess: 35 – ≈ 50 steps per player ➞ 10154 nodes in search tree – But “Only” 1040 allowed positions Games 4 Search Problem • 2-player games • Search problem – Player MAX – Initial state – Player MIN • Board, positions, first player – MAX moves first; players – Successor function then take turns • Lists of (move,state)-pairs – Goal test -

Artificial Intelligence Spring 2019 Homework 2: Adversarial Search

Artificial Intelligence Spring 2019 Homework 2: Adversarial Search PROGRAMMING In this assignment, you will create an adversarial search agent to play the 2048-puzzle game. A demo of the game is available here: gabrielecirulli.github.io/2048. I. 2048 As A Two-Player Game II. Choosing a Search Algorithm: Expectiminimax III. Using The Skeleton Code IV. What You Need To Submit V. Important Information VI. Before You Submit I. 2048 As A Two-Player Game 2048 is played on a 4×4 grid with numbered tiles which can slide up, down, left, or right. This game can be modeled as a two player game, in which the computer AI generates a 2- or 4-tile placed randomly on the board, and the player then selects a direction to move the tiles. Note that the tiles move until they either (1) collide with another tile, or (2) collide with the edge of the grid. If two tiles of the same number collide in a move, they merge into a single tile valued at the sum of the two originals. The resulting tile cannot merge with another tile again in the same move. Usually, each role in a two-player games has a similar set of moves to choose from, and similar objectives (e.g. chess). In 2048 however, the player roles are inherently asymmetric, as the Computer AI places tiles and the Player moves them. Adversarial search can still be applied! Using your previous experience with objects, states, nodes, functions, and implicit or explicit search trees, along with our skeleton code, focus on optimizing your player algorithm to solve 2048 as efficiently and consistently as possible. -

4 Beyond Classical Search

BEYOND CLASSICAL 4 SEARCH In which we relax the simplifying assumptions of the previous chapter, thereby getting closer to the real world. Chapter 3 addressed a single category of problems: observable, deterministic, known envi- ronments where the solution is a sequence of actions. In this chapter, we look at what happens when these assumptions are relaxed. We begin with a fairly simple case: Sections 4.1 and 4.2 cover algorithms that perform purely local search in the state space, evaluating and modify- ing one or more current states rather than systematically exploring paths from an initial state. These algorithms are suitable for problems in which all that matters is the solution state, not the path cost to reach it. The family of local search algorithms includes methods inspired by statistical physics (simulated annealing)andevolutionarybiology(genetic algorithms). Then, in Sections 4.3–4.4, we examine what happens when we relax the assumptions of determinism and observability. The key idea is that if an agent cannot predict exactly what percept it will receive, then it will need to consider what to do under each contingency that its percepts may reveal. With partial observability, the agent will also need to keep track of the states it might be in. Finally, Section 4.5 investigates online search,inwhichtheagentisfacedwithastate space that is initially unknown and must be explored. 4.1 LOCAL SEARCH ALGORITHMS AND OPTIMIZATION PROBLEMS The search algorithms that we have seen so far are designed to explore search spaces sys- tematically. This systematicity is achieved by keeping one or more paths in memory and by recording which alternatives have been explored at each point along the path. -

Best-First and Depth-First Minimax Search in Practice

Best-First and Depth-First Minimax Search in Practice Aske Plaat, Erasmus University, [email protected] Jonathan Schaeffer, University of Alberta, [email protected] Wim Pijls, Erasmus University, [email protected] Arie de Bruin, Erasmus University, [email protected] Erasmus University, University of Alberta, Department of Computer Science, Department of Computing Science, Room H4-31, P.O. Box 1738, 615 General Services Building, 3000 DR Rotterdam, Edmonton, Alberta, The Netherlands Canada T6G 2H1 Abstract Most practitioners use a variant of the Alpha-Beta algorithm, a simple depth-®rst pro- cedure, for searching minimax trees. SSS*, with its best-®rst search strategy, reportedly offers the potential for more ef®cient search. However, the complex formulation of the al- gorithm and its alleged excessive memory requirements preclude its use in practice. For two decades, the search ef®ciency of ªsmartº best-®rst SSS* has cast doubt on the effectiveness of ªdumbº depth-®rst Alpha-Beta. This paper presents a simple framework for calling Alpha-Beta that allows us to create a variety of algorithms, including SSS* and DUAL*. In effect, we formulate a best-®rst algorithm using depth-®rst search. Expressed in this framework SSS* is just a special case of Alpha-Beta, solving all of the perceived drawbacks of the algorithm. In practice, Alpha-Beta variants typically evaluate less nodes than SSS*. A new instance of this framework, MTD(ƒ), out-performs SSS* and NegaScout, the Alpha-Beta variant of choice by practitioners. 1 Introduction Game playing is one of the classic problems of arti®cial intelligence. -

A. Local Beam Search with K=1. A. Local Beam Search with K = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases: a. Local beam search with k=1. a. Local beam search with k = 1 is hill-climbing search. b. Local beam search with one initial state and no limit on the number of states retained. b. Local beam search with k = ∞: strictly speaking, this doesn’t make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once. c. Simulated annealing with T=0 at all times (and omitting the termination test). c. Simulated annealing with T = 0 at all times: ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1. d. Simulated annealing with T=infinity at all times. d. Simulated annealing with T = infinity at all times: ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search. e. Genetic algorithm with population size N=1. e. Genetic algorithm with population size N = 1: if the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation. -

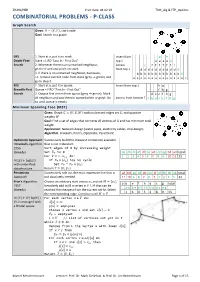

COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: 퐺 = (푉, 퐸), Start Node Goal: Search in a Graph

ZHAW/HSR Print date: 04.02.19 TSM_Alg & FTP_Optimiz COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: 퐺 = (푉, 퐸), start node Goal: Search in a graph DFS 1. Start at a, put it on stack. Insert from g h Depth-First- Stack = LIFO "Last In - First Out" top ↓ e e e e e Search 2. Whenever there is an unmarked neighbour, Access c f f f f f f f go there and and put it on stack from top ↓ d d d d d d d d d d d 3. If there is no unmarked neighbour, backtrack; b b b b b b b b b b b b b i.e. remove current node from stack (grey ⇒ green) and a a a a a a a a a a a a a a a go to step 2. BFS 1. Start at a, put it in queue. Insert from top ↓ h g Breadth-First Queue = FIFO "First In - First Out" f h g Search 2. Output first vertex from queue (grey ⇒ green). Mark d e c f h g all neighbors and put them in queue (white ⇒ grey). Do Access from bottom ↑ a b d e c f h g so until queue is empty Minimum Spanning Tree (MST) Given: Graph 퐺 = (푉, 퐸, 푊) with undirected edges set 퐸, with positive weights 푊 Goal: Find a set of edges that connects all vertices of G and has minimum total weight. Application: Network design (water pipes, electricity cables, chip design) Algorithm: Kruskal's, Prim's, Optimistic, Pessimistic Optimistic Approach Successively build the cheapest connection available =Kruskal's algorithm that is not redundant. -

6 Adversarial Search

6 ADVERSARIAL SEARCH In which we examine the problems that arise when we try to plan ahead in a world where other agents are planning against us. 6.1 GAMES Chapter 2 introduced multiagent environments, in which any given agent will need to con- sider the actions of other agents and how they affect its own welfare. The unpredictability of these other agents can introduce many possible contingencies into the agent’s problem- solving process, as discussed in Chapter 3. The distinction between cooperative and compet- itive multiagent environments was also introduced in Chapter 2. Competitive environments, in which the agents’ goals are in conflict, give rise to adversarial search problems—often GAMES known as games. Mathematical game theory, a branch of economics, views any multiagent environment as a game provided that the impact of each agent on the others is “significant,” regardless of whether the agents are cooperative or competitive.1 In AI, “games” are usually of a rather specialized kind—what game theorists call deterministic, turn-taking, two-player, zero-sum ZEROSUM GAMES games of perfect information. In our terminology, this means deterministic, fully observable PERFECT INFORMATION environments in which there are two agents whose actions must alternate and in which the utility values at the end of the game are always equal and opposite. For example, if one player wins a game of chess (+1), the other player necessarily loses (–1). It is this opposition between the agents’ utility functions that makes the situation adversarial. We will consider multiplayer games, non-zero-sum games, and stochastic games briefly in this chapter, but will delay discussion of game theory proper until Chapter 17. -

A System for Bidirectional Robotic Pathfinding

Portland State University PDXScholar Computer Science Faculty Publications and Presentations Computer Science 11-2012 A System for Bidirectional Robotic Pathfinding Tesca Fitzgerald Portland State University, [email protected] Follow this and additional works at: https://pdxscholar.library.pdx.edu/compsci_fac Part of the Artificial Intelligence and Robotics Commons Let us know how access to this document benefits ou.y Citation Details Fitzgerald, Tesca, "A System for Bidirectional Robotic Pathfinding" (2012). Computer Science Faculty Publications and Presentations. 226. https://pdxscholar.library.pdx.edu/compsci_fac/226 This Technical Report is brought to you for free and open access. It has been accepted for inclusion in Computer Science Faculty Publications and Presentations by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. A System for Bidirectional Robotic Pathfinding Tesca K. Fitzgerald Department of Computer Science, Portland State University PO Box 751 Portland, OR 97207 USA [email protected] TR 12-02 November 2012 Abstract The accuracy of an autonomous robot's intended navigation can be impacted by environmental factors affecting the robot's movement. When sophisticated localizing sensors cannot be used, it is important for a pathfinding algorithm to provide opportunities for landmark usage during route execution, while balancing the efficiency of that path. Although current pathfinding algorithms may be applicable, they often disfavor paths that balance accuracy and efficiency needs. I propose a bidirectional pathfinding algorithm to meet the accuracy and efficiency needs of autonomous, navigating robots. 1. Introduction While a multitude of pathfinding algorithms navigation without expensive sensors. As low- exist, pathfinding algorithms tailored for the cost navigating robots need to account for robotics domain are less common. -

English for It Students

УКРАЇНА НАЦІОНАЛЬНИЙ УНІВЕРСИТЕТ БІОРЕСУРСІВ І ПРИРОДОКОРИСТУВАННЯ УКРАЇНИ Кафедра англійської філології Ямнич Н. Ю., Данькевич Л. Р. ENGLISH FOR IT STUDENTS 1 УДК: 811.111(072) Навчальний посібник з англійської мови розрахований на студентів вищих навчальних закладів зі спеціальностей «Комп”ютерні науки» та «Програмна інженерія». Мета видання – сприяти розвитку і вдосконаленню у студентів комунікативних навичок з фаху, навичок читання та письма і закріплення навичок з граматики, а також активізувати навички автономного навчання. Посібник охоплює теми актуальні у сучасному інформаційному середовищі, що подаються на основі автентичних професійно спрямованих текстів, метою яких є розвиток у студентів мовленнєвої фахової компетенції, що сприятиме розвитку логічного мислення. Добір навчального матеріалу відповідає вимогам навчальної програми з англійської мови. Укладачі: Л.Р. Данькевич, Н.Ю. Ямнич, Рецензенти: В. В. Коломійцева, к. філол. н., доцент кафедри сучасної української мови інституту філології Київського національного університету імені Тараса Шевченка В.І. Ковальчук, д. пед. наук, професор,завідувач кафедри методики навчання та управління навчальними закладами НУБіП України Кравченко Н. К., д. філ. наук, професор кафедри англійської філології і філософії мови ім. професора О. М. Мороховського, КНЛУ Навчальний посібник з англійської мови для студентів факультету інформаційних технологій. – К.: «Компринт», 2017. – 608 с. ISBN Видання здійснено за авторським редагуванням Відповідальний за випуск: Н.Ю.Ямнич ISBN © Н. Ю. Ямнич, Л.Д. Данькевич, 2017 2 CONTENTS Unit 1 Higher Education 5 Language practice. Overview of verb tenses 18 Unit 2 Jobs and careers 25 Language practice. Basic sentence structures 89 Unit 3 Tied to technology 103 Language practice. Modal verbs 115 Unit 4 Computers 135 Language practice. Passive 153 Unit 5 Communication. E –commerce 167 Language practice. -

"Large Scale Monte Carlo Tree Search on GPU"

Large Scale Monte Carlo Tree Search on GPU GPU+HK大ávモンテカルロ木探索 Doctoral Dissertation ¿7論£ Kamil Marek Rocki ロツキ カミル >L#ク Submitted to Department of Computer Science, Graduate School of Information Science and Technology, The University of Tokyo in partial fulfillment of the requirements for the degree of Doctor of Information Science and Technology Thesis Supervisor: Reiji Suda (½田L¯) Professor of Computer Science August, 2011 Abstract: Monte Carlo Tree Search (MCTS) is a method for making optimal decisions in artificial intelligence (AI) problems, typically for move planning in combinatorial games. It combines the generality of random simulation with the precision of tree search. Research interest in MCTS has risen sharply due to its spectacular success with computer Go and its potential application to a number of other difficult problems. Its application extends beyond games, and MCTS can theoretically be applied to any domain that can be described in terms of (state, action) pairs, as well as it can be used to simulate forecast outcomes such as decision support, control, delayed reward problems or complex optimization. The main advantages of the MCTS algorithm consist in the fact that, on one hand, it does not require any strategic or tactical knowledge about the given domain to make reasonable decisions, on the other hand algorithm can be halted at any time to return the current best estimate. So far, current research has shown that the algorithm can be parallelized on multiple CPUs. The motivation behind this work was caused by the emerging GPU- based systems and their high computational potential combined with the relatively low power usage compared to CPUs. -

Biography of N. N. Luzin

BIOGRAPHY OF N. N. LUZIN http://theor.jinr.ru/~kuzemsky/Luzinbio.html BIOGRAPHY OF N. N. LUZIN (1883-1950) born December 09, 1883, Irkutsk, Russia. died January 28, 1950, Moscow, Russia. Biographic Data of N. N. Luzin: Nikolai Nikolaevich Luzin (also spelled Lusin; Russian: НиколайНиколаевич Лузин) was a Soviet/Russian mathematician known for his work in descriptive set theory and aspects of mathematical analysis with strong connections to point-set topology. He was the co-founder of "Luzitania" (together with professor Dimitrii Egorov), a close group of young Moscow mathematicians of the first half of the 1920s. This group consisted of the higly talented and enthusiastic members which form later the core of the famous Moscow school of mathematics. They adopted his set-theoretic orientation, and went on to apply it in other areas of mathematics. Luzin started studying mathematics in 1901 at Moscow University, where his advisor was professor Dimitrii Egorov (1869-1931). Professor Dimitrii Fedorovihch Egorov was a great scientist and talented teacher; in addition he was a person of very high moral principles. He was a Russian and Soviet mathematician known for significant contributions to the areas of differential geometry and mathematical analysis. Egorov was devoted and openly practicized member of Russian Orthodox Church and active parish worker. This activity was the reason of his conflicts with Soviet authorities after 1917 and finally led him to arrest and exile to Kazan (1930) where he died from heavy cancer. From 1910 to 1914 Luzin studied at Gottingen, where he was influenced by Edmund Landau. He then returned to Moscow and received his Ph.D.