Algol Programming

Recommended publications

A Politico-Social History of ALGOL (With a Chronology in the Form of a Log Book)

A Politico-Social History of ALGOL (With a Chronology in the Form of a Log Book) R. W. BEMER Introduction This is an admittedly fragmentary chronicle of events in the development of the algorithmic language ALGOL. Nevertheless, it seems pertinent, while we await the advent of a technical and conceptual history, to outline the matrix of forces which shaped that history in a political and social sense. Perhaps the author's role is only that of recorder of visible events, rather than the complex interplay of ideas which have made ALGOL the force it is in the computational world. It is true, as Professor Ershov stated in his review of a draft of the present work, that "the reading of this history, rich in curious details, nevertheless does not enable the beginner to understand why ALGOL, with a history that would seem more disappointing than triumphant, changed the face of current programming". I can only state that the time scale and my own lesser competence do not allow the tracing of conceptual development in requisite detail. Books are sure to follow in this area, particularly one by Knuth. A further defect in the present work is the relatively lesser availability of European input to the log, although I could claim better access than many in the U.S.A. This is regrettable in view of the relatively stronger support given to ALGOL in Europe. Perhaps this calmer acceptance had the effect of reducing the number of significant entries for a log such as this. Following a brief view of the pattern of events come the entries of the chronology, or log, numbered for reference in the text.

Technical Details of the Elliott 152 and 153

Appendix 1 Technical Details of the Elliott 152 and 153 Introduction The Elliott 152 computer was part of the Admiralty’s MRS5 (medium range system 5) naval gunnery project, described in Chap. 2. The Elliott 153 computer, also known as the D/F (direction-finding) computer, was built for GCHQ and the Admiralty as described in Chap. 3. The information in this appendix is intended to supplement the overall descriptions of the machines as given in Chaps. 2 and 3. A1.1 The Elliott 152 Work on the MRS5 contract at Borehamwood began in October 1946 and was essentially finished in 1950. Novel target-tracking radar was at the heart of the project, the radar being synchronized to the computer’s clock. In his enthusiasm for perfecting the radar technology, John Coales seems to have spent little time on what we would now call an overall systems design. When Harry Carpenter joined the staff of the Computing Division at Borehamwood on 1 January 1949, he recalls that nobody had yet defined the way in which the control program, running on the 152 computer, would interface with guns and radar. Furthermore, nobody yet appeared to be working on the computational algorithms necessary for three-dimensional trajectory prediction. As for the guns that the MRS5 system was intended to control, not even the basic ballistics parameters seemed to be known with any accuracy at Borehamwood [1, 2]. A1.1.1 Communication and Data-Rate The physical separation, between radar in the Borehamwood car park and digital computer in the laboratory, necessitated an interconnecting cable of about 150 m in length.

Microbenchmarks in Big Data

Microbenchmark. Nicolas Poggi, Databricks Inc., Amsterdam, NL, BarcelonaTech (UPC), Barcelona, Spain.

Synonyms: Component benchmark; Functional benchmark; Test.

Definition: A microbenchmark is either a program or routine to measure and test the performance of a single component or task. Microbenchmarks are used to measure simple and well-defined quantities such as elapsed time, rate of operations, bandwidth, or latency. Typically, microbenchmarks were associated with the testing of individual software subroutines or lower-level hardware components such as the CPU and for a short period of time. However, in the BigData scope, the term microbenchmarking is broadened to include the cluster (a group of networked computers) acting as a single system, as well as the testing of frameworks, algorithms, logical and distributed components, for a longer period and larger data sizes.

Overview: Microbenchmarks constitute the first line of performance testing. Through them, we can ensure the proper and timely functioning of the different individual components that make up our system. The term micro, of course, depends on the problem size. In BigData we broaden the concept to cover the testing of large-scale distributed systems and computing frameworks. This chapter presents the historical background, evolution, central ideas, and current key applications of the field concerning BigData.

Historical Background. Origins and CPU-Oriented Benchmarks: Microbenchmarks are closer to both hardware and software testing than to competitive benchmarking, as opposed to application-level (macro) benchmarking. For this reason, we can trace microbenchmarking influence to the hardware testing discipline as can be found in Sumne (1974). Furthermore, we can also find influence in the origins of software testing methodology during the 1970s, including works such as Chow (1978). One of the first examples of a microbenchmark clearly distinguishable from software testing is the Whetstone benchmark developed during the late 1960s and published
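As a minimal sketch of the definition in the excerpt above, the following Python snippet times a single small routine and reports two of the quantities it names: elapsed time and rate of operations. The names `microbenchmark` and `sum_small_list`, and the repeat and iteration counts, are hypothetical choices for illustration and do not come from the excerpted chapter.

```python
import time


def microbenchmark(func, repeats=5, iterations=10_000):
    """Time `func` and return (best elapsed seconds, operations per second).

    The best of several repeats is kept to reduce noise from other
    activity on the machine (an assumption of this sketch, not a rule).
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        for _ in range(iterations):
            func()
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)
    return best, iterations / best


def sum_small_list():
    # Hypothetical "single component" under test.
    return sum(range(100))


elapsed, rate = microbenchmark(sum_small_list)
print(f"best elapsed: {elapsed:.6f} s, rate: {rate:,.0f} ops/s")
```

The measured values depend on the machine, so no expected output is shown. For serious measurements Python's standard `timeit` module is usually a better starting point than a hand-written loop.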

Internet Engineering Jan Nikodem, Ph.D. Software Engineering

Internet Engineering Jan Nikodem, Ph.D. Software Engineering: The engineering paradigm. Software Engineering, Lecture 3. The term "software crisis" was coined at the first NATO Software Engineering Conference in 1968 by Friedrich L. Bauer (born 1924; German; mathematician, theoretical physicist; Technical University of Munich) and Peter Naur (born 1928; Danish; astronomer; Regnecentralen, Niels Bohr Institute, Technical University of Denmark, University of Copenhagen). What should be our response to a software crisis in which software is delivered with too little quality, too late, and over budget? Software should follow an engineering paradigm (NATO conference in Garmisch-Partenkirchen, 1968). "The hope is that the progress in hardware will cure all software ills. However, a critical observer may note that software manages to outgrow hardware in size and sluggishness." (The Oberon System: User Guide and Programmer's Manual, ACM Press. Martin Reiser: Swiss; electrical engineer, computer scientist; ETH Zürich, IBM Zürich Research Laboratory, Institute for Media Communications.) Wirth's computing adage: Software is getting slower more rapidly than hardware becomes faster. (Swiss; electronic engineer, computer scientist; ETH Zürich, University of California, Berkeley, Stanford University, University of Zurich, Xerox PARC.)

Overview of the SPEC Benchmarks

Chapter 9: Overview of the SPEC Benchmarks. Kaivalya M. Dixit, IBM Corporation. “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard, MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 9.1 Historical Perspective Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes.

i.MX 8QuadXPlus Power and Performance

NXP Semiconductors, Document Number: AN12338, Application Note, Rev. 4, 04/2020. i.MX 8QuadXPlus Power and Performance.

1. Introduction: This application note helps you to design power management systems. It illustrates the current drain measurements of the i.MX 8QuadXPlus Applications Processors taken on NXP Multisensory Evaluation Kit (MEK) Platform through several use cases. This document provides details on the performance and power consumption of the i.MX 8QuadXPlus processors under a variety of low- and high-power modes. The data presented in this application note is based on

Contents: 1. Introduction; 2. Overview of i.MX 8QuadXPlus voltage supplies; 3. Power measurement of the i.MX 8QuadXPlus processor; 3.1. VCC_SCU_1V8 power; 3.2. VCC_DDRIO power; 3.3. VCC_CPU/VCC_GPU/VCC_MAIN power; 3.4. Temperature measurements; 3.5. Hardware and software used; 3.6. Measuring points on the MEK platform; 4. Use cases and measurement results; 4.1. Low-power mode power consumption (Key States or ‘KS’); 4.2. Complex use case power consumption (Arm Core, GPU active); 5. SOC

Assessment of Virtual Machines Performance (Srovnání kvality virtuálních strojů)

VŠB - Technical University of Ostrava, Faculty of Electrical Engineering and Computer Science, Department of Computer Science. Srovnání kvality virtuálních strojů (Assessment of Virtual Machines Performance). 2011, Lenka Novotná. I declare that I wrote this bachelor's thesis independently. I have listed all the literary sources and publications from which I drew. Ostrava, 20 April 2011, Lenka Novotná. I would like to thank the supervisor of this bachelor's thesis, Ing. Petr Olivka, for his help, factual comments, and all the time he devoted to me. Abstract: The main goal of this bachelor's thesis is to explain the terms virtualization and virtual machine, and to describe and compare the available virtualization tools used in the information technology field worldwide. The thesis deals primarily with comparing the performance of virtual machines for the desktop operating systems MS Windows and Linux. In testing I focused on three main factors: network throughput, for which I used the Iperf application; the performance of disk operations, which I measured with the IOZone program; and the allocation of CPU to processes, for which I used the well-known test applications Dhrystone and Whetstone. All of these measurements were carried out on three virtualization platforms: VirtualBox OSE, VMware Player, and KVM with QEMU. Keywords: virtualization, virtual machine, VirtualBox, VMware, KVM, QEMU, full virtualization, paravirtualization, partial virtualization, hardware-assisted virtualization, operating-system-level virtualization, CPU performance measurement, network throughput measurement, disk operation measurement, Dhrystone, Whetstone, Iperf, IOZone.

Boolean Matrix in the Matrix Equation (19)

Technical Report 69-86, March 1969. Design Automation by the Computer Design Language, by Yaohan Chu, Professor. The computer time used was supported by the National Aeronautics and Space Administration under Grant NsG-398 to the Computer Science Center of the University of Maryland. Abstract: A Computer Design Language (CDL) has been developed for facilitating design automation of digital computers. When the functional organization and sequential operation of a digital computer are conceived and specified by the CDL, this CDL description is called a macro design. The macro design is highly descriptive in computer elements. It describes precisely and concisely what the computer is expected to do functionally step by step.

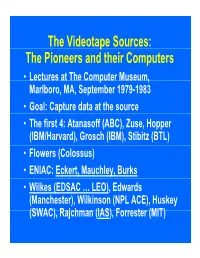

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers
• Lectures at The Computer Museum, Marlboro, MA, September 1979-1983
• Goal: Capture data at the source
• The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL)
• Flowers (Colossus)
• ENIAC: Eckert, Mauchly, Burks
• Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT)

What did it feel like then?
• What were the computers?
• Why did their inventors build them?
• What materials (technology) did they build from?
• What were their speed and memory size specs?
• How did they work?
• How were they used or programmed?
• What were they used for?
• What did each contribute to future computing?
• What were the by-products? and alumni/ae?

The “classic” five boxes of a stored program digital computer: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA)

How was programming done before programming languages and O/Ss?
• ENIAC was programmed by routing control pulse cables forming the “program counter”
• Clippinger and von Neumann made “function codes” for the tables of ENIAC
• Kilburn at Manchester ran the first 17 word program
• Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series
• Parallel versus Serial
• Pre-programming languages and operating systems
• Big idea: compatibility for program investment
  – EDSAC was transferred to Leo
  – The IAS Computers built at Universities

[Figure: Time Line of First Computers, 1935 to 1955. Rows: BTL; Zuse; Atanasoff; IBM ASCC, SSEC (leading to CPC); ENIAC; EDVAC; UNIVAC I; IAS; Colossus; Manchester (leading to Ferranti); EDSAC (leading to Leo); ACE (leading to DEUCE); Whirlwind; SEAC & SWAC]

ENIAC Project Time Line & Descendants: IBM 701, Philco S2000, ERA..

Niels Ivar Bech

Niels Ivar Bech Born 1920 Lemvig, Denmark; died 1975; originator of Danish computer development. Niels Ivar Bech was one of Europe's most creative leaders in the field of electronic digital computers. He originated Danish computer development under the auspices of the Danish Academy of Technical Sciences and was first managing director of its subsidiary, Regnecentralen, which was Denmark's (and one of Europe's) first independent designer and builder of electronic computers. Bech was born in 1920 in Lemvig, a small town in the northwestern corner of Jutland, Denmark; his schooling ended with his graduation from Gentofte High School (Statsskole) in 1940. Because he had no further formal education, he was not held in as high esteem as he deserved by some less gifted people who had degrees or were university professors. During the war years, Bech was a teacher. When Denmark was occupied by the Nazis, he became a runner for the distribution of illegal underground newspapers, and on occasion served on the crews of the small boats that perilously smuggled Danish Jews across the Kattegat to Sweden. After the war, from 1949 to 1957, he worked as a calculator in the Actuarial Department of the Copenhagen Telephone Company (Københavns Telefon Aktieselskab, KTA). The Danish Academy of Technical Sciences established a committee on electronic computing in 1947, and in 1952 the academy obtained free access to the complete design of the computer BESK (Binär Elektronisk Sekvens Kalkylator) being built in Stockholm by the Swedish Mathematical Center (Matematikmaskinnämndens Arbetsgrupp). In 1953 the Danish academy founded a nonprofit computer subsidiary, Regnecentralen.

New Contracts

Where were you when the telephone rang? Chances are you weren't anywhere yet. For it was March 10, 1876 when Alexander Graham Bell made that first historic telephone call to Mr. Watson ... and another significant milestone in man's attempt to better himself and his environment had been passed. Now you and your family can enjoy, know better, and relive hundreds of rich and exciting days just like this when you enroll as a Founding Associate of THE NATIONAL HISTORICAL SOCIETY.

If you would relish the experience of being a spectator at the great moments in America's past, from yesterday back; if you would like unique tailor-made vacations to spots where America's heritage is always just around the corner; if you would have a place for authentic, unbelievably priced antique reproductions; and the opportunity to always renew your association with the Society at the same low dues cost; take this opportunity now, before the rolls close, to become a Founding Associate. You'll be pleased and proud, too, to frame and display the handsome Certificate, personally inscribed for you, as a permanent symbol

... fascinating West, anywhere Americans can trace roots or have left a mark for all time. Three-day seminars featuring presentations by some of the country's most outstanding historians ... and held at actual historical settings, providing both eye-and-ear witness to the way it actually happened. The opportunity to buy, at discounts up to 25%, the latest and best in new books on history, without the usual commitment of belonging to a book club.

A Historical Perspective of the Development of British Computer Manufacturers with Particular Reference to Staffordshire

A historical perspective of the development of British computer manufacturers with particular reference to Staffordshire. John Wilcock, School of Computing, Staffordshire University. Abstract: Beginning in the early years of the 20th Century, the report summarises the activities within the English Electric and ICT groups of computer manufacturers, and their constituent groups and successor companies, culminating with the formation of ICL and its successors STC-ICL and Fujitsu-ICL. Particular reference is made to developments within the county of Staffordshire, and to the influence which these companies have had on the teaching of computing at Staffordshire University and its predecessors. 1. After the Second World War. In Britain several computer research teams were formed in the late 1940s, which concentrated on computer storage techniques. At the University of Manchester, Williams and Kilburn developed the “Williams Tube Store”, which stored binary numbers as electrostatic charges on the inside face of a cathode ray tube. The Manchester University Mark I, Mark II (Mercury 1954) and Atlas (1960) computers were all built and marketed by Ferranti at West Gorton. At Birkbeck College, University of London, an early form of magnetic storage, the “Birkbeck Drum” was constructed. The pedigree of the computers constructed in Staffordshire begins with the story of the EDSAC first generation machine. At the University of Cambridge binary pulses were stored by ultrasound in 2m long columns of mercury, known as tanks, each tank storing 16 words of 35 bits, taking typically 32ms to circulate. Programmers needed to know not only where their data were stored, but when they were available at the top of the delay lines.
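The timing constraint described at the end of the excerpt above can be illustrated with a small calculation. The sketch below uses only the two figures quoted in the excerpt (16 words per tank, about 32 ms per circulation). Everything else is a simplifying assumption made for illustration: words are taken to emerge in index order at a uniform rate, word 0 is taken to emerge at time 0, and the function name `wait_for_word` is hypothetical.

```python
TANK_WORDS = 16          # words per tank, as quoted in the excerpt
CIRCULATION_MS = 32.0    # typical circulation time, as quoted in the excerpt
WORD_TIME_MS = CIRCULATION_MS / TANK_WORDS  # time for one word to pass


def wait_for_word(word_index, now_ms):
    """Milliseconds until `word_index` next starts to emerge from the tank.

    Assumes (for illustration only) that word 0 emerges at t = 0 and that
    words follow one another in index order at a uniform rate.
    """
    phase = now_ms % CIRCULATION_MS        # position within the current cycle
    target = word_index * WORD_TIME_MS     # when the word emerges in a cycle
    return (target - phase) % CIRCULATION_MS


print(WORD_TIME_MS)             # 2.0
print(wait_for_word(3, 0.0))    # 6.0
print(wait_for_word(3, 7.0))    # 31.0
```

Under these assumptions, asking for word 3 just after it has passed costs almost a full circulation, which is why the placement of data in time mattered as much as its address.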