Causal v. Positivist Theories of Scientific Explanation: A Defense of the Causal Theory

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Theory and Reality

Theory and Reality: An Introduction to the Philosophy of Science. Peter Godfrey-Smith. Science and Its Conceptual Foundations, a series edited by David L. Hull. The University of Chicago Press / Chicago and London. Peter Godfrey-Smith is associate professor of philosophy and of history and philosophy of science at Stanford University. He is the author of Complexity and the Function of Mind in Nature. The University of Chicago Press, Chicago 60637. The University of Chicago Press, Ltd., London. © 2003 by The University of Chicago. All rights reserved. Published 2003. Printed in the United States of America. 12 11 10 09 08 4 5. ISBN: 0-226-30062-5 (cloth) ISBN: 0-226-30063-3 (paper). Library of Congress Cataloging-in-Publication Data: Godfrey-Smith, Peter. Theory and reality: an introduction to the philosophy of science / Peter Godfrey-Smith. p. cm. - (Science and its conceptual foundations). Includes bibliographical references and index. ISBN 0-226-30062-5 (alk. paper) - ISBN 0-226-30063-3 (pbk. : alk. paper). 1. Science-Philosophy. I. Title. II. Series. Q175 .G596 2003 501-dc21 2002155305. The paper used in this publication meets the minimum requirements of the American National Standard for Information Sciences-Permanence of Paper for Printed Library Materials, ANSI Z39.48-1992. [p. 56, Chapter Three] Later (especially in chapter 14) I will return to these problems. But in the next chapter we will look at a philosophy that gets a good part of its motivation from the frustrations discussed in this chapter. Further Reading: Once again, Hempel's Aspects of Scientific Explanation (1965) is a key source, containing a long (and exhausting) chapter on confirmation. 4. Popper: Conjecture and Refutation -

Positivism and the 'New Archaeology'

2 Positivism and the 'new archaeology' 'Recipes for the Good Society used to run, in caricature, something like this - (1) Take about 2,000 hom. sap., dissect each into essence and accidents and discard the accidents. (2) Place essences in a large casserole, add socialising syrup and stew until conflict disappears. (3) Serve with a pinch of salt.' (Hollis 1977, p. 1) 'The wish to establish a natural science of society ... probably remains, in the English speaking world at least, the dominant standpoint today ... But those who still wait for a Newton are not only waiting for a train that won't arrive, they're in the wrong station altogether.' (Giddens 1976, p. 13) Introduction How should archaeologists come to have knowledge of the past? What does this knowledge involve? What constitutes an explanation of what archaeologists find? This chapter considers the answer to these questions accepted by the 'new' archaeology; it considers epistemological issues raised by a study of the past in the archaeological literature postdating 1960. New archaeology has embraced explicitly and implicitly a positivist model of how to explain the past and we examine the treatment of the social world as an extension of the natural, the reduction of practice to behaviour, the separation of 'reality', the facts, from concepts and theories. We criticize testing, validation and the refutation of theory as a way of connecting theory and the facts, emphasizing all observation as theory-laden. The new archaeology polemically opposed itself to traditional 'normative' archaeology as a social science and we begin the chapter with a consideration of this change and why it took place. -

Some Thoughts About Natural Law

Some Thoughts About Natural Law Phillip E. Johnson My first task is to define the subject. When I use the term "natural" law, I am distinguishing the category from other kinds of law such as positive law, divine law, or scientific law. When we discuss positive law, we look to materials like legislation, judicial opinions, and scholarly analysis of these materials. If we speak of divine law, we ask if there are any knowable commands from God. If we look for scientific law, we conduct experiments, or make observations and calculations, in order to come to objectively verifiable knowledge about the material world. Natural law, as I will be using the term in this essay, refers to a method that we employ to judge what the principles of individual morality or positive law ought to be. The natural law philosopher aspires to make these judgments on the basis of reason and human nature without invoking divine revelation or prophetic inspiration. Natural law so defined is a category much broader than any particular theory of natural law. One can believe in the existence of natural law without agreeing with the particular systems of natural law advocates like Aristotle or Aquinas. I am describing a way of thinking, not a particular theory. In the broad sense in which I am using the term, therefore, anyone who attempts to found concepts of justice upon reason and human nature engages in natural law philosophy. Contemporary philosophical systems based on feminism, wealth maximization, neutral conversation, liberal equality, or libertarianism are natural law philosophies. -

Scientific Explanation

Scientific Explanation Michael Strevens For the Macmillan Encyclopedia of Philosophy, second edition The three cardinal aims of science are prediction, control, and explanation; but the greatest of these is explanation. Also the most inscrutable: prediction aims at truth, and control at happiness, and insofar as we have some independent grasp of these notions, we can evaluate science’s strategies of prediction and control from the outside. Explanation, by contrast, aims at scientific understanding, a good intrinsic to science and therefore something that it seems we can only look to science itself to explicate. Philosophers have wondered whether science might be better off abandoning the pursuit of explanation. Duhem (1954), among others, argued that explanatory knowledge would have to be a kind of knowledge so exalted as to be forever beyond the reach of ordinary scientific inquiry: it would have to be knowledge of the essential natures of things, something that neo-Kantians, empiricists, and practical men of science could all agree was neither possible nor perhaps even desirable. Everything changed when Hempel formulated his DN account of explanation. In accordance with the observation above, that our only clue to the nature of explanatory knowledge is science’s own explanatory practice, Hempel proposed simply to describe what kind of things scientists tendered when they claimed to have an explanation, without asking whether such things were capable of providing “true understanding”. Since Hempel, the philosophy of scientific explanation has proceeded in this humble vein, seeming more like a sociology of scientific practice than an inquiry into a set of transcendent norms. -

Meta-Metaphysics on Metaphysical Equivalence, Primitiveness, and Theory Choice

J. Benovsky. Meta-metaphysics: On Metaphysical Equivalence, Primitiveness, and Theory Choice. Series: Synthese Library, Vol. 374. 1st ed. 2016, XI, 135 p. 20 illus. Printed book, hardcover, ISBN 978-3-319-25332-9. ▶ 89,99 € | £79.99 ▶ *96,29 € (D) | 98,99 € (A) | CHF 106.50. ▶ Contains original research in analytic metaphysics and meta-metaphysics ▶ Offers a detailed and innovative discussion of metaphysical equivalence ▶ Explores the role of aesthetic properties of metaphysical theories from an original angle. Metaphysical theories are beautiful. At the end of this book, Jiri Benovsky defends the view that metaphysical theories possess aesthetic properties and that these play a crucial role when it comes to theory evaluation and theory choice. Before we get there, the philosophical path the author proposes to follow starts with three discussions of metaphysical equivalence. Benovsky argues that there are cases of metaphysical equivalence, cases of partial metaphysical equivalence, as well as interesting cases of theories that are not equivalent. Thus, claims of metaphysical equivalence can only be raised locally. The slogan is: the best way to do meta-metaphysics is to do first-level metaphysics. To do this work, Benovsky focuses on the nature of primitives and on the role they play in each of the theories involved. He emphasizes the utmost importance of primitives in the construction of metaphysical theories and in the subsequent evaluation of them. He then raises the simple but complicated question: how to make a choice between competing metaphysical theories? If two theories are equivalent, then perhaps we do not need to make a choice. But what about all the other cases of non-equivalent "equally good" theories? 
Benovsky uses some of the theories discussed in the first part of the book as examples and examines some traditional meta-theoretical criteria for theory choice (various kinds of simplicity, compatibility with physics, compatibility with intuitions, explanatory power, internal consistency,...) only to show that they do not allow us to make a choice. -

Two Concepts of Law of Nature

Prolegomena 12 (2) 2013: 413–442 Two Concepts of Law of Nature BRENDAN SHEA Winona State University, 528 Maceman St. #312, Winona, MN, 59987, USA [email protected] ORIGINAL SCIENTIFIC ARTICLE / RECEIVED: 04–12–12 ACCEPTED: 23–06–13 ABSTRACT: I argue that there are at least two concepts of law of nature worthy of philosophical interest: strong law and weak law. Strong laws are the laws investigated by fundamental physics, while weak laws feature prominently in the “special sciences” and in a variety of non-scientific contexts. In the first section, I clarify my methodology, which has to do with arguing about concepts. In the next section, I offer a detailed description of strong laws, which I claim satisfy four criteria: (1) If it is a strong law that L then it is also true that L; (2) strong laws would continue to be true, were the world to be different in some physically possible way; (3) strong laws do not depend on context or human interest; (4) strong laws feature in scientific explanations but cannot be scientifically explained. I then spell out some philosophical consequences: (1) is incompatible with Cartwright’s contention that “laws lie”, (2) with Lewis’s “best-system” account of laws, and (3) with contextualism about laws. In the final section, I argue that weak laws are distinguished by (approximately) meeting some but not all of these criteria. I provide a preliminary account of the scientific value of weak laws, and argue that they cannot plausibly be understood as ceteris paribus laws. KEY WORDS: Contextualism, counterfactuals, David Lewis, laws of nature, Nancy Cartwright, physical necessity. -

A Difference-Making Account of Causation

A difference-making account of causation Wolfgang Pietsch, Munich Center for Technology in Society, Technische Universität München, Arcisstr. 21, 80333 München, Germany A difference-making account of causality is proposed that is based on a counterfactual definition, but differs from traditional counterfactual approaches to causation in a number of crucial respects: (i) it introduces a notion of causal irrelevance; (ii) it evaluates the truth-value of counterfactual statements in terms of difference-making; (iii) it renders causal statements background-dependent. On the basis of the fundamental notions ‘causal relevance’ and ‘causal irrelevance’, further causal concepts are defined including causal factors, alternative causes, and importantly inus-conditions. Problems and advantages of the proposed account are discussed. Finally, it is shown how the account can shed new light on three classic problems in epistemology, the problem of induction, the logic of analogy, and the framing of eliminative induction. 1. Introduction 2. The difference-making account 2a. Causal relevance and causal irrelevance 2b. A difference-making account of causal counterfactuals -

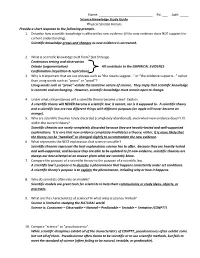

Science Knowledge Study Guide: Physical Science Honors

Name: ______________________________ Pd: ___ Ast#: _____ Science Knowledge Study Guide Physical Science Honors Provide a short response to the following prompts. 1. Describe how scientific knowledge is affected by new evidence (if the new evidence does NOT support the current understanding). Scientific knowledge grows and changes as new evidence is uncovered. 2. What is scientific knowledge built from? (list 3 things) Continuous testing and observation; debate (argumentation); confirmation (repetition & replication). All contribute to the EMPIRICAL EVIDENCE. 3. Why is it important that we use phrases such as “the results suggest…” or “the evidence supports…” rather than using words such as “prove” or “proof”? Using words such as “prove” violates the tentative nature of science. They imply that scientific knowledge is concrete and unchanging. However, scientific knowledge must remain open to change. 4. Under what circumstances will a scientific theory become a law? Explain. A scientific theory will NEVER become a scientific law; it cannot, nor is it supposed to. A scientific theory and a scientific law are two different things with different purposes (an apple will never become an orange). 5. Why are scientific theories rarely discarded (completely abandoned), even when new evidence doesn’t fit within the current theory? Scientific theories are rarely completely discarded because they are heavily tested and well-supported explanations. It is rare that new evidence completely invalidates a theory; rather, it is more likely that the theory can be “tweaked” or changed slightly to accommodate the new evidence. 6. What represents the BEST explanation that science can offer? Scientific theories represent the best explanations science has to offer. -

Sacred Rhetorical Invention in the String Theory Movement

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Communication Studies Theses, Dissertations, and Student Research Communication Studies, Department of Spring 4-12-2011 Secular Salvation: Sacred Rhetorical Invention in the String Theory Movement Brent Yergensen University of Nebraska-Lincoln, [email protected] Follow this and additional works at: https://digitalcommons.unl.edu/commstuddiss Part of the Speech and Rhetorical Studies Commons Yergensen, Brent, "Secular Salvation: Sacred Rhetorical Invention in the String Theory Movement" (2011). Communication Studies Theses, Dissertations, and Student Research. 6. https://digitalcommons.unl.edu/commstuddiss/6 This Article is brought to you for free and open access by the Communication Studies, Department of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Communication Studies Theses, Dissertations, and Student Research by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. SECULAR SALVATION: SACRED RHETORICAL INVENTION IN THE STRING THEORY MOVEMENT by Brent Yergensen A DISSERTATION Presented to the Faculty of The Graduate College at the University of Nebraska In Partial Fulfillment of Requirements For the Degree of Doctor of Philosophy Major: Communication Studies Under the Supervision of Dr. Ronald Lee Lincoln, Nebraska April, 2011 ii SECULAR SALVATION: SACRED RHETORICAL INVENTION IN THE STRING THEORY MOVEMENT Brent Yergensen, Ph.D. University of Nebraska, 2011 Advisor: Ronald Lee String theory is argued by its proponents to be the Theory of Everything. It achieves this status in physics because it provides unification for contradictory laws of physics, namely quantum mechanics and general relativity. While based on advanced theoretical mathematics, its public discourse is growing in prevalence and its rhetorical power is leading to a scientific revolution, even among the public. -

What Scientific Theories Could Not Be

What Scientific Theories Could Not Be. Author(s): Hans Halvorson. Source: Philosophy of Science, Vol. 79, No. 2 (April 2012), pp. 183-206. Published by: The University of Chicago Press on behalf of the Philosophy of Science Association. Stable URL: http://www.jstor.org/stable/10.1086/664745. According to the semantic view of scientific theories, theories are classes of models. I show that this view, if taken literally, leads to absurdities. In particular, this view equates theories that are distinct, and it distinguishes theories that are equivalent. Furthermore, the semantic view lacks the resources to explicate interesting theoretical relations, such as embeddability of one theory into another. -

A Visual Analytics Approach for Exploratory Causal Analysis: Exploration, Validation, and Applications

A Visual Analytics Approach for Exploratory Causal Analysis: Exploration, Validation, and Applications Xiao Xie, Fan Du, and Yingcai Wu. arXiv:2009.02458v1 [cs.HC] 5 Sep 2020. Fig. 1. The user interface of Causality Explorer demonstrated with a real-world audiology dataset that consists of 200 rows and 24 dimensions [18]. (a) A scalable causal graph layout that can handle high-dimensional data. (b) Histograms of all dimensions for comparative analyses of the distributions. (c) Clicking on a histogram will display the detailed data in the table view. (b) and (c) are coordinated to support what-if analyses. In the causal graph, each node is represented by a pie chart (d) and the causal direction (e) is from the upper node (cause) to the lower node (result). The thickness of a link encodes the uncertainty (f). Nodes without descendants are placed on the left side of each layer to improve readability (g). Users can double-click on a node to show its causality subgraph (h). Abstract: Using causal relations to guide decision making has become an essential analytical task across various domains, from marketing and medicine to education and social science. While powerful statistical models have been developed for inferring causal relations from data, domain practitioners still lack an effective visual interface for interpreting the causal relations and applying them in their decision-making process. Through interview studies with domain experts, we characterize their current decision-making workflows, challenges, and needs. Through an iterative design process, we developed a visualization tool that allows analysts to explore, validate, and apply causal relations in real-world decision-making scenarios. -

Causal Inference for Process Understanding in Earth Sciences

Causal inference for process understanding in Earth sciences Adam Massmann,* Pierre Gentine, Jakob Runge May 4, 2021. arXiv:2105.00912v1 [physics.ao-ph] 3 May 2021. Abstract There is growing interest in the study of causal methods in the Earth sciences. However, most applications have focused on causal discovery, i.e. inferring the causal relationships and causal structure from data. This paper instead examines causality through the lens of causal inference and how expert-defined causal graphs, a fundamental from causal theory, can be used to clarify assumptions, identify tractable problems, and aid interpretation of results and their causality in Earth science research. We apply causal theory to generic graphs of the Earth system to identify where causal inference may be most tractable and useful to address problems in Earth science, and avoid potentially incorrect conclusions. Specifically, causal inference may be useful when: (1) the effect of interest is only causally affected by the observed portion of the state space; or: (2) the cause of interest can be assumed to be independent of the evolution of the system’s state; or: (3) the state space of the system is reconstructable from lagged observations of the system. However, we also highlight through examples that causal graphs can be used to explicitly define and communicate assumptions and hypotheses, and help to structure analyses, even if causal inference is ultimately challenging given the data availability, limitations and uncertainties. Note: We will update this manuscript as our understanding of causality’s role in Earth science research evolves. Comments, feedback, and edits are enthusiastically encouraged, and we will add acknowledgments and/or coauthors as we receive community contributions.