Insufficient Effort Responding: Examining an Insidious Confound in Survey Data

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Gateless Gate Has Become Common in English, Some Have Criticized This Translation As Unfaithful to the Original

Wú Mén Guān: The Barrier That Has No Gate. Original Collection in Chinese by Chán Master Wúmén Huìkāi (1183-1260). Questions and Additional Comments by Sŏn Master Sǔngan. Compiled and Edited by Paul Dōch’ŏng Lynch, JDPSN. Frontispiece: “Wú Mén Guān,” Facsimile of the Original Cover. Sixth Edition. Before Thought Publications, Huntington Beach, CA, 2010. BEFORE THOUGHT PUBLICATIONS HUNTINGTON BEACH, CA 92648 ALL RIGHTS RESERVED. COPYRIGHT © 2010 ENGLISH VERSION BY PAUL LYNCH, JDPSN NO PART OF THIS BOOK MAY BE REPRODUCED OR TRANSMITTED IN ANY FORM OR BY ANY MEANS, GRAPHIC, ELECTRONIC, OR MECHANICAL, INCLUDING PHOTOCOPYING, RECORDING, TAPING OR BY ANY INFORMATION STORAGE OR RETRIEVAL SYSTEM, WITHOUT THE PERMISSION IN WRITING FROM THE PUBLISHER. PRINTED IN THE UNITED STATES OF AMERICA BY LULU INCORPORATION, MORRISVILLE, NC, USA COVER PRINTED ON LAMINATED 100# ULTRA GLOSS COVER STOCK, DIGITAL COLOR SILK - C2S, 90 BRIGHT BOOK CONTENT PRINTED ON 24/60# CREAM TEXT, 90 GSM PAPER, USING 12 PT. GARAMOND FONT Dedication: What are we in this cosmos? This ineffable question has haunted us since Buddha sat under the Bodhi Tree. I would like to gracefully thank the author, Chán Master Wúmén, for his grace and kindness by leaving us these wonderful teachings. I would also like to thank Chán Master Dàhuì for his ineptness in destroying all copies of this book; thankfully, Master Dàhuì missed a few so that now we can explore the teachings of his teacher.

Appeal No. 2100138 DOB V. Chen Xue Huang March 17, 2021

Appeal No. 2100138 DOB v. Chen Xue Huang March 17, 2021 APPEAL DECISION The appeal of Respondent, premises owner, is denied. Respondent appeals from a recommended decision by Hearing Officer J. Handlin (Bklyn.), dated December 3, 2020, sustaining a Class 1 charge of § 28-207.2.2 of the Administrative Code of the City of New York (Code), for continuing work while on notice of a stop work order. Having fully reviewed the record, the Board finds that the hearing officer’s decision is supported by the law and a preponderance of the evidence. Therefore, the Board finds as follows: Summons: 35488852J; Law Charged: Code § 28-207.2.2; Hearing Determination: In Violation; Appeal Determination: Affirmed – In Violation; Penalty: $5,000. In the summons, the issuing officer (IO) affirmed observing on July 20, 2020, at 32-14 106th Street, Queens: “[T]wo workers at cellar level working on boiler while on notice of stop work order #052318C0303JW.” At the telephonic hearing, held on December 1, 2020, the attorney for Petitioner, Department of Buildings (DOB), relied on the IO’s affirmed statement in the summons and also the Complaint Overview for the premises, which documented Petitioner’s issuance of a full SWO on June 28, 2019, still in effect on the date of inspection. Respondent’s representative stated as follows. Respondent knew about the full SWO; however, Petitioner issued it in conjunction with a job that Respondent withdrew. Respondent then filed a new job that superseded the previous application and for which DOB issued a work permit. In the decision sustaining the charge, the hearing officer found that because Respondent offered no evidence that he ever applied to Petitioner to rescind the SWO, the work observed by the IO resulted in the cited violation.

The Significance and Inheritance of Huang Di Culture

ISSN 1799-2591 Theory and Practice in Language Studies, Vol. 8, No. 12, pp. 1698-1703, December 2018 DOI: http://dx.doi.org/10.17507/tpls.0812.17 The Significance and Inheritance of Huang Di Culture. Donghui Chen, Henan Academy of Social Sciences, Zhengzhou, Henan, China. Abstract: Huang Di culture is an important source of Chinese culture. It is not mechanical, still and solidified but melting, extensible, creative, pioneering and vigorous. It is the root of Chinese culture and a cultural system that keeps pace with the times. Its influence is enduring and universal. It has rich connotations including the “Root” Culture, the “Harmony” Culture, the “Golden Mean” Culture, and the “Governance” Culture. All these have great significance for the times and for the realization of the Great Chinese Dream; therefore, it is necessary to combine the inheritance of Huang Di culture with its innovation, constantly absorb the fresh blood of the times with a confident, open and creative attitude, give Huang Di culture a rich connotation of the times, tap the factors in Huang Di culture that fit the development of modern times to advance the progress of the country and society, and keep Huang Di culture full of vitality in the contemporary era. Index Terms: Huang Di, Huang Di culture, Chinese culture. I. INTRODUCTION Huang Di, considered the ancestor of all Han Chinese in Chinese mythology, is a legendary emperor and cultural hero. His victory in the war against Emperor Chi You is viewed as the establishment of the Han Chinese nationality. He made a great many accomplishments in agriculture, medicine, arithmetic, the calendar, Chinese characters and the arts, among which his invention of the principles of Traditional Chinese medicine, Huang Di Nei Jing, has been seen as one of the greatest contributions to Chinese medicine.

Baorong Guo, Ph.D

Baorong Guo, Ph.D. Associate Professor University of Missouri-St. Louis School of Social Work, 121 Bellerive Hall One University Boulevard, St. Louis, MO 63121 Telephone: (314) 516-6618 Fax: (314) 516-5816 Email: [email protected] EDUCATION Ph.D. (2005), Social Work, Washington University in St. Louis M.A. (2000), Sociology, Peking University, China B.A. (1997), Social Work, China Youth University for Political Science (CYCP), China EMPLOYMENT MSW Program Director, University of Missouri – St. Louis (2014-present) Associate Professor, University of Missouri - St. Louis (2011-present) Assistant Professor, University of Missouri - St. Louis (2005-2011) Lab Instructor/Teaching Assistant, Washington University in St. Louis (2001-2004) ACADEMIC AFFILIATIONS Faculty Associate, Center for Family Research and Policy, University of Missouri-St. Louis, 2009-present. Faculty Associate, Center for International Studies, University of Missouri-St. Louis, 2005-present. Faculty Associate, Center for Social Development, Washington University, 2005-present. COURSES TAUGHT Research Design in Social Work (undergraduate level) Social Issues and Social Policy Development (undergraduate level) Human Service Organizations (graduate and undergraduate level) Research Methods in Social Work (graduate level) Structural Equation Modeling (Teaching assistant) Multivariate Statistics (Lab instructor) Program Evaluation (Lab instructor) GRANTS Funded external Principal investigator, Transition to Adulthood: Dynamics of Disability, Food Insecurity, Health,

The Spreading of Christianity and the Introduction of Modern Architecture in Shannxi, China (1840-1949)

Escuela Técnica Superior de Arquitectura de Madrid, Doctoral Program in Conservation and Restoration of Architectural Heritage. The Spreading of Christianity and the introduction of Modern Architecture in Shannxi, China (1840-1949): Christian churches and traditional Chinese architecture. Author: Shan HUANG (Architect). Director: Antonio LOPERA (Doctor, Architect). 2014. Examination committee appointed by the Rector of the Universidad Politécnica de Madrid (date, president, members, secretary and alternates left blank). Defense and reading of the thesis held at the Escuela Técnica Superior de Arquitectura de Madrid (date and grade left blank). Index: Abstract; Resumen; Introduction; General Background; A) Definition of the Concepts; B) Research Background; C) Significance and Objects of the Study; D) Research Methodology; CHAPTER 1 Introduction to Chinese traditional architecture; 1.1 The concept of traditional Chinese architecture; 1.2 Main characteristics of the traditional Chinese architecture; 1.2.1 Wood was used as the main construction materials; 1.2.2

The Case of Wang Wei (Ca

T’OUNG PAO: International Journal of Chinese Studies/Revue Internationale de Sinologie. ISSN 0082-5433 (print version), ISSN 1568-5322 (online version). T’oung Pao 105 (2019) 587-630, www.brill.com/tpao. The Courtesan as Famous Scholar: The Case of Wang Wei (ca. 1598-ca. 1647). Sufeng Xu, University of Ottawa. Recent scholarship has paid special attention to late Ming courtesans as a social and cultural phenomenon. Scholars have rediscovered the many roles that courtesans played and recognized their significance in the creation of a unique cultural atmosphere in the late Ming literati world.[1] However, there has been a tendency to situate the flourishing of late Ming courtesan culture within the mainstream Confucian tradition, assuming that “the late Ming courtesan” continued to be “integral to the operation of the civil-service examination, the process that reproduced the empire’s political and cultural elites,” as was the case in earlier dynasties, such as the Tang.[2] This assumption has suggested a division between the world of the Chinese courtesan whose primary clientele continued to be constituted by scholar-officials until the eighteenth century and that of her Japanese counterpart whose rise in the mid-seventeenth century was due to the decline of elitist samurai- [1] For important studies on late Ming high courtesan culture, see Kang-i Sun Chang, The Late Ming Poet Ch’en Tzu-lung: Crises of Love and Loyalism (New Haven: Yale Univ.

Participant List

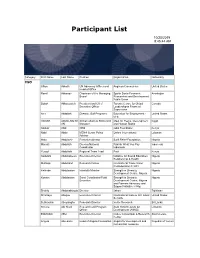

Participant List 10/20/2019 8:45:44 AM

| Category | First Name | Last Name | Position | Organization | Nationality |
| --- | --- | --- | --- | --- | --- |
| CSO | Jillian | Abballe | UN Advocacy Officer and Head of Office | Anglican Communion | United States |
| | Ramil | Abbasov | Chairman of the Managing Board | Spektr Socio-Economic Researches and Development Public Union | Azerbaijan |
| | Babak | Abbaszadeh | President and Chief Executive Officer | Toronto Centre for Global Leadership in Financial Supervision | Canada |
| | Amr | Abdallah | Director, Gulf Programs | Education for Employment - EFE | United States |
| | HAGAR | ABDELRAHMAN | African affairs & SDGs Unit Manager | Maat for Peace, Development and Human Rights | Egypt |
| | Abukar | Abdi | CEO | Juba Foundation | Kenya |
| | Nabil | Abdo | MENA Senior Policy Advisor | Oxfam International | Lebanon |
| | Mala | Abdulaziz | Executive director | Swift Relief Foundation | Nigeria |
| | Maryati | Abdullah | Director/National Coordinator | Publish What You Pay Indonesia | Indonesia |
| | Yussuf | Abdullahi | Regional Team Lead | Pact | Kenya |
| | Abdulahi | Abdulraheem | Executive Director | Initiative for Sound Education Relationship & Health | Nigeria |
| | Muttaqa | Abdulra'uf | Research Fellow | International Trade Union Confederation (ITUC) | Nigeria |
| | Kehinde | Abdulsalam | Interfaith Minister | Strength in Diversity Development Centre, Nigeria | Nigeria |
| | Kassim | Abdulsalam | Zonal Coordinator/Field Executive | Strength in Diversity Development Centre, Nigeria and Farmers Advocacy and Support Initiative in Nig | Nigeria |
| | Shahlo | Abdunabizoda | Director | Jahon | Tajikistan |
| | Shontaye | Abegaz | Executive Director | International Institute for Human Security | United States |
| | Subhashini | Abeysinghe | Research Director | Verite | |

Newcastle 1St April

Postpositions vs. prepositions in Mandarin Chinese: The articulation of disharmony* Redouane Djamouri, Waltraud Paul, John Whitman. [email protected] [email protected] [email protected] Centre de recherches linguistiques sur l'Asie orientale (CRLAO), EHESS - CNRS, Paris; Department of Linguistics, Cornell University, Ithaca, NY; NINJAL, Tokyo. 1. Introduction Whitman (2008) divides word order generalizations modelled on Greenberg (1963) into three types: hierarchical, derivational, and crosscategorial. The first reflect basic patterns of selection and encompass generalizations like those proposed in Cinque (1999). The second reflect constraints on syntactic derivations. The third type, crosscategorial generalizations, assert the existence of non-hierarchical, non-derivational generalizations across categories (e.g. the co-patterning of V~XP with P~NP and C~TP). In common with much recent work (e.g. Kayne 1994, Newmeyer 2005), Whitman rejects generalizations of the latter type, that is, generalizations such as the Head Parameter, as components of Universal Grammar. He argues that alleged universals of this type are unfailingly statistical (cf. Dryer 1998), and thus should be explained as the result of diachronic processes, such as V > P and V > C reanalysis, rather than synchronic grammar. This view predicts, contra the Head Parameter, that 'mixed' or 'disharmonic' crosscategorial word order properties are permitted by UG. Sinitic languages contain well-known examples of both types. Mixed orders are exemplified by prepositions, postpositions and circumpositions occurring in the same language. Disharmonic orders found in Chinese languages include head initial VP-internal order coincident with head final NP-internal order and clause-final complementizers. Such combinations are present in Chinese languages since their earliest attestation.

HÀNWÉN and TAIWANESE SUBJECTIVITIES: a GENEALOGY of LANGUAGE POLICIES in TAIWAN, 1895-1945 by Hsuan-Yi Huang a DISSERTATION S

HÀNWÉN AND TAIWANESE SUBJECTIVITIES: A GENEALOGY OF LANGUAGE POLICIES IN TAIWAN, 1895-1945 By Hsuan-Yi Huang A DISSERTATION Submitted to Michigan State University in partial fulfillment of the requirements for the degree of Curriculum, Teaching, and Educational Policy, Doctor of Philosophy 2013 ABSTRACT This historical dissertation is a pedagogical project. In a critical and genealogical approach, inspired by Foucault’s genealogy and effective history and the new culture history of Sol Cohen and Hayden White, I hope pedagogically to raise awareness of the effect of history on shaping who we are and how we think about our self. I conceptualize such an historical approach as effective history as pedagogy, in which the purpose of history is to critically generate the pedagogical effects of history. This dissertation is a genealogical analysis of Taiwanese subjectivities under Japanese rule. Foucault’s theory of subjectivity, constituted by the four parts, substance of subjectivity, mode of subjectification, regimen of subjective practice, and telos of subjectification, served as a conceptual basis for my analysis of Taiwanese practices of the self-formation of a subject. Focusing on language policies in three historical events: the New Culture Movement in the 1920s, the Taiwanese Xiāngtǔ Literature Movement in the early 1930s, and the Japanization Movement during Wartime in 1937-1945, I analyzed discourses circulating within each event, particularly the possibilities/impossibilities created and shaped by discourses for Taiwanese subjectification practices. I illustrate discursive and subjectification practices that further shaped particular Taiwanese subjectivities in a particular event.

Names of Chinese People in Singapore

Lodz Papers in Pragmatics 7.1 (2011): 101-133 DOI: 10.2478/v10016-011-0005-6 Lee Cher Leng, Department of Chinese Studies, National University of Singapore, The Shaw Foundation Building, Block AS7, Level 5, 5 Arts Link, Singapore 117570, e-mail: [email protected] ETHNOGRAPHY OF SINGAPORE CHINESE NAMES: RACE, RELIGION, AND REPRESENTATION Abstract: Singapore Chinese is part of the Chinese Diaspora. This research shows how Singapore Chinese names reflect the Chinese naming tradition of surnames and generation names, as well as Straits Chinese influence. The names also reflect the beliefs and religion of Singapore Chinese. More significantly, a change of identity and representation is reflected in the names of earlier settlers and Singapore Chinese today. This paper aims to show the general naming traditions of Chinese in Singapore as well as a change in ideology and trends due to globalization. Keywords: Singapore, Chinese, names, identity, beliefs, globalization. 1. Introduction When parents choose a name for a child, the name necessarily reflects their thoughts and aspirations with regard to the child. These thoughts and aspirations are shaped by the historical, social, cultural or spiritual setting of the time and place they are living in, whether or not they are aware of them. Thus, the study of names is an important window through which one could view how these parents prefer their children to be perceived by society at large, according to the identities, roles, values, hierarchies or expectations constructed within a social space. Goodenough explains this culturally driven context of names and naming practices: Different naming and address customs necessarily select different things about the self for communication and consequent emphasis.

A Comparison of the Korean and Japanese Approaches to Foreign Family Names

A Comparison of the Korean and Japanese Approaches to Foreign Family Names JIN Guanglin* Abstract: There are many foreign family names in Korean and Japanese genealogies. This paper is especially focused on the fact that out of approximately 280 Korean family names, roughly half are of foreign origin, and that out of those foreign family names, the majority trace their beginnings to China. In Japan, the Newly Edited Register of Family Names (新撰姓氏錄), published in 815, records that out of 1,182 aristocratic clans in the capital and its surroundings, 326 clans (approximately one-third) originated from China and Korea. Does the prevalence of foreign family names reflect migration from China to Korea, and from China and Korea to Japan? Or is it perhaps a result of Korean Sinophilia (慕華思想) and Japanese admiration for Korean and Chinese cultures? Or could there be an entirely distinct explanation? First I discuss premodern Korean and ancient Japanese foreign family names, and then I examine the formation and characteristics of these family names. Next I analyze how migration from China to Korea, as well as from China and Korea to Japan, occurred in their historical contexts. Through these studies, I derive answers to the above-mentioned questions. Key words: family names (surnames), Chinese-style family names, cultural diffusion and adoption, migration, Sinophilia in traditional Korea and Japan 1 Foreign Family Names in Premodern Korea The precise number of Korean family names varies by record. The Geography Annals of King Sejong (世宗實錄地理志, 1454), the first systematic register of Korean family names, records 265 family names, but the Survey of the Geography of Korea (東國輿地勝覽, 1486) records 277.

A Study of Xu Xu's Ghost Love and Its Three Film Adaptations THESIS

Allegories and Appropriations of the “Ghost”: A Study of Xu Xu’s Ghost Love and Its Three Film Adaptations THESIS Presented in Partial Fulfillment of the Requirements for the Degree Master of Arts in the Graduate School of The Ohio State University By Qin Chen Graduate Program in East Asian Languages and Literatures The Ohio State University 2010 Master's Examination Committee: Kirk Denton, Advisor Patricia Sieber Copyright by Qin Chen 2010 Abstract This thesis is a comparative study of Xu Xu’s (1908-1980) novella Ghost Love (1937) and three film adaptations made in 1941, 1956 and 1995. As one of the most popular writers during the Republican period, Xu Xu is famous for fiction characterized by a cosmopolitan atmosphere, exoticism, and recounting fantastic encounters. Ghost Love, his first well-known work, presents the traditional narrative of “a man encountering a female ghost,” but also embodies serious psychological, philosophical, and even political meanings. The approach applied to this thesis is semiotic and focuses on how each text reflects the particular reality and ethos of its time. In other words, in analyzing how Xu’s original text and the three film adaptations present the same “ghost story,” as well as different allegories hidden behind their appropriations of the image of the “ghost,” the thesis seeks to broaden our understanding of the history, society, and culture of some eventful periods in twentieth-century China: prewar Shanghai (Chapter 1), wartime Shanghai (Chapter 2), post-war Hong Kong (Chapter 3) and post-Mao mainland (Chapter 4). Dedication To my parents and my husband, Zhang Boying Acknowledgments This thesis owes a good deal to the DEALL teachers and mentors who have taught and helped me during the past two years at The Ohio State University, particularly my advisor, Dr.