Lecture 3: Equilibrium

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Arxiv:1910.10745V1 [Cond-Mat.Str-El] 23 Oct 2019 2.2 Symmetry-Protected Time Crystals

A Brief History of Time Crystals. Vedika Khemani (a, b, *), Roderich Moessner (c), S. L. Sondhi (d). (a) Department of Physics, Harvard University, Cambridge, Massachusetts 02138, USA; (b) Department of Physics, Stanford University, Stanford, California 94305, USA; (c) Max-Planck-Institut für Physik komplexer Systeme, 01187 Dresden, Germany; (d) Department of Physics, Princeton University, Princeton, New Jersey 08544, USA. Abstract: The idea of breaking time-translation symmetry has fascinated humanity at least since ancient proposals of the perpetuum mobile. Unlike the breaking of other symmetries, such as spatial translation in a crystal or spin rotation in a magnet, time translation symmetry breaking (TTSB) has been tantalisingly elusive. We review this history up to recent developments which have shown that discrete TTSB does take place in periodically driven (Floquet) systems in the presence of many-body localization (MBL). Such Floquet time-crystals represent a new paradigm in quantum statistical mechanics: that of an intrinsically out-of-equilibrium many-body phase of matter with no equilibrium counterpart. We include a compendium of the necessary background on the statistical mechanics of phase structure in many-body systems, before specializing to a detailed discussion of the nature, and diagnostics, of TTSB. In particular, we provide precise definitions that formalize the notion of a time-crystal as a stable, macroscopic, conservative clock, explaining both the need for a many-body system in the infinite volume limit, and for a lack of net energy absorption or dissipation. Our discussion emphasizes that TTSB in a time-crystal is accompanied by the breaking of a spatial symmetry, so that time-crystals exhibit a novel form of spatiotemporal order.

Lecture 6: Entropy

Matthew Schwartz, Statistical Mechanics, Spring 2019. Lecture 6: Entropy. 1 Introduction. In this lecture, we discuss many ways to think about entropy. The most important and most famous property of entropy is that it never decreases: $\Delta S_{\text{tot}} \geq 0$ (1). Here, $\Delta S_{\text{tot}}$ means the change in entropy of a system plus the change in entropy of the surroundings. This is the second law of thermodynamics that we met in the previous lecture. There's a great quote from Sir Arthur Eddington from 1927 summarizing the importance of the second law: If someone points out to you that your pet theory of the universe is in disagreement with Maxwell's equations, then so much the worse for Maxwell's equations. If it is found to be contradicted by observation, well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation. Another possibly relevant quote, from the introduction to the statistical mechanics book by David Goodstein: Ludwig Boltzmann, who spent much of his life studying statistical mechanics, died in 1906, by his own hand. Paul Ehrenfest, carrying on the work, died similarly in 1933. Now it is our turn to study statistical mechanics. There are many ways to define entropy. All of them are equivalent, although it can be hard to see. In this lecture we will compare and contrast different definitions, building up intuition for how to think about entropy in different contexts. The original definition of entropy, due to Clausius, was thermodynamic.

Dirty Tricks for Statistical Mechanics

Dirty tricks for statistical mechanics. Martin Grant, Physics Department, McGill University. © MG, August 2004, version 0.91. Preface: These are lecture notes for PHYS 559, Advanced Statistical Mechanics, which I’ve taught at McGill for many years. I’m intending to tidy this up into a book, or rather the first half of a book. This half is on equilibrium, the second half would be on dynamics. These were handwritten notes which were heroically typed by Ryan Porter over the summer of 2004, and may someday be properly proof-read by me. Even better, maybe someday I will revise the reasoning in some of the sections. Some of it can be argued better, but it was too much trouble to rewrite the handwritten notes. I am also trying to come up with a good book title, amongst other things. The two titles I am thinking of are “Dirty tricks for statistical mechanics”, and “Valhalla, we are coming!”. Clearly, more thinking is necessary, and suggestions are welcome. While these lecture notes have gotten longer and longer until they are almost self-sufficient, it is always nice to have real books to look over. My favorite modern text is “Lectures on Phase Transitions and the Renormalisation Group”, by Nigel Goldenfeld (Addison-Wesley, Reading Mass., 1992). This is referred to several times in the notes. Other nice texts are “Statistical Physics”, by L. D. Landau and E. M. Lifshitz (Addison-Wesley, Reading Mass., 1970) particularly Chaps. 1, 12, and 14; “Statistical Mechanics”, by S.-K. Ma (World Science, Phila., 1986) particularly Chaps.

Generalized Molecular Chaos Hypothesis and the H-Theorem: Problem of Constraints and Amendment of Nonextensive Statistical Mechanics

Generalized molecular chaos hypothesis and the H-theorem: Problem of constraints and amendment of nonextensive statistical mechanics. Sumiyoshi Abe (1, 2, 3). (1) Department of Physical Engineering, Mie University, Mie 514-8507, Japan (*); (2) Institut Supérieur des Matériaux et Mécaniques Avancés, 44 F. A. Bartholdi, 72000 Le Mans, France; (3) Inspire Institute Inc., McLean, Virginia 22101, USA. (*) Permanent address. Abstract: Quite unexpectedly, kinetic theory is found to specify the correct definition of average value to be employed in nonextensive statistical mechanics. It is shown that the normal average is consistent with the generalized Stosszahlansatz (i.e., molecular chaos hypothesis) and the associated H-theorem, whereas the q-average widely used in the relevant literature is not. In the course of the analysis, the distributions with finite cut-off factors are rigorously treated. Accordingly, the formulation of nonextensive statistical mechanics is amended based on the normal average. In addition, the Shore-Johnson theorem, which supports the use of the q-average, is carefully reexamined, and it is found that one of the axioms may not be appropriate for systems to be treated within the framework of nonextensive statistical mechanics. PACS number(s): 05.20.Dd, 05.20.Gg, 05.90.+m. I. INTRODUCTION. There exist a number of physical systems that possess exotic properties in view of traditional Boltzmann-Gibbs statistical mechanics. They are said to be statistical-mechanically anomalous, since they often exhibit and realize broken ergodicity, strong correlation between elements, (multi)fractality of phase-space/configuration-space portraits, and long-range interactions, for example. In the past decade, nonextensive statistical mechanics [1,2], which is a generalization of the Boltzmann-Gibbs theory, has been drawing continuous attention as a possible theoretical framework for describing these systems.

LECTURE 9 Statistical Mechanics Basic Methods We Have Talked

LECTURE 9. Statistical Mechanics: Basic Methods. We have talked about ensembles being large collections of copies or clones of a system with some features being identical among all the copies. There are three different types of ensembles in statistical mechanics. 1. If the system under consideration is isolated, i.e., not interacting with any other system, then the ensemble is called the microcanonical ensemble. In this case the energy of the system is a constant. 2. If the system under consideration is in thermal equilibrium with a heat reservoir at temperature T, then the ensemble is called a canonical ensemble. In this case the energy of the system is not a constant; the temperature is constant. 3. If the system under consideration is in contact with both a heat reservoir and a particle reservoir, then the ensemble is called a grand canonical ensemble. In this case the energy and particle number of the system are not constant; the temperature and the chemical potential are constant. The chemical potential is the energy required to add a particle to the system. The most common ensemble encountered in doing statistical mechanics is the canonical ensemble. We will explore many examples of the canonical ensemble. The grand canonical ensemble is used in dealing with quantum systems. The microcanonical ensemble is not used much because of the difficulty in identifying and evaluating the accessible microstates, but we will explore one simple system (the ideal gas) as an example of the microcanonical ensemble. Microcanonical Ensemble: Consider an isolated system described by an energy in the range between $E$ and $E + \delta E$, and similar appropriate ranges for external parameters $x_\alpha$.

H Theorems in Statistical Fluid Dynamics

J. Phys. A: Math. Gen. 14 (1981) 1701-1718. Printed in Great Britain. H theorems in statistical fluid dynamics. George F. Carnevale, Uriel Frisch and Rick Salmon. National Center for Atmospheric Research, Boulder, Colorado 80307, USA; Division of Applied Sciences, Harvard University, Cambridge, Massachusetts 02138, USA and CNRS, Observatoire de Nice, Nice, France; Scripps Institution of Oceanography, La Jolla, California 92093, USA. Received 28 April 1980, in final form 6 November 1980. Abstract. It is demonstrated that the second-order Markovian closures frequently used in turbulence theory imply an H theorem for inviscid flow with an ultraviolet spectral cut-off. That is, from the inviscid closure equations, it follows that a certain functional of the energy spectrum (namely entropy) increases monotonically in time to a maximum value at absolute equilibrium. This is shown explicitly for isotropic homogeneous flow in dimensions $d \geq 2$, and then a generalised theorem which covers a wide class of systems of current interest is presented. It is shown that the H theorem for closure can be derived from a Gibbs-type H theorem for the exact non-dissipative dynamics. 1. Introduction. In the kinetic theory of classical and quantum many-particle systems, statistical evolution is described by a Boltzmann equation. This equation provides a collisional representation for nonlinear interactions, and through the Boltzmann H theorem it implies monotonic relaxation toward absolute canonical equilibrium. Here we show to what extent this same framework formally applies in statistical macroscopic fluid dynamics. Naturally, since canonical equilibrium applies only to conservative systems, a strong analogy can be produced only by confining ourselves to non-dissipative idealisations of fluid systems.

Statistical Mechanics I: Lecture 26

VII.G Superfluid He$^4$. Interesting examples of quantum fluids are provided by the isotopes of helium. The two electrons of He occupy the 1s orbital with opposite spins. The filled orbital makes this noble gas particularly inert. There is still a van der Waals attraction between two He atoms, but the interatomic potential has a shallow minimum of depth 9 K at a separation of roughly 3 Å. The weakness of this interaction makes He a powerful wetting agent. As the He atoms have a stronger attraction for practically all other molecules, they easily spread over the surface of any substance. Due to its light mass, the He atom undergoes large zero point fluctuations at T = 0. These fluctuations are sufficient to melt a solid phase, and thus He remains in a liquid state at ordinary pressures. Pressures of over 25 atmospheres are required to sufficiently localize the He atoms to result in a solid phase at T = 0. A remarkable property of the quantum liquid at zero temperature is that, unlike its classical counterpart, it has zero entropy. The lighter isotope, He$^3$, has three nucleons and obeys Fermi statistics. The liquid phase is very well described by a Fermi gas of the type discussed in sec. VII.E. The interactions between atoms do not significantly change the non-interacting picture described earlier. By contrast, the heavier isotope, He$^4$, is a boson. Helium can be cooled down by a process of evaporation: Liquid helium is placed in an isolated container, in equilibrium with the gas phase at a finite vapor density.

Lecture 7: Ensembles

Matthew Schwartz, Statistical Mechanics, Spring 2019. Lecture 7: Ensembles. 1 Introduction. In statistical mechanics, we study the possible microstates of a system. We never know exactly which microstate the system is in. Nor do we care. We are interested only in the behavior of a system based on the possible microstates it could be in that share some macroscopic property (like volume $V$, energy $E$, or number of particles $N$). The possible microstates a system could be in are known as the ensemble of states for a system. There are different kinds of ensembles. So far, we have been counting microstates with a fixed number of particles $N$ and a fixed total energy $E$. We defined $\Omega$ as the total number of microstates for a system. That is, $\Omega(E, V, N) = \sum_{\text{microstates } k \text{ with same } N, V, E} 1$ (1). Then $S = k_B \ln \Omega$ is the entropy, and all other thermodynamic quantities follow from $S$. For an isolated system with $N$ fixed and $E$ fixed the ensemble is known as the microcanonical ensemble. In the microcanonical ensemble, the temperature is a derived quantity, with $\frac{1}{T} = \frac{\partial S}{\partial E}$. So far, we have only been using the microcanonical ensemble. For example, a gas of identical monatomic particles has $\Omega(E, V, N) \sim \frac{1}{N!} V^N E^{\frac{3}{2}N}$. From this we computed the entropy $S = k_B \ln \Omega$, which at large $N$ reduces to the Sackur-Tetrode formula. The temperature is $\frac{1}{T} = \frac{\partial S}{\partial E} = \frac{3}{2} \frac{N k_B}{E}$, so that $E = \frac{3}{2} N k_B T$. Also in the microcanonical ensemble we observed that the number of states for which the energy of one degree of freedom is fixed to $\varepsilon_i$ is $\Omega(E - \varepsilon_i)$ …
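The ideal-gas relation quoted in this excerpt can be checked numerically. The following is a minimal sketch, not taken from the lecture notes: it assumes the multiplicity scales as V^N E^(3N/2) / N! with constant prefactors dropped, and the function names `entropy` and `temperature` and the chosen values of N, V, and T are illustrative only.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def entropy(E, V, N):
    """S = k_B ln(Omega), assuming Omega ~ V^N E^(3N/2) / N! (prefactors dropped)."""
    return K_B * (N * math.log(V) + 1.5 * N * math.log(E) - math.lgamma(N + 1))

def temperature(E, V, N, rel_step=1e-6):
    """Estimate T from 1/T = dS/dE using a central finite difference."""
    h = E * rel_step
    dS_dE = (entropy(E + h, V, N) - entropy(E - h, V, N)) / (2 * h)
    return 1.0 / dS_dE

# Illustrative values (assumptions, not from the source).
N = 1000
V = 1.0e-3          # m^3
T_target = 300.0    # K
E = 1.5 * N * K_B * T_target  # energy predicted by E = (3/2) N k_B T

T_numeric = temperature(E, V, N)
print(f"T from dS/dE: {T_numeric:.6f} K")
```

Differentiating the entropy numerically, rather than by hand, shows that the temperature really is a derived quantity here: only E, V, and N are inputs.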

Section 2 Introduction to Statistical Mechanics

Section 2: Introduction to Statistical Mechanics. 2.1 Introducing entropy. 2.1.1 Boltzmann’s formula. A very important thermodynamic concept is that of entropy S. Entropy is a function of state, like the internal energy. It measures the relative degree of order (as opposed to disorder) of the system when in this state. An understanding of the meaning of entropy thus requires some appreciation of the way systems can be described microscopically. This connection between thermodynamics and statistical mechanics is enshrined in the formula due to Boltzmann and Planck: S = k ln Ω, where Ω is the number of microstates accessible to the system (the meaning of that phrase to be explained). We will first try to explain what a microstate is and how we count them. This leads to a statement of the second law of thermodynamics, which as we shall see has to do with maximising the entropy of an isolated system. As a thermodynamic function of state, entropy is easy to understand. Entropy changes of a system are intimately connected with heat flow into it. In an infinitesimal reversible process dQ = T dS; the heat flowing into the system is the product of the increment in entropy and the temperature. Thus, while heat is not a function of state, entropy is. 2.2 The spin 1/2 paramagnet as a model system. Here we introduce the concepts of microstate, distribution, average distribution and the relationship to entropy using a model system. This treatment is intended to complement that of Guenault (chapter 1) which is very clear and which you must read.
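To make S = k ln Ω concrete for the spin 1/2 paramagnet, here is a small sketch under a stated assumption: the excerpt does not give the counting formula, so the code assumes N independent spins, with a "distribution" specified by the number of up spins, so that Ω is a binomial coefficient. The names `multiplicity` and `entropy_over_k` are hypothetical.

```python
import math

def multiplicity(n_spins, n_up):
    """Number of microstates with exactly n_up spins up (assumed binomial counting)."""
    return math.comb(n_spins, n_up)

def entropy_over_k(n_spins, n_up):
    """Dimensionless entropy S/k = ln(Omega) for the given distribution."""
    return math.log(multiplicity(n_spins, n_up))

N = 100
omegas = [multiplicity(N, n) for n in range(N + 1)]

# Every one of the 2^N microstates belongs to exactly one distribution.
total_microstates = sum(omegas)

# The distribution with the most microstates, hence the largest entropy.
most_likely_n_up = max(range(N + 1), key=lambda n: omegas[n])

print("total microstates equals 2^N:", total_microstates == 2 ** N)
print("most probable number of up spins:", most_likely_n_up)
print("S/k at the maximum:", entropy_over_k(N, most_likely_n_up))
```

In this toy counting the entropy is largest for the evenly split distribution, which is the sense in which an isolated system maximising its entropy ends up in the most disordered arrangement.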

4 Pressure and Viscosity

4 Pressure and Viscosity. Reading: Ryden, chapter 2; Shu, chapter 4. 4.1 Specific heats and the adiabatic index. First law of thermodynamics (energy conservation): $d\epsilon = -P\,dV + dq \implies dq = d\epsilon + P\,dV$ (28), where $V \equiv \rho^{-1}$ = specific volume [cm$^3$ g$^{-1}$], $dq \equiv T\,ds$ = heat change per unit mass [erg g$^{-1}$], and $s \equiv$ specific entropy [erg g$^{-1}$ K$^{-1}$]. The specific heat at constant volume, $c_V \equiv \left(\frac{\partial q}{\partial T}\right)_V$ [erg g$^{-1}$ K$^{-1}$] (29), is the amount of heat that must be added to raise the temperature of 1 g of gas by 1 K. At constant volume, $dq = d\epsilon$, and if $\epsilon$ depends only on temperature (not density), $\epsilon(V, T) = \epsilon(T)$, then $c_V \equiv \left(\frac{\partial q}{\partial T}\right)_V = \left(\frac{\partial \epsilon}{\partial T}\right)_V = \frac{d\epsilon}{dT}$, implying $dq = c_V\,dT + P\,dV$. If a gas has temperature $T$, then each degree of freedom that can be excited has energy $\frac{1}{2}kT$. (This is the equipartition theorem of classical statistical mechanics.) The pressure is $P = \frac{1}{3}\rho\langle|\vec{w}|^2\rangle = \frac{\rho}{m}kT$, since $\langle\frac{1}{2}m w_i^2\rangle = \frac{1}{2}kT \implies \langle|\vec{w}|^2\rangle = \frac{3kT}{m}$. Therefore $PV = \frac{kT}{m} \implies P\,dV = \frac{k}{m}\,dT$. Using $dq = c_V\,dT + P\,dV$, the specific heat at constant pressure is $c_P \equiv \left(\frac{\partial q}{\partial T}\right)_P = c_V + P\frac{dV}{dT} = c_V + \frac{k}{m}$. Changing the temperature at constant pressure requires more heat than at constant volume because some of the energy goes into $P\,dV$ work. For reasons that will soon become evident, the quantity $\gamma \equiv c_P/c_V$ is called the adiabatic index. A monatomic gas has 3 degrees of freedom (translation), so $\epsilon = \frac{3}{2}\frac{kT}{m} \implies c_V = \frac{3}{2}\frac{k}{m} \implies c_P = \frac{5}{2}\frac{k}{m} \implies \gamma = \frac{5}{3}$. A diatomic gas has 2 additional degrees of freedom (rotation), so $c_V = 5k/2m$, $\gamma = 7/5$.
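The arithmetic behind the adiabatic index can be sketched in a few lines. This example assumes only what the excerpt states: equipartition gives c_V = (f/2)(k/m) for f excited degrees of freedom, and c_P = c_V + k/m. Specific heats are expressed in units of k/m, and the function name `specific_heats` is illustrative.

```python
from fractions import Fraction

def specific_heats(degrees_of_freedom):
    """Return (c_V, c_P, gamma), with c_V and c_P in units of k/m.

    Assumes equipartition: each excited degree of freedom carries kT/2.
    """
    c_v = Fraction(degrees_of_freedom, 2)  # c_V = (f/2) k/m
    c_p = c_v + 1                          # c_P = c_V + k/m
    gamma = c_p / c_v                      # adiabatic index
    return c_v, c_p, gamma

for label, dof in [("monatomic", 3), ("diatomic", 5)]:
    c_v, c_p, gamma = specific_heats(dof)
    print(f"{label}: c_V = {c_v} k/m, c_P = {c_p} k/m, gamma = {gamma}")
```

Using exact fractions keeps the results in the same form as the text (5/3 and 7/5) and makes it clear that gamma = (f + 2)/f under these assumptions.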

Statistical Mechanical Modeling and Its Application to Nanosystems Keivan Esfarjani 1 and G.Ali Mansoori 2 (1)

Handbook of Theoretical and Computational NANOTECHNOLOGY, M. Rieth and W. Schommers (Eds), Volume X: Chapter 16, Pages: 1–45, 2005. CHAPTER 16: Statistical Mechanical Modeling and Its Application to Nanosystems. Keivan Esfarjani (1) and G. Ali Mansoori (2). (1) Sharif University of Technology, Tehran, Iran. Present address: University of Virginia, MAE-307, 122 Engineer's Way, Charlottesville, Virginia 22904, U.S.A. (2) University of Illinois at Chicago, (M/C 063) Chicago, Illinois, 60607-7052, U.S.A. CONTENTS: 1. Introduction; 2. Thermodynamics of Small Systems (2.1. Nonextensivity of Nanosystems; 2.2. The Gibbs Equation for Nanosystems; 2.3. Statistical Mechanics and Thermodynamic Property Predictions); 3. Intermolecular Forces and Potentials (3.1. Step 1: Atomic Force Microscopy Measurement and Empirical Modeling; 3.2. Step 2: Theoretical Modeling; 3.3. Step 3: Development of Nanoparticle Potentials); 4. Nanoscale systems Computer-Based Simulations (4.1. Classification of the Methods; 4.2. Monte Carlo Method and Random Numbers; 4.3. Molecular Dynamics Method); 5. Conclusions; Glossary; References. 1. INTRODUCTION

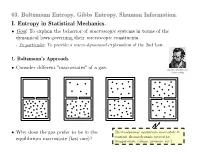

03. Entropy: Boltzmann, Gibbs, Shannon

03. Boltzmann Entropy, Gibbs Entropy, Shannon Information. I. Entropy in Statistical Mechanics. • Goal: To explain the behavior of macroscopic systems in terms of the dynamical laws governing their microscopic constituents. In particular: to provide a micro-dynamical explanation of the 2nd Law. 1. Boltzmann's Approach. [Figure: Ludwig Boltzmann (1844-1906).] • Consider different "macrostates" of a gas. • Why does the gas prefer to be in the equilibrium macrostate (last one)? Thermodynamic equilibrium macrostate = constant thermodynamic properties (temperature, volume, pressure, etc.). • Suppose the gas consists of N identical particles governed by Hamilton's equations of motion (the micro-dynamics). Def. 1. A microstate X of a gas is a specification of the position (3 values) and momentum (3 values) for each of its N particles. Let Ω = phase space = 6N-dim space of all possible microstates. Let Ω_E = region of Ω that consists of all microstates with constant energy E. • Hamiltonian dynamics maps initial microstate X_i to final microstate X_f. • Can the 2nd Law be explained by recourse to this dynamics? Def. 2. A macrostate Γ of a gas is a specification of the gas in terms of macroscopic properties (pressure, temperature, volume, etc.). • Relation between microstates and macrostates: Macrostates supervene on microstates! To each microstate there corresponds exactly one macrostate. Many distinct microstates can correspond to the same macrostate. • So: Ω_E is partitioned into a finite number of regions corresponding to macrostates, with each microstate X belonging to one macrostate Γ(X). [Figure: Ω_E partitioned into macrostates, with a microstate X inside its macrostate Γ(X).] • Boltzmann's Claim: The equilibrium macrostate Γ_eq is vastly larger than any other macrostate (so it contains the vast majority of possible microstates).
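Boltzmann's claim can be illustrated with a toy model that is not part of the slides: assume each of N particles is independently in the left or right half of a box, call the number in the left half the macrostate, and treat "near-equal split" as the equilibrium macrostate. The function `fraction_within` and the window sizes are hypothetical choices for this sketch.

```python
import math

def fraction_within(n_particles, half_width):
    """Fraction of the 2^N left/right microstates whose left-half count
    lies within half_width of an even split (toy equilibrium macrostate)."""
    center = n_particles // 2
    lo = max(0, center - half_width)
    hi = min(n_particles, center + half_width)
    near = sum(math.comb(n_particles, n) for n in range(lo, hi + 1))
    return near / 2 ** n_particles

# Same relative window (5% of N on either side), growing particle number.
for n_particles in (100, 1000, 10000):
    window = n_particles // 20
    share = fraction_within(n_particles, window)
    print(f"N = {n_particles:>5}: fraction near even split = {share:.6f}")
```

As N grows with the relative window held fixed, the near-equal macrostate absorbs almost all microstates, which is the counting intuition behind the claim that the equilibrium macrostate is vastly larger than any other.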