Lecture 7: Ensembles

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Canonical Ensemble

ME346A Introduction to Statistical Mechanics – Wei Cai – Stanford University – Win 2011. Handout 8. Canonical Ensemble. January 26, 2011. Outline: • In this chapter, we will establish the equilibrium statistical distribution for systems maintained at a constant temperature T, through thermal contact with a heat bath. • The resulting distribution is also called Boltzmann's distribution. • The canonical distribution also leads to the definition of the partition function and an expression for the Helmholtz free energy, analogous to Boltzmann's entropy formula. • We will study energy fluctuation at constant temperature, witness another fluctuation-dissipation theorem (FDT), and finally establish the equivalence of the microcanonical ensemble and the canonical ensemble in the thermodynamic limit. (We first met a manifestation of FDT in diffusion as Einstein's relation.) Reading Assignment: Reif §6.1-6.7, §6.10. 1 Temperature. An isolated system with fixed N (number of particles), V (volume), and E (total energy) is most conveniently described by the microcanonical (NVE) ensemble, which is a uniform distribution between two constant-energy surfaces: $\rho_{mc}(\{q_i\}, \{p_i\}) = \begin{cases} \text{const} & E \le H(\{q_i\}, \{p_i\}) \le E + \Delta E \\ 0 & \text{otherwise} \end{cases}$ (1). Statistical mechanics also provides the expression for entropy, $S(N, V, E) = k_B \ln \Omega$. In thermodynamics, $S(N, V, E)$ can be transformed (by a Legendre transform) to the more convenient Helmholtz free energy $A(N, V, T)$, which corresponds to a system with constant N, V and temperature T. Q: Does the transformation from N, V, E to N, V, T have a meaning in statistical mechanics? A: The ensemble of systems all at constant N, V, T is called the canonical NVT ensemble. -

Magnetism, Magnetic Properties, Magnetochemistry

Magnetism, Magnetic Properties, Magnetochemistry. Magnetism: All matter is electronic. Positive/negative charges are bound by Coulombic forces. Result of electric field E between charges: electric dipole. Electric and magnetic fields = the electromagnetic interaction (Oersted, Maxwell). Electric field = electric +/− charges, electric dipole. Magnetic field ??No source?? No magnetic charges, N-S. No magnetic monopole. Magnetic field = motion of electric charges (electric current, atomic motions). Magnetic dipole – magnetic moment = i A [A m²]. Electromagnetic Fields. Magnetism: Magnetic field = motion of electric charges • Macro - electric current • Micro - spin + orbital momentum. Ampère 1822, Poisson model. Magnetic dipole – magnetic (dipole) moment i A [A m²]. Ampère model. Magnetism: Microscopic explanation of source of magnetism = fundamental quantum magnets. Unpaired electrons = spins (Bohr 1913). Atomic building blocks (protons, neutrons and electrons = fermions) possess an intrinsic magnetic moment. Relativistic quantum theory (P. Dirac 1928): SPIN (quantum property ~ rotation of charged particles). Spin (½ for all fermions) gives rise to a magnetic moment. Atomic Motions of Electric Charges: The origins for the magnetic moment of a free atom. Motions of Electric Charges: 1) The spins of the electrons S. Unpaired spins give a paramagnetic contribution. Paired spins give a diamagnetic contribution. 2) The orbital angular momentum L of the electrons about the nucleus, degenerate orbitals, paramagnetic contribution. The change in the orbital moment -

Identical Particles

8.06 Spring 2016 Lecture Notes 4. Identical particles. Aram Harrow. Last updated: May 19, 2016. Contents: 1 Fermions and Bosons (1.1 Introduction and two-particle systems; 1.2 N particles; 1.3 Non-interacting particles; 1.4 Non-zero temperature; 1.5 Composite particles; 1.6 Emergence of distinguishability). 2 Degenerate Fermi gas (2.1 Electrons in a box; 2.2 White dwarves; 2.3 Electrons in a periodic potential). 3 Charged particles in a magnetic field (3.1 The Pauli Hamiltonian; 3.2 Landau levels; 3.3 The de Haas-van Alphen effect; 3.4 Integer Quantum Hall Effect; 3.5 Aharonov-Bohm Effect). 1 Fermions and Bosons. 1.1 Introduction and two-particle systems. Previously we have discussed multiple-particle systems using the tensor-product formalism (cf. Section 1.2 of Chapter 3 of these notes). But this applies only to distinguishable particles. In reality, all known particles are indistinguishable. In the coming lectures, we will explore the mathematical and physical consequences of this. First, consider classical many-particle systems. If a single particle has state described by position and momentum $(\vec{r}, \vec{p})$, then the state of N distinguishable particles can be written as $(\vec{r}_1, \vec{p}_1, \vec{r}_2, \vec{p}_2, \ldots, \vec{r}_N, \vec{p}_N)$. The notation $(\cdot, \cdot, \ldots, \cdot)$ denotes an ordered list, in which different positions have different meanings; e.g. in general $(\vec{r}_1, \vec{p}_1, \vec{r}_2, \vec{p}_2) \neq (\vec{r}_2, \vec{p}_2, \vec{r}_1, \vec{p}_1)$. To describe indistinguishable particles, we can use set notation. -


Arxiv:1910.10745V1 [Cond-Mat.Str-El] 23 Oct 2019 2.2 Symmetry-Protected Time Crystals

A Brief History of Time Crystals. Vedika Khemani (a, b, *), Roderich Moessner (c), S. L. Sondhi (d). (a) Department of Physics, Harvard University, Cambridge, Massachusetts 02138, USA; (b) Department of Physics, Stanford University, Stanford, California 94305, USA; (c) Max-Planck-Institut für Physik komplexer Systeme, 01187 Dresden, Germany; (d) Department of Physics, Princeton University, Princeton, New Jersey 08544, USA. Abstract: The idea of breaking time-translation symmetry has fascinated humanity at least since ancient proposals of the perpetuum mobile. Unlike the breaking of other symmetries, such as spatial translation in a crystal or spin rotation in a magnet, time translation symmetry breaking (TTSB) has been tantalisingly elusive. We review this history up to recent developments which have shown that discrete TTSB does take place in periodically driven (Floquet) systems in the presence of many-body localization (MBL). Such Floquet time-crystals represent a new paradigm in quantum statistical mechanics: that of an intrinsically out-of-equilibrium many-body phase of matter with no equilibrium counterpart. We include a compendium of the necessary background on the statistical mechanics of phase structure in many-body systems, before specializing to a detailed discussion of the nature, and diagnostics, of TTSB. In particular, we provide precise definitions that formalize the notion of a time-crystal as a stable, macroscopic, conservative clock, explaining both the need for a many-body system in the infinite volume limit, and for a lack of net energy absorption or dissipation. Our discussion emphasizes that TTSB in a time-crystal is accompanied by the breaking of a spatial symmetry, so that time-crystals exhibit a novel form of spatiotemporal order. -

On Thermality of CFT Eigenstates

On thermality of CFT eigenstates. Pallab Basu (1), Diptarka Das (2), Shouvik Datta (3) and Sridip Pal (2). (1) International Center for Theoretical Sciences-TIFR, Shivakote, Hesaraghatta Hobli, Bengaluru North 560089, India. (2) Department of Physics, University of California San Diego, La Jolla, CA 92093, USA. (3) Institut für Theoretische Physik, Eidgenössische Technische Hochschule Zürich, 8093 Zürich, Switzerland. arXiv:1705.03001v2 [hep-th] 26 Aug 2017. Abstract: The Eigenstate Thermalization Hypothesis (ETH) provides a way to understand how an isolated quantum mechanical system can be approximated by a thermal density matrix. We find a class of operators in (1+1)-d conformal field theories, consisting of quasi-primaries of the identity module, which satisfy the hypothesis only at the leading order in large central charge. In the context of subsystem ETH, this plays a role in the deviation of the reduced density matrices, corresponding to a finite energy density eigenstate, from its hypothesized thermal approximation. The universal deviation in terms of the square of the trace-square distance goes as the 8th power of the subsystem fraction and is suppressed by powers of inverse central charge (c). Furthermore, the non-universal deviations from subsystem ETH are found to be proportional to the heavy-light-heavy structure constants, which are typically exponentially suppressed in $\sqrt{h/c}$, where h is the conformal scaling dimension of the finite energy density -

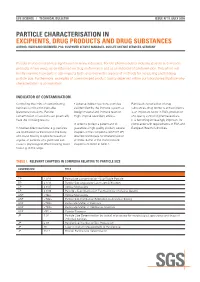

Particle Characterisation In

LIFE SCIENCE | TECHNICAL BULLETIN, ISSUE N°11 / JULY 2008. PARTICLE CHARACTERISATION IN EXCIPIENTS, DRUG PRODUCTS AND DRUG SUBSTANCES. AUTHOR: HILDEGARD BRÜMMER, PhD, CUSTOMER SERVICE MANAGER, SGS LIFE SCIENCE SERVICES, GERMANY. Particle characterization has significance in many industries. For the pharmaceutical industry, particle size impacts products in two ways: as an influence on drug performance and as an indicator of contamination. This article will briefly examine how particle size impacts both, and review the arsenal of methods for measuring and tracking particle size. Furthermore, examples of compromised product quality observed within our laboratories illustrate why characterization is so important. Particle characterisation of drug substances, drug products and excipients is an important factor in R&D, production and quality control of pharmaceuticals. It is becoming increasingly important for compliance with requirements of FDA and European Health Authorities. INDICATOR OF CONTAMINATION: Controlling the limits of contaminating particles is critical for injectable (parenteral) solutions. Particle contamination of solutions can potentially have the following results: • Adverse direct reactions: e.g. particles are distributed via the blood in the body and cause toxicity to specific tissues or organs, or particles of a given size can cause a physiological effect blocking blood flow e.g. in the lungs. • Adverse indirect reactions: particles are identified by the immune system as foreign material and immune reaction might impose secondary effects. In order to protect a patient and to guarantee a high quality product, several chapters in the compendia (USP, EP, JP) describe techniques for characterisation of limits. Some of the most relevant chapters are listed in Table 1. -

Chapter 3 Bose-Einstein Condensation of an Ideal

Chapter 3: Bose-Einstein Condensation of An Ideal Gas. An ideal gas consisting of non-interacting Bose particles is a fictitious system since every realistic Bose gas shows some level of particle-particle interaction. Nevertheless, such a mathematical model provides the simplest example for the realization of Bose-Einstein condensation. This simple model, first studied by A. Einstein [1], correctly describes important basic properties of actual non-ideal (interacting) Bose gas. In particular, such basic concepts as BEC critical temperature $T_c$ (or critical particle density $n_c$), condensate fraction $N_0/N$ and the dimensionality issue will be obtained. 3.1 The ideal Bose gas in the canonical and grand canonical ensemble. Suppose an ideal gas of non-interacting particles with fixed particle number N is trapped in a box with a volume V and at equilibrium temperature T. We assume a particle system somehow establishes an equilibrium temperature in spite of the absence of interaction. Such a system can be characterized by the thermodynamic partition function of canonical ensemble $Z = \sum_R e^{-\beta E_R}$, (3.1) where R stands for a macroscopic state of the gas and is uniquely specified by the occupation number $n_i$ of each single particle state i: $\{n_0, n_1, \ldots\}$. $\beta = 1/k_B T$ is a temperature parameter. Then, the total energy of a macroscopic state R is given by only the kinetic energy: $E_R = \sum_i \varepsilon_i n_i$, (3.2) where $\varepsilon_i$ is the eigen-energy of the single particle state i and the occupation number $n_i$ satisfies the normalization condition $N = \sum_i n_i$. (3.3) The probability -

Bose-Einstein Condensation of Photons and Grand-Canonical Condensate fluctuations

Bose-Einstein condensation of photons and grand-canonical condensate fluctuations. Jan Klaers, Institute for Applied Physics, University of Bonn, Germany (present address: Institute for Quantum Electronics, ETH Zürich, Switzerland). Martin Weitz, Institute for Applied Physics, University of Bonn, Germany. arXiv:1611.10286v1 [cond-mat.quant-gas] 30 Nov 2016. Abstract: We review recent experiments on the Bose-Einstein condensation of photons in a dye-filled optical microresonator. The most well-known example of a photon gas, photons in blackbody radiation, does not show Bose-Einstein condensation. Instead of massively populating the cavity ground mode, photons vanish in the cavity walls when they are cooled down. The situation is different in an ultrashort optical cavity imprinting a low-frequency cutoff on the photon energy spectrum that is well above the thermal energy. The latter allows for a thermalization process in which both temperature and photon number can be tuned independently of each other or, correspondingly, for a non-vanishing photon chemical potential. We here describe experiments demonstrating the fluorescence-induced thermalization and Bose-Einstein condensation of a two-dimensional photon gas in the dye microcavity. Moreover, recent measurements on the photon statistics of the condensate, showing Bose-Einstein condensation in the grand-canonical ensemble limit, will be reviewed. 1 Introduction. Quantum statistical effects become relevant when a gas of particles is cooled, or its density is increased, to the point where the associated de Broglie wavepackets spatially overlap. For particles with integer spin (bosons), the phenomenon of Bose-Einstein condensation (BEC) then leads to macroscopic occupation of a single quantum state at finite temperatures [1]. -

Spin Statistics, Partition Functions and Network Entropy

This is a repository copy of Spin Statistics, Partition Functions and Network Entropy. White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/118694/ Version: Accepted Version. Article: Wang, Jianjia, Wilson, Richard Charles orcid.org/0000-0001-7265-3033 and Hancock, Edwin R orcid.org/0000-0003-4496-2028 (2017) Spin Statistics, Partition Functions and Network Entropy. Journal of Complex Networks. pp. 1-25. ISSN 2051-1329 https://doi.org/10.1093/comnet/cnx017 Reuse: Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item. Takedown: If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ IMA Journal of Complex Networks (2017), Page 1 of 25, doi:10.1093/comnet/xxx000. Spin Statistics, Partition Functions and Network Entropy. JIANJIA WANG∗, Department of Computer Science, University of York, York, YO10 5DD, UK, ∗[email protected]; RICHARD C. WILSON, Department of Computer Science, University of York, York, YO10 5DD, UK; AND EDWIN R. HANCOCK, Department of Computer Science, University of York, York, YO10 5DD, UK. [Received on 2 May 2017] This paper explores the thermodynamic characterization of networks using the heat bath analogy when the energy states are occupied under different spin statistics, specified by a partition function. -

Magnetic Materials I

5 Magnetic materials I ● Diamagnetism ● Paramagnetism. Diamagnetism - susceptibility: All materials can be classified in terms of their magnetic behavior, falling into one of several categories depending on their bulk magnetic susceptibility χ: $\chi = \vec{M}/\vec{H}$; in general the susceptibility is a position dependent tensor. Without spin: $\vec{M}(\vec{r}) = \tfrac{1}{2}\, \vec{r} \times \vec{J}(\vec{r})$. In some materials the magnetization is not a linear function of field strength. In such cases the differential susceptibility is introduced: $\chi_d = d\vec{M}/d\vec{H}$. (Figure: M [A/m] versus H [A/m].) We usually talk about isothermal susceptibility: $\chi_T = \left( \partial \vec{M} / \partial \vec{H} \right)_T$. Theoreticians define magnetization as $M = -\left( \partial F / \partial \vec{H} \right)_T$, where $F = U - TS$ is the Helmholtz free energy [4]. $dU = T\,dS - p\,dV + \sum_{i=1}^{N} \mu_i\,dn_i$; $dF = T\,dS - p\,dV + \sum_{i=1}^{N} \mu_i\,dn_i - T\,dS - S\,dT = -S\,dT - p\,dV + \sum_{i=1}^{N} \mu_i\,dn_i$. Diamagnetism - susceptibility: It is customary to define susceptibility in relation to volume, mass or mole: $\chi = \vec{M}/\vec{H}$ [dimensionless], $\chi_\rho = (\vec{M}/\rho)/\vec{H}$ [m³/kg], $\chi_{mol} = (\vec{M}/\mathrm{mol})/\vec{H}$ [m³/mol]. 1 emu = 1×10⁻³ A⋅m². The general classification of materials according to their magnetic properties: μ < 1, χ < 0: diamagnetic*; μ > 1, χ > 0: paramagnetic**; μ ≫ 1, χ ≫ 1: ferromagnetic***. $\vec{B} = \mu \vec{H} = \mu_r \mu_0 \vec{H}$ and $\vec{B} = \mu_0 (\vec{H} + \vec{M})$, so $\mu_r \mu_0 \vec{H} = \mu_0 (\vec{H} + \vec{M})$ → $\mu_r = 1 + \chi$. *dia /daɪəmæɡˈnɛtɪk/ - Greek: "from, through, across" - repelled by magnets. We have from L2: $F \propto V\, \nabla B^2$; the force is directed antiparallel to the gradient of $B^2$, i.e. -

From Quantum Chaos and Eigenstate Thermalization to Statistical

Advances in Physics, 2016, Vol. 65, No. 3, 239–362, http://dx.doi.org/10.1080/00018732.2016.1198134 REVIEW ARTICLE: From quantum chaos and eigenstate thermalization to statistical mechanics and thermodynamics. Luca D'Alessio (a, b), Yariv Kafri (c), Anatoli Polkovnikov (b) and Marcos Rigol (a, ∗). (a) Department of Physics, The Pennsylvania State University, University Park, PA 16802, USA; (b) Department of Physics, Boston University, Boston, MA 02215, USA; (c) Department of Physics, Technion, Haifa 32000, Israel. (Received 22 September 2015; accepted 2 June 2016) This review gives a pedagogical introduction to the eigenstate thermalization hypothesis (ETH), its basis, and its implications to statistical mechanics and thermodynamics. In the first part, ETH is introduced as a natural extension of ideas from quantum chaos and random matrix theory (RMT). To this end, we present a brief overview of classical and quantum chaos, as well as RMT and some of its most important predictions. The latter include the statistics of energy levels, eigenstate components, and matrix elements of observables. Building on these, we introduce the ETH and show that it allows one to describe thermalization in isolated chaotic systems without invoking the notion of an external bath. We examine numerical evidence of eigenstate thermalization from studies of many-body lattice systems. We also introduce the concept of a quench as a means of taking isolated systems out of equilibrium, and discuss results of numerical experiments on quantum quenches. The second part of the review explores the implications of quantum chaos and ETH to thermodynamics. Basic thermodynamic relations are derived, including the second law of thermodynamics, the fundamental thermodynamic relation, fluctuation theorems, the fluctuation–dissipation relation, and the Einstein and Onsager relations. -

Statistical Physics – a second course

Statistical Physics – a second course. Finn Ravndal and Eirik Grude Flekkøy, Department of Physics, University of Oslo. September 3, 2008. Contents: 1 Summary of Thermodynamics (1.1 Equations of state; 1.2 Laws of thermodynamics; 1.3 Maxwell relations and thermodynamic derivatives; 1.4 Specific heats and compressibilities; 1.5 Thermodynamic potentials; 1.6 Fluctuations and thermodynamic stability; 1.7 Phase transitions; 1.8 Entropy and Gibbs Paradox). 2 Non-Interacting Particles (2.1 Spin-1/2 particles in a magnetic field; 2.2 Maxwell-Boltzmann statistics; 2.3 Ideal gas; 2.4 Fermi-Dirac statistics; 2.5 Bose-Einstein statistics). 3 Statistical Ensembles (3.1 Ensembles in phase space; 3.2 Liouville's theorem; 3.3 Microcanonical ensembles; 3.4 Free particles and multi-dimensional spheres; 3.5 Canonical ensembles; 3.6 Grand canonical ensembles; 3.7 Isobaric ensembles; 3.8 Information theory). 4 Real Gases and Liquids (4.1 Correlation functions; 4.2 The virial theorem; 4.3 Mean field theory for the van der Waals equation; 4.4 Osmosis). 5 Quantum Gases and Liquids (5.1 Statistics of identical particles; 5.2 Blackbody radiation and the photon gas; 5.3 Phonons and the Debye theory of specific heats; 5.4 Bosons at non-zero chemical potential .