Chess Playing Agents: a Problem Analysis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Virginia Chess Federation 2010 - #4

VIRGINIA CHESS Newsletter. The bimonthly publication of the Virginia Chess Federation. 2010 - #4. Inside: Charlottesville Open; Virginia Senior Championship; Tim Rogalski - King of the Open Sicilian; 2010 State Championship announcement; and more, including Larry Larkins revisits the Yeager-Perelshteyn ending. Editor: Macon Shibut, 8234 Citadel Place, Vienna VA 22180, [email protected]. Circulation: Ernie Schlich, 1370 South Braden Crescent, Norfolk VA 23502, [email protected]. Virginia Chess is published six times per year by the Virginia Chess Federation. Membership benefits (dues: $10/yr adult; $5/yr junior under 18) include a subscription to Virginia Chess. Send material for publication to the editor. Send dues, address changes, etc to Circulation. The Virginia Chess Federation (VCF) is a non-profit organization for the use of its members. Dues for regular adult membership are $10/yr. Junior memberships are $5/yr. President: Mike Hoffpauir, 405 Hounds Chase, Yorktown VA 23693, mhoffpauir@aol.com. Treasurer: Ernie Schlich, 1370 South Braden Crescent, Norfolk VA 23502, [email protected]. Secretary: Helen Hinshaw, 3430 Musket Dr, Midlothian VA 23113, [email protected]. Tournaments: Mike Atkins, PO Box 6138, Alexandria VA, [email protected]. Scholastics Coordinator: Mike Hoffpauir, 405 Hounds Chase, Yorktown VA 23693, [email protected]. VCF Inc Directors: Helen Hinshaw (Chairman), Rob Getty, John Farrell, Mike Hoffpauir, Ernie Schlich. Charlottesville Open, by David Long. International Master Oladapo Adu overpowered a field of 54 players to win the Charlottesville Open over the July 10-11 weekend.

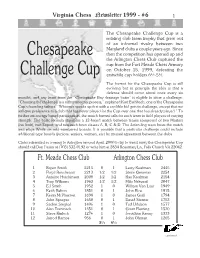

1999/6 Layout

Virginia Chess Newsletter 1999 - #6. Chesapeake Challenge Cup. The Chesapeake Challenge Cup is a rotating club team trophy that grew out of an informal rivalry between two Maryland clubs a couple years ago. Since then the competition has opened up and the Arlington Chess Club captured the cup from the Fort Meade Chess Armory on October 15, 1999, defeating the erstwhile cup holders 6½-5½. The format for the Chesapeake Cup is still evolving but in principle the idea is that a defense should occur about once every six months, and any team from the “Chesapeake Bay drainage basin” is eligible to issue a challenge. “Choosing the challenger is a rather informal process,” explained Kurt Eschbach, one of the Chesapeake Cup's founding fathers. “Whoever speaks up first with a credible bid gets to challenge, except that we will give preference to a club that has never played for the Cup over one that has already played.” To further encourage broad participation, the match format calls for each team to field players of varying strength. The basic formula stipulates a 12-board match between teams composed of two Masters (no limit), two Experts, and two each from classes A, B, C & D. The defending team hosts the match and plays White on odd-numbered boards. It is possible that a particular challenge could include additional types of boards (juniors, seniors, women, etc) by mutual agreement between the clubs. Clubs interested in coming to Arlington around April, 2000 to try to wrest away the Chesapeake Cup should call Dan Fuson at (703) 532-0192 or write him at 2834 Rosemary Ln, Falls Church VA 22042.

100 Years of Shannon: Chess, Computing and Botvinik Iryna Andriyanova

100 Years of Shannon: Chess, Computing and Botvinik. Iryna Andriyanova. To cite this version: Iryna Andriyanova. 100 Years of Shannon: Chess, Computing and Botvinik. Doctoral. United States. 2016. cel-01709767. HAL Id: cel-01709767, https://hal.archives-ouvertes.fr/cel-01709767. Submitted on 15 Feb 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. CLAUDE E. SHANNON, 1916-2001 • 1936: Bachelor in EE and Mathematics from U. Michigan • 1937: Master in EE from MIT • 1940: PhD in EE from MIT • 1940: Research fellow at Princeton • 1940-1956: Researcher at Bell Labs • 1948: “A Mathematical Theory of Communication”, Bell System Technical Journal • 1956-1978: Professor at MIT. 100 YEARS OF SHANNON: SHANNON, BOTVINNIK AND COMPUTER CHESS. Iryna Andriyanova, ETIS Lab, UMR 8051, ENSEA/Université de Cergy-Pontoise/CNRS. 54TH ALLERTON CONFERENCE. SHORT HISTORY OF CHESS/COMPUTER CHESS. CHESS AND COMPUTER CHESS, TWO PARALLELS. [Timeline figure: W. Steinitz, E. Lasker, M. Botvinnik, A. Karpov, G. Kasparov; years 1886, 1894, 1921, 1948, 1963, 1975, 1985, 2006*.] W. Steinitz: simplicity and rationality. E. Lasker: more risky play. M. Botvinnik: complicated, very original positions. A. Karpov, G. Kasparov: Botvinnik’s school.

Preparation of Papers for R-ICT 2007

Chess Puzzle Mate in N-Moves Solver with Branch and Bound Algorithm. Ryan Ignatius Hadiwijaya / 13511070, Program Studi Teknik Informatika, Sekolah Teknik Elektro dan Informatika, Institut Teknologi Bandung, Jl. Ganesha 10 Bandung 40132, Indonesia, [email protected]. Abstract: Chess is a popular game played by many people around the world. Aside from the normal chess game, there are special board configurations that make up chess puzzles. A chess puzzle has a special objective, and one such objective is to checkmate the opponent within a specified number of moves. To solve that kind of chess puzzle, a branch and bound algorithm can be used for choosing moves to checkmate the opponent. With good heuristics, the algorithm will solve chess puzzles effectively and efficiently. Index Terms: branch and bound, chess, problem solving, puzzle. I. INTRODUCTION. Chess is a board game played between two players on opposite sides of a board containing 64 squares of alternating colors.
1. A player checkmates the opponent. That player wins and the opponent loses.
2. A player resigns. That player loses.
3. Draw. A draw occurs in any of the following cases:
a. Stalemate: the player to move has no legal moves and his or her king is not in check.
b. Both players agree to a draw.
c. There are not enough pieces on the board to force a checkmate.
d. The exact same position is repeated 3 times.
e. Fifty consecutive moves pass in which neither player has moved a pawn or captured a piece.

The Effects of Time Pressure on Chess Skill: an Investigation Into Fast and Slow Processes Underlying Expert Performance

Psychological Research (2007) 71:591–597, DOI 10.1007/s00426-006-0076-0. ORIGINAL ARTICLE. The effects of time pressure on chess skill: an investigation into fast and slow processes underlying expert performance. Frenk van Harreveld · Eric-Jan Wagenmakers · Han L. J. van der Maas. Received: 28 November 2005 / Accepted: 3 July 2006 / Published online: 22 December 2006. © Springer-Verlag 2006. Abstract: The ability to play chess is generally assumed to depend on two types of processes: slow processes such as search, and fast processes such as pattern recognition. It has been argued that an increase in time pressure during a game selectively hinders the ability to engage in slow processes. Here we study the effect of time pressure on expert chess performance in order to test the hypothesis that compared to weak players, strong players depend relatively heavily on fast processes. In the first study we examine the performance of players of various strengths at an online chess server, for games played under different time controls. In a second study we examine the effect of time con- Introduction: One of the important challenges for cognitive science is to shed light on the processes that underlie expert decision making. Across a wide range of fields such as medical decision making and engineering, it has been shown that expertise involves both slow processes such as selective search and fast processes such as the recognition of meaningful patterns (e.g., Ericsson & Staszewski 1989). This distinction between fast and slow processes is very applicable to the game of chess and therefore research on chess is of considerable importance for the understanding of expert performance.

Learning Long-Term Chess Strategies from Databases

Mach Learn (2006) 63:329–340, DOI 10.1007/s10994-006-6747-7. TECHNICAL NOTE. Learning long-term chess strategies from databases. Aleksander Sadikov · Ivan Bratko. Received: March 10, 2005 / Revised: December 14, 2005 / Accepted: December 21, 2005 / Published online: 28 March 2006. Springer Science + Business Media, LLC 2006. Abstract: We propose an approach to the learning of long-term plans for playing chess endgames. We assume that a computer-generated database for an endgame is available, such as the king and rook vs. king, or king and queen vs. king and rook endgame. For each position in the endgame, the database gives the “value” of the position in terms of the minimum number of moves needed by the stronger side to win given that both sides play optimally. We propose a method for automatically dividing the endgame into stages characterised by different objectives of play. For each stage of such a game plan, a stage-specific evaluation function is induced, to be used by minimax search when playing the endgame. We aim at learning playing strategies that give good insight into the principles of playing specific endgames. Games played by these strategies should resemble human expert’s play in achieving goals and subgoals reliably, but not necessarily as quickly as possible. Keywords: Machine learning, Computer chess, Long-term strategy, Chess endgames, Chess databases. 1. Introduction. The standard approach used in chess playing programs relies on the minimax principle, efficiently implemented by alpha-beta algorithm, plus a heuristic evaluation function. This approach has proved to work particularly well in situations in which short-term tactics are prevalent, when evaluation function can easily recognise within the search horizon the consequences of good or bad moves.
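
The minimax principle with alpha-beta pruning named in the excerpt above can be illustrated with a minimal sketch. This is not the authors' program: the nested-list game tree, the function name `alphabeta`, and the leaf numbers (standing in for heuristic evaluation scores at the search horizon) are all assumptions made for illustration.

```python
from math import inf


def alphabeta(node, maximizing=True, alpha=-inf, beta=inf):
    """Minimax value of a toy game tree, with alpha-beta pruning.

    `node` is either a number (a leaf, standing in for a heuristic
    evaluation score) or a list of child nodes. This representation
    is hypothetical and chosen only to keep the sketch short.
    """
    if not isinstance(node, list):
        return node  # leaf: return its evaluation score
    if maximizing:
        best = -inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizing side will never allow this line
        return best
    best = inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizing side already has a better option
    return best


# Depth-2 tree: the maximizer picks a branch, the minimizer picks a leaf.
tree = [[3, 5], [2, 9], [0, 7]]
value = alphabeta(tree)
```

In this toy tree the second and third branches are cut off after their first leaf, because the maximizer already has a guaranteed 3 from the first branch. A real chess program would generate children from legal moves and call an evaluation function at the depth limit instead of reading stored numbers.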

No. 123 - (Vol. VIII)

No. 123 - (Vol. VIII), January 1997. Editorial Board. Editors: John Roycroft, 17 New Way Road, London, England NW9 6PL; Ed van de Gevel, Binnen de Veste 36, 3811 PH Amersfoort, The Netherlands. Spotlight-column: J. Heck, Neuer Weg 110, D-47803 Krefeld, Germany. Opinions-column: A. Pallier, La Mouziniere, 85190 La Genetouze, France. Treasurer: J. de Boer, Zevenenderdrift 40, 1251 RC Laren, The Netherlands. EDITORIAL. "The chess study is close to the chess game because both study and game obey the same rules." This has long been an argument used to persuade players to look at studies. Most players prefer studies to problems anyway, and readily give the affinity with the game as the reason for their preference. Your editor has fought a long battle to maintain the literal truth of that argument. It was one of several motivations in writing the final chapter of Test Tube Chess (1972), in which the Laws are separated into BMR (Board+Men+Rules) elements, and G (Game) elements, with studies firmly identified with the BMR realm and not in the G realm. [...] achievement, recorded only in a scientific journal, was not widely noticed. It was left to the discoveries by Ken Thompson of Bell Laboratories in New Jersey, beginning in 1983, to put the boot in. Aside from a few upsets to endgame theory, the set of 'total information' 5-man endgame databases that Thompson generated over the next decade demonstrated that several other endings might require well over 50 moves to win. These discoveries arrived on the scene too fast for FIDE to cope with by listing exceptions - which was the first expedient. Then in 1991 Lewis Stiller and Noam Elkies using a Connection Machine

Multilinear Algebra and Chess Endgames

Games of No Chance, MSRI Publications, Volume 29, 1996. Multilinear Algebra and Chess Endgames. LEWIS STILLER. Abstract. This article has three chief aims: (1) To show the wide utility of multilinear algebraic formalism for high-performance computing. (2) To describe an application of this formalism in the analysis of chess endgames, and results obtained thereby that would have been impossible to compute using earlier techniques, including a win requiring a record 243 moves. (3) To contribute to the study of the history of chess endgames, by focusing on the work of Friedrich Amelung (in particular his apparently lost analysis of certain six-piece endgames) and that of Theodor Molien, one of the founders of modern group representation theory and the first person to have systematically numerically analyzed a pawnless endgame. 1. Introduction. Parallel and vector architectures can achieve high peak bandwidth, but it can be difficult for the programmer to design algorithms that exploit this bandwidth efficiently. Application performance can depend heavily on unique architecture features that complicate the design of portable code [Szymanski et al. 1994; Stone 1993]. The work reported here is part of a project to explore the extent to which the techniques of multilinear algebra can be used to simplify the design of high-performance parallel and vector algorithms [Johnson et al. 1991]. The approach is this:
• Define a set of fixed, structured matrices that encode architectural primitives of the machine, in the sense that left-multiplication of a vector by this matrix is efficient on the target architecture.
• Formulate the application problem as a matrix multiplication.

Scenarios for Using the ARPANET at the International Conference On

NIC 11863. SCENARIOS for using the ARPANET at the INTERNATIONAL CONFERENCE ON COMPUTER COMMUNICATION, Washington, D.C., October 24-26, 1972. ARPA Network Information Center, Stanford Research Institute, Menlo Park, California 94025. SCENARIOS FOR USING THE ARPANET AT THE ICCC. We intend that the following scenarios be used by individuals to browse the ARPA Computer Network (ARPANET) in its current early stage of development and thereby to introduce themselves to some possibilities in computer communication. The scenarios include only a few of the existing ARPANET resources. They were chosen for this booklet (somewhat haphazardly) to exhibit variety and sophistication, while retaining simplicity. The scenarios are by no means complete or perfect. We have tried to make them accurate, but are certain that they contain errors. The scenarios are, therefore, only one kind of tool for experiencing computer communication. We assume that you will attend the various showings of film and videotape, pay close attention at the several scheduled demonstrations of specific resources, approach the ARPANET aggressively yourself using these scenarios, and unhesitatingly call upon the ICCC Special Project People for the advice and encouragement you are sure to need. The account numbers and passwords provided in these scenarios were generated specifically for the ICCC. It is hoped that some of them will remain available after the ICCC for continued browsing.

Birth of the Chess Queen C Marilyn Yalom for Irv, Who Introduced Me to Chess and Other Wonders Contents

Birth of the Chess Queen: A History. Marilyn Yalom. For Irv, who introduced me to chess and other wonders. Contents: Acknowledgments; Introduction; Selected Rulers of the Period. Part 1, The Mystery of the Chess Queen's Birth: One, Chess Before the Chess Queen; Two, Enter the Queen!; Three, The Chess Queen Shows Her Face. Part 2, Spain, Italy, and Germany: Four, Chess and Queenship in Christian Spain; Five, Chess Moralities in Italy and Germany. Part 3, France and England: Six, Chess Goes to France and England; Seven, Chess and the Cult of the Virgin Mary; Eight, Chess and the Cult of Love. Part 4, Scandinavia and Russia: Nine, Nordic Queens, On and Off the Board; Ten, Chess and Women in Old Russia. Part 5, Power to the Queen: Eleven, New Chess and Isabella of Castile; Twelve, The Rise of “Queen’s Chess”; Thirteen, The Decline of Women Players. Epilogue; Notes; Index; About the Author; Praise; Other Books by Marilyn Yalom; Credits; Cover; Copyright; About the Publisher. Waking Piece. The world dreams in chess Kibitzing like lovers Pawn’s queened redemption L is a forked path only horses lead. Rook and King castling for safety Bishop boasting of crossways slide. Echo of Orbit: starless squared sky. She alone moves where she chooses. Protecting helpless monarch, her bidden skill. Attacking schemers, plotters, blundered all. Game eternal. War breaks. She enters. Check mate. Hail Queen. How we crave Her majesty. (Gary Glazner) Acknowledgments. This book would not have been possible without the vast philological, archaeological, literary, and art historical research of previous writers, most notably from Germany and England.

YEARBOOK the Information in This Yearbook Is Substantially Correct and Current As of December 31, 2020

OUR HERITAGE 2020 US CHESS YEARBOOK The information in this yearbook is substantially correct and current as of December 31, 2020. For further information check the US Chess website www.uschess.org. To notify US Chess of corrections or updates, please e-mail [email protected]. U.S. CHAMPIONS 2002 Larry Christiansen • 2003 Alexander Shabalov • 2005 Hakaru WESTERN OPEN BECAME THE U.S. OPEN Nakamura • 2006 Alexander Onischuk • 2007 Alexander Shabalov • 1845-57 Charles Stanley • 1857-71 Paul Morphy • 1871-90 George H. 1939 Reuben Fine • 1940 Reuben Fine • 1941 Reuben Fine • 1942 2008 Yury Shulman • 2009 Hikaru Nakamura • 2010 Gata Kamsky • Mackenzie • 1890-91 Jackson Showalter • 1891-94 Samuel Lipchutz • Herman Steiner, Dan Yanofsky • 1943 I.A. Horowitz • 1944 Samuel 2011 Gata Kamsky • 2012 Hikaru Nakamura • 2013 Gata Kamsky • 2014 1894 Jackson Showalter • 1894-95 Albert Hodges • 1895-97 Jackson Reshevsky • 1945 Anthony Santasiere • 1946 Herman Steiner • 1947 Gata Kamsky • 2015 Hikaru Nakamura • 2016 Fabiano Caruana • 2017 Showalter • 1897-06 Harry Nelson Pillsbury • 1906-09 Jackson Isaac Kashdan • 1948 Weaver W. Adams • 1949 Albert Sandrin Jr. • 1950 Wesley So • 2018 Samuel Shankland • 2019 Hikaru Nakamura Showalter • 1909-36 Frank J. Marshall • 1936 Samuel Reshevsky • Arthur Bisguier • 1951 Larry Evans • 1952 Larry Evans • 1953 Donald 1938 Samuel Reshevsky • 1940 Samuel Reshevsky • 1942 Samuel 2020 Wesley So Byrne • 1954 Larry Evans, Arturo Pomar • 1955 Nicolas Rossolimo • Reshevsky • 1944 Arnold Denker • 1946 Samuel Reshevsky • 1948 ONLINE: COVID-19 • OCTOBER 2020 1956 Arthur Bisguier, James Sherwin • 1957 • Robert Fischer, Arthur Herman Steiner • 1951 Larry Evans • 1952 Larry Evans • 1954 Arthur Bisguier • 1958 E. -

Ancient Chess Turns Moving One Piece in Each Turn

About this Booklet. How to Print: This booklet will print best on card stock (110 lb. paper), but can also be printed on regular (20 lb.) paper. Do not print Page 1 (these instructions). First, have your printer print Page 2. Then load that same page back into your printer to be printed on the other side and print Page 3. When you load the page back into your printer, be sure that the top and bottom of the pages are oriented correctly. Permissions: You may print this booklet as often as you like, for personal purposes. You may also print this booklet to be included with a game which is sold to another party. You may distribute this booklet, in printed or electronic form freely, not for profit. If this booklet is distributed, it may not be changed in any way. All copyright and contact information must be kept intact. This booklet may not be sold for profit, except as mentioned above, when included in the sale of a board game. To contact the creator of this booklet, please go to the “contact” page at www.AncientChess.com. Playing the Game: A coin may be tossed to decide who goes first, and the players take turns moving one piece in each turn. If a player’s King is threatened with capture, “check” (Persian: “Shah”) is declared, and the player must move so that his King is no longer threatened. If there is no possible move to relieve the King of the threat, he is in “checkmate” (Persian: “shahmat,” meaning, “the king is at a loss”).