Exploring Extended Reality with ILLIXR: a New Playground for Architecture Research

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Evaluating Performance Benefits of Head Tracking in Modern Video

Evaluating Performance Benefits of Head Tracking in Modern Video Games Arun Kulshreshth Joseph J. LaViola Jr. Department of EECS Department of EECS University of Central Florida University of Central Florida 4000 Central Florida Blvd 4000 Central Florida Blvd Orlando, FL 32816, USA Orlando, FL 32816, USA [email protected] [email protected] ABSTRACT PlayStation Move, TrackIR 5) that support 3D spatial in- teraction have been implemented and made available to con- We present a study that investigates user performance ben- sumers. Head tracking is one example of an interaction tech- efits of using head tracking in modern video games. We nique, commonly used in the virtual and augmented reality explored four di↵erent carefully chosen commercial games communities [2, 7, 9], that has potential to be a useful ap- with tasks which can potentially benefit from head tracking. proach for controlling certain gaming tasks. Recent work on For each game, quantitative and qualitative measures were head tracking and video games has shown some potential taken to determine if users performed better and learned for this type of gaming interface. For example, Sko et al. faster in the experimental group (with head tracking) than [10] proposed a taxonomy of head gestures for first person in the control group (without head tracking). A game ex- shooter (FPS) games and showed that some of their tech- pertise pre-questionnaire was used to classify participants niques (peering, zooming, iron-sighting and spinning) are into casual and expert categories to analyze a possible im- useful in games. In addition, previous studies [13, 14] have pact on performance di↵erences. -

Game Engine Review

Game Engine Review Mr. Stuart Armstrong 12565 Research Parkway, Suite 350 Orlando FL, 32826 USA [email protected] ABSTRACT There has been a significant amount of interest around the use of Commercial Off The Shelf products to support military training and education. This paper compares a number of current game engines available on the market and assesses them against potential military simulation criteria. 1.0 GAMES IN DEFENSE “A game is a system in which players engage in an artificial conflict, defined by rules, which result in a quantifiable outcome.” The use of games for defence simulation can be broadly split into two categories – the use of game technologies to provide an immersive and flexible military training system and a “serious game” that uses game design principles (such as narrative and scoring) to deliver education and training content in a novel way. This talk and subsequent education notes focus on the use of game technologies, in particular game engines to support military training. 2.0 INTRODUCTION TO GAMES ENGINES “A games engine is a software suite designed to facilitate the production of computer games.” Developers use games engines to create games for games consoles, personal computers and growingly mobile devices. Games engines provide a flexible and reusable development toolkit with all the core functionality required to produce a game quickly and efficiently. Multiple games can be produced from the same games engine, for example Counter Strike Source, Half Life 2 and Left 4 Dead 2 are all created using the Source engine. Equally once created, the game source code can with little, if any modification be abstracted for different gaming platforms such as a Playstation, Personal Computer or Wii. -

Mobile Developer's Guide to the Galaxy

Don’t Panic MOBILE DEVELOPER’S GUIDE TO THE GALAXY U PD A TE D & EX TE ND 12th ED EDITION published by: Services and Tools for All Mobile Platforms Enough Software GmbH + Co. KG Sögestrasse 70 28195 Bremen Germany www.enough.de Please send your feedback, questions or sponsorship requests to: [email protected] Follow us on Twitter: @enoughsoftware 12th Edition February 2013 This Developer Guide is licensed under the Creative Commons Some Rights Reserved License. Editors: Marco Tabor (Enough Software) Julian Harty Izabella Balce Art Direction and Design by Andrej Balaz (Enough Software) Mobile Developer’s Guide Contents I Prologue 1 The Galaxy of Mobile: An Introduction 1 Topology: Form Factors and Usage Patterns 2 Star Formation: Creating a Mobile Service 6 The Universe of Mobile Operating Systems 12 About Time and Space 12 Lost in Space 14 Conceptional Design For Mobile 14 Capturing The Idea 16 Designing User Experience 22 Android 22 The Ecosystem 24 Prerequisites 25 Implementation 28 Testing 30 Building 30 Signing 31 Distribution 32 Monetization 34 BlackBerry Java Apps 34 The Ecosystem 35 Prerequisites 36 Implementation 38 Testing 39 Signing 39 Distribution 40 Learn More 42 BlackBerry 10 42 The Ecosystem 43 Development 51 Testing 51 Signing 52 Distribution 54 iOS 54 The Ecosystem 55 Technology Overview 57 Testing & Debugging 59 Learn More 62 Java ME (J2ME) 62 The Ecosystem 63 Prerequisites 64 Implementation 67 Testing 68 Porting 70 Signing 71 Distribution 72 Learn More 4 75 Windows Phone 75 The Ecosystem 76 Implementation 82 Testing -

PROGRAMS for LIBRARIES Alastore.Ala.Org

32 VIRTUAL, AUGMENTED, & MIXED REALITY PROGRAMS FOR LIBRARIES edited by ELLYSSA KROSKI CHICAGO | 2021 alastore.ala.org ELLYSSA KROSKI is the director of Information Technology and Marketing at the New York Law Institute as well as an award-winning editor and author of sixty books including Law Librarianship in the Age of AI for which she won AALL’s 2020 Joseph L. Andrews Legal Literature Award. She is a librarian, an adjunct faculty member at Drexel University and San Jose State University, and an international conference speaker. She received the 2017 Library Hi Tech Award from the ALA/LITA for her long-term contributions in the area of Library and Information Science technology and its application. She can be found at www.amazon.com/author/ellyssa. © 2021 by the American Library Association Extensive effort has gone into ensuring the reliability of the information in this book; however, the publisher makes no warranty, express or implied, with respect to the material contained herein. ISBNs 978-0-8389-4948-1 (paper) Library of Congress Cataloging-in-Publication Data Names: Kroski, Ellyssa, editor. Title: 32 virtual, augmented, and mixed reality programs for libraries / edited by Ellyssa Kroski. Other titles: Thirty-two virtual, augmented, and mixed reality programs for libraries Description: Chicago : ALA Editions, 2021. | Includes bibliographical references and index. | Summary: “Ranging from gaming activities utilizing VR headsets to augmented reality tours, exhibits, immersive experiences, and STEM educational programs, the program ideas in this guide include events for every size and type of academic, public, and school library” —Provided by publisher. Identifiers: LCCN 2021004662 | ISBN 9780838949481 (paperback) Subjects: LCSH: Virtual reality—Library applications—United States. -

Augmented Reality and Its Aspects: a Case Study for Heating Systems

Augmented Reality and its aspects: a case study for heating systems. Lucas Cavalcanti Viveiros Dissertation presented to the School of Technology and Management of Bragança to obtain a Master’s Degree in Information Systems. Under the double diploma course with the Federal Technological University of Paraná Work oriented by: Prof. Paulo Jorge Teixeira Matos Prof. Jorge Aikes Junior Bragança 2018-2019 ii Augmented Reality and its aspects: a case study for heating systems. Lucas Cavalcanti Viveiros Dissertation presented to the School of Technology and Management of Bragança to obtain a Master’s Degree in Information Systems. Under the double diploma course with the Federal Technological University of Paraná Work oriented by: Prof. Paulo Jorge Teixeira Matos Prof. Jorge Aikes Junior Bragança 2018-2019 iv Dedication I dedicate this work to my friends and my family, especially to my parents Tadeu José Viveiros and Vera Neide Cavalcanti, who have always supported me to continue my stud- ies, despite the physical distance has been a demand factor from the beginning of the studies by the change of state and country. v Acknowledgment First of all, I thank God for the opportunity. All the teachers who helped me throughout my journey. Especially, the mentors Paulo Matos and Jorge Aikes Junior, who not only provided the necessary support but also the opportunity to explore a recent area that is still under development. Moreover, the professors Paulo Leitão and Leonel Deusdado from CeDRI’s laboratory for allowing me to make use of the HoloLens device from Microsoft. vi Abstract Thanks to the advances of technology in various domains, and the mixing between real and virtual worlds. -

Advanced 3D Visualization for Simulation Using Game Technology

Proceedings of the 2011 Winter Simulation Conference S. Jain, R. R. Creasey, J. Himmelspach, K. P. White, and M. Fu, eds. ADVANCED 3D VISUALIZATION FOR SIMULATION USING GAME TECHNOLOGY Jonatan L. Bijl Csaba A. Boer TBA B.V. Karrepad 2a 2623 AP Delft, Netherlands ABSTRACT 3D visualization is becoming increasingly popular for discrete event simulation. However, the 3D visu- alization of many of the commercial off the shelf simulation packages is not up to date with the quickly developing area of computer graphics. In this paper we present an advanced 3D visualization tool, which uses game technology. The tool is especially fit for discrete event simulation, it is easily configurable, and it can be kept up to date with modern computer graphics techniques. The tool has been used in several container terminal simulation projects. From a survey under simulation experts and our experience with the visualization tool, we concluded that realism is important for some of the purposes of visualization, and the use of game technology can help to achieve this goal. 1 INTRODUCTION Simulation is the process of designing a model of a concrete system and conducting experiments with this model in order to understand the behavior of the concrete system and/or evaluate various strategies for the operation of the system (Shannon 1975). Although the simulation experiment can produce a large amount of data, the visualization of a simulated system provides a more complete understanding of its behavior. In the visualization, key elements of the system are represented on the screen by icons that dynamically change position, color and shape as the simulation evolves through time (Law and Kelton 2000). -

Building the Metaverse One Open Standard at a Time

Building the Metaverse One Open Standard at a Time Khronos APIs and 3D Asset Formats for XR Neil Trevett Khronos President NVIDIA VP Developer Ecosystems [email protected]| @neilt3d April 2020 © Khronos® Group 2020 This work is licensed under a Creative Commons Attribution 4.0 International License © The Khronos® Group Inc. 2020 - Page 1 Khronos Connects Software to Silicon Open interoperability standards to enable software to effectively harness the power of multiprocessors and accelerator silicon 3D graphics, XR, parallel programming, vision acceleration and machine learning Non-profit, member-driven standards-defining industry consortium Open to any interested company All Khronos standards are royalty-free Well-defined IP Framework protects participant’s intellectual property >150 Members ~ 40% US, 30% Europe, 30% Asia This work is licensed under a Creative Commons Attribution 4.0 International License © The Khronos® Group Inc. 2020 - Page 2 Khronos Active Initiatives 3D Graphics 3D Assets Portable XR Parallel Computation Desktop, Mobile, Web Authoring Augmented and Vision, Inferencing, Machine Embedded and Safety Critical and Delivery Virtual Reality Learning Guidelines for creating APIs to streamline system safety certification This work is licensed under a Creative Commons Attribution 4.0 International License © The Khronos® Group Inc. 2020 - Page 3 Pervasive Vulkan Desktop and Mobile GPUs http://vulkan.gpuinfo.org/ Platforms Apple Desktop Android (via porting Media Players Consoles (Android 7.0+) layers) (Vulkan 1.1 required on Android Q) Virtual Reality Cloud Services Game Streaming Embedded Engines Croteam Serious Engine Note: The version of Vulkan available will depend on platform and vendor This work is licensed under a Creative Commons Attribution 4.0 International License © The Khronos® Group Inc. -

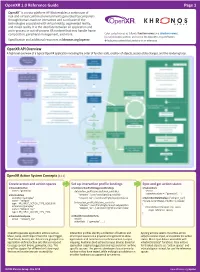

Openxr 1.0 Reference Guide Page 1

OpenXR 1.0 Reference Guide Page 1 OpenXR™ is a cross-platform API that enables a continuum of real-and-virtual combined environments generated by computers through human-machine interaction and is inclusive of the technologies associated with virtual reality, augmented reality, and mixed reality. It is the interface between an application and an in-process or out-of-process XR runtime that may handle frame composition, peripheral management, and more. Color-coded names as follows: function names and structure names. [n.n.n] Indicates sections and text in the OpenXR 1.0 specification. Specification and additional resources at khronos.org/openxr £ Indicates content that pertains to an extension. OpenXR API Overview A high level overview of a typical OpenXR application including the order of function calls, creation of objects, session state changes, and the rendering loop. OpenXR Action System Concepts [11.1] Create action and action spaces Set up interaction profile bindings Sync and get action states xrCreateActionSet xrSetInteractionProfileSuggestedBindings xrSyncActions name = "gameplay" /interaction_profiles/oculus/touch_controller session activeActionSets = { "gameplay", ...} xrCreateAction "teleport": /user/hand/right/input/a/click actionSet="gameplay" "teleport_ray": /user/hand/right/input/aim/pose xrGetActionStateBoolean ("teleport_ray") name = “teleport” if (state.currentState) // button is pressed /interaction_profiles/htc/vive_controller type = XR_INPUT_ACTION_TYPE_BOOLEAN { actionSet="gameplay" "teleport": /user/hand/right/input/trackpad/click -

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces

TECHNOLOGY AND CODE published: 04 June 2021 doi: 10.3389/frvir.2021.684498 Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces Florian Kern*, Peter Kullmann, Elisabeth Ganal, Kristof Korwisi, René Stingl, Florian Niebling and Marc Erich Latoschik Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany This article introduces the Off-The-Shelf Stylus (OTSS), a framework for 2D interaction (in 3D) as well as for handwriting and sketching with digital pen, ink, and paper on physically aligned virtual surfaces in Virtual, Augmented, and Mixed Reality (VR, AR, MR: XR for short). OTSS supports self-made XR styluses based on consumer-grade six-degrees-of-freedom XR controllers and commercially available styluses. The framework provides separate modules for three basic but vital features: 1) The stylus module provides stylus construction and calibration features. 2) The surface module provides surface calibration and visual feedback features for virtual-physical 2D surface alignment using our so-called 3ViSuAl procedure, and Edited by: surface interaction features. 3) The evaluation suite provides a comprehensive test bed Daniel Zielasko, combining technical measurements for precision, accuracy, and latency with extensive University of Trier, Germany usability evaluations including handwriting and sketching tasks based on established Reviewed by: visuomotor, graphomotor, and handwriting research. The framework’s development is Wolfgang Stuerzlinger, Simon Fraser University, Canada accompanied by an extensive open source reference implementation targeting the Unity Thammathip Piumsomboon, game engine using an Oculus Rift S headset and Oculus Touch controllers. The University of Canterbury, New Zealand development compares three low-cost and low-tech options to equip controllers with a *Correspondence: tip and includes a web browser-based surface providing support for interacting, Florian Kern fl[email protected] handwriting, and sketching. -

Operational Capabilities of a Six Degrees of Freedom Spacecraft Simulator

AIAA Guidance, Navigation, and Control (GNC) Conference AIAA 2013-5253 August 19-22, 2013, Boston, MA Operational Capabilities of a Six Degrees of Freedom Spacecraft Simulator K. Saulnier*, D. Pérez†, G. Tilton‡, D. Gallardo§, C. Shake**, R. Huang††, and R. Bevilacqua ‡‡ Rensselaer Polytechnic Institute, Troy, NY, 12180 This paper presents a novel six degrees of freedom ground-based experimental testbed, designed for testing new guidance, navigation, and control algorithms for nano-satellites. The development of innovative guidance, navigation and control methodologies is a necessary step in the advance of autonomous spacecraft. The testbed allows for testing these algorithms in a one-g laboratory environment, increasing system reliability while reducing development costs. The system stands out among the existing experimental platforms because all degrees of freedom of motion are dynamically reproduced. The hardware and software components of the testbed are detailed in the paper, as well as the motion tracking system used to perform its navigation. A Lyapunov-based strategy for closed loop control is used in hardware-in-the loop experiments to successfully demonstrate the system’s capabilities. Nomenclature ̅ = reference model dynamics matrix ̅ = control distribution matrix = moment arms of the thrusters = tracking error vector ̅ = thrust distribution matrix = mass of spacecraft ̅ = solution to the Lyapunov equation ̅ = selected matrix for the Lyapunov equation ̅ = rotation matrix from the body to the inertial frame = binary thrusts vector = thrust force of a single thruster = input to the linear reference model = ideal control vector = Lyapunov function = attitude vector = position vector Downloaded by Riccardo Bevilacqua on August 22, 2013 | http://arc.aiaa.org DOI: 10.2514/6.2013-5253 * Graduate Student, Department of Electrical, Computer, and Systems Engineering, 110 8th Street Troy NY JEC Room 1034. -

Global Interoperability in Telerobotics and Telemedicine

2010 IEEE International Conference on Robotics and Automation Anchorage Convention District May 3-8, 2010, Anchorage, Alaska, USA Plugfest 2009: Global Interoperability in Telerobotics and Telemedicine H. Hawkeye King1, Blake Hannaford1, Ka-Wai Kwok2, Guang-Zhong Yang2, Paul Griffiths3, Allison Okamura3, Ildar Farkhatdinov4, Jee-Hwan Ryu4, Ganesh Sankaranarayanan5, Venkata Arikatla 5, Kotaro Tadano6, Kenji Kawashima6, Angelika Peer7, Thomas Schauß7, Martin Buss7, Levi Miller8, Daniel Glozman8, Jacob Rosen8, Thomas Low9 1University of Washington, Seattle, WA, USA, 2Imperial College London, London, UK, 3Johns Hopkins University, Baltimore, MD, USA, 4Korea University of Technology and Education, Cheonan, Korea, 5Rensselaer Polytechnic Institute, Troy, NY, USA, 6Tokyo Institute of Technology, Yokohama, Japan, 7Technische Universitat¨ Munchen,¨ Munich, Germany, 8University of California, Santa Cruz, CA, USA, 9SRI International, Menlo Park, CA, USA Abstract— Despite the great diversity of teleoperator designs systems (e.g. [1], [2]) and network characterizations have and applications, their underlying control systems have many shown that under normal circumstances Internet can be used similarities. These similarities can be exploited to enable inter- for teleoperation with force feedback [3]. Fiorini and Oboe, operability between heterogeneous systems. We have developed a network data specification, the Interoperable Telerobotics for example carried out early studies in force-reflecting tele- Protocol, that can be used for Internet based control of a wide operation over the Internet [4]. Furthermore, long distance range of teleoperators. telesurgery has been demonstrated in successful operation In this work we test interoperable telerobotics on the using dedicated point-to-point network links [5], [6]. Mean- global Internet, focusing on the telesurgery application do- while, work by the Tachi Lab at the University of Tokyo main. -

Conference Booklet

30th Oct - 1st Nov CONFERENCE BOOKLET 1 2 3 INTRO REBOOT DEVELOP RED | 2019 y Always Outnumbered, Never Outgunned Warmest welcome to first ever Reboot Develop it! And we are here to stay. Our ambition through Red conference. Welcome to breathtaking Banff the next few years is to turn Reboot Develop National Park and welcome to iconic Fairmont Red not just in one the best and biggest annual Banff Springs. It all feels a bit like history repeating games industry and game developers conferences to me. When we were starting our European older in Canada and North America, but in the world! sister, Reboot Develop Blue conference, everybody We are committed to stay at this beautiful venue was full of doubts on why somebody would ever and in this incredible nature and astonishing choose a beautiful yet a bit remote place to host surroundings for the next few forthcoming years one of the biggest worldwide gatherings of the and make it THE annual key gathering spot of the international games industry. In the end, it turned international games industry. We will need all of into one of the biggest and highest-rated games your help and support on the way! industry conferences in the world. And here we are yet again at the beginning, in one of the most Thank you from the bottom of the heart for all beautiful and serene places on Earth, at one of the the support shown so far, and even more for the most unique and luxurious venues as well, and in forthcoming one! the company of some of the greatest minds that the games industry has to offer! _Damir Durovic