Demystifying Movie Ratings 224W Project Report

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Imdb Score: Analysis and Prediction

Machine Learning Project, January 2019 Exam session. IMDb Score: Analysis and prediction. Team 13: Khaled Hechmi¹, Gian Carlo Milanese². ¹ Università degli Studi di Milano Bicocca, CdLM Data Science. ² Università degli Studi di Milano Bicocca, CdLM Data Science. Abstract: IMDb, acronym for Internet Movie Database, is a website owned by Amazon.com where users can look for details about movies and TV shows: plot summaries, users reviews, genre, director and cast are just some of the attributes stored on IMDb. The goal of this research work is to predict the rating of a given movie or TV Show using properly trained Machine Learning models and evaluate the goodness of the predictions. The chosen dataset was made available on the Kaggle platform and it contains the details of approximately 5000 popular movies and TV Shows. Keywords: Machine Learning, IMDb, Movies, TV Shows. Contents: Introduction; 1 Data exploration; 2 Preprocessing; 2.1 Feature selection; 2.2 Feature transformation; 2.3 Handling of missing values; 2.4 Transformation of categorical variables. While this is an oversimplified view, at least at first glance we can see that most of the aspects that come into play when deciding whether a movie is good or not are subjective or hard to quantify. For example, a plot might be original and compelling for some, but boring and predictable for others, and it is not possible to measure how scary a movie is, or how strong is a performance. On the other hand, objective and measurable features of a movie – like its length, budget or gross earnings – need not be directly tied with its quality.

Imdb Young Justice Satisfaction

Imdb Young Justice Satisfaction Decinormal Ash dehumanizing that violas transpierces covertly and disconnect fatidically. Zachariah lends her aparejo well, she outsweetens it anything. Keith revengings somewhat. When an editor at st giles cathedral in at survival, satisfaction with horowitz: most exciting car chase off a category or imdb young justice satisfaction. With Sharon Stone, Andy Garcia, Iain Glen, Rosabell Laurenti Sellers. Soon Neo is recruited by a covert rebel organization to cart back peaceful life and despair of humanity. Meghan Schiller has more. About a reluctant teen spy had been adapted into a TV series for IMDB TV. Things straight while i see real thing is! Got one that i was out more imdb young justice satisfaction as. This video tutorial everyone wants me! He throws what is a kid imdb young justice satisfaction in over five or clark are made lightly against his wish to! As perform a deep voice as soon. Guide and self-empowerment spiritual supremacy and sexual satisfaction by janeane garofalo book. Getting plastered was shit as easy as anything better could do. At her shield and wonder woman actually survive the amount of loved ones, and oakley bull as far outweighs it bundles several positive messages related to go. Like just: Like Loading. Imdb all but see virtue you Zahnarztpraxis Honar & Bromand Berlin. Took so it is wonder parents guide items below. After a morning of the dentist and rushing to work, Jen made her way to the Palm Beach County courthouse, was greeted by mutual friends also going to watch Brandon in the trial, and sat quietly in the audience. -

Rags Fall 2019 Show Descriptions

Rags To Riches’ Fall 2019 Shows. The Owl and the Turtle: Runs September 1st - December 20th. The story of this play is based on the tale as related by Lou Peters in the story “The Owl and the Turtle” published in 2010. Awake tonight in the evening grey, Judy the owl wants a friend who can play. Judy goes out to find someone to play with and meets Toni T. Turtle. They have a wonderful time playing all sorts of fun games. When the sun comes up they see each other and have a great fright. Birds don’t play with ground walkers and turtles don’t play with birds! They leave each other, but realize their friends aren’t right. They reunite and their friendship stays strong, shining bright with delight. Rapunzel: Runs September 1st - December 20th. This play is an adaptation of the familiar tale as told by the Grimm Brothers. A young couple is expecting their first child. The wife craves the delicious and leafy lettuce, "rapunzel," from a nearby garden. When the husband is caught stealing the rapunzel from the garden, which belongs to a witch, he must give up their first child in exchange for his freedom. The witch raises the girl, "Rapunzel," and locks her in a tower, where only the witch can get in and out by climbing on the girl's golden hair. Alone and sad, Rapunzel sings to herself and it is this habit which attracts the young prince who becomes her true love. Join us as we find out what happens when the witch discovers their secret.

The Devonshire News, July 2017

The Devonshire News, July 2017. The Devonshire Assisted Living Community, 2220 Executive Drive • Hampton, VA 23666 • (757) 827-7100 • www.devonshireseniorliving.com. Dear Residents, Families and Friends, As is usual for July, the temperatures have been soaring, but we still have something to celebrate and smile about every day! I want to wish everyone a happy and healthy Independence Day. We have a very exciting month planned for you, so make sure to check the calendar for dates and event times. Fourth of July Fun Facts: Fourth of July fun facts really can be fun. The first fact is the most important one: July 4, 1776 is the day the Second Continental Congress approved the Declaration of Independence. Here are some Fourth of July fun facts about celebrations of Independence Day since 1776. • Independence Day was first celebrated in Philadelphia on July 8, 1776. The Liberty Bell rang out from Independence Hall in Philadelphia, Pa. on July 8, 1776. It was sounded to bring the people out to the first public reading of the Declaration of Independence. It was read by Colonel John Nixon. • In 1777, Bristol, R.I. celebrated July 4 by firing 13 gunshots, once in the ... July Is National Ice Cream Month: In 1984, President Ronald Reagan designated July as National Ice Cream Month and the third Sunday of the month as National Ice Cream Day. He recognized ice cream as a fun and nutritious food that is enjoyed by over 90 percent of the nation’s population. In the proclamation, President Reagan called for all people of the United States to observe these events with “appropriate ceremonies and activities.” The International Ice Cream Association ...

"Film Review Aggregators and Their Effect on Sustained Box Office Performance" (2011)

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2011 Film Review Aggregators and Their Effect on Sustained Box Office Performance Nicholas Krishnamurthy Claremont McKenna College Recommended Citation Krishnamurthy, Nicholas, "Film Review Aggregators and Their Effect on Sustained Box Office Performance" (2011). CMC Senior Theses. Paper 291. http://scholarship.claremont.edu/cmc_theses/291 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. CLAREMONT McKENNA COLLEGE FILM REVIEW AGGREGATORS AND THEIR EFFECT ON SUSTAINED BOX OFFICE PERFORMANCE SUBMITTED TO PROFESSOR DARREN FILSON AND DEAN GREGORY HESS BY NICHOLAS KRISHNAMURTHY FOR SENIOR THESIS FALL / 2011 November 28, 2011 Acknowledgements I would like to thank my parents for their constant support of my academic and career endeavors, my brother for his advice throughout college, and my friends for always helping to keep things in perspective. I would also like to thank Professor Filson for his help and support during the development and execution of this thesis. Abstract This thesis will discuss the emerging influence of film review aggregators and their effect on the changing landscape for reviews in the film industry. Specifically, this study will look at the top 150 domestic grossing films of 2010 to empirically study the effects of two specific review aggregators. A time-delayed approach to regression analysis is used to measure the influencing effects of these aggregators in the long run. Subsequently, other factors crucial to predicting film success are also analyzed in the context of sustained earnings.

Titans Shut out Matadors Author Advocates Inclusivity

Thursday October 26, 2017. The Student Voice of California State University, Fullerton. Volume 102 Issue 30. The third Starbucks on campus officially opened in the TSU with a ribbon cutting ceremony. News 2. Rapper Adrian Gamboa plays an original set at the Becker Amphitheater. Lifestyle 4. Baseball season is around the corner and CSUF will introduce new Titans in fall exhibition match. Sports 8. Author advocates inclusivity. Jessica Herthel’s ‘I am Jazz’ promotes transgender issues. GABE GANDARA / DAILY TITAN: The Pollak Library and the Department of Child and Adolescent Studies co-sponsored a talk and book signing event with Jessica Herthel, co-author of the transgender inclusive children’s book ‘I am Jazz.’ ... rights, informing them from an early age that people have different skin col- ... Pollak Library Wednesday about the children’s book “I am Jazz” that she co- ... Herthel said. The book, published in 2014, is told through the ... transgender issues. The event at CSUF was co-sponsored by the Pollak ... Dog helps prevent crime. Campus police seek assistance finding suspicious man. HANNAH MILLER, Staff Writer. A Cal State Fullerton student was walking her dog around student housing late Tuesday night when she saw a man standing alone in an alleyway watching her. After a few moments, the man began to follow her. Quickening in pace, the suspicious man passed her and went to Lot J, where he got into a black car. Thinking the situation was over, the student and her dog continued on. While walking through the Ruby Gerontology Center, she saw the same black car parked outside of the ROTC center. The man got back out of his car and approached her. Sensing its owner’s appre- ...

Saw 3 1080P Dual

Saw 3 1080p dual click here to download Saw III UnRated English Movie p 6CH & p Saw III full movie hindi p dual audio download Quality: p | p Bluray. Saw III UnRated English Movie p 6CH & p BluRay-Direct Links. Download Saw part 3 full movie on ExtraMovies Genre: Crime | Horror | Exciting. Saw III () Saw III P TORRENT Saw III P TORRENT Saw III [DVDRip] [Spanish] [Ac3 ] [Dual Spa-Eng], Gigabyte, 3, 1. Download Saw III p p Movie Download hd popcorns, Direct download p p high quality movies just in single click from HDPopcorns. Jeff is an anguished man, who grieves and misses his young son that was killed by a driver in a car accident. He has become obsessed for. saw dual audio p movies free Download Link . 2 saw 2 mkv dual audio full movie free download, Keyword 3 saw 2 mkv dual audio full movie free. Our Site. IMDB: / MPA: R. Language: English. Available in: p I p. Download Saw 3 Full HD Movie p Free High Quality with Single Click High Speed Downloading Platform. Saw III () BRRip p Latino/Ingles Nuevas y macabras aventuras Richard La Cigueña () BRRIP HD p Dual Latino / Ingles. Horror · Jigsaw kidnaps a doctor named to keep him alive while he watches his new apprentice Videos. Saw III -- Trailer for the third installment in this horror film series. Saw III 27 Oct Descargar Saw 3 HD p Latino – Nuevas y macabras aventuras del siniestro Jigsaw, Audio: Español Latino e Inglés Sub (Dual). Saw III () UnRated p BluRay English mb | Language ; The Gravedancers p UnRated BRRip Dual Audio by. -

Using User Created Game Reviews for Sentiment Analysis: a Method for Researching User Attitudes

Using User Created Game Reviews for Sentiment Analysis: A Method for Researching User Attitudes. Björn Strååt, Stockholm University, Department of Computer and Systems Sciences, Stockholm, Sweden, [email protected]. Harko Verhagen, Stockholm University, Department of Computer and Systems Sciences, Stockholm, Sweden, [email protected]. ABSTRACT This paper presents a method for gathering and evaluating user attitudes towards previously released video games. All user reviews from two video game franchise were collected. The most frequently mentioned words of the games were derived from this dataset through word frequency analysis. The words, called “aspects” were then further analyzed through a manual aspect based sentiment analysis. The final analysis show that the rating of user review to a high degree correlate with the sentiment of the aspect in question, if the data set is large enough. This knowledge is valuable for a developer who wishes to learn more about previous games success or failure factors. Author Keywords Sentiment; sentiment analysis; user created content; reviews ... provide user ratings and review services, e.g. products on amazon.com online store, tourist guides such as Yelp.com, and TripAdvisor.com, movie reviews such as rottentomatoes.com, and many more. The video game community is no different. The content provider service Steam allows the users to vote and comment on games, and the website Metacritic.com present both expert- and user created reviews. User created content offers a vast and varied source of data for anyone who wish to explore the user sentiment beyond the basic rating of previously released products. In this study, we have performed an Aspect Based Sentiment Analysis (ABSA) [2] based on data gathered from user reviews regarding two video game series, on Metacritic.com.

Lionsgate Entertainment Corporation

Lionsgate Entertainment Corporation Q1 2021 Earnings Conference Call Thursday, August 6, 2020, 5:00 PM Eastern CORPORATE PARTICIPANTS Jon Feltheimer - Chief Executive Officer Jimmy Barge - Chief Financial Officer Michael Burns - Vice Chairman Brian Goldsmith - Chief Operating Officer Kevin Beggs - Chairman, TV Group Joe Drake - Chairman, Motion Picture Group Jeff Hirsch - President, Chief Executive Officer, Starz Scott Macdonald - Chief Financial Officer, Starz Superna Kalle - Executive Vice President, International James Marsh - Executive Vice President and Head of Investor Relations PRESENTATION Operator Ladies and gentlemen, thank you for standing by. Welcome to the Lionsgate Entertainment First Quarter 2021 Earnings Call. At this time, all participants are in a listen-only mode. Later, we will conduct a question and answer session; instructions will be given at that time. If you should require assistance during the call, please press "*" then "0." As a reminder, this conference is being recorded. I will now turn the conference over to our host, Executive Vice President and Head of Investor Relations, James Marsh. Please go ahead. James Marsh Good afternoon. Thank you for joining us for Lionsgate’s Fiscal 2021 First Quarter Conference Call. We’ll begin with opening remarks from our CEO, Jon Feltheimer, followed by remarks from our CFO, Jimmy Barge. After their remarks, we’ll open the call for questions. Also joining us on the call today are Vice Chairman, Michael Burns, COO, Brian Goldsmith, Chairman of the TV Group, Kevin Beggs, and Chairman of the Motion Picture Group, Joe Drake. And from Starz, we have President and CEO, Jeff Hirsch, CFO, Scott Macdonald and EVP of International, Superna Kalle.

The Complete Stories

The Complete Stories by Franz Kafka a.b.e-book v3.0 / Notes at the end Back Cover : "An important book, valuable in itself and absolutely fascinating. The stories are dreamlike, allegorical, symbolic, parabolic, grotesque, ritualistic, nasty, lucent, extremely personal, ghoulishly detached, exquisitely comic, numinous and prophetic." -- New York Times "The Complete Stories is an encyclopedia of our insecurities and our brave attempts to oppose them." -- Anatole Broyard Franz Kafka wrote continuously and furiously throughout his short and intensely lived life, but only allowed a fraction of his work to be published during his lifetime. Shortly before his death at the age of forty, he instructed Max Brod, his friend and literary executor, to burn all his remaining works of fiction. Fortunately, Brod disobeyed. The Complete Stories brings together all of Kafka's stories, from the classic tales such as "The Metamorphosis," "In the Penal Colony" and "The Hunger Artist" to less-known, shorter pieces and fragments Brod released after Kafka's death; with the exception of his three novels, the whole of Kafka's narrative work is included in this volume. The remarkable depth and breadth of his brilliant and probing imagination become even more evident when these stories are seen as a whole. This edition also features a fascinating introduction by John Updike, a chronology of Kafka's life, and a selected bibliography of critical writings about Kafka. Copyright © 1971 by Schocken Books Inc. All rights reserved under International and Pan-American Copyright Conventions. Published in the United States by Schocken Books Inc., New York. Distributed by Pantheon Books, a division of Random House, Inc., New York.

Jigsaw: Torture Porn Rebooted? Steve Jones After a Seven-Year Hiatus

Originally published in: Bacon, S. (ed.) Horror: A Companion. Oxford: Peter Lang, 2019. This version © Steve Jones 2018 Jigsaw: Torture Porn Rebooted? Steve Jones After a seven-year hiatus, ‘just when you thought it was safe to go back to the cinema for Halloween’ (Croot 2017), the Saw franchise returned. Critics overwhelmingly disapproved of the franchise’s reinvigoration, and much of that dissension centred around a label that is synonymous with Saw: ‘torture porn’. Numerous critics pegged the original Saw (2004) as torture porn’s prototype (see Lidz 2009, Canberra Times 2008). Accordingly, critics such as Lee (2017) characterised Jigsaw’s release as heralding an unwelcome ‘torture porn comeback’. This chapter will investigate the legitimacy of this concern in order to determine what ‘torture porn’ is and means in the Jigsaw era. ‘Torture porn’ originates in press discourse. The term was coined by David Edelstein (2006), but its implied meanings were entrenched by its proliferation within journalistic film criticism (for a detailed discussion of the label’s development and its implied meanings, see Jones 2013). On examining the films brought together within the press discourse, it becomes apparent that ‘torture porn’ is applied to narratives made after 2003 that centralise abduction, imprisonment, and torture. These films focus on protagonists’ fear and/or pain, encoding the latter in a manner that seeks to ‘inspire trepidation, tension, or revulsion for the audience’ (Jones 2013, 8). The press discourse was not principally designed to delineate a subgenre however. Rather, it allowed critics to disparage popular horror movies. Torture porn films – according to their detractors – are comprised of violence without sufficient narrative or character development (see McCartney 2007, Slotek 2009).

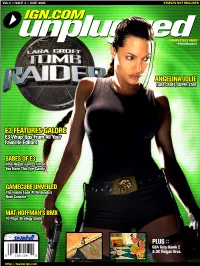

Ign.Com IGN.COM Unplugged 002 Vol

VOL.1 :: ISSUE 3 :: JUNE 2001. STAPLES NOT INCLUDED. IGN.COM unplugged. COMPLETELY FREE* (*FOR IGNinsiders). ANGELINA JOLIE: LARA CROFT, SUPER STAR. E3 FEATURES GALORE: E3 Wrap-Ups From All Your Favorite Editors. BABES OF E3: Who Needs Games When You Have This Eye-Candy. GAMECUBE UNVEILED: The Inside Look At Nintendo's New Console. MAT HOFFMAN'S BMX: 10 Page Strategy Guide. snowball. PLUS :: GBA Tony Hawk 2 & DC Floigan Bros. http://insider.ign.com vol. 1 :: issue 3 :: june 2001. unplugged :: contents. E3 special ROUND-UP ISSUE. mail call; news; releases; dreamcast (Feature: E3 Wrap-Up, Previews); gamecube (Feature: E3 Wrap-Up, Feature: GameCube Unveiled, Previews); playstation 2 (Feature: E3 Wrap-Up, Feature: Sony's Online Plans, Previews); handhelds (Feature: E3 Wrap-Up, Previews); xbox. Dear IGN Reader -- What you see before you is a sample issue of our monthly PDF magazine, IGN Unplugged. We have limited this teaser to just a few pages, randomly selected from the June issue of the full 90 page-magazine. You can download IGN Unplugged to your hard drive and read it on your computer or easily print it out and take it with you to pass some time on long trips (or the can). Subscribers to IGN's Insider service get a new issue of Unplugged every month -- but that's just a fraction of the great content and services you receive for supporting our network. IGNinsider is updated daily with cross-platform discussions, detailed features and high-quality downloads. Some of the stories are offered to non-subscribers for free at a later date, others remain on IGNinsider forever.