AI for Science

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Siriusxm-Schedule.Pdf

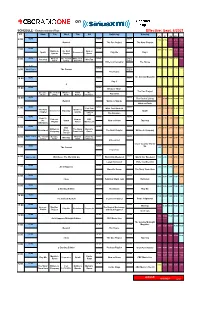

on SCHEDULE - Eastern Standard Time - Effective: Sept. 6/2021 ET Mon Tue Wed Thu Fri Saturday Sunday ATL ET CEN MTN PAC NEWS NEWS NEWS 6:00 7:00 6:00 5:00 4:00 3:00 Rewind The Doc Project The Next Chapter NEWS NEWS NEWS 7:00 8:00 7:00 6:00 5:00 4:00 Quirks & The Next Now or Spark Unreserved Play Me Day 6 Quarks Chapter Never NEWS What on The Cost of White Coat NEWS World 9:00 8:00 7:00 6:00 5:00 8:00 Pop Chat WireTap Earth Living Black Art Report Writers & Company The House 8:37 NEWS World 10:00 9:00 8:00 7:00 6:00 9:00 World Report The Current Report The House The Sunday Magazine 10:00 NEWS NEWS NEWS 11:00 10:00 9:00 8:00 7:00 Day 6 q NEWS NEWS NEWS 12:00 11:00 10:00 9:00 8:00 11:00 Because News The Doc Project Because The Cost of What on Front The Pop Chat News Living Earth Burner Debaters NEWS NEWS NEWS 1:00 12:00 The Cost of Living 12:00 11:00 10:00 9:00 Rewind Quirks & Quarks What on Earth NEWS NEWS NEWS 1:00 Pop Chat White Coat Black Art 2:00 1:00 12:00 11:00 10:00 The Next Quirks & Unreserved Tapestry Spark Chapter Quarks Laugh Out Loud The Debaters NEWS NEWS NEWS 2:00 Ideas in 3:00 2:00 1:00 12:00 11:00 Podcast Now or CBC the Spark Now or Never Tapestry Playlist Never Music Live Afternoon NEWS NEWS NEWS 3:00 CBC 4:00 3:00 2:00 1:00 12:00 Writers & The Story Marvin's Reclaimed Music The Next Chapter Writers & Company Company From Here Room Top 20 World This Hr The Cost of Because What on Under the NEWS NEWS 4:00 WireTap 5:00 4:00 3:00 2:00 1:00 Living News Earth Influence Unreserved Cross Country Check- NEWS NEWS Up 5:00 The Current -

Foundations of Computational Science August 29-30, 2019

Foundations of Computational Science August 29-30, 2019 Thursday, August 29 Time Speaker Title/Abstract 8:30 - 9:30am Breakfast 9:30 - 9:40am Opening 9:30 - 10:05am Tat Seng Chua Explainable AI and Multimedia Research Abstract: AI as a concept has been around since the 1950s. With the recent advancements in machine learning algorithms, and the availability of big data and large computing resources, the scene is set for AI to be used in many more systems and applications which will profoundly impact society. The current deep learning based AI systems are mostly in black box form and are often non-explainable. Though they have high performance, they are also known to make occasional fatal mistakes. This has limited the applications of AI, especially in mission critical applications. In this talk, I will present the current state of the art in explainable AI, which holds promise for helping humans better understand and interpret the decisions made by the black-box AI models. This is followed by our preliminary research on explainable recommendation, relation inference in videos, as well as leveraging prior domain knowledge, information theoretic principles, and adversarial algorithms to achieve an explainable framework. I will also discuss future research towards quality, fairness and robustness of explainable AI. 10:05 - 10:35am Group Photo and Coffee Break 10:35 - 10:50am Maosong Sun Deep Learning-based Chinese Language Computation at Tsinghua University: Progress and Challenges 10:50 - 11:05am Minlie Huang Controllable Text Generation 11:05 - 11:20am Peng Cui Stable Learning: The Convergence of Causal Inference and Machine Learning 11:20 - 11:45am Yike Guo Data Efficiency in Machine Learning Deep Approximation via Deep Learning Abstract: The primary task of many applications is approximating/estimating a function through samples drawn from a probability distribution on the input space. -

Surnames 198

Surnames 198 P PACQUIN PAGONE PALCISCO PACUCH PAHACH PALEK PAAHANA PACY PAHEL PALENIK PAAR PADASAK PAHUSZKI PALERMO PAASSARELLI PADDOCK PAHUTSKY PALESCH PABALAN PADELL PAINE PALGUTA PABLIK PADGETT PAINTER PALI PABRAZINSKY PADLO PAIRSON PALILLA PABST PADUNCIC PAISELL PALINA PACCONI PAESANI PAJAK PALINO PACE PAESANO PAJEWSKI PALINSKI PACEK PAFFRATH PAKALA PALKO PACELLI PAGANI PAKOS PALL PACEY PAGANO PALACE PALLO PACHARKA PAGDEN PALADINO PALLONE PACIFIC PAGE PALAGGO PALLOSKY PACILLA PAGLARINI PALAIC PALLOTTINI PACINI PAGLIARINI PALANIK PALLOZZI PACK PAGLIARNI PALANKEY PALM PACKARD PAGLIARO PALANKI PALMA PACKER PAGLIARULO PALAZZONE PALMER PACNUCCI PAGLIASOTTI PALCHESKO PALMERO PACOLT PAGO PALCIC PALMERRI Historical & Genealogical Society of Indiana County 1/21/2013 Surnames 199 PALMIERI PANCIERRA PAOLO PARDUS PALMISANO PANCOAST PAONE PARE PALMISCIANO PANCZAK PAPAKIE PARENTE PALMISCNO PANDAL PAPCIAK PARENTI PALMO PANDULLO PAPE PARETTI PALOMBO PANE PAPIK PARETTO PALONE PANGALLO PAPOVICH PARFITT PALSGROVE PANGBURN PAPPAL PARHAM PALUCH PANGONIS PAPSON PARILLO PALUCHAK PANIALE PAPUGA PARIS PALUDA PANKOVICH PAPURELLO PARISE PALUGA PANKRATZ PARADA PARISEY PALUGNACK PANNACHIA PARANA PARISH PALUMBO PANNEBAKER PARANIC PARISI PALUS PANONE PARAPOT PARISO PALUSKA PANOSKY PARATTO PARIZACK PALYA PANTALL PARCELL PARK PAMPE PANTALONE PARCHINSKY PARKE PANAIA PANTANI PARCHUKE PARKER PANASCI PANTANO PARDEE PARKES PANASKI PANTZER PARDINI PARKHILL PANCHICK PANZY PARDO PARKHURST PANCHIK PAOLINELLIE PARDOE PARKIN Historical & Genealogical Society of Indiana County -

Data Science at OLCF

Data Science at OLCF

Bronson Messer, Scientific Computing Group, Oak Ridge Leadership Computing Facility, Oak Ridge National Laboratory

Mallikarjam “Arjun” Shankar, Group Leader – Advanced Data and Workflows Group, Oak Ridge Leadership Computing Facility, Oak Ridge National Laboratory

ORNL is managed by UT-Battelle, LLC for the US Department of Energy

OLCF Data/Learning Strategy & Tactics

1. Engage with applications
   - Summit Early Science Applications (e.g., CANDLE)
   - INCITE projects (e.g., Co-evolutionary Networks: From Genome to 3D Proteome, Jacobson, et al.)
   - Directors Discretionary projects (e.g., Fusion RNN, MiNerva)
2. Create leadership-class analytics capabilities
   - Leadership analytics (e.g., Frameworks: pbdR, TensorFlow + Horovod)
   - Algorithms requiring scale (e.g., non-negative matrix factorization)
3. Enable infrastructure for analytics/AI and data-intensive facilities
   - Workflows to include data from observations for analysis within OLCF
   - Analytics enabling technologies (e.g., container deployments for rapidly changing DL/ML frameworks, analytics notebooks, etc.)

Data Science at the OLCF: Applications Supported through DD/ALCC. Selected Machine Learning Projects on Titan: 2016-2017

| Program | PI | PI Employer | Project Name | Allocation (Titan core-hrs) |
| --- | --- | --- | --- | --- |
| ALCC | Robert Patton | ORNL | Discovering Optimal Deep Learning and Neuromorphic Network Structures using Evolutionary Approaches on High Performance Computers | 75,000,000 |
| ALCC | Gabriel Perdue | FNAL | Large scale deep neural network optimization for neutrino physics | 58,000,000 |
| ALCC | Gregory Laskowski | GE | High-Fidelity Simulations of Gas Turbine Stages for Model Development using Machine Learning | 30,000,000 |
| ALCC | Efthimions Kaxiras | Harvard U. | High-Throughput Screening and Machine Learning for Predicting Catalyst Structure and Designing Effective Catalysts | 17,500,000 |
| ALCC | Georgia Tourassi | ORNL | CANDLE Treatment Strategy Challenge for Deep Learning Enabled Cancer Surveillance | 10,000,000 |
| DD | Abhinav Vishnu | PNNL | Machine Learning on Extreme Scale GPU systems | 3,500,000 |

DD J. -

Computational Science and Engineering

Computational Science and Engineering, PhD College of Engineering Graduate Coordinator: TBA Email: Phone: Department Chair: Marwan Bikdash Email: [email protected] Phone: 336-334-7437 The PhD in Computational Science and Engineering (CSE) is an interdisciplinary graduate program designed for students who seek to use advanced computational methods to solve large problems in diverse fields ranging from the basic sciences (physics, chemistry, mathematics, etc.) to sociology, biology, engineering, and economics. The mission of Computational Science and Engineering is to graduate professionals who (a) have expertise in developing novel computational methodologies and products, and/or (b) have extended their expertise in specific disciplines (in science, technology, engineering, and socioeconomics) with computational tools. The Ph.D. program is designed for students with graduate and undergraduate degrees in a variety of fields including engineering, chemistry, physics, mathematics, computer science, and economics who will be trained to develop problem-solving methodologies and computational tools for solving challenging problems. Research in Computational Science and Engineering includes: computational system theory, big data and computational statistics, high-performance computing and scientific visualization, multi-scale and multi-physics modeling, computational solid, fluid and nonlinear dynamics, computational geometry, fast and scalable algorithms, computational civil engineering, bioinformatics and computational biology, and computational physics. Additional Admission Requirements Master of Science or Engineering degree in Computational Science and Engineering (CSE) or in science, engineering, business, economics, technology or in a field allied to computational science or computational engineering field. 
GRE scores Program Outcomes: Students will demonstrate critical thinking and ability in conducting research in engineering, science and mathematics through computational modeling and simulations. -

Sophie Voisin

Sophie Voisin Contact Information Geographic Information Science & Technology, Oak Ridge National Laboratory, Oak Ridge, TN 37831-6017 Office: (865) 574-8235 E-mail: [email protected] Citizenship: France, US Permanent Resident Biosketch Dr. Sophie Voisin received her PhD degree in Computer Science and Image Processing from the University of Burgundy, France, in 2008. She was a visiting scholar with the Imaging, Robotics, and Intelligent Systems Laboratory at The University of Tennessee from October 2004 to December 2008 to work on her PhD research, and subsequently was involved in program development activities. In September 2010, she joined the Oak Ridge National Laboratory (ORNL) as a Postdoctoral Research Associate to support various efforts related to applied signal processing, 2D and 3D image understanding, and high performance computing. First, she worked at the ORNL Spallation Neutron Source, performing quantitative analysis of neutron image data for various industrial and academic applications related to quality control, process monitoring, and retrieving the structure of objects. Then, she joined the ORNL Biomedical Science & Engineering Center to develop, on the one hand, image processing algorithms for eyegaze data analysis and, on the other hand, text processing techniques for social media data mining to correlate individuals' health history and their geographical exposure. Notably, she was part of the team that received an R&D 100 award for the development of a personalized computer-aided diagnostic system relying on eyegaze analysis for decision making. Since April 2014, she has been working for the Geographic Information Science & Technology group. Her research focuses on developing multispectral image processing algorithms for CPU and GPU platforms for high performance computing of satellite imagery. -

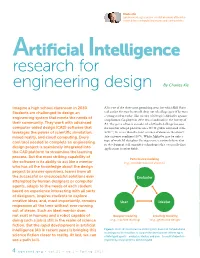

Artificial Intelligence Research For

Artificial Intelligence research for engineering design By Charles Xie Charles Xie ([email protected]) is a senior scientist who works at the intersection between computational science and learning science. Imagine a high school classroom in 2030. Students are challenged to design an engineering system that meets the needs of their community. They work with advanced computer-aided design (CAD) software that leverages the power of scientific simulation, mixed reality, and cloud computing. Every cool tool needed to complete an engineering design project is seamlessly integrated into the CAD platform to streamline the learning process. But the most striking capability of the software is its ability to act like a mentor who has all the knowledge about the design project to answer questions, learns from all the successful or unsuccessful solutions ever attempted by human designers or computer agents, adapts to the needs of each student based on experience interacting with all sorts of designers, inspires students to explore creative ideas, and, most importantly, remains responsive all the time without ever running out of steam. AI is one of the three most promising areas for which Bill Gates said earlier this year he would drop out of college again if he were a young student today. The victory of Google’s AlphaGo against a top human Go player in 2016 was a landmark in the history of AI. The game of Go is considered a difficult challenge because the number of legal positions on a 19×19 grid is estimated to be 2×10^170, far more than the total number of atoms in the observable universe combined (10^82). While AlphaGo may be only a type of weak AI that plays Go at present, scientists believe that its development will engender technologies that eventually find applications in other fields. [Figure labels: Performance modeling (e.g., simulation-based analysis); Evaluator; User; Ideator] -

Spreading Excellence Report

HIGH PERFORMANCE AND EMBEDDED ARCHITECTURE AND COMPILATION Project Acronym: HiPEAC Project full title: High Performance and Embedded Architecture and Compilation Grant agreement no: ICT-217068 DELIVERABLE 3.1 SPREADING EXCELLENCE REPORT 15/04/2009 Contents: 1. Summary on Spreading Excellence; 2. Task 3.1: Conference; 2.1. HiPEAC 2008 Conference, Goteborg; 2.2. HiPEAC 2009 Conference, Paphos; 2.3. Conference Ranking; 3. Task 3.2: Summer School; 3.1. 4th International Summer School – 2008; 3.2. 5th International Summer School – 2009; 4. Task 3.3: HiPEAC Journal; 5. Task 3.4: HiPEAC Roadmap; 6. Task 3.5: HiPEAC Newsletter -

The Institutional Challenges of Cyberinfrastructure and E-Research

E-Research and E-Scholarship: The Institutional Challenges of Cyberinfrastructure and E-Research By Clifford Lynch Scholarly practices across an astoundingly wide range of disciplines have become profoundly and irrevocably changed by the application of advanced information technology. This collection of new and emergent scholarly practices was first widely recognized in the science and engineering disciplines. In the late 1990s, the term e-science (or occasionally, particularly in Asia, cyber-science) began to be used as a shorthand for these new methods and approaches. The United Kingdom launched its formal e-science program in 2001.[1] In the United States, a multi-year inquiry, having its roots in supercomputing support for the portfolio of science and engineering disciplines funded by the National Science Foundation, culminated in the production of the “Atkins Report” in 2003, though there was considerable delay before NSF began to act programmatically on the report.[2] The quantitative social sciences (which are largely part of NSF’s funding purview and which have long traditions of data curation and sharing, as well as the use of high-end statistical computation) received more detailed examination in a 2005 NSF report.[3] Key leaders in the humanities and qualitative social sciences recognized that IT-driven innovation in those disciplines was also well advanced, though less uniformly adopted (and indeed sometimes controversial). In fact, the humanities continue to showcase some of the most creative and transformative examples of the use of information technology to create new scholarship.[4] Based on this recognition of the immense disciplinary scope of the impact of information technology, the more inclusive term e-research (occasionally, e-scholarship) has come into common use, at least in North America and Europe. -

A Compiler-Compiler for DSL Embedding

A Compiler-Compiler for DSL Embedding Amir Shaikhha (EPFL, Switzerland, {amir.shaikhha}@epfl.ch), Vojin Jovanovic (Oracle Labs, {vojin.jovanovic}@oracle.com), Christoph Koch (EPFL, Switzerland, {christoph.koch}@epfl.ch) Abstract In this paper, we present a framework to generate compilers for embedded domain-specific languages (EDSLs). This framework provides facilities to automatically generate the boilerplate code required for building DSL compilers on top of extensible optimizing compilers. We evaluate the practicality of our framework by demonstrating several use-cases successfully built with it. CCS Concepts • Software and its engineering → Software performance; Compilers; Keywords Domain-Specific Languages, Compiler-Compiler, Language Embedding 1 Introduction Everything that happens once can never happen again. [...] (EDSLs) [14] in the Scala programming language. DSL developers define a DSL as a normal library in Scala. This plain Scala implementation can be used for debugging purposes without worrying about the performance aspects (handled separately by the DSL compiler). Alchemy provides a customizable set of annotations for encoding the domain knowledge in the optimizing compilation frameworks. A DSL developer annotates the DSL library, from which Alchemy generates a DSL compiler that is built on top of an extensible optimizing compiler. As opposed to the existing compiler-compilers and language workbenches, Alchemy does not need a new meta-language for defining a DSL; instead, Alchemy uses the reflection capabilities of Scala to treat the plain Scala code of the DSL library as the language specification. A compiler expert can customize the behavior of the predefined set of annotations based on the features provided by -

Podcast List

ACS Chemical Biology Materials Today - Podcasts Africa in Progress Meatball's Meatballs - Juicy and spicy audio meatballs. All Things That Fly Medically Speaking Podcast Annals of Internal Medicine Podcast MedPod101 | Learn Medicine APM: Marketplace Money MicrobeWorld's Meet the Scientist Podcast Are We Alone - where science isn't alien. MIT Press Podcast Asunto del dia en R5 NeuroPod Bandwidth, CBC Radio New England Journal of Medicine Bath University Podcast Directory NIH Podcasts and Videocasts Berkman Center NPR: Planet Money Podcast Beyond the Book NPR Topics: Technology Podcast Bibliotech NW Spanish News - NHK World Radio Japan Books and Ideas Podcast On the Media Brain Science Podcast One Planet Bridges with Africa Out of Their Minds, CBC radio feed Brookings Institute Persiflagers Infectious Disease Puscasts CBS News: Larry Magrid's Tech Report Podnutz - Computer Repair Podcast Chemistry World Podcast PRI: Selected Shorts Podcast Conversations in Medicine PRI's The World: Technology from BBC/PRI/WGBH Conversations Network QuackCast CyberSpeak's Podcast Quirks & Quarks Complete Show from CBC Radio Diffusion Science radio Regenerative Medicine Today - Podcast Digital Planet Science Elements Distillations Science in Action Documentaries, BBC Science Magazine Podcast Duke Office Hours Sciencepodcasters.org Earth Beat Security Now! EconTalk Society for General Microbiology Podcast Engines Of Our Ingenuity Podcast Sound Investing Escape Pod South Asia Wired ESRI Speakers Podcast Spark Plus from CBC Radio FORA.tv Technology Today Stanford's -

Cyberinfrastructure for High Energy Physics in Korea

17th International Conference on Computing in High Energy and Nuclear Physics (CHEP09) IOP Publishing Journal of Physics: Conference Series 219 (2010) 072032 doi:10.1088/1742-6596/219/7/072032 Cyberinfrastructure for High Energy Physics in Korea Kihyeon Cho, Hyunwoo Kim and Minho Jeung High Energy Physics Team Korea Institute of Science and Technology Information, Daejeon 305-806, Korea E-mail: [email protected] Abstract. We introduce the hierarchy of cyberinfrastructure, which consists of infrastructure (supercomputing and networks), Grid, e-Science, community and physics from bottom layer to top layer. KISTI is the national headquarters of supercomputer, network, Grid and e-Science in Korea. Therefore, KISTI is the best place for high energy physicists to use cyberinfrastructure. We explain this concept on the CDF and the ALICE experiments. In the meantime, the goal of e-Science is to study high energy physics anytime and anywhere even if we are not on-site at accelerator laboratories. The components are data production, data processing and data analysis. The data production is to take both on-line and off-line shifts remotely. The data processing is to run jobs anytime, anywhere using Grid farms. The data analysis is to work together to publish papers using a collaborative environment such as the EVO (Enabling Virtual Organization) system. We also present the global community activities of FKPPL (France-Korea Particle Physics Laboratory) and physics as top layer. 1. Introduction We report our experiences and results relating to the utilization of cyberinfrastructure in high energy physics. According to the Wiki webpage on “cyberinfrastructure,” the term “cyberinfrastructure” describes the new research environments that support advanced data acquisition, storage, management, integration, mining, and visualization, as well as other computing and information processing services over the Internet.