Course on the GPFS Parallel Distributed File System

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

GlobalFS: A Strongly Consistent Multi-Site File System

GlobalFS: A Strongly Consistent Multi-Site File System. Leandro Pacheco (University of Lugano), Raluca Halalai (University of Neuchâtel), Valerio Schiavoni (University of Neuchâtel), Fernando Pedone (University of Lugano), Etienne Rivière (University of Neuchâtel), Pascal Felber (University of Neuchâtel).

Abstract: This paper introduces GlobalFS, a POSIX-compliant geographically distributed file system. GlobalFS builds on two fundamental building blocks, an atomic multicast group communication abstraction and multiple instances of a single-site data store. We define four execution modes and show how all file system operations can be implemented with these modes while ensuring strong consistency and tolerating failures. We describe the GlobalFS prototype in detail and report on an extensive performance assessment. We have deployed GlobalFS across all EC2 regions and show that the system scales geographically, providing performance comparable to other state-of-the-art distributed file systems for local commands and allowing for strongly consistent operations over the whole system.

... consistency, availability, and tolerance to partitions. Our goal is to ensure strongly consistent file system operations despite node failures, at the price of possibly reduced availability in the event of a network partition. Weak consistency is suitable for domain-specific applications where programmers can anticipate and provide resolution methods for conflicts, or work with last-writer-wins resolution methods. Our rationale is that for general-purpose services such as a file system, strong consistency is more appropriate as it is both more intuitive for the users and does not require human intervention in case of conflicts. Strong consistency requires ordering commands across replicas, which needs coordination among nodes at geographically distributed sites (i.e., regions). Designing strongly consistent distributed systems that provide good ...

Big Data Storage Workload Characterization, Modeling and Synthetic Generation

Big Data Storage Workload Characterization, Modeling and Synthetic Generation, by Cristina Lucia Abad. Dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate College of the University of Illinois at Urbana-Champaign, 2014. Urbana, Illinois. Doctoral Committee: Professor Roy H. Campbell (Chair), Professor Klara Nahrstedt, Associate Professor Indranil Gupta, Assistant Professor Yi Lu, Dr. Ludmila Cherkasova (HP Labs).

Abstract: A huge increase in data storage and processing requirements has led to Big Data, for which next generation storage systems are being designed and implemented. As Big Data stresses the storage layer in new ways, a better understanding of these workloads and the availability of flexible workload generators are increasingly important to facilitate the proper design and performance tuning of storage subsystems like data replication, metadata management, and caching. Our hypothesis is that the autonomic modeling of Big Data storage system workloads through a combination of measurement, and statistical and machine learning techniques is feasible, novel, and useful. We consider the case of one common type of Big Data storage cluster: a cluster dedicated to supporting a mix of MapReduce jobs. We analyze 6-month traces from two large clusters at Yahoo and identify interesting properties of the workloads. We present a novel model for capturing popularity and short-term temporal correlations in object request streams, and show how unsupervised statistical clustering can be used to enable autonomic type-aware workload generation that is suitable for emerging workloads. We extend this model to include other relevant properties of storage systems (file creation and deletion, pre-existing namespaces and hierarchical namespaces) and use the extended model to implement MimesisBench, a realistic namespace metadata benchmark for next-generation storage systems.

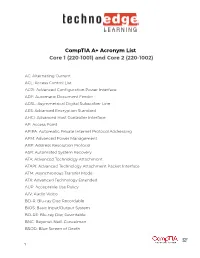

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002). AC: Alternating Current; ACL: Access Control List; ACPI: Advanced Configuration Power Interface; ADF: Automatic Document Feeder; ADSL: Asymmetrical Digital Subscriber Line; AES: Advanced Encryption Standard; AHCI: Advanced Host Controller Interface; AP: Access Point; APIPA: Automatic Private Internet Protocol Addressing; APM: Advanced Power Management; ARP: Address Resolution Protocol; ASR: Automated System Recovery; ATA: Advanced Technology Attachment; ATAPI: Advanced Technology Attachment Packet Interface; ATM: Asynchronous Transfer Mode; ATX: Advanced Technology Extended; AUP: Acceptable Use Policy; A/V: Audio Video; BD-R: Blu-ray Disc Recordable; BIOS: Basic Input/Output System; BD-RE: Blu-ray Disc Rewritable; BNC: Bayonet-Neill-Concelman; BSOD: Blue Screen of Death; BYOD: Bring Your Own Device; CAD: Computer-Aided Design; CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart; CD: Compact Disc; CD-ROM: Compact Disc-Read-Only Memory; CD-RW: Compact Disc-Rewritable; CDFS: Compact Disc File System; CERT: Computer Emergency Response Team; CFS: Central File System, Common File System, or Command File System; CGA: Computer Graphics and Applications; CIDR: Classless Inter-Domain Routing; CIFS: Common Internet File System; CMOS: Complementary Metal-Oxide Semiconductor; CNR: Communications and Networking Riser; COMx: Communication port (x = port number); CPU: Central Processing Unit; CRT: Cathode-Ray Tube; DaaS: Data as a Service; DAC: Discretionary Access Control; DB-25: Serial Communications

A Decentralized Cloud Storage Network Framework

Storj: A Decentralized Cloud Storage Network Framework. Storj Labs, Inc. October 30, 2018, v3.0. https://github.com/storj/whitepaper

Copyright © 2018 Storj Labs, Inc. and Subsidiaries. This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 license (CC BY-SA 3.0). All product names, logos, and brands used or cited in this document are property of their respective owners. All company, product, and service names used herein are for identification purposes only. Use of these names, logos, and brands does not imply endorsement.

Contents: 0.1 Abstract; 0.2 Contributors. 1 Introduction. 2 Storj design constraints: 2.1 Security and privacy; 2.2 Decentralization; 2.3 Marketplace and economics; 2.4 Amazon S3 compatibility; 2.5 Durability, device failure, and churn; 2.6 Latency; 2.7 Bandwidth; 2.8 Object size; 2.9 Byzantine fault tolerance; 2.10 Coordination avoidance. 3 Framework: 3.1 Framework overview; 3.2 Storage nodes; 3.3 Peer-to-peer communication and discovery; 3.4 Redundancy; 3.5 Metadata; 3.6 Encryption; 3.7 Audits and reputation; 3.8 Data repair; 3.9 Payments. 4 Concrete implementation: 4.1 Definitions; 4.2 Peer classes; 4.3 Storage node; 4.4 Node identity; 4.5 Peer-to-peer communication; 4.6 Node discovery; 4.7 Redundancy; 4.8 Structured file storage; 4.9 Metadata; 4.10 Satellite; 4.11 Encryption; 4.12 Authorization; 4.13 Audits; 4.14 Data repair; 4.15 Storage node reputation; 4.16 Payments; 4.17 Bandwidth allocation; 4.18 Satellite reputation; 4.19 Garbage collection; 4.20 Uplink; 4.21 Quality control and branding. 5 Walkthroughs ...

HP StorageWorks Clustered File System Command Line Reference

HP StorageWorks Clustered File System 3.0 Command Line Reference Guide. Part number: 392372–001. First edition: May 2005.

Legal and notice information: © Copyright 1999-2005 PolyServe, Inc. Portions © 2005 Hewlett-Packard Development Company, L.P. Neither PolyServe, Inc. nor Hewlett-Packard Company makes any warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Neither PolyServe nor Hewlett-Packard shall be liable for errors contained herein or for incidental or consequential damages in connection with the furnishing, performance, or use of this material. This document contains proprietary information, which is protected by copyright. No part of this document may be photocopied, reproduced, or translated into another language without the prior written consent of Hewlett-Packard. The information is provided “as is” without warranty of any kind and is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Neither PolyServe nor HP shall be liable for technical or editorial errors or omissions contained herein. The software this document describes is PolyServe confidential and proprietary. PolyServe and the PolyServe logo are trademarks of PolyServe, Inc. PolyServe Matrix Server contains software covered by the following copyrights and subject to the licenses included in the file thirdpartylicense.pdf, which is included in the PolyServe Matrix Server distribution. Copyright © 1999-2004, The Apache Software Foundation. Copyright © 1992, 1993 Simmule Turner and Rich Salz.

Winter 2004 ISSN 1741-4229

IRMA: Information Risk Management & Audit Journal. A Specialist Group of the BCS. Volume 14, number 5, winter 2004. ISSN 1741-4229.

Programme for members’ meetings 2004 – 2005:
Tuesday 7 September 2004, 16:00 for 16:30 (late afternoon): Computer Audit Basics 2: Auditing the Infrastructure and Operations. KPMG.
Thursday 7 October 2004, 10:00 to 16:00 (full day): Regulatory issues affecting IT in the Financial Industry. Old Sessions House.
Tuesday 16 November 2004, 10:00 to 16:00 (full day): Networks Attacks – quantifying and dealing with future threats. Chartered Accountants Hall.
Tuesday 18 January 2005, 16:00 for 16:30 (late afternoon): Database Security. KPMG.
Tuesday 15 March 2005, 10:00 to 16:00 (full day): IT Governance. BCS – The Davidson Building, 5 Southampton Street, London WC2 7HA.
Tuesday 17 May 2005, 16:00 for 16:30 (late afternoon, preceded by IRMA AGM): Computer Audit Basics 3: CAATS. KPMG.

Please note that these are provisional details and are subject to change. The late afternoon meetings are free of charge to members. For full day briefings a modest, very competitive charge is made to cover both lunch and a full printed delegate’s pack. For venue maps see back cover.

Contents of the Journal: Technical Briefings (front cover); Editorial, John Mitchell; The Down Under Column, Bob Ashton; Members’ Benefits; Creating and Using Issue Analysis Memos, Greg Krehel; Computer Forensics Science – Part II, Celeste Rush; Membership Application; Management Committee; Advertising in the Journal; IRMA Venues Map.

Guidelines for Potential Authors: The Journal publishes various types of article.

Shared File Systems: Determining the Best Choice for Your Distributed SAS® Foundation Applications Margaret Crevar, SAS Institute Inc., Cary, NC

Paper SAS569-2017. Shared File Systems: Determining the Best Choice for your Distributed SAS® Foundation Applications. Margaret Crevar, SAS Institute Inc., Cary, NC.

ABSTRACT: If you are planning on deploying SAS® Grid Manager and SAS® Enterprise BI (or other distributed SAS® Foundation applications) with load balanced servers on multiple operating system instances, a shared file system is required. In order to determine the best shared file system choice for a given deployment, it is important to understand how the file system is used, the SAS® I/O workload characteristics performed on it, and the stressors that SAS Foundation applications produce on the file system. For the purposes of this paper, we use the term "shared file system" to mean both a clustered file system and shared file system, even though "shared" can denote a network file system and a distributed file system – not clustered.

INTRODUCTION: This paper examines the shared file systems that are most commonly used with SAS and reviews their strengths and weaknesses.

SAS GRID COMPUTING REQUIREMENTS FOR SHARED FILE SYSTEMS: Before we get into the reasons why a shared file system is needed for SAS® Grid Computing, let’s briefly discuss the SAS I/O characteristics.

GENERAL SAS I/O CHARACTERISTICS: SAS Foundation creates a high volume of predominately large-block, sequential access I/O, generally at block sizes of 64K, 128K, or 256K, and the interactions with data storage are significantly different from typical interactive applications and RDBMSs. Here are some major points to understand (more details about the bullets below can be found in this paper): SAS tends to perform large sequential Reads and Writes.

Disk Management (4 Min) When We Want to Install a Hard Drive in Our System We're Going to Need to Make That Hard Drive Either a Basic Disk Or a Dynamic Disk

Video – Disk Management (4 min). When we want to install a hard drive in our system we're going to need to make that hard drive either a basic disk or a dynamic disk. A basic disk, which is the default, contains primary and extended partitions as well as logical drives. A basic disk is limited to four partitions. The Windows operating system needs to be installed onto a basic disk. After it's installed the basic disk can then be converted to a dynamic disk.

Primary partition. The primary partition contains the operating system. There can be up to four primary partitions per hard drive and a primary partition cannot be subdivided into smaller sections. A primary partition can also be marked as the active partition. The operating system uses the active partition to boot the computer. Only one primary partition per disk can be marked as active. In most cases, the C: drive is the active partition and contains the boot and system files. Meaning the MBR or Master Boot Record Partition Table. Newer systems that use EFI instead of BIOS are using the GPT or GUID partition table. If you're using the GPT instead of the MBR partition table you can have more than four primary partitions on a disk.

An extended partition. There can only be one extended partition per hard drive. Once again, primary partitions, active partitions and extended partitions are all part of a basic disk. An extended partition cannot hold the operating system, but it can be subdivided into smaller sections called logical drives.

An Introduction to Windows Operating System

Einar Krogh, An Introduction to Windows Operating System, 2nd edition. © 2017 Einar Krogh & bookboon.com. ISBN 978-87-403-1935-4. Peer review by Høgskolelektor Lars Vidar Magnusson, Høgskolen i Østfold. Download free eBooks at bookboon.com.

Contents: Introduction. 1 About Windows history: 1.1 MS-DOS; 1.2 The first versions of Windows; 1.3 Windows NT; 1.4 Windows versions based on Windows NT; 1.5 Windows Server; 1.6 Control Questions. 2 The tasks of an operating system: 2.1 About the construction of computers; 2.2 Central tasks for an operating system; 2.3 Control Questions. 3 Some concepts and terms of the Windows operating system: 3.1

Comparative Analysis of Distributed and Parallel File Systems' Internal Techniques

Comparative Analysis of Distributed and Parallel File Systems’ Internal Techniques. Viacheslav Dubeyko.

Content: 1 Terminology and Abbreviations. 2 Introduction. 3 Comparative Analysis Methodology. 4 File System Features Classification: 4.1 Distributed File Systems: 4.1.1 HDFS; 4.1.2 GFS (Google File System); 4.1.3 InterMezzo; 4.1.4 CodA; 4.1.5 Ceph; 4.1.6 DDFS

Tahoe-LAFS Documentation Release 1.x

Tahoe-LAFS Documentation, Release 1.x. The Tahoe-LAFS Developers. January 19, 2017.

Contents: 1 Welcome to Tahoe-LAFS!: 1.1 What is Tahoe-LAFS?; 1.2 What is “provider-independent security”?; 1.3 Access Control; 1.4 Get Started; 1.5 License. 2 Installing Tahoe-LAFS: 2.1 First: In Case Of Trouble; 2.2 Pre-Packaged Versions; 2.3 Preliminaries; 2.4 Install the Latest Tahoe-LAFS Release; 2.5 Running the tahoe executable; 2.6 Running the Self-Tests; 2.7 Common Problems; 2.8 Using Tahoe-LAFS. 3 How To Run Tahoe-LAFS: 3.1 Introduction; 3.2 Do Stuff With It; 3.3 Socialize; 3.4 Complain. 4 Configuring a Tahoe-LAFS node: 4.1 Node Types; 4.2 Overall Node Configuration; 4.3 Connection Management

GIAC.GCFA.v2018-03-11.q309

GIAC.GCFA.v2018-03-11.q309. Exam Code: GCFA. Exam Name: GIAC Certified Forensics Analyst. Certification Provider: GIAC. Free Question Number: 309. Version: v2018-03-11. https://www.freecram.com/torrent/GIAC.GCFA.v2018-03-11.q309.html

Question 1: Which of the following statements is NOT true about the file slack spaces in Windows operating system?
A. File slack is the space, which exists between the end of the file and the end of the last cluster.
B. File slack may contain data from the memory of the system.
C. It is possible to find user names, passwords, and other important information in slack.
D. Large cluster size will decrease the volume of the file slack.
Answer: D

Question 2: In which of the following files does the Linux operating system store passwords?
A. Passwd
B. SAM
C. Shadow
D. Password

Question 3: Which of the following password cracking attacks is based on a pre-calculated hash table to retrieve plain text passwords?
A. Rainbow attack
B. Dictionary attack
C. Hybrid attack
D. Brute Force attack
Answer: A

Question 4: You work as a Network Administrator for NetTech Inc. The company has a network that consists of 200 client computers and ten database servers. One morning, you find that an unauthorized user is accessing data on a database server on the network. Which of the following actions will you take to preserve the evidence? Each correct answer represents a complete solution.