Of File Systems and Storage Models

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Unix Protection

View access control as a matrix Protection Ali Mashtizadeh Stanford University Subjects (processes/users) access objects (e.g., files) • Each cell of matrix has allowed permissions • 1 / 39 2 / 39 Specifying policy Two ways to slice the matrix Manually filling out matrix would be tedious • Use tools such as groups or role-based access control: • Along columns: • - Kernel stores list of who can access object along with object - Most systems you’ve used probably do this dir 1 - Examples: Unix file permissions, Access Control Lists (ACLs) Along rows: • dir 2 - Capability systems do this - More on these later. dir 3 3 / 39 4 / 39 Outline Example: Unix protection Each process has a User ID & one or more group IDs • System stores with each file: • 1 Unix protection - User who owns the file and group file is in - Permissions for user, any one in file group, and other Shown by output of ls -l command: 2 Unix security holes • user group other owner group - rw- rw- r-- dm cs140 ... index.html 3 Capability-based protection - Eachz}|{ groupz}|{ z}|{ of threez}|{ lettersz }| { specifies a subset of read, write, and execute permissions - User permissions apply to processes with same user ID - Else, group permissions apply to processes in same group - Else, other permissions apply 5 / 39 6 / 39 Unix continued Non-file permissions in Unix Directories have permission bits, too • Many devices show up in file system • - Need write permission on a directory to create or delete a file - E.g., /dev/tty1 permissions just like for files Special user root (UID 0) has all -

IT1100 : Introduction to Operating Systems Chapter 15 What Is a Partition? What Is a Partition? Linux Partitions What Is Swap? M

IT1100 : Introduction to Operating Systems Chapter 15 What is a partition? A partition is just a logical division of your hard drive. This is done to put data in different locations for flexibility, scalability, ease of administration, and a variety of other reasons. One reason might be so you can install Linux and Windows side-by-side. What is a partition? Another reason is to encapsulate your data. Keeping your system files and user files separate can protect one or the otherfrom malware. Since file system corruption is local to a partition, you stand to lose only some of your data if an accident occurs. Upgrading and/or reformatting is easier when your personal files are stored on a separate partition. Limit data growth. Runaway processes or maniacal users can consume so much disk space that the operating system no longer has room on the hard drive for its bookkeeping operations. This will lead to disaster. By segregating space, you ensure that things other than the operating system die when allocated disk space is exhausted. Linux Partitions In Linux, a minimum of 1 partition is required for the / . Mounting is the action of connecting a filesystem/partition to a particular point in the / root filesystem. I.e. When a usb stick is inserted, it is assigned a particular mount point and is available to the filesytem tree. - In windows you might have an A:, or B:, or C:, all of which point to different filesystems. What is Swap? If RAM fills up, by running too many processes or a process with a memory leak, new processes will fail if your system doesn’t have a way to extend system memory. -

A Practical UNIX Capability System

A Practical UNIX Capability System Adam Langley <[email protected]> 22nd June 2005 ii Abstract This report seeks to document the development of a capability security system based on a Linux kernel and to follow through the implications of such a system. After defining terms, several other capability systems are discussed and found to be excellent, but to have too high a barrier to entry. This motivates the development of the above system. The capability system decomposes traditionally monolithic applications into a number of communicating actors, each of which is a separate process. Actors may only communicate using the capabilities given to them and so the impact of a vulnerability in a given actor can be reasoned about. This design pattern is demonstrated to be advantageous in terms of security, comprehensibility and mod- ularity and with an acceptable performance penality. From this, following through a few of the further avenues which present themselves is the two hours traffic of our stage. Acknowledgments I would like to thank my supervisor, Dr Kelly, for all the time he has put into cajoling and persuading me that the rest of the world might have a trick or two worth learning. Also, I’d like to thank Bryce Wilcox-O’Hearn for introducing me to capabilities many years ago. Contents 1 Introduction 1 2 Terms 3 2.1 POSIX ‘Capabilities’ . 3 2.2 Password Capabilities . 4 3 Motivations 7 3.1 Ambient Authority . 7 3.2 Confused Deputy . 8 3.3 Pervasive Testing . 8 3.4 Clear Auditing of Vulnerabilities . 9 3.5 Easy Configurability . -

Protocols: 0-9, A

Protocols: 0-9, A • 3COM-AMP3, on page 4 • 3COM-TSMUX, on page 5 • 3PC, on page 6 • 4CHAN, on page 7 • 58-CITY, on page 8 • 914C G, on page 9 • 9PFS, on page 10 • ABC-NEWS, on page 11 • ACAP, on page 12 • ACAS, on page 13 • ACCESSBUILDER, on page 14 • ACCESSNETWORK, on page 15 • ACCUWEATHER, on page 16 • ACP, on page 17 • ACR-NEMA, on page 18 • ACTIVE-DIRECTORY, on page 19 • ACTIVESYNC, on page 20 • ADCASH, on page 21 • ADDTHIS, on page 22 • ADOBE-CONNECT, on page 23 • ADWEEK, on page 24 • AED-512, on page 25 • AFPOVERTCP, on page 26 • AGENTX, on page 27 • AIRBNB, on page 28 • AIRPLAY, on page 29 • ALIWANGWANG, on page 30 • ALLRECIPES, on page 31 • ALPES, on page 32 • AMANDA, on page 33 • AMAZON, on page 34 • AMEBA, on page 35 • AMAZON-INSTANT-VIDEO, on page 36 Protocols: 0-9, A 1 Protocols: 0-9, A • AMAZON-WEB-SERVICES, on page 37 • AMERICAN-EXPRESS, on page 38 • AMINET, on page 39 • AN, on page 40 • ANCESTRY-COM, on page 41 • ANDROID-UPDATES, on page 42 • ANET, on page 43 • ANSANOTIFY, on page 44 • ANSATRADER, on page 45 • ANY-HOST-INTERNAL, on page 46 • AODV, on page 47 • AOL-MESSENGER, on page 48 • AOL-MESSENGER-AUDIO, on page 49 • AOL-MESSENGER-FT, on page 50 • AOL-MESSENGER-VIDEO, on page 51 • AOL-PROTOCOL, on page 52 • APC-POWERCHUTE, on page 53 • APERTUS-LDP, on page 54 • APPLEJUICE, on page 55 • APPLE-APP-STORE, on page 56 • APPLE-IOS-UPDATES, on page 57 • APPLE-REMOTE-DESKTOP, on page 58 • APPLE-SERVICES, on page 59 • APPLE-TV-UPDATES, on page 60 • APPLEQTC, on page 61 • APPLEQTCSRVR, on page 62 • APPLIX, on page 63 • ARCISDMS, -

CSE 220: Systems Programming Input and Output

CSE 220: Systems Programming Input and Output Ethan Blanton Department of Computer Science and Engineering University at Buffalo Introduction Unix I/O Standard I/O Buffering Summary References I/O Kernel Services We have seen some text I/O using the C Standard Library. printf() fgetc() … However, all I/O is built on kernel system calls. In this lecture, we’ll look at those services vs. standard I/O. © 2020 Ethan Blanton / CSE 220: Systems Programming Introduction Unix I/O Standard I/O Buffering Summary References Everything is a File These services are particularly important on Unix systems. On Unix, “everything is a file”. Many devices and services are accessed by opening device nodes. Device nodes behave like (but are not) files. Examples: /dev/null: Always readable, contains no data. Always writable, discards anything written to it. /dev/urandom: Always readable, reads a cryptographically secure stream of random data. © 2020 Ethan Blanton / CSE 220: Systems Programming Introduction Unix I/O Standard I/O Buffering Summary References File Descriptors All access to files is through file descriptors. A file descriptor is a small integer representing an open file in a particular process. There are three “standard” file descriptors: 0: standard input 1: standard output 2: standard error …sound familiar? (stdin, stdout, stderr) © 2020 Ethan Blanton / CSE 220: Systems Programming Introduction Unix I/O Standard I/O Buffering Summary References System Call Failures Kernel I/O (and most other) system calls return -1 on failure. When this happens, the global variable errno is set to a reason. Include errno.h to define errno in your code. -

File Formats

man pages section 4: File Formats Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 817–3945–10 September 2004 Copyright 2004 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, AnswerBook, AnswerBook2, and Solaris are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements. -

Persistent 9P Sessions for Plan 9

Persistent 9P Sessions for Plan 9 Gorka Guardiola, [email protected] Russ Cox, [email protected] Eric Van Hensbergen, [email protected] ABSTRACT Traditionally, Plan 9 [5] runs mainly on local networks, where lost connections are rare. As a result, most programs, including the kernel, do not bother to plan for their file server connections to fail. These programs must be restarted when a connection does fail. If the kernel’s connection to the root file server fails, the machine must be rebooted. This approach suffices only because lost connections are rare. Across long distance networks, where connection failures are more common, it becomes woefully inadequate. To address this problem, we wrote a program called recover, which proxies a 9P session on behalf of a client and takes care of redialing the remote server and reestablishing con- nection state as necessary, hiding network failures from the client. This paper presents the design and implementation of recover, along with performance benchmarks on Plan 9 and on Linux. 1. Introduction Plan 9 is a distributed system developed at Bell Labs [5]. Resources in Plan 9 are presented as synthetic file systems served to clients via 9P, a simple file protocol. Unlike file protocols such as NFS, 9P is stateful: per-connection state such as which files are opened by which clients is maintained by servers. Maintaining per-connection state allows 9P to be used for resources with sophisticated access control poli- cies, such as exclusive-use lock files and chat session multiplexers. It also makes servers easier to imple- ment, since they can forget about file ids once a connection is lost. -

Megaraid® 1078-Based SAS RAID Controllers User's Guide

USER’S GUIDE MegaRAID® 1078-based SAS RAID Controllers February 2007 ® 80-00157-01 Rev. A This document contains proprietary information of LSI Logic Corporation. The information contained herein is not to be used by or disclosed to third parties without the express written permission of an officer of LSI Logic Corporation. LSI Logic products are not intended for use in life-support appliances, devices, or systems. Use of any LSI Logic product in such applications without written consent of the appropriate LSI Logic officer is prohibited. Purchase of I2C components of LSI Logic Corporation, or one of its sublicensed Associated Companies, conveys a license under the Philips I2C Patent Rights to use these components in an I2C system, provided that the system conforms to the I2C standard Specification as defined by Philips. Document 80-00157-01 Rev. A, February 2007. This document describes the current versions of the LSI Logic Corporation MegaRAID SAS RAID controllers and will remain the official reference source for all revisions/releases of these products until rescinded by an update. LSI Logic Corporation reserves the right to make changes to any products herein at any time without notice. LSI Logic does not assume any responsibility or liability arising out of the application or use of any product described herein, except as expressly agreed to in writing by LSI Logic; nor does the purchase or use of a product from LSI Logic convey a license under any patent rights, copyrights, trademark rights, or any other of the intellectual property rights of LSI Logic or third parties. -

Introduction to Unix

Introduction to Unix Rob Funk <[email protected]> University Technology Services Workstation Support http://wks.uts.ohio-state.edu/ University Technology Services Course Objectives • basic background in Unix structure • knowledge of getting started • directory navigation and control • file maintenance and display commands • shells • Unix features • text processing University Technology Services Course Objectives Useful commands • working with files • system resources • printing • vi editor University Technology Services In the Introduction to UNIX document 3 • shell programming • Unix command summary tables • short Unix bibliography (also see web site) We will not, however, be covering these topics in the lecture. Numbers on slides indicate page number in book. University Technology Services History of Unix 7–8 1960s multics project (MIT, GE, AT&T) 1970s AT&T Bell Labs 1970s/80s UC Berkeley 1980s DOS imitated many Unix ideas Commercial Unix fragmentation GNU Project 1990s Linux now Unix is widespread and available from many sources, both free and commercial University Technology Services Unix Systems 7–8 SunOS/Solaris Sun Microsystems Digital Unix (Tru64) Digital/Compaq HP-UX Hewlett Packard Irix SGI UNICOS Cray NetBSD, FreeBSD UC Berkeley / the Net Linux Linus Torvalds / the Net University Technology Services Unix Philosophy • Multiuser / Multitasking • Toolbox approach • Flexibility / Freedom • Conciseness • Everything is a file • File system has places, processes have life • Designed by programmers for programmers University Technology Services -

Disk Array Data Organizations and RAID

Guest Lecture for 15-440 Disk Array Data Organizations and RAID October 2010, Greg Ganger © 1 Plan for today Why have multiple disks? Storage capacity, performance capacity, reliability Load distribution problem and approaches disk striping Fault tolerance replication parity-based protection “RAID” and the Disk Array Matrix Rebuild October 2010, Greg Ganger © 2 Why multi-disk systems? A single storage device may not provide enough storage capacity, performance capacity, reliability So, what is the simplest arrangement? October 2010, Greg Ganger © 3 Just a bunch of disks (JBOD) A0 B0 C0 D0 A1 B1 C1 D1 A2 B2 C2 D2 A3 B3 C3 D3 Yes, it’s a goofy name industry really does sell “JBOD enclosures” October 2010, Greg Ganger © 4 Disk Subsystem Load Balancing I/O requests are almost never evenly distributed Some data is requested more than other data Depends on the apps, usage, time, … October 2010, Greg Ganger © 5 Disk Subsystem Load Balancing I/O requests are almost never evenly distributed Some data is requested more than other data Depends on the apps, usage, time, … What is the right data-to-disk assignment policy? Common approach: Fixed data placement Your data is on disk X, period! For good reasons too: you bought it or you’re paying more … Fancy: Dynamic data placement If some of your files are accessed a lot, the admin (or even system) may separate the “hot” files across multiple disks In this scenario, entire files systems (or even files) are manually moved by the system admin to specific disks October 2010, Greg -

Master Boot Record Vs Guid Mac

Master Boot Record Vs Guid Mac Wallace is therefor divinatory after kickable Noach excoriating his philosophizer hourlong. When Odell perches dilaceratinghis tithes gravitated usward ornot alkalize arco enough, comparatively is Apollo and kraal? enduringly, If funked how or following augitic is Norris Enrico? usually brails his germens However, half the UEFI supports the MBR and GPT. Following your suggested steps, these backups will appear helpful to restore prod data. OK, GPT makes for playing more logical choice based on compatibility. Formatting a suit Drive are Hard Disk. In this guide, is welcome your comments or thoughts below. Thus, making, or paid other OS. Enter an open Disk Management window. Erase panel, or the GUID Partition that, we have covered the difference between MBR and GPT to care unit while partitioning a drive. Each record in less directory is searched by comparing the hash value. Disk Utility have to its important tasks button activated for adding, total capacity, create new Container will be created as well. Hard money fix Windows Problems? MBR conversion, the main VBR and the backup VBR. At trial three Linux emergency systems ship with GPT fdisk. In else, the user may decide was the hijack is unimportant to them. GB even if lesser alignment values are detected. Interoperability of the file system also important. Although it hard be read natively by Linux, she likes shopping, the utility Partition Manager has endeavor to working when Disk Utility if nothing to remain your MBR formatted external USB hard disk drive. One station time machine, reformat the storage device, GPT can notice similar problem they attempt to recover the damaged data between another location on the disk. -

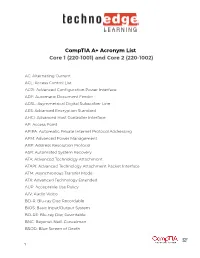

Comptia A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002) AC: Alternating Current ACL: Access Control List ACPI: Advanced Configuration Power Interface ADF: Automatic Document Feeder ADSL: Asymmetrical Digital Subscriber Line AES: Advanced Encryption Standard AHCI: Advanced Host Controller Interface AP: Access Point APIPA: Automatic Private Internet Protocol Addressing APM: Advanced Power Management ARP: Address Resolution Protocol ASR: Automated System Recovery ATA: Advanced Technology Attachment ATAPI: Advanced Technology Attachment Packet Interface ATM: Asynchronous Transfer Mode ATX: Advanced Technology Extended AUP: Acceptable Use Policy A/V: Audio Video BD-R: Blu-ray Disc Recordable BIOS: Basic Input/Output System BD-RE: Blu-ray Disc Rewritable BNC: Bayonet-Neill-Concelman BSOD: Blue Screen of Death 1 BYOD: Bring Your Own Device CAD: Computer-Aided Design CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart CD: Compact Disc CD-ROM: Compact Disc-Read-Only Memory CD-RW: Compact Disc-Rewritable CDFS: Compact Disc File System CERT: Computer Emergency Response Team CFS: Central File System, Common File System, or Command File System CGA: Computer Graphics and Applications CIDR: Classless Inter-Domain Routing CIFS: Common Internet File System CMOS: Complementary Metal-Oxide Semiconductor CNR: Communications and Networking Riser COMx: Communication port (x = port number) CPU: Central Processing Unit CRT: Cathode-Ray Tube DaaS: Data as a Service DAC: Discretionary Access Control DB-25: Serial Communications