Tahoe-LAFS Documentation Release 1.X

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

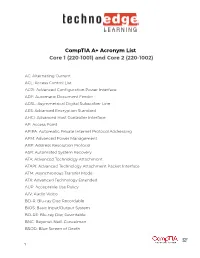

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

AC: Alternating Current; ACL: Access Control List; ACPI: Advanced Configuration Power Interface; ADF: Automatic Document Feeder; ADSL: Asymmetrical Digital Subscriber Line; AES: Advanced Encryption Standard; AHCI: Advanced Host Controller Interface; AP: Access Point; APIPA: Automatic Private Internet Protocol Addressing; APM: Advanced Power Management; ARP: Address Resolution Protocol; ASR: Automated System Recovery; ATA: Advanced Technology Attachment; ATAPI: Advanced Technology Attachment Packet Interface; ATM: Asynchronous Transfer Mode; ATX: Advanced Technology Extended; AUP: Acceptable Use Policy; A/V: Audio Video; BD-R: Blu-ray Disc Recordable; BIOS: Basic Input/Output System; BD-RE: Blu-ray Disc Rewritable; BNC: Bayonet-Neill-Concelman; BSOD: Blue Screen of Death; BYOD: Bring Your Own Device; CAD: Computer-Aided Design; CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart; CD: Compact Disc; CD-ROM: Compact Disc-Read-Only Memory; CD-RW: Compact Disc-Rewritable; CDFS: Compact Disc File System; CERT: Computer Emergency Response Team; CFS: Central File System, Common File System, or Command File System; CGA: Computer Graphics and Applications; CIDR: Classless Inter-Domain Routing; CIFS: Common Internet File System; CMOS: Complementary Metal-Oxide Semiconductor; CNR: Communications and Networking Riser; COMx: Communication port (x = port number); CPU: Central Processing Unit; CRT: Cathode-Ray Tube; DaaS: Data as a Service; DAC: Discretionary Access Control; DB-25: Serial Communications

File System Design Approaches

Dr. Brijender Kahanwal, Department of Computer Science & Engineering, Galaxy Global Group of Institutions, Dinarpur, Ambala, Haryana, INDIA. [email protected]

Abstract: In this article, the file system development design approaches are discussed. The selection of the file system design approach is done according to the needs of the developers what are the needed requirements and specifications for the new design. It allowed us to identify where our proposal fitted in with relation to current and past file system development. Our experience with file system development is limited so the research served to identify the different techniques that can be used. The variety of file systems encountered show what an active area of research file system development is. The file systems may be from one of the two fundamental categories. In one category, the file system is developed in user space and runs as a user process. Another file system may be developed in the kernel space and runs as a privileged process.

The experience with file system development is limited so the research served to identify the different techniques that can be used. The variety of file systems encountered show what an active area of research file system development is. The file systems researched fell into one of the following four categories:

1. The file system is developed in user space and runs as a user process.
2. The file system is developed in the user space using FUSE (File system in USEr space) kernel module and runs as a user process.
3. The file system is developed in the kernel and runs as a

Improving Learning of Programming Through E-Learning by Using Asynchronous Virtual Pair Programming

Abdullah Mohd ZIN, Department of Industrial Computing, Faculty of Information Science and Technology, National University of Malaysia, Bangi, MALAYSIA

Sufian IDRIS, Department of Computer Science, Faculty of Information Science and Technology, National University of Malaysia, Bangi, MALAYSIA

Nantha Kumar SUBRAMANIAM, Open University Malaysia (OUM), Faculty of Information Technology and Multimedia Communication, Kuala Lumpur, MALAYSIA

ABSTRACT

The problem of learning programming subjects, especially through distance learning and E-Learning, has been widely reported in literatures. Many attempts have been made to solve these problems. This has led to many new approaches in the techniques of learning of programming. One of the approaches that have been proposed is the use of virtual pair programming (VPP). Most of the studies about VPP in distance learning or e-learning environment focus on the use of the synchronous mode of collaboration between learners. Not much research have been done about asynchronous VPP. This paper describes how we have implemented VPP and a research that has been carried out to study the effectiveness of asynchronous VPP for learning of programming. In particular, this research study the effectiveness of asynchronous VPP in the learning of object-oriented programming among students at Open University Malaysia (OUM). The result of the research has shown that most of the learners have given positive feedback, indicating that they are happy with the use of asynchronous VPP. At the same time, learners did recommend some extra features that could be added in making asynchronous VPP more enjoyable.

Keywords: Pair-programming; Virtual Pair-programming; Object Oriented Programming

INTRODUCTION

Delivering program of Information Technology through distance learning or E-Learning is indeed a very challenging task.

The Joe-E Language Specification (Draft)

Adrian Mettler, David Wagner (famettler,[email protected]). February 3, 2008

Disclaimer: This is a draft version of the Joe-E specification, and is subject to change. Sections 5 - 7 mention some (but not all) of the aspects of the Joe-E language that are future work or current works in progress.

1 Introduction

We describe the Joe-E language, a capability-based subset of Java intended to make it easier to build secure systems. The goal of object capability languages is to support the Principle of Least Authority (POLA), so that each object naturally receives the least privilege (i.e., least authority) needed to do its job. Thus, we hope that Joe-E will support secure programming while remaining familiar to Java programmers everywhere.

2 Goals

We have several goals for the Joe-E language:

• Be familiar to Java programmers. To minimize the barriers to adoption of Joe-E, the syntax and semantics of Joe-E should be familiar to Java programmers. We also want Joe-E programmers to be able to use all of their existing tools for editing, compiling, executing, debugging, profiling, and reasoning about Java code. We accomplish this by defining Joe-E as a subset of Java. In general:

Subsetting Principle: Any valid Joe-E program should also be a valid Java program, with identical semantics.

This preserves the semantics Java programmers will expect, which are critical to keeping the adoption costs manageable. Also, it means all of today's Java tools (IDEs, debuggers, profilers, static analyzers, theorem provers, etc.) will apply to Joe-E code.

Winter 2004 ISSN 1741-4229

IRMA: Information Risk Management & Audit Journal. A Specialist Group of the BCS. Volume 14, number 5, winter 2004. ISSN 1741-4229

Programme for members' meetings 2004 – 2005

| Date | Topic | Time | Venue |
| --- | --- | --- | --- |
| Tuesday 7 September 2004 | Computer Audit Basics 2: Auditing the Infrastructure and Operations | Late afternoon, 16:00 for 16:30 | KPMG |
| Thursday 7 October 2004 | Regulatory issues affecting IT in the Financial Industry | Full day, 10:00 to 16:00 | Old Sessions House |
| Tuesday 16 November 2004 | Networks Attacks – quantifying and dealing with future threats | Full day, 10:00 to 16:00 | Chartered Accountants Hall |
| Tuesday 18 January 2005 | Database Security | Late afternoon, 16:00 for 16:30 | KPMG |
| Tuesday 15 March 2005 | IT Governance | Full day, 10:00 to 16:00 | BCS – The Davidson Building, 5 Southampton Street, London WC2 7HA |
| Tuesday 17 May 2005 | Computer Audit Basics 3: CAATS (preceded by IRMA AGM) | Late afternoon, 16:00 for 16:30 | KPMG |

Please note that these are provisional details and are subject to change. The late afternoon meetings are free of charge to members. For full day briefings a modest, very competitive charge is made to cover both lunch and a full printed delegate's pack. For venue maps see back cover.

Contents of the Journal

- Technical Briefings (front cover)
- Editorial, John Mitchell
- The Down Under Column, Bob Ashton
- Members' Benefits
- Creating and Using Issue Analysis Memos, Greg Krehel
- Computer Forensics Science – Part II, Celeste Rush
- Membership Application
- Management Committee
- Advertising in the Journal
- IRMA Venues Map

GUIDELINES FOR POTENTIAL AUTHORS

The Journal publishes various types of article.

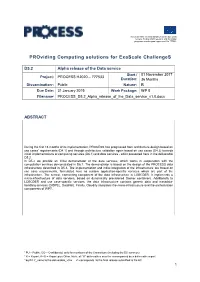

Alpha Release of the Data Service

This project has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement No 777533.

PROviding Computing solutions for ExaScale ChallengeS

D5.2 Alpha release of the Data service

- Project: PROCESS H2020 – 777533
- Start / Duration: 01 November 2017 / 36 Months
- Dissemination (1): Public
- Nature (2): R
- Due Date: 31 January 2019
- Work Package: WP 5
- Filename (3): PROCESS_D5.2_Alpha_release_of_the_Data_service_v1.0.docx

ABSTRACT

During the first 15 months of its implementation, PROCESS has progressed from architecture design based on use cases' requirements (D4.1) and through architecture validation again based on use cases (D4.2) towards initial implementations of computing services (D6.1) and data services, the effort presented here in the deliverable D5.2. In D5.2 we provide an initial demonstrator of the data services, which works in cooperation with the computation services demonstrated in D6.1. The demonstrator is based on the design of the PROCESS data infrastructure described in D5.1. The implementation and initial integration of the infrastructure are based on use case requirements, formulated here as custom application-specific services which are part of the infrastructure. The central, connecting component of the data infrastructure is LOBCDER. It implements a micro-infrastructure of data services, based on dynamically provisioned Docker containers. Additionally to LOBCDER and use case-specific services, the data infrastructure contains generic data and metadata-handling services (DISPEL, DataNet). Finally, Cloudify integrates the micro-infrastructure and the orchestration components of WP7.

(1) PU = Public; CO = Confidential, only for members of the Consortium (including the EC services).

(2) R = Report; R+O = Report plus Other.

Disk Management (4 min)

Video – Disk Management (4 min)

When we want to install a hard drive in our system, we're going to need to make that hard drive either a basic disk or a dynamic disk. A basic disk, which is the default, contains primary and extended partitions as well as logical drives. A basic disk is limited to four partitions. The Windows operating system needs to be installed onto a basic disk. After it's installed, the basic disk can then be converted to a dynamic disk.

Primary partition. The primary partition contains the operating system. There can be up to four primary partitions per hard drive, and a primary partition cannot be subdivided into smaller sections. A primary partition can also be marked as the active partition. The operating system uses the active partition to boot the computer. Only one primary partition per disk can be marked as active. In most cases, the C: drive is the active partition and contains the boot and system files. All of this applies to the MBR, or Master Boot Record, partition table. Newer systems that use EFI instead of BIOS use the GPT, or GUID Partition Table. If you're using the GPT instead of the MBR partition table, you can have more than four primary partitions on a disk.

Extended partition. There can only be one extended partition per hard drive. Once again, primary partitions, active partitions and extended partitions are all part of a basic disk. An extended partition cannot hold the operating system, but it can be subdivided into smaller sections called logical drives.

Hands-On Linux Administration on Azure

Explore the essential Linux administration skills you need to deploy and manage Azure-based workloads

Frederik Vos

BIRMINGHAM - MUMBAI

Hands-On Linux Administration on Azure. Copyright © 2018 Packt Publishing

All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews.

Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book.

Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

- Commissioning Editor: Vijin Boricha
- Acquisition Editor: Rahul Nair
- Content Development Editor: Nithin George Varghese
- Technical Editor: Komal Karne
- Copy Editor: Safis Editing
- Project Coordinator: Drashti Panchal
- Proofreader: Safis Editing
- Indexer: Mariammal Chettiyar
- Graphics: Tom Scaria
- Production Coordinator: Deepika Naik

First published: August 2018. Production reference: 1310818

Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78913-096-6

www.packtpub.com

mapt.io: Mapt is an online digital library that gives you full access to over 5,000 books and videos, as well as industry leading tools to help you plan your personal development and advance your career.

An Introduction to Windows Operating System

Einar Krogh. An Introduction to Windows Operating System, 2nd edition. © 2017 Einar Krogh & bookboon.com. ISBN 978-87-403-1935-4. Peer review by Høgskolelektor Lars Vidar Magnusson, Høgskolen i Østfold. Download free eBooks at bookboon.com

Contents

- Introduction
- 1 About Windows history
  - 1.1 MS-DOS
  - 1.2 The first versions of Windows
  - 1.3 Windows NT
  - 1.4 Windows versions based on Windows NT
  - 1.5 Windows Server
  - 1.6 Control Questions
- 2 The tasks of an operating system
  - 2.1 About the construction of computers
  - 2.2 Central tasks for an operating system
  - 2.3 Control Questions
- 3 Some concepts and terms of the Windows operating system
  - 3.1

The Nizza Secure-System Architecture

Appears in the proceedings of CollaborateCom 2005, San Jose, CA, USA

Hermann Härtig, Michael Hohmuth, Norman Feske, Christian Helmuth, Adam Lackorzynski, Frank Mehnert, Michael Peter. Technische Universität Dresden, Institute for System Architecture, D-01062 Dresden, Germany. [email protected]

Abstract

The trusted computing bases (TCBs) of applications running on today's commodity operating systems have become extremely large. This paper presents an architecture that allows to build applications with a much smaller TCB. It is based on a kernelized architecture and on the reuse of legacy software using trusted wrappers. We discuss the design principles, the architecture and some components, and a number of usage examples.

1 Introduction

Desktop and hand-held computers are used for many functions, often in parallel, some of which are security [...] rely on a standard OS (including the kernel) to assure their security properties.

To address the conflicting requirements of complete functionality and the protection of security-sensitive data, researchers have devised system architectures that reduce the system's TCB by running kernels in untrusted mode in a secure compartment on top of a small security kernel; security-sensitive services run alongside the OS in isolated compartments of their own. This architecture is widely referred to as kernelized standard OS or kernelized system.

In this paper, we describe Nizza, a new kernelized-system architecture. In the design of Nizza, we set out to answer the question of how small the TCB can be made. We have argued in previous work that the (hardware and software) technologies needed to build small secure-system platforms have become much more mature since earlier attempts [8].

GIAC.GCFA.V2018-03-11.Q309

Exam Code: GCFA. Exam Name: GIAC Certified Forensics Analyst. Certification Provider: GIAC. Free Question Number: 309. Version: v2018-03-11

https://www.freecram.com/torrent/GIAC.GCFA.v2018-03-11.q309.html

Question 1: Which of the following statements is NOT true about the file slack spaces in Windows operating system?

- A. File slack is the space, which exists between the end of the file and the end of the last cluster.
- B. File slack may contain data from the memory of the system.
- C. It is possible to find user names, passwords, and other important information in slack.
- D. Large cluster size will decrease the volume of the file slack.

Answer: D

Question 2: In which of the following files does the Linux operating system store passwords?

- A. Passwd
- B. SAM
- C. Shadow
- D. Password

Answer: (not shown)

Question 3: Which of the following password cracking attacks is based on a pre-calculated hash table to retrieve plain text passwords?

- A. Rainbow attack
- B. Dictionary attack
- C. Hybrid attack
- D. Brute Force attack

Answer: A

Question 4: You work as a Network Administrator for NetTech Inc. The company has a network that consists of 200 client computers and ten database servers. One morning, you find that an unauthorized user is accessing data on a database server on the network. Which of the following actions will you take to preserve the evidences? Each correct answer represents a complete solution.

Porting FUSE to L4Re

Großer Beleg. Porting FUSE to L4Re. Florian Pester, 23 May 2013

Technische Universität Dresden, Fakultät Informatik, Institut für Systemarchitektur, Professur Betriebssysteme

Supervising professor: Prof. Dr. rer. nat. Hermann Härtig. Supervising staff member: Dipl.-Inf. Carsten Weinhold

Declaration

I hereby declare that this thesis is a work of my own, and that only cited sources have been used. Dresden, 23 May 2013. Florian Pester

Contents

- 1. Introduction
- 2. State of the Art
  - 2.1. FUSE on Linux
    - 2.1.1. FUSE Internal Communication
  - 2.2. The L4Re Virtual File System
  - 2.3. Libfs
  - 2.4. Communication and Access Control in L4Re
  - 2.5. Related Work
    - 2.5.1. FUSE
    - 2.5.2. Pass-to-Userspace Framework Filesystem
- 3. Design
  - 3.1. FUSE Server parts
- 4. Implementation
  - 4.1. Example Request handling
  - 4.2. FUSE Server
    - 4.2.1. LibfsServer
    - 4.2.2. Translator
    - 4.2.3. Requests
    - 4.2.4. RequestProvider
    - 4.2.5. Node Caching
  - 4.3. Changes to the FUSE library
  - 4.4. Libfs
  - 4.5. Block Device Server
  - 4.6. File systems
- 5. Evaluation
- 6. Conclusion and Further Work
- A. FUSE operations
- B. FUSE library changes
- C. Glossary

List of Figures

- 2.1. The architecture of FUSE on Linux
- 2.2. The architecture of libfs