Faces in the Clouds: Long-Duration, Multi-User, Cloud-Assisted Video Conferencing Richard G

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Verizon Communications Inc. 2020 Form 10-K

UNITED STATES SECURITIES AND EXCHANGE COMMISSION

Washington, D.C. 20549

FORM 10-K

(Mark one)

☒ ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934

For the fiscal year ended December 31, 2020

OR

☐ TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934

For the transition period from to

Commission file number: 1-8606

Verizon Communications Inc. (Exact name of registrant as specified in its charter)

State or other jurisdiction of incorporation or organization: Delaware

I.R.S. Employer Identification No.: 23-2259884

Address of principal executive offices: 1095 Avenue of the Americas, New York, New York 10036 (Zip Code)

Registrant’s telephone number, including area code: (212) 395-1000

Securities registered pursuant to Section 12(b) of the Act:

| Title of Each Class | Trading Symbol(s) | Name of Each Exchange on Which Registered |
| --- | --- | --- |
| Common Stock, par value $0.10 | VZ | New York Stock Exchange |
| Common Stock, par value $0.10 | VZ | The NASDAQ Global Select Market |
| 1.625% Notes due 2024 | VZ24B | New York Stock Exchange |
| 4.073% Notes due 2024 | VZ24C | New York Stock Exchange |
| 0.875% Notes due 2025 | VZ25 | New York Stock Exchange |
| 3.250% Notes due 2026 | VZ26 | New York Stock Exchange |
| 1.375% Notes due 2026 | VZ26B | New York Stock Exchange |
| 0.875% Notes due 2027 | VZ27E | New York Stock Exchange |
| 1.375% Notes due 2028 | VZ28 | New York Stock Exchange |
| 1.125% Notes due 2028 | VZ28A | New York Stock Exchange |
| 1.875% Notes due 2029 | VZ29B | New York Stock Exchange |
| 1.250% Notes due 2030 | VZ30 | New York Stock Exchange |
| 1.875% Notes due 2030 | VZ30A | |

Vendor Contract

TIPS VENDOR AGREEMENT

Between CDW Government, LLC and THE INTERLOCAL PURCHASING SYSTEM (TIPS)

For RFP 181102 Internet & Network Security

General Information

The Vendor Agreement ("Agreement") made and entered into by and between The Interlocal Purchasing System (hereinafter referred to as "TIPS" respectfully) a government cooperative purchasing program authorized by the Region 8 Education Service Center, having its principal place of business at 4845 US Hwy 271 North, Pittsburg, Texas 75686. This Agreement consists of the provisions set forth below, including provisions of all Attachments referenced herein. In the event of a conflict between the provisions set forth below and those contained in any Attachment, the provisions set forth shall control.

The vendor Agreement shall include and incorporate by reference this Agreement, the terms and conditions, special terms and conditions, any agreed upon amendments, as well as all of the sections of the solicitation as posted, including any addenda and the awarded vendor's proposal. Other documents to be included are the awarded vendor's proposals, task orders, purchase orders and any adjustments which have been issued. If deviations are submitted to TIPS by the proposing vendor as provided by and within the solicitation process, this Agreement may be amended to incorporate any agreed deviations.

The following pages will constitute the Agreement between the successful vendors(s) and TIPS. Bidders shall state, in a separate writing, and include with their proposal response, any required exceptions or deviations from these terms, conditions, and specifications. If agreed to by TIPS, they will be incorporated into the final Agreement.

Purchase Order, Agreement or Contract is the TIPS Member's approval providing the authority to proceed with the negotiated delivery order under the Agreement. Special terms

Market Research Research and Education

June 1, 2020

UW Finance Association

Market Research, Research and Education

The Outlook of Mergers and Acquisitions for 2020

All amounts in $US unless otherwise stated.

Author(s): Albert Liu, Research Analyst; Onat Karacan, Research Analyst

Editor(s): John Derraugh and Brent Huang, Co-VPs of Research and Education

Before the Pandemic

When 2019 came to a close, it marked the end of more than a decade-long bull run. The market was at all-time highs and businesses were flourishing. Corporate transactions were prominent during this time as companies sought to grow their businesses to new heights.

Mergers and Acquisitions (M&A) deals were no exception. A recent poll done by the Deloitte US M&A Team, who surveyed a thousand executives at corporations and private equity firms, found that the majority agreed that transaction activity will remain robust for the year 2020.

Exhibit 1: Infographic on professional perspective of M&A deal volume expectations going into 2020. (Deloitte)

Deal volume expectations (Fall 2019 vs. Fall 2018): Increase 63% vs. 79%; Stay the same 33% vs. 18%; Decrease 4% vs. 3%.

Before the pandemic, M&A activity had been declining for a few years, as the decade-long bull run began to increase and inflate valuations of corporations. While professional expectations were bullish on the volume of M&A transactions in 2020, the presence of larger deals was anticipated to decrease. According to Deloitte, the marketplace is seeing more interest in deals in the sweet spot between $100 million and $500 million.

Toolkit for Teachers – Technologies Is Supplementary Material for the Toolkit for Teachers

Toolkit for teachers: Technologies

www.universitiesofthefuture.eu

Partners: Maria Teresa Pereira (Project manager), Maria Clavert, Piotr Palka, Rui Moura, Frank Russi, Ricardo Migueis, Sanja Murso, Wojciech Gackowski, Maciej Markowski, Olivier Schulbaum, Francisco Pacheo, Pedro Costa, Aki Malinen

INDEX

1 INTRODUCTION AND FOREWORD

2 CURRENT SITUATION

2.1 INDUSTRY 4.0: STATE OF AFFAIRS

2.2 TRENDS

2.3 NEEDS

2.3.1 Addressing modern challenges in information acquisition

2.3.2 Supporting collaborative work

2.3.3 IT-assisted teaching

3 TECHNOLOGIES FOR EDUCATION 4.0

3.1 TOOLS SUPPORTING GROUP AND PROJECT WORK

3.1.1 Cloud storage services

3.1.2 Document Collaboration Tools

3.1.3 Documenting projects - Wiki services

3.1.4 Collaborative information collection, sharing and organization

3.1.5 Project management tools

3.1.6 Collaborative Design Tools

3.2 E-LEARNING AND BLENDED LEARNING TOOLS

3.2.1 Direct communication tools

3.2.1.1 Webcasts

3.2.1.2 Streaming servers

3.2.1.3 Teleconferencing tools

3.2.1.4 Webinars

3.2.2 Creating e-learning materials

3.2.2.1 Creation of educational videos

3.2.2.2 Authoring of interactive educational content

3.2.3 Learning Management Systems (LMS)

4 SUMMARY

5 REFERENCES

4.1 BIBLIOGRAPHY

4.2 NETOGRAPHY

1 Introduction and foreword

This toolkit for teachers – technologies is supplementary material for the toolkit for teachers.

Magic Quadrant for Unified Communications As a Service, Worldwide

Magic Quadrant for Unified Communications as a Service, Worldwide

Published: 23 August 2016

ID: G00291960

Analyst(s): Daniel O'Connell, Bern Elliot

Summary

UCaaS is now a viable alternative for many (not all) enterprise deployments. Enterprise planners should look for expanded global capacity, better customer service and project management, an expanded set of APIs, and the ability to deploy a richer set of UC functionality led by mobility and video.

Market Definition/Description

This document was revised on 30 August 2016. The document you are viewing is the corrected version. For more information, see the Corrections page on gartner.com.

Unified communications as a service (UCaaS) supports the same functions as its premises-based unified communications (UC) counterpart. The chief difference is that UCaaS adheres to a cloud service delivery model. Gartner therefore uses the same six broad communications functions for both (see Note 1 for detailed definitions):

• Voice and telephony, including mobility support

• Conferencing: audioconferencing, videoconferencing and web conferencing

• Messaging: email with voice mail and unified messaging (UM)

• Presence and instant messaging (IM)

• Clients, including desktop clients and thin browser clients

• Communications-enabled applications, for example, integrated collaboration and contact center applications

Two types of cloud delivery architectures are in the UCaaS market. The first is multitenant, in which all customers share a common (single) software instance. The second is multi-instance, in which each customer receives its own software instance. Both the multitenant and multi-instance architectures possess such cloud characteristics as shared infrastructure (for example, data centers, racks, common equipment and blades), shared tools (for example, provisioning, performance and network management tools), per-user-per-month pricing, and elasticity to dynamically add and subtract users.

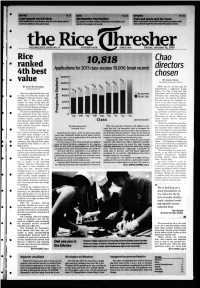

Rice Ranked 4th Best Value

The Rice Thresher

VOLUME XCVI, ISSUE NO. 16. STUDENT-RUN SINCE 1916. FRIDAY, JANUARY 16, 2009

OP-ED P. 3: Loop hazards run full-circle. Both pedestrians and drivers may be in the dark when it comes to safety on the outer-loop.

A&E P. 11: Merriweather Post Pavilion. Eric Doctor reviews Animal Collective's new album and deems it an orgasm of sound.

SPORTS P. 14: Track and tennis rock the house. Men's and women's tennis hold their opening matches while track sprints through their first meet this season at home.

[Chart: Applications for 2013 class surpass 10,000, break records. Total shown: 10,818; bars split into ACCEPTED and REJECTED; vertical axis from 2000 to 12000.]

Rice ranked 4th best value

BY JOSH RUTENBERG, THRESHER STAFF

Rice was ranked fourth last week on both the Princeton Review and Kiplinger's Personal Finance magazine's lists of best value private schools for 2009, falling from the number one position it held on last year's Princeton Review and improving by one spot on Kiplinger's list. The lists compiled data from private campuses across the country.

Chao directors chosen

BY CINDY DINH, THRESHER EDITORIAL STAFF

While the rest of Rice may be experiencing a temporary hiring freeze, the Ting Tsung and Wei Fong Chao Center for Asian Studies welcomes new leadership. Earlier this month, Tani Barlow started her five-year term as the inaugural director of the Chao Center after being selected from an international pool of applicants in May 2008. Barlow, who previously worked as a professor in history and wom-


Verizon up to Speed Live Friday, July 24, 2020 [Music]. >> Hello My Name Is Christallyne Pagulayan I'm Currently an Indire

Verizon Up To Speed Live Friday, July 24, 2020

Redefining Communication Access www.acscaptions.com

[Music]

>> Hello, my name is Christallyne Pagulayan. I'm currently an indirect account manager based here in viva Las Vegas. I've been with Verizon for ten years now, ten years this month. I was born and raised in the Philippines, about 40 minutes or an hour from Manila. I've always been that person that's always like a go-getter: let's go after it, let's go. I traveled with my flipflops, with one luggage and $500 in my pocket. All I knew was I was going to San Francisco. I had a map and my American Dream. Until now it still gives me goosies when I think about it. I'm like, oh, my God, what was I thinking? I arrived, it was freezing cold, I used my $500 to get boots. I was freezing. Verizon said they were hiring for part-time service rep. Long story short, I got the job. For me, along the way, the journey with Verizon, my journey in the U.S., being resilient, and patience and compassion helped me in my life. Where I came from in the Philippines, there's places where they don't have electricity. I am so thankful that Verizon has provided me the means of being able to help out. So last month, along with my high school classmates, we were able to generate almost 300,000 pesos so we could buy basic needs, basic food and basic medical supplies, and here in Vegas every weekend I make masks with my mother.

Verizon Internet and Value Added Services, Effective July 20, 2021

VERIZON ONLINE TERMS OF SERVICE FOR VERIZON INTERNET AND VALUE ADDED SERVICES

THESE TERMS AND CONDITIONS CONTAIN IMPORTANT INFORMATION REGARDING YOUR RIGHTS AND OBLIGATIONS, AND OURS, IN CONNECTION WITH YOUR USE OF VERIZON ONLINE'S SERVICES. PLEASE READ THEM CAREFULLY.

This Agreement is entered into between you as our customer ("you", "your", "Company") and Verizon Online LLC. ("Verizon", "we", "our") and includes these Terms of Service, our Acceptable Use Policy ("AUP") at verizon.com/about/terms-conditions/acceptable-use-policy, Website Terms of Use, Email Policy and other Policies, as set forth at verizon.com/terms, as well as our Privacy policies located at www.verizon.com/about/privacy/ (collectively, "Agreement"). By accepting this Agreement, you agree to comply with its terms and the specific terms of the service plan you selected (including the plan's duration and applicable early termination fee). Your acceptance of this Agreement occurs by and upon the earliest of: (a) submission of your order; (b) your acceptance of the Agreement electronically or in the course of installing the Software; (c) your use of the Service; or (d) your retention of the Software we provide beyond thirty (30) days following delivery. The following terms apply to all Services (as defined below):

1. Services and Definitions. The term "Service" shall mean any Verizon Internet access service based on digital subscriber loop (DSL) technology including services marketed under the Basic Internet, Business Internet or DSL name with or without local service (collectively "Basic Internet Service"), any Verizon Internet access service delivered over a fiber optic transmission facility including services marketed as Verizon Fios Internet Service ("Fios Service") and the "Value Added Services" which may be made available to you for a separate charge or included with your Basic Internet Service or Fios Service as set forth in Attachment A hereto.

Charter Review Commission, 223 East 4th Street, Room 160, Port Angeles, Washington

CHARTER REVIEW COMMISSION

223 East 4th Street, Room 160, Port Angeles, Washington

October 22, 2020, 6 p.m.

**ATTENTION**

In response to the current pandemic, to promote social distancing, and in compliance with Governor's Proclamation which prohibits in-person attendance at meetings, the Charter Review Commission strongly encourages the public to take advantage of remote options for attending and participating in open public meetings. Meeting information can be found on the Clallam County website at: http://www.clallam.net/bocc/homerulecharter.html

This meeting can be viewed on a live stream at this link: http://www.clallam.net/features/meetings.html

If you would like to participate in the meeting via BlueJeans audio only, call 408-419-1715 and join with Meeting ID: 875 561 784

If you would like to participate in the meeting via BlueJeans video conference, visit www.bluejeans.com and join with Meeting ID: 875 561 784

Citizens are encouraged to make public comment by phone, video or in writing. Citizens with comments or questions regarding the Charter Review Commission may contact the Clerk of the Charter Review Commission at [email protected] or 360-417-2256.

All Charter Review Commissioners will be appearing by BlueJeans audio or video conferencing options only for this meeting. No in-person attendance will be allowed until Governor's Proclamation is lifted.

CALL TO ORDER, PLEDGE OF ALLEGIANCE, ROLL CALL

APPROVAL OF AGENDA

APPROVAL OF MINUTES

o Minutes October 8, 2020

PUBLIC COMMENT - Please limit comments to three minutes

CORRESPONDENCE/PETITIONS

REPORTS

o Chair Report - Committees

o Forum Report - Commissioner Turner

BUSINESS ITEMS

Verizon Announces C-Band Auction Results

News Release

FOR IMMEDIATE RELEASE

March 10, 2021

Media contact:

Kim Ancin, [email protected], 908-801-0500

Karen Schulz, [email protected], 864-561-1527

Verizon announces C-Band auction results

Download Verizon’s Investor Day 2021 infographic.

NEW YORK, NY – Verizon will soon be able to offer increased mobility and broadband services to millions more consumers and businesses as a result of the recent FCC C-Band auction. In an investor event tonight, the company’s executive team discussed the acceleration of its strategy and plans to use the strong spectrum position in the industry to continue to drive growth.

C-Band auction results

Verizon succeeded in more than doubling its existing mid-band spectrum holdings by adding an average of 161 MHz of C-Band nationwide for $52.9 billion including incentive payments and clearing costs. Verizon won between 140 and 200 megahertz of C-Band spectrum in every available market. Specifically, Verizon:

● Secured a minimum 140 megahertz of total spectrum in the contiguous United States and an average of 161 megahertz nationwide; that’s bandwidth in every available market, 406 markets in all.

● Secured a consistent 60 megahertz of early clearing spectrum in the initial 46 markets - this is the swath of spectrum targeted for clearing by the end of 2021, home to more than half of the U.S. population.

● Secured up to 200 megahertz in 158 mostly rural markets covering nearly 40 million people. This will further enhance Verizon’s broadband solution portfolio for rural America.

The auction results represent a 120 percent increase in Verizon’s spectrum holdings in sub-6 gigahertz bands.

Verizon up to Speed Live May 19, 2020 CART/CAPTIONING PROVIDED BY: ALTERNATIVE COMMUNICATION SERVICES, LLC

Verizon Up To Speed Live May 19, 2020

CART/CAPTIONING PROVIDED BY: ALTERNATIVE COMMUNICATION SERVICES, LLC WWW.CAPTIONFAMILY.COM

>>> TELL ME, WHAT DO YOU BUILD A NETWORK FOR? WHAT DID VERIZON BUILD THEIR NETWORK FOR? PEOPLE. EVERY HOLE DUG, EVERY WIRE SPLICED, EVERY TOWER RAISED IS FOR PEOPLE. WHEN PEOPLE'S EVERY DAY IS BEING CHALLENGED, THAT'S WHEN THE NETWORK STANDS UP TO SHOW US WHAT IT'S MADE OF. BUSINESSES LIKE VERIZON KEEP RUNNING AND CONNECTING IN NEW WAYS. VERIZON CUSTOMERS ARE MAKING AN AVERAGE OF OVER 600 MILLION CALLS AND SENDING NEARLY 8 BILLION TEXTS A DAY EVERY DAY. OUR CONNECTIONS MAKE US ALL STRONGER. WHEN YOU KNOW PEOPLE ARE DEPENDING ON YOU FOR THOSE CONNECTIONS, YOU DO WHATEVER IT TAKES.

>>> COMING TO THE OFFICE TODAY, THERE ARE CLOSED SIGNS ALL ON BUSINESSES, DOORS ARE LOCKED, LIGHTS ARE OFF. IT'S EMPTY.

>> WHAT I MISS MOST IS THE PEOPLE. NOT ONLY IS IT A COFFEE HOUSE, BUT REALLY A MEETING PLACE.

>> IF WE WERE TO CLOSE, WE CLOSE AS A COMMUNITY.

>> OUR BUSINESS MEANS THE WORLD TO ME.

>> MY GOAL IS BEING ABLE TO FLIP MY BUSINESS, TRAINING HOPE IN YOUNG WOMEN.

>> ARTS AND CRAFTS FOR LOCAL REFUGEES THAT WE SELL THROUGH PHOENIX.

>> IT'S AN OPPORTUNITY FOR US TO CONTINUE TO PAY OUR EMPLOYEES.

>> IT WILL ABSOLUTELY SAVE OUR BUSINESS.

>> I'M SO GRATEFUL THAT WE'RE PAYING IT FORWARD.

>> WE MISS YOU ALL AND LOOK FORWARD TO SEEING YOU SOON.

>>> WHAT DOES IT MEAN TO BE AMERICA'S MOST RELIABLE NETWORK? RIGHT NOW, IT MEANS HELPING THOSE WHO SERVE STAY CONNECTED TO THEIR FAMILIES.

Video Conferencing: Silicon Valley’s 50-Year History

Video Conferencing: Silicon Valley’s 50-Year History

Dave House, Eric Dorsey & Bryan Martin; Moderator: Ken Pyle

Organized by IEEE Silicon Valley Technology History Committee

Co-Sponsored by IEEE Consultants’ Network of Silicon Valley (IEEE-CNSV)

© 2020 IEEE Silicon Valley Technology History Committee

www.SiliconValleyHistory.com

22 July 2020

Today’s Agenda

IEEE Silicon Valley Technology History Committee Info:

– Tom Coughlin, IEEE-USA Immediate Past President

Recent IEEE historical Milestones

– Brian A. Berg, IEEE Volunteer

Main Event

– Dave House, ex-Intel

– Eric Dorsey, ex-Compression Labs, Inc.

– Bryan Martin, 8x8, Inc. CTO and Chairman

– Ken Pyle, Viodi (Moderator)

IEEE Silicon Valley Technology History Committee

Committee members:

– Tom Coughlin, Chair

– Brian A. Berg, Vice Chair

– Tom Gardner, Treasurer

– Ken Pyle, Videographer

– Francine Bellson

– Paul Wesling

– Ted Hoff

– Alan Weissberger

IEEE Silicon Valley Technology History Committee

Founded in 2013

Our purpose: to hold meetings on the history of a broad range of technologies that were conceived, developed, or progressed in greater Silicon Valley

If you are interested in offering your help, contact

– Tom Coughlin: [email protected]

– Brian Berg: [email protected]

Some Recent SV Tech History Events

October 10, 2019 – A Partial History of Makers in Silicon Valley

June 13, 2019 – Challenger Shuttle Disaster: