Matrix Algebra

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

2.1 the Algebra of Sets

Chapter 2 I Abstract Algebra 83 part of abstract algebra, sets are fundamental to all areas of mathematics and we need to establish a precise language for sets. We also explore operations on sets and relations between sets, developing an “algebra of sets” that strongly resembles aspects of the algebra of sentential logic. In addition, as we discussed in chapter 1, a fundamental goal in mathematics is crafting articulate, thorough, convincing, and insightful arguments for the truth of mathematical statements. We continue the development of theorem-proving and proof-writing skills in the context of basic set theory. After exploring the algebra of sets, we study two number systems denoted Zn and U(n) that are closely related to the integers. Our approach is based on a widely used strategy of mathematicians: we work with specific examples and look for general patterns. This study leads to the definition of modified addition and multiplication operations on certain finite subsets of the integers. We isolate key axioms, or properties, that are satisfied by these and many other number systems and then examine number systems that share the “group” properties of the integers. Finally, we consider an application of this mathematics to check digit schemes, which have become increasingly important for the success of business and telecommunications in our technologically based society. Through the study of these topics, we engage in a thorough introduction to abstract algebra from the perspective of the mathematician— working with specific examples to identify key abstract properties common to diverse and interesting mathematical systems. 2.1 The Algebra of Sets Intuitively, a set is a “collection” of objects known as “elements.” But in the early 1900’s, a radical transformation occurred in mathematicians’ understanding of sets when the British philosopher Bertrand Russell identified a fundamental paradox inherent in this intuitive notion of a set (this paradox is discussed in exercises 66–70 at the end of this section). -

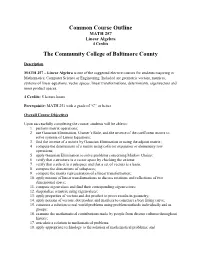

Common Course Outline MATH 257 Linear Algebra 4 Credits

Common Course Outline MATH 257 Linear Algebra 4 Credits The Community College of Baltimore County Description MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces. 4 Credits: 5 lecture hours Prerequisite: MATH 251 with a grade of “C” or better Overall Course Objectives Upon successfully completing the course, students will be able to: 1. perform matrix operations; 2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations; 3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix; 4. compute the determinant of a matrix using cofactor expansion or elementary row operations; 5. apply Gaussian Elimination to solve problems concerning Markov Chains; 6. verify that a structure is a vector space by checking the axioms; 7. verify that a subset is a subspace and that a set of vectors is a basis; 8. compute the dimensions of subspaces; 9. compute the matrix representation of a linear transformation; 10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space; 11. compute eigenvalues and find their corresponding eigenvectors; 12. diagonalize a matrix using eigenvalues; 13. apply properties of vectors and dot product to prove results in geometry; 14. apply notions of vectors, dot product and matrices to construct a best fitting curve; 15. construct a solution to real world problems using problem methods individually and in groups; 16. -

The Five Fundamental Operations of Mathematics: Addition, Subtraction

The five fundamental operations of mathematics: addition, subtraction, multiplication, division, and modular forms Kenneth A. Ribet UC Berkeley Trinity University March 31, 2008 Kenneth A. Ribet Five fundamental operations This talk is about counting, and it’s about solving equations. Counting is a very familiar activity in mathematics. Many universities teach sophomore-level courses on discrete mathematics that turn out to be mostly about counting. For example, we ask our students to find the number of different ways of constituting a bag of a dozen lollipops if there are 5 different flavors. (The answer is 1820, I think.) Kenneth A. Ribet Five fundamental operations Solving equations is even more of a flagship activity for mathematicians. At a mathematics conference at Sundance, Robert Redford told a group of my colleagues “I hope you solve all your equations”! The kind of equations that I like to solve are Diophantine equations. Diophantus of Alexandria (third century AD) was Robert Redford’s kind of mathematician. This “father of algebra” focused on the solution to algebraic equations, especially in contexts where the solutions are constrained to be whole numbers or fractions. Kenneth A. Ribet Five fundamental operations Here’s a typical example. Consider the equation y 2 = x3 + 1. In an algebra or high school class, we might graph this equation in the plane; there’s little challenge. But what if we ask for solutions in integers (i.e., whole numbers)? It is relatively easy to discover the solutions (0; ±1), (−1; 0) and (2; ±3), and Diophantus might have asked if there are any more. -

21. Orthonormal Bases

21. Orthonormal Bases The canonical/standard basis 011 001 001 B C B C B C B0C B1C B0C e1 = B.C ; e2 = B.C ; : : : ; en = B.C B.C B.C B.C @.A @.A @.A 0 0 1 has many useful properties. • Each of the standard basis vectors has unit length: q p T jjeijj = ei ei = ei ei = 1: • The standard basis vectors are orthogonal (in other words, at right angles or perpendicular). T ei ej = ei ej = 0 when i 6= j This is summarized by ( 1 i = j eT e = δ = ; i j ij 0 i 6= j where δij is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix. Given column vectors v and w, we have seen that the dot product v w is the same as the matrix multiplication vT w. This is the inner product on n T R . We can also form the outer product vw , which gives a square matrix. 1 The outer product on the standard basis vectors is interesting. Set T Π1 = e1e1 011 B C B0C = B.C 1 0 ::: 0 B.C @.A 0 01 0 ::: 01 B C B0 0 ::: 0C = B. .C B. .C @. .A 0 0 ::: 0 . T Πn = enen 001 B C B0C = B.C 0 0 ::: 1 B.C @.A 1 00 0 ::: 01 B C B0 0 ::: 0C = B. .C B. .C @. .A 0 0 ::: 1 In short, Πi is the diagonal square matrix with a 1 in the ith diagonal position and zeros everywhere else. -

A Quick Algebra Review

A Quick Algebra Review 1. Simplifying Expressions 2. Solving Equations 3. Problem Solving 4. Inequalities 5. Absolute Values 6. Linear Equations 7. Systems of Equations 8. Laws of Exponents 9. Quadratics 10. Rationals 11. Radicals Simplifying Expressions An expression is a mathematical “phrase.” Expressions contain numbers and variables, but not an equal sign. An equation has an “equal” sign. For example: Expression: Equation: 5 + 3 5 + 3 = 8 x + 3 x + 3 = 8 (x + 4)(x – 2) (x + 4)(x – 2) = 10 x² + 5x + 6 x² + 5x + 6 = 0 x – 8 x – 8 > 3 When we simplify an expression, we work until there are as few terms as possible. This process makes the expression easier to use, (that’s why it’s called “simplify”). The first thing we want to do when simplifying an expression is to combine like terms. For example: There are many terms to look at! Let’s start with x². There Simplify: are no other terms with x² in them, so we move on. 10x x² + 10x – 6 – 5x + 4 and 5x are like terms, so we add their coefficients = x² + 5x – 6 + 4 together. 10 + (-5) = 5, so we write 5x. -6 and 4 are also = x² + 5x – 2 like terms, so we can combine them to get -2. Isn’t the simplified expression much nicer? Now you try: x² + 5x + 3x² + x³ - 5 + 3 [You should get x³ + 4x² + 5x – 2] Order of Operations PEMDAS – Please Excuse My Dear Aunt Sally, remember that from Algebra class? It tells the order in which we can complete operations when solving an equation. -

7.2 Binary Operators Closure

last edited April 19, 2016 7.2 Binary Operators A precise discussion of symmetry benefits from the development of what math- ematicians call a group, which is a special kind of set we have not yet explicitly considered. However, before we define a group and explore its properties, we reconsider several familiar sets and some of their most basic features. Over the last several sections, we have considered many di↵erent kinds of sets. We have considered sets of integers (natural numbers, even numbers, odd numbers), sets of rational numbers, sets of vertices, edges, colors, polyhedra and many others. In many of these examples – though certainly not in all of them – we are familiar with rules that tell us how to combine two elements to form another element. For example, if we are dealing with the natural numbers, we might considered the rules of addition, or the rules of multiplication, both of which tell us how to take two elements of N and combine them to give us a (possibly distinct) third element. This motivates the following definition. Definition 26. Given a set S,abinary operator ? is a rule that takes two elements a, b S and manipulates them to give us a third, not necessarily distinct, element2 a?b. Although the term binary operator might be new to us, we are already familiar with many examples. As hinted to earlier, the rule for adding two numbers to give us a third number is a binary operator on the set of integers, or on the set of rational numbers, or on the set of real numbers. -

What's in a Name? the Matrix As an Introduction to Mathematics

St. John Fisher College Fisher Digital Publications Mathematical and Computing Sciences Faculty/Staff Publications Mathematical and Computing Sciences 9-2008 What's in a Name? The Matrix as an Introduction to Mathematics Kris H. Green St. John Fisher College, [email protected] Follow this and additional works at: https://fisherpub.sjfc.edu/math_facpub Part of the Mathematics Commons How has open access to Fisher Digital Publications benefited ou?y Publication Information Green, Kris H. (2008). "What's in a Name? The Matrix as an Introduction to Mathematics." Math Horizons 16.1, 18-21. Please note that the Publication Information provides general citation information and may not be appropriate for your discipline. To receive help in creating a citation based on your discipline, please visit http://libguides.sjfc.edu/citations. This document is posted at https://fisherpub.sjfc.edu/math_facpub/12 and is brought to you for free and open access by Fisher Digital Publications at St. John Fisher College. For more information, please contact [email protected]. What's in a Name? The Matrix as an Introduction to Mathematics Abstract In lieu of an abstract, here is the article's first paragraph: In my classes on the nature of scientific thought, I have often used the movie The Matrix to illustrate the nature of evidence and how it shapes the reality we perceive (or think we perceive). As a mathematician, I usually field questions elatedr to the movie whenever the subject of linear algebra arises, since this field is the study of matrices and their properties. So it is natural to ask, why does the movie title reference a mathematical object? Disciplines Mathematics Comments Article copyright 2008 by Math Horizons. -

Multivector Differentiation and Linear Algebra 0.5Cm 17Th Santaló

Multivector differentiation and Linear Algebra 17th Santalo´ Summer School 2016, Santander Joan Lasenby Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge [email protected], www-sigproc.eng.cam.ac.uk/ s jl 23 August 2016 1 / 78 Examples of differentiation wrt multivectors. Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. Functional Differentiation: very briefly... Summary Overview The Multivector Derivative. 2 / 78 Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. Functional Differentiation: very briefly... Summary Overview The Multivector Derivative. Examples of differentiation wrt multivectors. 3 / 78 Functional Differentiation: very briefly... Summary Overview The Multivector Derivative. Examples of differentiation wrt multivectors. Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. 4 / 78 Summary Overview The Multivector Derivative. Examples of differentiation wrt multivectors. Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. Functional Differentiation: very briefly... 5 / 78 Overview The Multivector Derivative. Examples of differentiation wrt multivectors. Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. Functional Differentiation: very briefly... Summary 6 / 78 We now want to generalise this idea to enable us to find the derivative of F(X), in the A ‘direction’ – where X is a general mixed grade multivector (so F(X) is a general multivector valued function of X). Let us use ∗ to denote taking the scalar part, ie P ∗ Q ≡ hPQi. Then, provided A has same grades as X, it makes sense to define: F(X + tA) − F(X) A ∗ ¶XF(X) = lim t!0 t The Multivector Derivative Recall our definition of the directional derivative in the a direction F(x + ea) − F(x) a·r F(x) = lim e!0 e 7 / 78 Let us use ∗ to denote taking the scalar part, ie P ∗ Q ≡ hPQi. -

Eigenvalues and Eigenvectors

Jim Lambers MAT 605 Fall Semester 2015-16 Lecture 14 and 15 Notes These notes correspond to Sections 4.4 and 4.5 in the text. Eigenvalues and Eigenvectors In order to compute the matrix exponential eAt for a given matrix A, it is helpful to know the eigenvalues and eigenvectors of A. Definitions and Properties Let A be an n × n matrix. A nonzero vector x is called an eigenvector of A if there exists a scalar λ such that Ax = λx: The scalar λ is called an eigenvalue of A, and we say that x is an eigenvector of A corresponding to λ. We see that an eigenvector of A is a vector for which matrix-vector multiplication with A is equivalent to scalar multiplication by λ. Because x is nonzero, it follows that if x is an eigenvector of A, then the matrix A − λI is singular, where λ is the corresponding eigenvalue. Therefore, λ satisfies the equation det(A − λI) = 0: The expression det(A−λI) is a polynomial of degree n in λ, and therefore is called the characteristic polynomial of A (eigenvalues are sometimes called characteristic values). It follows from the fact that the eigenvalues of A are the roots of the characteristic polynomial that A has n eigenvalues, which can repeat, and can also be complex, even if A is real. However, if A is real, any complex eigenvalues must occur in complex-conjugate pairs. The set of eigenvalues of A is called the spectrum of A, and denoted by λ(A). This terminology explains why the magnitude of the largest eigenvalues is called the spectral radius of A. -

Appendix a Spinors in Four Dimensions

Appendix A Spinors in Four Dimensions In this appendix we collect the conventions used for spinors in both Minkowski and Euclidean spaces. In Minkowski space the flat metric has the 0 1 2 3 form ηµν = diag(−1, 1, 1, 1), and the coordinates are labelled (x ,x , x , x ). The analytic continuation into Euclidean space is madethrough the replace- ment x0 = ix4 (and in momentum space, p0 = −ip4) the coordinates in this case being labelled (x1,x2, x3, x4). The Lorentz group in four dimensions, SO(3, 1), is not simply connected and therefore, strictly speaking, has no spinorial representations. To deal with these types of representations one must consider its double covering, the spin group Spin(3, 1), which is isomorphic to SL(2, C). The group SL(2, C) pos- sesses a natural complex two-dimensional representation. Let us denote this representation by S andlet us consider an element ψ ∈ S with components ψα =(ψ1,ψ2) relative to some basis. The action of an element M ∈ SL(2, C) is β (Mψ)α = Mα ψβ. (A.1) This is not the only action of SL(2, C) which one could choose. Instead of M we could have used its complex conjugate M, its inverse transpose (M T)−1,or its inverse adjoint (M †)−1. All of them satisfy the same group multiplication law. These choices would correspond to the complex conjugate representation S, the dual representation S,and the dual complex conjugate representation S. We will use the following conventions for elements of these representations: α α˙ ψα ∈ S, ψα˙ ∈ S, ψ ∈ S, ψ ∈ S. -

Algebra of Linear Transformations and Matrices Math 130 Linear Algebra

Then the two compositions are 0 −1 1 0 0 1 BA = = 1 0 0 −1 1 0 Algebra of linear transformations and 1 0 0 −1 0 −1 AB = = matrices 0 −1 1 0 −1 0 Math 130 Linear Algebra D Joyce, Fall 2013 The products aren't the same. You can perform these on physical objects. Take We've looked at the operations of addition and a book. First rotate it 90◦ then flip it over. Start scalar multiplication on linear transformations and again but flip first then rotate 90◦. The book ends used them to define addition and scalar multipli- up in different orientations. cation on matrices. For a given basis β on V and another basis γ on W , we have an isomorphism Matrix multiplication is associative. Al- γ ' φβ : Hom(V; W ) ! Mm×n of vector spaces which though it's not commutative, it is associative. assigns to a linear transformation T : V ! W its That's because it corresponds to composition of γ standard matrix [T ]β. functions, and that's associative. Given any three We also have matrix multiplication which corre- functions f, g, and h, we'll show (f ◦ g) ◦ h = sponds to composition of linear transformations. If f ◦ (g ◦ h) by showing the two sides have the same A is the standard matrix for a transformation S, values for all x. and B is the standard matrix for a transformation T , then we defined multiplication of matrices so ((f ◦ g) ◦ h)(x) = (f ◦ g)(h(x)) = f(g(h(x))) that the product AB is be the standard matrix for S ◦ T . -

Rules for Matrix Operations

Math 2270 - Lecture 8: Rules for Matrix Operations Dylan Zwick Fall 2012 This lecture covers section 2.4 of the textbook. 1 Matrix Basix Most of this lecture is about formalizing rules and operations that we’ve already been using in the class up to this point. So, it should be mostly a review, but a necessary one. If any of this is new to you please make sure you understand it, as it is the foundation for everything else we’ll be doing in this course! A matrix is a rectangular array of numbers, and an “m by n” matrix, also written rn x n, has rn rows and n columns. We can add two matrices if they are the same shape and size. Addition is termwise. We can also mul tiply any matrix A by a constant c, and this multiplication just multiplies every entry of A by c. For example: /2 3\ /3 5\ /5 8 (34 )+( 10 Hf \i 2) \\2 3) \\3 5 /1 2\ /3 6 3 3 ‘ = 9 12 1 I 1 2 4) \6 12 1 Moving on. Matrix multiplication is more tricky than matrix addition, because it isn’t done termwise. In fact, if two matrices have the same size and shape, it’s not necessarily true that you can multiply them. In fact, it’s only true if that shape is square. In order to multiply two matrices A and B to get AB the number of columns of A must equal the number of rows of B. So, we could not, for example, multiply a 2 x 3 matrix by a 2 x 3 matrix.