A Generative Data Augmentation Model for Enhancing Chinese Dialect Pronunciation Prediction

Chu-Cheng Lin and Richard Tzong-Han Tsai

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

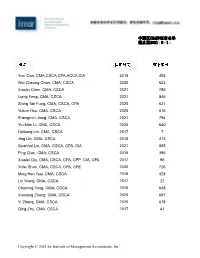

List of CMA Certificate Holders in China, as of September 1, 2021

List of CMA Certificate Holders in China, as of September 1, 2021. Yixu Cao, CMA, CSCA, CPA, ACCA, CIA 2019 492; Wai Cheung Chan, CMA, CSCA 2020 622; Xiaolin Chen, CMA, CSCA 2021 785; Liang Feng, CMA, CSCA 2021 845; Shing Tak Fung, CMA, CSCA, CPA 2020 621; Yukun Hsu, CMA, CSCA 2020 676; Shengmin Jiang, CMA, CSCA 2021 794; Yiu Man Li, CMA, CSCA 2020 640; Huikang Lin, CMA, CSCA 2017 7; Jing Lin, CMA, CSCA 2018 415; Quanhui Liu, CMA, CSCA, CPA, CIA 2021 855; Ping Qian, CMA, CSCA 2018 396; Xiaolei Qiu, CMA, CSCA, CPA, CFP, CIA, CFA 2017 96; Yufei Shan, CMA, CSCA, CPA, CFE 2020 726; Ming Han Tsai, CMA, CSCA 2018 428; Lin Wang, CMA, CSCA 2017 22; Chunling Yang, CMA, CSCA 2020 648; Xiaolong Zhang, CMA, CSCA 2020 697; Yi Zhang, CMA, CSCA 2020 678; Qing Zhu, CMA, CSCA 2017 41. Copyright © 2021 by Institute of Management Accountants, Inc. Siha A, CMA 2020 81134; Bei Ai, CMA 2020 84918; Danlu Ai, CMA 2021 94445; Fengting Ai, CMA 2019 75078; Huaqin Ai, CMA 2019 67498; Jie Ai, CMA 2021 94013; Jinmei Ai, CMA 2020 79690; Qingqing Ai, CMA 2019 67514; Weiran Ai, CMA 2021 99010; Xia Ai, CMA 2021 97218; Xiaowei Ai, CMA, CIA 2019 75739; Yizhan Ai, CMA 2021 92785; Zi Ai, CMA 2021 93990; Guanfei An, CMA 2021 99952; Haifeng An, CMA 2021 92781; Haixia An, CMA 2016 51078; Haiying An, CMA 2021 98016; Jie An, CMA 2012 38197; Jujie An, CMA 2018 58081; Jun An, CMA 2019 70068; Juntong An, CMA 2021 94474; Kewei An, CMA 2021 93137; Lanying An, CMA, CPA 2021 90699.

Characteristics of Chinese Poetic-Musical Creations

Characteristics of Chinese Poetic-Musical Creations Yan GENG [1] Abstract: The present study introduces a series of characteristics related to Chinese poetry. It shows that, together with rhythmical structure and intonation (which has a crucial role in conveying meaning), an additional, fundamental aspect of Chinese poetry lies in the latent, pictorial effect of the writing. Various genres and forms of Chinese poetry are touched upon, as well as a series of figures of speech, themes (nature, love, sadness, mythology etc.) and symbols (particularly of vegetal and animal origin), which are frequently encountered in the poems. Key-words: rhythm, intonation, system of tones, rhyme, system of writing, figures of speech 1. Introduction In his Advanced Music Theory course, Constantin Rîpă shows that “we can differentiate between two levels of the phenomenon of rhythm: the first, a general philosophical one, meaning, within the context of music, the ensemble of movements perceived, thus the macrostructural level; the second, the micro-structure where rhythm means durations … intensities and tempo … Moreover, we can say that rhythm does not exist but rather just the succession of sounds in time [does].” [2] Studies on rhythm, carried out by ethnomusicology researchers, can guide us to its genesis. A first fact that these studies point towards is the indissoluble unity of the birth process of artistic creation: poetry, music (rhythm-melody) and dance, which manifested syncretically for a very lengthy period of time. These aspects are not singular or characteristic for just one culture, as it appears that they have manifested everywhere from the very beginning of mankind. There is proof both in Chinese culture, as well as in ancient Romanian culture, that certifies the existence of a syncretic development of the arts and language.

Syllabus 1 Lín Táo 林燾 and Gěng Zhènshēng 耿振生

CHINESE 542 Introduction to Chinese Historical Phonology Spring 2005 This course is a basic introduction at the graduate level to methods and materials in Chinese historical phonology. Reading ability in Chinese is required. It is assumed that students have taken Chinese 342, 442, or the equivalent, and are familiar with articulatory phonetics concepts and terminology, including the International Phonetic Alphabet, and with general notions of historical sound change. Topics covered include the periodization of the Chinese language; the source materials for reconstructing earlier stages of the language; traditional Chinese phonological categories and terminology; fǎnqiè spellings; major reconstruction systems; the use of reference materials to determine reconstructions in these systems. The focus of the course is on Middle Chinese. Class: Mondays & Fridays 3:30 - 5:20, Savery 335 Web: http://courses.washington.edu/chin532/ Instructor: Zev Handel 245 Gowen, 543-4863 [email protected] Office hours: MF 2-3pm Grading: homework exercises 30%, quiz 5%, comprehensive test 25%, short translations 15%, annotated translation 25% Readings: Readings are available on e-reserves or in the East Asian library. Items below marked with a call number are on reserve in the East Asian Library or (if the call number starts with REF) on the reference shelves. Items marked eres are on course e-reserves. Baxter, William H. 1992. A handbook of Old Chinese phonology. (Trends in linguistics: studies and monographs, 64.) Berlin and New York: Mouton de Gruyter. PL1201.B38 1992 [eres: chapters 2, 8, 9] Baxter, William H. and Laurent Sagart. 1998. “Word formation in Old Chinese”. In New approaches to Chinese word formation: morphology, phonology and the lexicon in modern and ancient Chinese.

Glottal Stop Initials and Nasalization in Sino-Vietnamese and Southern Chinese

Glottal Stop Initials and Nasalization in Sino-Vietnamese and Southern Chinese Grainger Lanneau A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts University of Washington 2020 Committee: Zev Handel, William Boltz Program Authorized to Offer Degree: Asian Languages and Literature © Copyright 2020 Grainger Lanneau University of Washington Abstract Chair of Supervisory Committee: Professor Zev Handel, Asian Languages and Literature Middle Chinese glottal stop Ying [ʔ-] initials usually develop into zero initials with rare occasions of nasalization in modern day Sinitic [1] languages and Sino-Vietnamese. Scholars such as Edwin Pulleyblank (1984) and Jiang Jialu (2011) have briefly mentioned this development but have not yet thoroughly investigated it. There are approximately 26 Sino-Vietnamese words [2] with Ying- initials that nasalize. Scholars such as John Phan (2013: 2016) and Hilario deSousa (2016) argue that Sino-Vietnamese in part comes from a spoken interaction between Việt-Mường and Chinese speakers in Annam speaking a variety of Chinese called Annamese Middle Chinese (AMC), part of a larger dialect continuum called Southwestern Middle Chinese (SMC). Phan and deSousa also claim that SMC developed into dialects spoken in Southwestern China today (Phan, Desousa: 2016). Using data of dialects mentioned by Phan and deSousa in their hypothesis, this study investigates initial nasalization in Ying-initial words in Southwestern Chinese Languages and in the 26 Sino-Vietnamese words. Footnotes: [1] I will use the terms “Sinitic” and “Chinese” interchangeably to refer to languages and speakers of the Sinitic branch of the Sino-Tibetan language family. [2] For the sake of simplicity, I shall refer to free and bound morphemes alike as “words.”

The Chinese Script

Norman, Jerry, Chinese, Cambridge: Cambridge University Press, 1988.

3 The Chinese script

3.1 The beginnings of Chinese writing¹

The Chinese script appears as a fully developed writing system in the late Shang dynasty (fourteenth to eleventh centuries BC). From this period we have copious examples of the script inscribed or written on bones and tortoise shells, for the most part in the form of short divinatory texts. From the same period there also exist a number of inscriptions on bronze vessels of various sorts. The former type of graphic record is referred to as the oracle bone script while the latter is commonly known as the bronze script. The script of this period is already a fully developed writing system, capable of recording the contemporary Chinese language in a complete and unambiguous manner. The maturity of this early script has suggested to many scholars that it must have passed through a fairly long period of development before reaching this stage, but the few examples of writing

[Figure 3.1. Pictographs in early Chinese writing: FISH (yú), HORSE (mǎ), ELEPHANT (xiàng), COW (niú)]

[Figure 3.2. The graph for quǎn 'dog']

of this sort of graph are shown in Figure 3.1. The more truly representational a graph is, the more difficult and time-consuming it is to depict. There is a natural tendency for such graphs to become progressively simplified and stylized as a writing system matures and becomes more widely used. As a result, pictographs gradually tend to lose their obvious pictorial quality. The graph for quǎn 'dog' shown in Figure 3.2 can serve as a good illustration of this sort of development.

Japanization in the Field of Classical Chinese Dictionaries

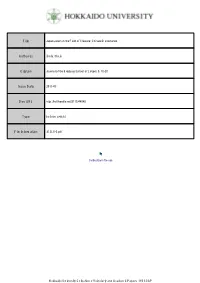

Title: Japanization in the Field of Classical Chinese Dictionaries. Author(s): Ikeda, Shoju. Citation: Journal of the Graduate School of Letters, 6, 15-25. Issue Date: 2011-03. Doc URL: http://hdl.handle.net/2115/44945. Type: bulletin (article). File Information: JGSL6-2.pdf. Hokkaido University Collection of Scholarly and Academic Papers: HUSCAP. Abstract: How did dictionaries arranged by radical undergo Japanization? In the following I shall take up for consideration the Tenrei banshō meigi, Shinsenjikyō, and Ruiju myōgi shō and consider this question by examining in particular their relationship with the original version of the Chinese Yupian, compiled in 543 by Gu Yewang of the Liang. There is much that needs to be said about early Japanese dictionaries. In this paper I have focused on their relationship with the Yupian and have discussed questions such as its position as a source among Buddhist monks and its connections with questions pertaining to radicals, in particular the manner in which the arrangement of characters under individual radicals in the Yupian was modified. (Received on December 7, 2010) 1. Dictionaries Arranged According to the Shape, Sound and Meaning of Chinese Characters and the Compilation of Early Dictionaries in Japan When considered in light of extant dictionaries, it would seem that dictionaries arranged by radical or classifier (shape) appeared first, followed by dictionaries arranged by meaning, and that dictionaries arranged by pronunciation (sound) came some time later.

Research on the Time When Ping Split Into Yin and Yang in Chinese Northern Dialect

Chinese Studies 2014. Vol.3, No.1, 19-23 Published Online February 2014 in SciRes (http://www.scirp.org/journal/chnstd) http://dx.doi.org/10.4236/chnstd.2014.31005 Research on the Time When Ping Split into Yin and Yang in Chinese Northern Dialect Ma Chuandong1*, Tan Lunhua2 1College of Fundamental Education, Sichuan Normal University, Chengdu, China 2Sichuan Science and Technology University for Employees, Chengdu, China Email: *[email protected] Received January 7th, 2014; revised February 8th, 2014; accepted February 18th, 2014 Copyright © 2014 Ma Chuandong, Tan Lunhua. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. In accordance of the Creative Commons Attribution License all Copyrights © 2014 are reserved for SCIRP and the owner of the intellectual property Ma Chuandong, Tan Lunhua. All Copyright © 2014 are guarded by law and by SCIRP as a guardian. The phonetic phenomenon “ping split into yin and yang” 平分阴阳 is one of the most important changes of Chinese tones in the early modern Chinese, which is reflected clearly in Zhongyuan Yinyun 中原音韵 by Zhou Deqing 周德清 (1277-1356) in the Yuan Dynasty. The authors of this paper think the phenomenon “ping split into yin and yang” should not have occurred so late as in the Yuan Dynasty, based on previous research results and modern Chinese dialects, making use of historical comparative method and rhyming books. The changes of tones have close relationship with the voiced and voiceless initials in Chinese, and the voiced initials have turned into voiceless in Song Dynasty, so it could not be in the Yuan Dynasty that ping split into yin and yang, but no later than the Song Dynasty.

Ideophones in Middle Chinese

KU LEUVEN FACULTY OF ARTS BLIJDE INKOMSTSTRAAT 21 BOX 3301 3000 LEUVEN, BELGIË Ideophones in Middle Chinese: A Typological Study of a Tang Dynasty Poetic Corpus Thomas Van Hoey Presented in fulfilment of the requirements for the degree of Master of Arts in Linguistics Supervisor: prof. dr. Jean-Christophe Verstraete (promotor) Academic year 2014-2015 149 431 characters Abstract (English) This M.A. thesis investigates ideophones in Tang dynasty (618-907 AD) Middle Chinese (Sinitic, Sino-Tibetan) from a typological perspective. Ideophones are defined as a set of words that are phonologically and morphologically marked and depict some form of sensory image (Dingemanse 2011b). Middle Chinese has a large body of ideophones, whose domains range from the depiction of sound, movement, visual and other external senses to the depiction of internal senses (cf. Dingemanse 2012a). There is some work on modern variants of Sinitic languages (cf. Mok 2001; Bodomo 2006; de Sousa 2008; de Sousa 2011; Meng 2012; Wu 2014), but so far, there is no encompassing study of ideophones of a stage in the historical development of Sinitic languages. The purpose of this study is to develop a descriptive model for ideophones in Middle Chinese, which is compatible with what we know about them cross-linguistically. The main research question of this study is “what are the phonological, morphological, semantic and syntactic features of ideophones in Middle Chinese?” This question is studied in terms of three parameters, viz. the parameters of form, of meaning and of use.

The Fundamentals of Chinese Historical Phonology

ChinHistPhon – MA 1st yr Basics/ 1 Bartos

The fundamentals of Chinese historical phonology

1. Old Mandarin (early modern Chinese; 14th c.)
− 中原音韵 Zhongyuan Yinyun “Rhymes of the Central Plain”, written in 1324 by 周德清 Zhou Deqing: A pronunciation guide for writers and performers of 北曲 beiqu-verse in vernacular plays.
− Arrangement:
  o 19 rhyme categories, each named with two characters, e.g. 真文, 江阳, 先天, 鱼模.
  o Within each rhyme category, words are divided according to tone category: 平声阴, 平声阳, 上声, 去声, 入声作 X 声
  o Within each tone, words are divided into homophone groups separated by circles.
  o An appendix lists pairs of characters whose pronunciation is frequently confused, e.g.: 死有史, 米有美, 因有英

The 19 Zhongyuan Yinyun rhyme categories:

Old Mandarin tones: The tone categories were the same as for modern standard Mandarin, except:
− The former 入-tone words joined the other tone categories in a more regular fashion.

2. The reconstruction of the Middle Chinese sound system

2.1. Main sources

2.1.1. Primary
– rhyme dictionaries, rhyme tables (Qieyun 切韵, Guangyun 广韵, …, Jiyun 集韵, Yunjing 韵镜, Qiyinlüe 七音略)
– a comparison of modern Chinese dialects
– shape of Chinese loanwords in ’sinoxenic’ languages (Japanese, Korean, Vietnamese)

2.1.2. Secondary
– use of poetic devices (rhyming words, metric (= tonal patterns))
– transcriptions:
  - contemporary alphabetic transcription of Chinese names/words, e.g. Brahmi, Tibetan, …
  - contemporary Chinese transcription of foreign words/names of known origin
– the content problem of the Qieyun: Is it some ‘reconstructed’ pre-Tang variety, or the language of the capital (Chang’an 长安), or a newly created norm, based on certain ‘compromises’?
– the classic problem of ‘time-span’: Qieyun: 601 … Yunjing: 1161 → Pulleyblank: the Qieyun and the rhyme tables (等韵图) reflect different varieties (both geographically, and diachronically) → Early vs.

Writing Taiwanese: the Development of Modern Written Taiwanese

SINO-PLATONIC PAPERS Number 89 January, 1999 Writing Taiwanese: The Development of Modern Written Taiwanese by Alvin Lin Victor H. Mair, Editor Sino-Platonic Papers Department of East Asian Languages and Civilizations University of Pennsylvania Philadelphia, PA 19104-6305 USA [email protected] www.sino-platonic.org SINO-PLATONIC PAPERS is an occasional series edited by Victor H. Mair. The purpose of the series is to make available to specialists and the interested public the results of research that, because of its unconventional or controversial nature, might otherwise go unpublished. The editor actively encourages younger, not yet well established, scholars and independent authors to submit manuscripts for consideration. Contributions in any of the major scholarly languages of the world, including Romanized Modern Standard Mandarin (MSM) and Japanese, are acceptable. In special circumstances, papers written in one of the Sinitic topolects (fangyan) may be considered for publication. Although the chief focus of Sino-Platonic Papers is on the intercultural relations of China with other peoples, challenging and creative studies on a wide variety of philological subjects will be entertained. This series is not the place for safe, sober, and stodgy presentations. Sino-Platonic Papers prefers lively work that, while taking reasonable risks to advance the field, capitalizes on brilliant new insights into the development of civilization. The only style-sheet we honor is that of consistency. Where possible, we prefer the usages of the Journal of Asian Studies. Sinographs (hanzi, also called tetragraphs [fangkuaizi]) and other unusual symbols should be kept to an absolute minimum. Sino-Platonic Papers emphasizes substance over form. -

UC Berkeley Dissertations, Department of Linguistics

UC Berkeley Dissertations, Department of Linguistics. Title: The Consonant System of Middle-Old Tibetan and the Tonogenesis of Tibetan. Permalink: https://escholarship.org/uc/item/18g449sj. Author: Zhang, Lian. Publication Date: 1987. eScholarship.org, powered by the California Digital Library, University of California. The Consonant System of Middle-Old Tibetan and the Tonogenesis of Tibetan, by Lian Sheng Zhang, Graduate of Central Institute for Minority Nationalities, People's Republic of China, 1966; C.Phil. (University of California) 1986. DISSERTATION submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in Linguistics in the GRADUATE DIVISION OF THE UNIVERSITY OF CALIFORNIA, BERKELEY. Approved: Chairman. Reproduced with permission of the copyright owner. Further reproduction prohibited without permission. Copyright © 1987 LIAN SHENG ZHANG. ABSTRACT This study not only tries to reconstruct the consonant system of Middle-Old Tibetan, but also provides proof for my proposition that the period of Middle-Old Tibetan (from the middle of the 7th century to the second half of the 9th century, A.D.) was the time when the original Tibetan voiced consonants were devoiced and tonogenesis occurred. I have used three kinds of source materials: 1. extant old Tibetan documents and books, as well as wooden slips and bronze or stone tablets dating from the 7th century to the 9th century; 2. 7th to 9th century transcribed (translated or transliterated) materials between Tibetan and other languages, especially Chinese transcriptions of Tibetan documents and Tibetan transcriptions of Chinese documents; 3. linguistic data from various dialects of Modern Tibetan.

Norman 1988 Chapter 3.Pdf

3 The Chinese script 3.1 The beginnings of Chinese writing¹ The Chinese script appears as a fully developed writing system in the late Shang dynasty (fourteenth to eleventh centuries BC). From this period we have copious examples of the script inscribed or written on bones and tortoise shells, for the most part in the form of short divinatory texts. From the same period there also exist a number of inscriptions on bronze vessels of various sorts. The former type of graphic record is referred to as the oracle bone script while the latter is commonly known as the bronze script. The script of this period is already a fully developed writing system, capable of recording the contemporary Chinese language in a complete and unambiguous manner. The maturity of this early script has suggested to many scholars that it must have passed through a fairly long period of development before reaching this stage, but the few examples of writing which precede the fourteenth century are unfortunately too sparse to allow any sort of reconstruction of this development.² On the basis of available evidence, however, it would not be unreasonable to assume that Chinese writing began sometime in the early Shang or even somewhat earlier in the late Xia dynasty or approximately in the seventeenth century BC (Qiu 1978, 169). From the very beginning the Chinese writing system has basically been morphemic: that is, almost every graph represents a single morpheme.