The Portals 4.0.2 Message Passing Interface

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169Á/177 www.elsevier.com/locate/epsr Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems Fangxing Li *, Robert P. Broadwater ECE Department Virginia Tech, Blacksburg, VA 24060, USA Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002 Abstract This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. # 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems 1. Introduction Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. Parallel and distributed computing has been applied More recent works [11Á/14] presented distributed to many scientific and engineering computations such as implementations for power flows or power flow based weather forecasting and nuclear simulations [1,2]. It also algorithms like optimizations and contingency analysis. has been applied to power system analysis calculations These works also targeted power transmission systems. [3Á/14]. -

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut University of Potsdam Internet Technology and Systems Group Scalability and Performance Management of Internet Applications in the Cloud A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering Faculty of Mathematics and Natural Sciences University of Potsdam By: Wesam Dawoud Supervisor: Prof. Dr. Christoph Meinel Potsdam, Germany, March 2013 This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents To my lovely wife Safaa To my lovely kids Shatha and Yazan Acknowledgements At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who sup- ported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continues support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful for Michaela Schmitz. She managed everything well to make everyones life easier. I owe a thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills. -

L22: Parallel Programming Language Features (Chapel and Mapreduce)

12/1/09 Administrative • Schedule for the rest of the semester - “Midterm Quiz” = long homework - Handed out over the holiday (Tuesday, Dec. 1) L22: Parallel - Return by Dec. 15 - Projects Programming - 1 page status report on Dec. 3 – handin cs4961 pdesc <file, ascii or PDF ok> Language Features - Poster session dry run (to see material) Dec. 8 (Chapel and - Poster details (next slide) MapReduce) • Mailing list: [email protected] December 1, 2009 12/01/09 Poster Details Outline • I am providing: • Global View Languages • Foam core, tape, push pins, easels • Chapel Programming Language • Plan on 2ft by 3ft or so of material (9-12 slides) • Map-Reduce (popularized by Google) • Content: • Reading: Ch. 8 and 9 in textbook - Problem description and why it is important • Sources for today’s lecture - Parallelization challenges - Brad Chamberlain, Cray - Parallel Algorithm - John Gilbert, UCSB - How are two programming models combined? - Performance results (speedup over sequential) 12/01/09 12/01/09 1 12/1/09 Shifting Gears Global View Versus Local View • What are some important features of parallel • P-Independence programming languages (Ch. 9)? - If and only if a program always produces the same output on - Correctness the same input regardless of number or arrangement of processors - Performance - Scalability • Global view - Portability • A language construct that preserves P-independence • Example (today’s lecture) • Local view - Does not preserve P-independent program behavior - Example from previous lecture? And what about ease -

Mellanox Scalableshmem Support the Openshmem Parallel Programming Language Over Infiniband

SOFTWARE PRODUCT BRIEF Mellanox ScalableSHMEM Support the OpenSHMEM Parallel Programming Language over InfiniBand The SHMEM programming library is a one-side communications library that supports a unique set of parallel programming features including point-to-point and collective routines, synchronizations, atomic operations, and a shared memory paradigm used between the processes of a parallel programming application. SHMEM Details API defined by the OpenSHMEM.org consortium. There are two types of models for parallel The library works with the OpenFabrics RDMA programming. The first is the shared memory for Linux stack (OFED), and also has the ability model, in which all processes interact through a to utilize Mellanox Messaging libraries (MXM) globally addressable memory space. The other as well as Mellanox Fabric Collective Accelera- HIGHLIGHTS is a distributed memory model, in which each tions (FCA), providing an unprecedented level of FEATURES processor has its own memory, and interaction scalability for SHMEM programs running over – Use of symmetric variables and one- with another processors memory is done though InfiniBand. sided communication (put/get) message communication. The PGAS model, The use of Mellanox FCA provides for collective – RDMA for performance optimizations or Partitioned Global Address Space, uses a optimizations by taking advantage of the high for one-sided communications combination of these two methods, in which each performance features within the InfiniBand fabric, – Provides shared memory data transfer process has access to its own private memory, including topology aware coalescing, hardware operations (put/get), collective and also to shared variables that make up the operations, and atomic memory multicast and separate quality of service levels for global memory space. -

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (Genn)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN) Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2 {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected] 1National Institute of Technology, Tiruchirappalli, India 2School of Engineering and Informatics, University of Sussex, Brighton, UK Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization 1. Introduction The computational performance of traditional single-core CPUs has steadily increased since its arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not compensated by its cost [2]. -

Introduction to Parallel Programming

Introduction to Parallel Programming Martin Čuma Center for High Performance Computing University of Utah [email protected] Overview • Types of parallel computers. • Parallel programming options. • How to write parallel applications. • How to compile. • How to debug/profile. • Summary, future expansion. 3/13/2009 http://www.chpc.utah.edu Slide 2 Parallel architectures Single processor: • SISD – single instruction single data. Multiple processors: • SIMD - single instruction multiple data. • MIMD – multiple instruction multiple data. Shared Memory Distributed Memory 3/13/2009 http://www.chpc.utah.edu Slide 3 Shared memory Dual quad-core node • All processors have BUS access to local memory CPU Memory • Simpler programming CPU Memory • Concurrent memory access Many-core node (e.g. SGI) • More specialized CPU Memory hardware BUS • CHPC : CPU Memory Linux clusters, 2, 4, 8 CPU Memory core nodes CPU Memory 3/13/2009 http://www.chpc.utah.edu Slide 4 Distributed memory • Process has access only BUS CPU to its local memory Memory • Data between processes CPU Memory must be communicated • More complex Node Network Node programming Node Node • Cheap commodity hardware Node Node • CHPC: Linux clusters Node Node (Arches, Updraft) 8 node cluster (64 cores) 3/13/2009 http://www.chpc.utah.edu Slide 5 Parallel programming options Shared Memory • Threads – POSIX Pthreads, OpenMP – Thread – own execution sequence but shares memory space with the original process • Message passing – processes – Process – entity that executes a program – has its own -

An Open Standard for SHMEM Implementations Introduction

OpenSHMEM: An Open Standard for SHMEM Implementations Introduction • OpenSHMEM is an effort to create a standardized SHMEM library for C , C++, and Fortran • OpenSHMEM is a Partitioned Global Address Space (PGAS) library and supports Single Program Multiple Data (SPMD) style of programming • SGI’s SHMEM API is the baseline for OpenSHMEM Specification 1.0 • One-sided communication is achieved through Symmetric variables • globals C/C++: Non-stack variables Fortran: objects in common blocks or with the SAVE attribute • dynamically allocated and managed by the OpenSHMEM Library Figure 1. Dynamic allocation of symmetric variable ‘x’ OpenSHMEM API • OpenSHMEM Specification 1.0 provides support for data transfer operations, collective and point- to-point synchronization mechanisms (barrier, fence, quiet, wait), collective operations (broadcast, collection, reduction), atomic memory operations (swap, fetch/add, increment), and distributed locks. • Open to the community for reviews and contributions. Current Status • University of Houston has developed a portable reference OpenSHMEM library. • OpenSHMEM Specification 1.0 man pages are available. • OpenSHMEM mailing list for discussions and contributions can be joined by emailing [email protected] • Website for OpenSHMEM and a Wiki for community use are available. Figure 2. University of Houston http://www.openshmem.org/ Implementation Future Work and Goals • Develop OpenSHMEM Specification 2.0 with co-operation and contribution from the OpenSHMEM community • Discuss and extend specification with important new capabilities • Improve on the OpenSHMEM reference implementation by providing support for scalable collective algorithms • Explore innovative hardware platforms . -

Parallel Programming

Parallel Programming Libraries and implementations Outline • MPI – distributed memory de-facto standard • Using MPI • OpenMP – shared memory de-facto standard • Using OpenMP • CUDA – GPGPU de-facto standard • Using CUDA • Others • Hybrid programming • Xeon Phi Programming • SHMEM • PGAS MPI Library Distributed, message-passing programming Message-passing concepts Explicit Parallelism • In message-passing all the parallelism is explicit • The program includes specific instructions for each communication • What to send or receive • When to send or receive • Synchronisation • It is up to the developer to design the parallel decomposition and implement it • How will you divide up the problem? • When will you need to communicate between processes? Message Passing Interface (MPI) • MPI is a portable library used for writing parallel programs using the message passing model • You can expect MPI to be available on any HPC platform you use • Based on a number of processes running independently in parallel • HPC resource provides a command to launch multiple processes simultaneously (e.g. mpiexec, aprun) • There are a number of different implementations but all should support the MPI 2 standard • As with different compilers, there will be variations between implementations but all the features specified in the standard should work. • Examples: MPICH2, OpenMPI Point-to-point communications • A message sent by one process and received by another • Both processes are actively involved in the communication – not necessarily at the same time • Wide variety of semantics provided: • Blocking vs. non-blocking • Ready vs. synchronous vs. buffered • Tags, communicators, wild-cards • Built-in and custom data-types • Can be used to implement any communication pattern • Collective operations, if applicable, can be more efficient Collective communications • A communication that involves all processes • “all” within a communicator, i.e. -

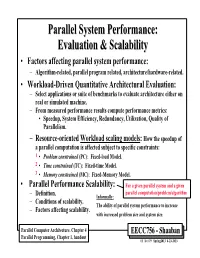

Parallel System Performance: Evaluation & Scalability

ParallelParallel SystemSystem Performance:Performance: EvaluationEvaluation && ScalabilityScalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1 • Problem constrained (PC): Fixed-load Model. 2 • Time constrained (TC): Fixed-time Model. 3 • Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: – Conditions of scalability. The ability of parallel system performance to increase – Factors affecting scalability. with increased problem size and system size. Parallel Computer Architecture, Chapter 4 EECC756 - Shaaban Parallel Programming, Chapter 1, handout #1 lec # 9 Spring2013 4-23-2013 Parallel Program Performance • Parallel processing goal is to maximize speedup: Time(1) Sequential Work Speedup = < Time(p) Max (Work + Synch Wait Time + Comm Cost + Extra Work) Fixed Problem Size Speedup Max for any processor Parallelizing Overheads • By: 1 – Balancing computations/overheads (workload) on processors -

Outro to Parallel Computing

Outro To Parallel Computing John Urbanic Pittsburgh Supercomputing Center Parallel Computing Scientist Purpose of this talk Now that you know how to do some real parallel programming, you may wonder how much you don’t know. With your newly informed perspective we will take a look at the parallel software landscape so that you can see how much of it you are equipped to traverse. How parallel is a code? ⚫ Parallel performance is defined in terms of scalability Strong Scalability Can we get faster for a given problem size? Weak Scalability Can we maintain runtime as we scale up the problem? Weak vs. Strong scaling More Processors Weak Scaling More accurate results More Processors Strong Scaling Faster results (Tornado on way!) Your Scaling Enemy: Amdahl’s Law How many processors can we really use? Let’s say we have a legacy code such that is it only feasible to convert half of the heavily used routines to parallel: Amdahl’s Law If we run this on a parallel machine with five processors: Our code now takes about 60s. We have sped it up by about 40%. Let’s say we use a thousand processors: We have now sped our code by about a factor of two. Is this a big enough win? Amdahl’s Law ⚫ If there is x% of serial component, speedup cannot be better than 100/x. ⚫ If you decompose a problem into many parts, then the parallel time cannot be less than the largest of the parts. ⚫ If the critical path through a computation is T, you cannot complete in less time than T, no matter how many processors you use . -

The Continuing Renaissance in Parallel Programming Languages

The continuing renaissance in parallel programming languages Simon McIntosh-Smith University of Bristol Microelectronics Research Group [email protected] © Simon McIntosh-Smith 1 Didn’t parallel computing use to be a niche? © Simon McIntosh-Smith 2 When I were a lad… © Simon McIntosh-Smith 3 But now parallelism is mainstream Samsung Exynos 5 Octa: • 4 fast ARM cores and 4 energy efficient ARM cores • Includes OpenCL programmable GPU from Imagination 4 HPC scaling to millions of cores Tianhe-2 at NUDT in China 33.86 PetaFLOPS (33.86x1015), 16,000 nodes Each node has 2 CPUs and 3 Xeon Phis 3.12 million cores, $390M, 17.6 MW, 720m2 © Simon McIntosh-Smith 5 A renaissance in parallel programming Metal C++11 OpenMP OpenCL Erlang Unified Parallel C Fortress XC Go Cilk HMPP CHARM++ CUDA Co-Array Fortran Chapel Linda X10 MPI Pthreads C++ AMP © Simon McIntosh-Smith 6 Groupings of || languages Partitioned Global Address Space GPU languages: (PGAS): • OpenCL • Fortress • CUDA • X10 • HMPP • Chapel • Metal • Co-array Fortran • Unified Parallel C Object oriented: • C++ AMP CSP: XC • CHARM++ Message passing: MPI Multi-threaded: • Cilk Shared memory: OpenMP • Go • C++11 © Simon McIntosh-Smith 7 Emerging GPGPU standards • OpenCL, DirectCompute, C++ AMP, … • Also OpenMP 4.0, OpenACC, CUDA… © Simon McIntosh-Smith 8 Apple's Metal • A "ground up" parallel programming language for GPUs • Designed for compute and graphics • Potential to replace OpenGL compute shaders, OpenCL/GL interop etc. • Close to the "metal" • Low overheads • "Shading" language based -

Enabling Efficient Use of UPC and Openshmem PGAS Models on GPU Clusters

Enabling Efficient Use of UPC and OpenSHMEM PGAS Models on GPU Clusters Presented at GTC ’15 Presented by Dhabaleswar K. (DK) Panda The Ohio State University E-mail: [email protected] hCp://www.cse.ohio-state.edu/~panda Accelerator Era GTC ’15 • Accelerators are becominG common in hiGh-end system architectures Top 100 – Nov 2014 (28% use Accelerators) 57% use NVIDIA GPUs 57% 28% • IncreasinG number of workloads are beinG ported to take advantage of NVIDIA GPUs • As they scale to larGe GPU clusters with hiGh compute density – hiGher the synchronizaon and communicaon overheads – hiGher the penalty • CriPcal to minimize these overheads to achieve maximum performance 3 Parallel ProGramminG Models Overview GTC ’15 P1 P2 P3 P1 P2 P3 P1 P2 P3 LoGical shared memory Shared Memory Memory Memory Memory Memory Memory Memory Shared Memory Model Distributed Memory Model ParPPoned Global Address Space (PGAS) DSM MPI (Message PassinG Interface) Global Arrays, UPC, Chapel, X10, CAF, … • ProGramminG models provide abstract machine models • Models can be mapped on different types of systems - e.G. Distributed Shared Memory (DSM), MPI within a node, etc. • Each model has strenGths and drawbacks - suite different problems or applicaons 4 Outline GTC ’15 • Overview of PGAS models (UPC and OpenSHMEM) • Limitaons in PGAS models for GPU compuPnG • Proposed DesiGns and Alternaves • Performance Evaluaon • ExploiPnG GPUDirect RDMA 5 ParPPoned Global Address Space (PGAS) Models GTC ’15 • PGAS models, an aracPve alternave to tradiPonal message passinG - Simple