Domain-Specific Languages in Software Development

Recommended publications

Mallard BASIC: Introduction and Reference

Mallard BASIC for the Amstrad PCW8256/8512 & PCW9512: Introduction and Reference. LOCOMOTIVE SOFTWARE.

The world speed record for a steam locomotive is held by LNER 4-6-2 No. 4468 “Mallard”, which hauled seven coaches weighing 240 tons over a measured quarter mile at 126 mph (202 kph) on 3rd July 1938.

© Copyright 1987 Locomotive Software Limited. All rights reserved. Neither the whole, nor any part of the information contained in this manual may be adapted or reproduced in any material form except with the prior written approval of Locomotive Software Limited. While every effort has been made to verify that this software works as described, it is not possible to test any program of this complexity under all possible circumstances. Therefore Mallard BASIC is provided ‘as is’ without warranty of any kind either express or implied. The particulars supplied in this manual are given by Locomotive Software in good faith. However, Mallard BASIC is subject to continuous development and improvement, and it is acknowledged that there may be errors or omissions in this manual. Locomotive Software reserves the right to revise this manual without notice.

Written by Locomotive Software Ltd and Ed Phipps Documentation Services. Produced and typeset electronically by Locomotive Software Ltd. Printed by Grosvenor Press (Portsmouth) Ltd. Published by Locomotive Software Ltd, Allen Court, Dorking, Surrey RH4 1YL. 2nd Edition Published 1987 (Reprinted with corrections May 1989). ISBN 185195 009 5.

Mallard BASIC is a trademark of Locomotive Software Ltd. LOCOMOTIVE is a registered trademark of Locomotive Software Ltd. AMSTRAD is a registered trademark of AMSTRAD plc. IBM is a registered trademark of International Business Machines Corp. CP/M-80, CCP/M-86 and MP/M-86 are trademarks of Digital Research Inc. MS-DOS is a trademark of Microsoft® Corporation. VT52 is a trademark of Digital Equipment Corp.

Preface: This book describes how to use Locomotive Software's Mallard BASIC interpreter to write and use BASIC programs on your Amstrad PCW.

8000 Plus Magazine Issue 17

THE BEST SELLING MAGAZINE FOR THE AMSTRAD PCW. ISSUE 17 • FEBRUARY 1988 • £1.50. Ten copies of Mini Office Professional to be won. Could AMS's new desktop publishing package be the best yet? PLUS: Complete buyer's guide to word processing, accounts, utilities and DTP software.

MASTERFILE 8000 FOR ALL AMSTRAD PCW COMPUTERS

MASTERFILE 8000, the subject of so many enquiries, is now available.

MASTERFILE 8000 is a totally new database product. While drawing on the best features of the CPC versions, it has been designed specifically for the PCW range. The resulting combination of control and power is a delight to use.

Other products offer a choice between fast but limited-capacity RAM files, and large-capacity but cumbersome fixed-length, direct-access disc files. MASTERFILE 8000 and the PCW RAM disc combine to offer high capacity with fast access to variable-length data. File capacity is limited only by the size of your RAM disc.

Any file can make RELATIONAL references to up to EIGHT read-only keyed files, the linkage being effected purely by the use of matching file and data names.

You can import/merge ASCII files (e.g. from MASTERFILE III), or export any data (e.g. to a word-processor), and merge files. For keyed files this is a true merge, not just an append operation. By virtue of export and re-import you can make a copy of a file in another key sequence. New data fields can be added at any time.

File searches combine flexibility with speed. (MASTERFILE 8000 usually waits for you, not

Acorn User 1986 Covers and Contents

BBC MICRO • ELECTRON • ATOM. £1.20. JANUARY 1986.

SPECTRAMANIA: Zippy game for Electron and BBC. LOGO SOFTWARE: Joe Telford writes a wordprocessor. BBC B+ PROGRAMS: Our advanced user's guide. BASIC AIDS: The hard facts about debuggers. WIN AT ELITE: Secrets of the Commander files. Forget the rest, our graphics program's best! COMPETITION: Three Taxan monitors to be won. COUPON OFFER: Save £20 on a Kaga NLQ printer.

ACORN USER, ISSUE No 42, JANUARY 1986

EDITOR Tony Quinn. TECHNICAL EDITOR Bruce Smith. SUBEDITOR Julie Carman. PRODUCTION ASSISTANT Kitty Milne. EDITORIAL SECRETARY Isobel Macdonald. TECHNICAL ASSISTANT David Acton. ART DIRECTOR Mike Lackersteen. ART EDITOR Liz Thompson. ART ASSISTANT Paul Holmes. ADVERTISEMENT MANAGER Simon Goode. SALES EXECUTIVE

NEW USERS
48 HINTS AND TIPS: Martin Phillips asks how compatible are Epson compatible printers?
53 FIRST BYTE: How to build up your system wisely is Tessie Revivis’ topic

BUSINESS
129 BUSINESS NEWS: All the latest for users of Acorn computers in business, plus half-price Mallard Basic offer
133 WHICH WORD TO PROCESS: Guidelines from Roger Carus on choosing a wordprocessor to fulfil your business needs
139 BASIC CHOICES: Edward Brown compares BBC Basic and Mallard Professional Basic, supplied with the Z80

EDUCATION
153 EDUCATION NEWS: Proposed European standard for educational micros raises many questions
158 THE WONDER OF WORDPROCESSING: Chris Drage and Nick Evans look at wordprocessors to help children express themselves

COBOL – an Introduction

COBOL – An Introduction

identification division. program-id. COBOL-Workshop. author. Mike4 Harris. procedure division. display "Hello OxDUG!".

My Programming Background
● Started with ZX BASIC on ZX81 and ZX Spectrum
● Moved on to Mallard BASIC (Amstrad PCW) and then to (the excellent and still my favourite) GFA BASIC (Atari ST)
● Learnt Pascal, C, Ada, C++, and OCCAM at University
● Learnt Java professionally, then never used it much
● For my sins, programmed in Perl and PHP for (far too many) years.
● Also wrote bad JavaScript, tried to learn good JavaScript, and toyed with stuff like AngularJS, Node.js and React.js
● Done some Python (nice) and Ruby (hmm)
● Basically messed about with lots of languages over the years

COBOL - History
● COmmon Business Orientated Language (Completely Obsolete Business Orientated Language?)
● “Invented” by Grace Hopper, who was the inventor of FLOW-MATIC.
● Standardised between 1959 and 1960 by our friends at the Pentagon by the group CODASYL.
● Design goal was to be platform and proprietor independent.

COBOL - History
● Appeared in 1959.
● CODASYL COBOL-60
● ANSI COBOL-68
● ANSI COBOL-74 (at this point the most used language in world)
● ANSI COBOL-85 (structured programming additions)
● ISO COBOL-2002 (object orientated additions)
● ISO COBOL-2014 (dynamic tables and modular features)

Genealogy

COBOL: pros & cons
● It's arguably very well adapted to its domain of finance and mass data processing.
● It's verbose and this helps readability of code and thus is said to be self-documenting.
● There's a LOT of legacy code, which is spaghetti-like (but then there's a lot of JavaScript like that!)

Appendix A: CP/M Software

Appendix A: CP/M Software

A major feature of the PCW8256/8512 is that it uses the CP/M operating system, which means you can use a wide range of standard software products. Note that some of this software requires a second disk drive. Because new software is continually being made available for these machines it is impossible to produce a complete list. However, it is hoped that this list (compiled in Summer 1986) will demonstrate that the PCW is more than just a Word Processor!

| TITLE | FROM | TYPE |
| --- | --- | --- |
| ABC Suite | Quest | Sales Invoicing; Sales Ledger; Stock Control; Purchase Ledger; Nominal Ledger |
| A.B.C.S. | Amsoft | Sales/Debtors Ledger; Sales Invoicing System; Stock Control System; Purchase/Creditors Ledger; Nominal/General Ledger |
| Amstrad Paymaster | Amsoft | Payroll system |
| Analyser | Quest | Report generator (see Cash Trader) |
| Assembler Plus | MicroSoft | Programming tool |
| Aztec C II | Manx | Programming tool |
| Back-up | Xitan | Copy utility |
| BASIC | Nevada | Programming language |
| BASIC Compiler | MicroSoft | Programming language |
| Brainstorm | Caxton | Ideas processor; 3-D scratchpad |
| CBASIC Compiler | Digital Research | Programming language compiler |
| C | BDS | Programming language compiler |
| C | HiSoft | Programming language compiler |
| CalcStar | MicroPro | Spreadsheet |
| Camsoft Business Software | Camsoft | Database; Nominal Ledger; Payroll; Purchase Ledger; Sales Invoicing; Sales Ledger; Stock Control |
| Cardbox; Cardbox Plus | Caxton | Database |
| Cash Trader | Quest | Accounting package (see Analyser) |
| Catalog | HiSoft | Disk organiser |
| Chit-Chat | SageSoft | Communications |
| CIS-COBOL | MicroFocus | Programming language |
| Classic Adventure | Abersoft | Text adventure game |
| COBOL | Microsoft | Programming language |
| Compact Accounts; Compact Daybook | Compact Software | Accounting packages |
| DataGem | Gemini | Database |
| DataStar | MicroPro | Database |
| dBaseII | Ashton-Tate | Database |
| Delta 1. | | |

Twillstar Computers Provides an Unbeatable Delivery Service

YOUR ONE STOP MEGA-COMPUSTORE

OUR POLICY OVER THE YEARS HAS BEEN TO SUPPLY THE VERY BEST PRODUCT AT UNBEATABLE PRICES, AND THROUGH THIS POLICY WE HAVE BUILT UP A WELL DESERVED REPUTATION. WE ARE CONTINUING WITH THIS POLICY IN OUR NEW CATALOGUE WHICH WE FEEL WILL HELP YOU GET THE VERY BEST FROM TWILLSTAR.

HUGE STOCKS: OUR WAREHOUSE OPERATION IS ONE OF THE LARGEST OF ITS KIND IN THE U.K. ALL ITEMS LISTED IN OUR PRICE LIST ARE HELD IN VOLUME STOCK TO ENSURE CONTINUOUS AVAILABILITY OF ALL THE LEADING BRANDS AND THE LATEST PRODUCTS.

INSTANT DELIVERY: USING THE MOST EFFICIENT COMPUTER CONTROLLED DISTRIBUTION SYSTEM AVAILABLE FOR DOOR TO DOOR SERVICE, TWILLSTAR COMPUTERS PROVIDES AN UNBEATABLE DELIVERY SERVICE. 24 HOURS ON MOST PRODUCTS.

AUTUMN PRICE LIST

BBC MICROCOMPUTERS & ACCESSORIES

BBC MASTER SERIES COMPUTERS

| CODE | PRODUCT | Exc. VAT (£) | Inc. VAT (£) |
| --- | --- | --- | --- |
| BC01 0006 | BBC Master 128K Computer | 395.00 | 454.25 |
| BC01 0007 | BBC Master Econet Terminal | 325.00 | 373.75 |
| BC01 0008 | Turbo Upgrade Kit | 99.09 | 113.95 |
| BC01 0009 | 512 Upgrade Kit | 346.96 | 399.00 |
| BC01 0010 | SC Upgrade | T.B.A. | T.B.A. |

BBC FURNITURE

| CODE | PRODUCT | Exc. VAT (£) | Inc. VAT (£) |
| --- | --- | --- | --- |
| BF050005 | Computer Trolley | 86.09 | 99.00 |
| BF050006 | Master Computer Trolley | 104.35 | 120.00 |
| BF050007 | BBC Security Clamp | 17.35 | 19.95 |
| BF050008 | Master Security Clamp | 20.87 | 24.00 |
| BF050009 | BBC Single Plinth, Metal | 10.00 | 11.50 |
| BF050010 | BBC Dual Plinth, Metal | 18.26 | 21.00 |
| BF050011 | Master Single Plinth, Metal | 12.17 | 14.00 |
| BF050012 | Master Dual Plinth, Metal | 20.87 | 24.00 |
| BF050013 | Copyholders | 14.78 | 17.00 |

MASTER WORD PROCESSING PACKAGE

BC01 0015 MastrwW.P.

Referat: BASIC

Report: BASIC. D. Gryschock, 28.03.99 [David Gryschock AE; U 702]

Contents:
1.) What on earth is BASIC?
2.) Where does it come from and what developments followed?
3.) Function
4.) Explanation using an example program
5.) Overview of the most important BASIC commands
6.) Sources

1.) What is BASIC?

BASIC is an abbreviation with the following meaning: B stands for Beginners, A stands for All-purpose, S stands for Symbolic, I stands for Instruction, C stands for Code. BASIC therefore means Beginners All-purpose Symbolic Instruction Code, which amounts to a general-purpose programming language for beginners. BASIC is thus intended to enable even beginners to write programs. The programming language BASIC was meant to satisfy the following requirements:
• it is easy to learn and

Computer Manuals Ltd. 021-706 6000 30 Lincoln Road, Olton Birmingham B27 6PA Trade Enquiries: Tel

COMPUTER MANUALS LTD. 021-706 6000. 30 LINCOLN ROAD, OLTON, BIRMINGHAM B27 6PA. TRADE ENQUIRIES: TEL. 021-707 5511

EXHIBITIONS

| EXHIBITION | VENUE | DATES | STAND |
| --- | --- | --- | --- |
| BOOKSELLERS TRADE FAIR | EASTBOURNE | 2 MAY | Stand 73 |
| THE IDEAL MICRO COMPUTER SHOW | KENSINGTON EXHIBITION CENTRE | 2-3 MAY | |
| ELECTRON & BBC USER SHOW | HORTICULTURAL HALL | 8-10 MAY | Stand 75 |
| OFFICE AUTOMATION SHOW | BARBICAN | 2-4 JUNE | Stand 109 |
| COMMODORE COMPUTER SHOW | NOVOTEL | 12-14 JUNE | Stand 151 |
| PC USER 87 | OLYMPIA | 30 JUNE - 2 JULY | Stand 62A |
| AMSTRAD SHOW | ALEXANDRA PALACE | 10-12 JULY | Stand C15 |
| IBM SYSTEM USER | OLYMPIA II | 2-4 SEPTEMBER | Stand G12 |
| ELECTRON & BBC USER | HORTICULTURAL HALL | 13-15 NOVEMBER | Stand 75 |

Computer Manuals Limited offer a "book by post" service to users of office Personal Computers. We offer:
* special orders for books not on our list
* quantities for training, courses, etc.
* visits with car stock for large companies with multi users
* same day despatch by first class post up to 1 p.m.

For phoned orders, queries, special requests etc, ring 021-706 6000; otherwise write to the address above, quoting small orders. ORDERS MUST BE PREPAID unless otherwise agreed (payable to Computer Manuals Ltd) by cheque/P.O./Visa/American Express/Access plus £1.70 post and packing per total order. Thank you for your interest in our books.

REF del t/f TITLE PUBLISHER ISBN AUTHOR(S) RETAIL

A.P.L
0979 Learning & Applying APL, WILEY, 0-471-90243-8, Legrand, 17.95

ACT APRICOT
2792 Adv User Guide to APRICOT, HEINEMANN, 0-434-91744-3, Rodwell, 14.95
2820 Business Computing Apricot Com, HEINEMANN, 0-434-91745-1, Rodwell, 14.

Acorn User August 1984: The One and Only BBC, Electron and Atom Magazine

SCHIZOPHRENIC! The new SABA colour TV that doubles up as a colour computer monitor (or is it the other way around?). Scientific House, Bridge Street, Sandiacre, Nottingham NG10 5BA. Telephone (0602) 394000

ROM SOFTWARE INC VAT Wanjwise 39 95 Vmw B9.00 Prinlmasler 32.95 Care la kB' 33.95 Disc Doctor 32 9b Termi (le'mmdl emirlalui) 32 95 EPSON GtaphicB Eiiansron 32 95 DFS The Upgrade 27 95 PX80 Acorn SppBcn System UpgraO B 55 00 , HCCS Fonh 39 95 BBC HCCS LOQD fofTd 67 85 ACORN ELECTRON HCCS Pascai 57 (M BBC Micm Model 9 Micro S Double HCCS E.cal 71175 BBC Modal + flOM E.pansion Board [ATPL| densily DOS RING Micro OistUpgraBe P.OA Thie Best' 43 70 BBC BBC Micro A-B Eull Upgrade 95.00 BOOKS NO VAT BBC Micro Telete.t Reeeiuer 225 00 BBC Micnj Disc Cornpanion 7 95 P BBC Micro Z80 Jnd Procasso T8A Creative Grephica 7 5Q BBC Micro 6502 2nd Procsssor I.BA Graphs & Charts 7.50 ^j3^Sr^^d«Sebig. Double Density DOS Upgrada 104.95 Usp Manual 7.50 " DOPi'lai nr HIGH Pace DFS 39 fB Fartti Manual 7 50 BCPL Manual 1500 ONLY ="°:SiH=«r DISC DRIVES INC VAT Micro LVL Dual lOOK 340 00 Discdiuering BBC 169.95 Machine Code 6 95 50p Caniaaa Pace SmalB 100K No Pace SinglB 40/80T D/Sided 282.90 BBC Micro Disk Manual 1 95 • ENl.«II.I„Ch«„,^ Dual 338.00 Drill For Pace tOOK Svstams 573.95 Tbp aaC Micro 6 95 RING FOR 't.jflf Pace Dual 40/80T D/Sided Torcb Dual 400KZ80 Disc Pac 799.00 MICRO POWER INC VAT LATEST Pace ZOOK 40T D/Sided 243.00 Killer Gorilla 7.95 HOaaiT FLOPPY DRIVES 99.95 TORCH Z8Q IJwrD.dn.d '-''"«'•' Str Cybenron Mission 7.95 INC VAT Cosmir.

Revista de Usuarios Amstrad: Electronic Publication for the Amstrad CPC and PCW - Issue 4 - December 2011

Revista de Usuarios Amstrad: electronic publication for the Amstrad CPC and PCW - Issue 4 - December 2011

Create your own ROMs. Sound formats. Cleaning diskettes. Removing copy protection. The rebirth of the PCW. 90 pages! Rediscover some of the classic CPC games.

Editorial

Two years after the last issue of the magazine, we are back in action, and with renewed energy. In this issue you will find reviews of classic and recent games, hardware recipes (how to clean and recover diskettes), programming for beginners and advanced programming for experts, all seasoned with articles to make you smile (such as the one on reasonable resemblances) and others on general knowledge (such as the one on sound formats). We will also bring you the latest hardware news for our machines, and we have saved a little space for the PCW range, which has been very active lately.

Sounds good, doesn't it? We hope you enjoy it. See you... soon?

The editorial team.

In this issue...
p. 4 CPC Today: hardware and software news
p. 9 Remember? Reviews of classic games
p. 19 Reasonable resemblances
p. 22 The CPC "Top Ten" of … Mode 2
p. 24 The Game: Hora bruja
p. 26 Interview with... Metr
p. 28 Video games: Sprites (1)
p. 32 Cleaning and recovering 3" diskettes
p. 34 Sound formats
p. 42 Learn BASIC... by creating a mini-game
p. 50 Removing copy protection
p. 56 The assembler corner
p. 57 Add music to your programs
p. 60 Header reader
p. 63 Create ROMs as if you were in first grade

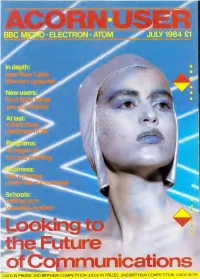

Acorn User July 1984: The One and Only BBC, Electron and Atom Magazine

ommunications

£1000 IN PRIZES - 2ND BIRTHDAY COMPETITION

COMPLETE CONTROL AT YOUR FINGERTIPS....

British made and fully guaranteed, the LVL twin joysticks are professional units with which your aim should be nothing less than total control. They will operate any programmes that have a joystick option and are written in a way that is compatible with ACORNSOFT.
• Nylon encased - Steel shafted joysticks with ball and socket joint
• Fast spring return to centre
• Graphite wiper linear potentiometers
• 12 months guarantee
• Fully compatible with the BBC model 'B' or 'A' fitted with an A/D interface and an analogue port.

Scientific House, Bridge Street, Sandiacre, Nottingham NG10 5BA. Telephone (0602) 394000

THREE NEW PROGRAMS FROM MICROTEST

SATAN'S CHALLENGE or (Nevil Rides Out)

MICROTEST FONT ROM. This exciting new ROM from Microtest will enable you to get all sorts of new characters and fonts from your BBC Computer. Once you have produced your masterpiece on the screen, all you have to do is use the inbuilt screen-dump utility to produce a hard copy on to paper. Typing '*HELP FONTS' gives a list of available fonts and the blocks of characters which they replace. Available fonts are:
*Accents Accents and miscellaneous.
*Block Small capitals.

DAIRYFILE FOR DAIRY FARMERS. Keep on that economic line between over and underfeeding! Save time recording milk yield and calculating feed amounts! Quickly decide feeding policy with the 'Monthly Calving Group' Performance Graph! Print out a recording sheet with cows in numerical order. Print out graphs or tables of