Encoding Script-Specific Writing Rules Based on the Unicode Character Set ______

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Linux on the Road

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.22 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser 2011-12-12 Revision History Revision 3.22 2011-12-12 Revised by: wh The address of the opensuse-mobile mailing list has been added, a section power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to OpenSync and Funambol syncronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made. Revision 3.21 2005-11-14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005-11-14 Revised by: wh Some typos have been fixed. Revision 3.19 2005-11-14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005-10-10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005-09-28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005-08-28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

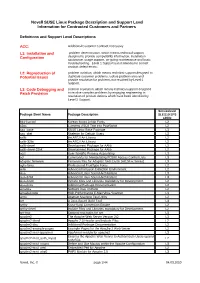

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners Definitions and Support Level Descriptions ACC: Additional Customer Contract necessary L1: Installation and problem determination, which means technical support Configuration designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors. L2: Reproduction of problem isolation, which means technical support designed to Potential Issues duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support. L3: Code Debugging and problem resolution, which means technical support designed Patch Provision to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support. Servicelevel Package Short Name Package Description SLES10 SP3 s390x 844-ksc-pcf Korean 8x4x4 Johab Fonts L2 a2ps Converts ASCII Text into PostScript L2 aaa_base SUSE Linux Base Package L3 aaa_skel Skeleton for Default Users L3 aalib An ASCII Art Library L2 aalib-32bit An ASCII Art Library L2 aalib-devel Development Package for AAlib L2 aalib-devel-32bit Development Package for AAlib L2 acct User-Specific Process Accounting L3 acl Commands for Manipulating POSIX Access Control Lists L3 adaptec-firmware Firmware files for Adaptec SAS Cards (AIC94xx Series) L3 agfa-fonts Professional TrueType Fonts L2 aide Advanced Intrusion Detection Environment L2 alsa Advanced Linux Sound Architecture L3 alsa-32bit Advanced Linux Sound Architecture L3 alsa-devel Include Files and Libraries mandatory for Development. L2 alsa-docs Additional Package Documentation. L2 amanda Network Disk Archiver L2 amavisd-new High-Performance E-Mail Virus Scanner L3 amtu Abstract Machine Test Utility L2 ant A Java-Based Build Tool L3 anthy Kana-Kanji Conversion Engine L2 anthy-devel Include Files and Libraries mandatory for Development. -

Translate's Localization Guide

Translate’s Localization Guide Release 0.9.0 Translate Jun 26, 2020 Contents 1 Localisation Guide 1 2 Glossary 191 3 Language Information 195 i ii CHAPTER 1 Localisation Guide The general aim of this document is not to replace other well written works but to draw them together. So for instance the section on projects contains information that should help you get started and point you to the documents that are often hard to find. The section of translation should provide a general enough overview of common mistakes and pitfalls. We have found the localisation community very fragmented and hope that through this document we can bring people together and unify information that is out there but in many many different places. The one section that we feel is unique is the guide to developers – they make assumptions about localisation without fully understanding the implications, we complain but honestly there is not one place that can help give a developer and overview of what is needed from them, we hope that the developer section goes a long way to solving that issue. 1.1 Purpose The purpose of this document is to provide one reference for localisers. You will find lots of information on localising and packaging on the web but not a single resource that can guide you. Most of the information is also domain specific ie it addresses KDE, Mozilla, etc. We hope that this is more general. This document also goes beyond the technical aspects of localisation which seems to be the domain of other lo- calisation documents. -

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners Definitions and Support Level Descriptions ACC: Additional Customer Contract necessary L1: Installation and problem determination, which means technical support Configuration designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors. L2: Reproduction of problem isolation, which means technical support designed to Potential Issues duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support. L3: Code Debugging and problem resolution, which means technical support designed Patch Provision to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support. Servicelevel Package Short Name Package Description SLES10 SP3 ia64 3ddiag A Tool to Verify the 3D Configuration L3 844-ksc-pcf Korean 8x4x4 Johab Fonts L2 This software changes the resolution of an available vbios 915resolution L2 mode. a2ps Converts ASCII Text into PostScript L2 aaa_base SUSE Linux Base Package L3 aaa_skel Skeleton for Default Users L3 aalib An ASCII Art Library L2 aalib-devel Development Package for AAlib L2 aalib-x86 An ASCII Art Library L2 acct User-Specific Process Accounting L3 acl Commands for Manipulating POSIX Access Control Lists L3 acpid Executes Actions at ACPI Events L3 adaptec-firmware Firmware files for Adaptec SAS Cards (AIC94xx Series) L3 agfa-fonts Professional TrueType Fonts L2 aide Advanced Intrusion Detection Environment L2 alsa Advanced Linux Sound Architecture L3 alsa-devel Include Files and Libraries mandatory for Development. -

(Cellular) Phones, Pagers, Calculators, Digital Cameras, Wearable Computing

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.21 TuxMobil Berlin Copyright © 2000−2005 Werner Heuser 2005−11−14 Revision History Revision 3.21 2005−11−14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005−11−14 Revised by: wh Some typos have been fixed. Revision 3.19 2005−11−14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005−10−10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005−09−28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005−08−28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross−compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop−mode−tools added, some URLs updated, spelling cleaned, minor changes. Revision 3.16 2005−07−15 Revised by: wh Added some information about pcmciautils, link to SoftwareSuspend2 added, localepurge for small HDDs, added chapter about FingerPrint Readers, added chapter about ExpressCards, link to Smart Battery System utils added to Batteries chapter, some additions to External Monitors chapter, links and descriptions added for: IBAM − the Intelligent Battery Monitor, lcdtest, DDCcontrol updated Credits section, minor changes. -

Secure Content Distribution Using Untrusted Servers Kevin Fu

Secure content distribution using untrusted servers Kevin Fu MIT Computer Science and Artificial Intelligence Lab in collaboration with M. Frans Kaashoek (MIT), Mahesh Kallahalla (DoCoMo Labs), Seny Kamara (JHU), Yoshi Kohno (UCSD), David Mazières (NYU), Raj Rajagopalan (HP Labs), Ron Rivest (MIT), Ram Swaminathan (HP Labs) For Peter Szolovits slide #1 January-April 2005 How do we distribute content? For Peter Szolovits slide #2 January-April 2005 We pay services For Peter Szolovits slide #3 January-April 2005 We coerce friends For Peter Szolovits slide #4 January-April 2005 We coerce friends For Peter Szolovits slide #4 January-April 2005 We enlist volunteers For Peter Szolovits slide #5 January-April 2005 Fast content distribution, so what’s left? • Clients want ◦ Authenticated content ◦ Example: software updates, virus scanners • Publishers want ◦ Access control ◦ Example: online newspapers But what if • Servers are untrusted • Malicious parties control the network For Peter Szolovits slide #6 January-April 2005 Taxonomy of content Content Many-writer Single-writer General purpose file systems Many-reader Single-reader Content distribution Personal storage Public Private For Peter Szolovits slide #7 January-April 2005 Framework • Publishers write➜ content, manage keys • Clients read/verify➜ content, trust publisher • Untrusted servers replicate➜ content • File system protects➜ data and metadata For Peter Szolovits slide #8 January-April 2005 Contributions • Authenticated content distribution SFSRO➜ ◦ Self-certifying File System Read-Only -

The Indic Fonts HOWTO

The Indic Fonts HOWTO Maninder Bali Dan Scott − Conversion from HTML to DocBook v4.1.2 (XML) Revision History Revision 0.1 2002/01/07 Revised by: mb First rendition released by Maninder Bali. This is a detailed guide on how to install and use Indic scripts (devanagri etc.) using UTF−8 encoding under GNU/Linux. This HOWTO is a work in progress. More sections regarding fonts and other related things shall be added to this HOWTO in due course of time. Special thanks to Dan Scott for conversion from HTML to DocBook v4.1.2(XML). Any feedback, sugestions, pointers, gifts, cds, BMWs will be gladly accepted. All flames will be redirected to /mnt/praises_for_thee/ for future reference. Be afraid. The Indic Fonts HOWTO Table of Contents 1. Introduction.....................................................................................................................................................1 2. Installing the IndiX system............................................................................................................................3 2.1. Installing IndiX.................................................................................................................................3 2.2. Running Simpm................................................................................................................................3 3. Devanagri Input and Output setup...............................................................................................................5 3.1. Linux console....................................................................................................................................5 -

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners Definitions and Support Level Descriptions ACC: Additional Customer Contract necessary L1: Installation and problem determination, which means technical support Configuration designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors. L2: Reproduction of problem isolation, which means technical support designed to Potential Issues duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support. L3: Code Debugging and problem resolution, which means technical support designed Patch Provision to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support. Servicelevel Package Short Name Package Description SLES10 SP3 x86 3ddiag A Tool to Verify the 3D Configuration L3 844-ksc-pcf Korean 8x4x4 Johab Fonts L2 This software changes the resolution of an available vbios 855resolution L2 mode. This software changes the resolution of an available vbios 915resolution L2 mode. a2ps Converts ASCII Text into PostScript L2 aaa_base SUSE Linux Base Package L3 aaa_skel Skeleton for Default Users L3 aalib An ASCII Art Library L2 aalib-devel Development Package for AAlib L2 acct User-Specific Process Accounting L3 acl Commands for Manipulating POSIX Access Control Lists L3 acpid Executes Actions at ACPI Events L3 adaptec-firmware Firmware files for Adaptec SAS Cards (AIC94xx Series) L3 agfa-fonts Professional TrueType Fonts L2 aide Advanced Intrusion Detection Environment L2 alsa Advanced Linux Sound Architecture L3 alsa-devel Include Files and Libraries mandatory for Development. -

Unicode-HOWTO.Pdf

The Unicode HOWTO The Unicode HOWTO Table of Contents The Unicode HOWTO........................................................................................................................................1 Bruno Haible, <[email protected]>.................................................................................................1 1. Introduction..........................................................................................................................................1 2. Display setup........................................................................................................................................1 3. Locale setup.........................................................................................................................................1 4. Specific applications............................................................................................................................1 5. Printing.................................................................................................................................................2 6. Making your programs Unicode aware................................................................................................2 7. Other sources of information...............................................................................................................2 1. Introduction..........................................................................................................................................2 1.1 Why Unicode?...................................................................................................................................2 -

The Unicode HOWTO

Content The Unicode HOWTO Bruno Haible, <[email protected]> v0.18, 4 August 2000 This document describes how to change your Linux system so it uses UTF-8 as text encoding. This is work in progress. Any tips, patches, pointers, URLs are very welcome. ConTEXt typesetting and design David Antoš, 2001 Stop Content Content 1 Introduction 4 4 Specific applications 25 1.1 Why Unicode? 4 4.1 Networking 26 1.2 Unicode encodings 5 4.1.1 telnet 26 1.2.1 Footnotes for 4.1.2 kermit 27 C/C++ developers 7 4.2 Browsers 27 1.3 Related resources 8 4.2.1 Netscape 27 4.2.2 Mozilla 28 2 Display setup 9 4.2.3 lynx 28 2.1 Linux console 9 4.2.4 w3m 29 2.2 X11 Foreign fonts 10 4.2.5 Test pages 30 2.3 X11 Unicode fonts 12 4.3 Editors 30 2.4 Unicode xterm 13 4.3.1 yudit 30 2.5 TrueType fonts 15 4.3.2 vim 31 2.6 Miscellaneous 17 4.3.3 emacs 31 4.3.4 xemacs 36 3 Locale setup 18 4.3.5 nedit 37 3.1 Files & the kernel 18 4.3.6 xedit 38 3.2 Ttys & the kernel 19 4.3.7 axe 38 3.3 General data conversion 20 4.3.8 pico 38 3.4 Locale environment 4.3.9 mined98 38 variables 21 4.4 Mailers 39 Stop 3.5 Creating the locale 4.4.1 pine 39 support files 23 4.4.2 kmail 40 3.6 Adding support to the 4.4.3 Netscape Communicator 40 C library 24 4.4.4 emacs (rmail, vm) 41 1 4.4.5 mutt 41 5.2.3 groff -Tps 66 Content 4.4.6 exmh 41 5.3 No luck with..