General Caching Is Hard: Even with Small Pages?

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


A Framework for Short Tweak Tweakable Block Cipher

Elastic-Tweak: A Framework for Short Tweak Tweakable Block Cipher. Avik Chakraborti (1), Nilanjan Datta (2), Ashwin Jha (2), Cuauhtemoc Mancillas Lopez (3), Mridul Nandi (2), Yu Sasaki (1). (1) NTT Secure Platform Laboratories, Japan; (2) Indian Statistical Institute, Kolkata, India; (3) Computer Science Department, CINVESTAV-IPN, Mexico. Abstract. Tweakable block cipher (TBC), a stronger notion than standard block ciphers, has wide-scale applications in symmetric-key schemes. At a high level, it provides flexibility in design and (possibly) better security bounds. In multi-keyed applications, a TBC with short tweak values can be used to replace multiple keys. However, the existing TBC construction frameworks, including TWEAKEY and XEX, are designed for general purpose tweak sizes. Specifically, they are not optimized for short tweaks, which might render them inefficient for certain resource constrained applications. So a dedicated paradigm to construct short-tweak TBCs (tBC) is highly desirable. In this paper, we present a dedicated framework, called the Elastic-Tweak framework (ET in short), to convert any reasonably secure SPN block cipher into a secure tBC. We apply the ET framework on GIFT and AES to construct efficient tBCs, named TweGIFT and TweAES. We present hardware and software results to show that the performance overheads for these tBCs are minimal. We perform comprehensive security analysis and observe that TweGIFT and TweAES provide sufficient security without any increase in the number of block cipher rounds when compared to GIFT and AES.

The Case of Robert Gober = Der Fall Robert Gober

The Case of Robert Gober = Der Fall Robert Gober. Author(s): Liebmann, Lisa / Nansen. Object type: Article. Journal: Parkett: the Parkett series with contemporary artists = Die Parkett-Reihe mit Gegenwartskünstlern. Volume (Year): - (1989). Issue 21: Collaboration Alex Katz. PDF created on: 27.09.2021. Persistent link: http://doi.org/10.5169/seals-680389

Terms of use. The ETH Library is the provider of the digitized journals. It holds no copyright in the contents of the journals. The rights generally lie with the publishers. The documents published on the e-periodica platform are freely available for non-commercial purposes in teaching and research and for private use. Individual files or printouts from this offering may be passed on together with these terms of use and the correct attribution of origin. Publishing images in print and online publications is permitted only with the prior consent of the rights holders. The systematic storage of parts of the electronic offering on other servers likewise requires the written consent of the rights holders.

Disclaimer. All information is provided without guarantee of completeness or accuracy. No liability is accepted for damage arising from the use of information from this online offering or from the absence of information. This also applies to third-party content accessible through this offering.

A service of the ETH Library, ETH Zürich, Rämistrasse 101, 8092 Zürich, Switzerland, www.library.ethz.ch, http://www.e-periodica.ch

LISA LIEBMANN. THE CASE OF ROBERT GOBER. Robert Gober, Untitled (Two Basins) / Ohne Titel (Zwei Becken), 1985, plaster, wood, wire lath, steel, semi-gloss enamel paint, 2 pieces / Gips, Holz, Maschendraht, Stahl, Seidenglanz-Emailfarbe, 2-teilig, overall / zusammen: 30 x 84 x 27" / 76 x 213 x 68 cm.

Tu; ?F/75 A Zerotime Detector for Nuclear

TU; ?F/75. A ZEROTIME DETECTOR FOR NUCLEAR FRAGMENTS USING CHANNEL ELECTRON MULTIPLIER PLATES. Bo Sundqvist, Tandem Accelerator Laboratory, Uppsala, Sweden.

ABSTRACT: The literature on zerotime detectors which use the emission of secondary electrons from a thin foil is reviewed. The construction of a zerotime detector using multiplication of the secondary electrons with two Mullard channel electron multiplier plates (CEMP) in tandem is described. Results of tests of such a detector with α particles from a natural α source are given. Total time resolutions of about 200 ps (FWHM) with a Si(Sb) detector as the stop detector have been achieved. The contribution from the zerotime detector is estimated to be less than 150 ps (FWHM). The application of this detector technique to the construction of a heavy-ion spectrometer and a Be detector is discussed. This work was supported by the Swedish Atomic Research Council.

CONTENTS: 1 Introduction; 2 A review of the literature on zerotime detectors using thin electron emitting foils; 3 The channel electron multiplier plate (CEMP), and its use in zerotime detectors; 4 The construction of a zerotime detector using CEMP multiplication of secondary emitted electrons from a thin foil; 5 Tests of the SEEZT-detector and discussion of results; 6 Future applications of this detector technique; Acknowledgements; References; Table captions; Tables; Figure captions; Figures.

1 INTRODUCTION. Interest has recently been growing rapidly in complex nuclear reactions in which the ingoing particles induce many different reactions. To be able to study a particular reaction, the fragments produced must be identified, i.e. the mass (M), the nuclear charge (Z) and the energy (E) of the different fragments have to be determined.

5892 Cisco Category: Standards Track August 2010 ISSN: 2070-1721

Internet Engineering Task Force (IETF) P. Faltstrom, Ed. Request for Comments: 5892 Cisco Category: Standards Track August 2010 ISSN: 2070-1721 The Unicode Code Points and Internationalized Domain Names for Applications (IDNA) Abstract This document specifies rules for deciding whether a code point, considered in isolation or in context, is a candidate for inclusion in an Internationalized Domain Name (IDN). It is part of the specification of Internationalizing Domain Names in Applications 2008 (IDNA2008). Status of This Memo This is an Internet Standards Track document. This document is a product of the Internet Engineering Task Force (IETF). It represents the consensus of the IETF community. It has received public review and has been approved for publication by the Internet Engineering Steering Group (IESG). Further information on Internet Standards is available in Section 2 of RFC 5741. Information about the current status of this document, any errata, and how to provide feedback on it may be obtained at http://www.rfc-editor.org/info/rfc5892. Copyright Notice Copyright (c) 2010 IETF Trust and the persons identified as the document authors. All rights reserved. This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (http://trustee.ietf.org/license-info) in effect on the date of publication of this document. Please review these documents carefully, as they describe your rights and restrictions with respect to this document. Code Components extracted from this document must include Simplified BSD License text as described in Section 4.e of the Trust Legal Provisions and are provided without warranty as described in the Simplified BSD License. -

Proexr Manual

ProEXR: Advanced OpenEXR plug-ins. ProEXR by Brendan Bolles, Version 2.5, November 4, 2019. fnord software, 159 Jasper Place, San Francisco, CA 94133, www.fnordware.com. For support, comments, feature requests, and insults, send email to [email protected] or participate in the After Effects email list, available through www.media-motion.tv.

Plain English License Agreement. These plug-ins are free! Use them, share them with your friends, include them on free CDs that ship with magazines, whatever you want. Just don’t sell them, please. And because they’re free, there is no warranty that they work well or work at all. They may crash your computer, erase all your work, get you fired from your job, sleep with your spouse, and otherwise ruin your life. So test first and use them at your own risk. But in the fortunate event that all that bad stuff doesn’t happen, enjoy! © 2007–2019 fnord. All rights reserved.

About ProEXR. Industrial Light and Magic’s (ILM’s) OpenEXR format has quickly gained wide adoption in the high-end world of computer graphics. It’s now the preferred output format for most 3D renderers and is starting to become the standard for digital film scanning and printing too. But while many programs have basic support for the format, hardly any provide full access to all of its capabilities. And OpenEXR is still being developed: new compression strategies and other features are being added while some big application developers are not interested in keeping up. And that’s where ProEXR comes in.

1 Symbols (2286)

1 Symbols (2286)

| USV | Symbol | Macro(s) | Description |
|---|---|---|---|
| 0009 | | `\textHT` | `<control>` |
| 000A | | `\textLF` | `<control>` |
| 000D | | `\textCR` | `<control>` |
| 0022 | ” | `\textquotedbl` | QUOTATION MARK |
| 0023 | `#` | `\texthash`, `\textnumbersign` | NUMBER SIGN |
| 0024 | `$` | `\textdollar` | DOLLAR SIGN |
| 0025 | `%` | `\textpercent` | PERCENT SIGN |
| 0026 | `&` | `\textampersand` | AMPERSAND |
| 0027 | ’ | `\textquotesingle` | APOSTROPHE |
| 0028 | `(` | `\textparenleft` | LEFT PARENTHESIS |
| 0029 | `)` | `\textparenright` | RIGHT PARENTHESIS |
| 002A | `*` | `\textasteriskcentered` | ASTERISK |
| 002B | `+` | `\textMVPlus` | PLUS SIGN |
| 002C | `,` | `\textMVComma` | COMMA |
| 002D | `-` | `\textMVMinus` | HYPHEN-MINUS |
| 002E | `.` | `\textMVPeriod` | FULL STOP |
| 002F | `/` | `\textMVDivision` | SOLIDUS |
| 0030 | `0` | `\textMVZero` | DIGIT ZERO |
| 0031 | `1` | `\textMVOne` | DIGIT ONE |
| 0032 | `2` | `\textMVTwo` | DIGIT TWO |
| 0033 | `3` | `\textMVThree` | DIGIT THREE |
| 0034 | `4` | `\textMVFour` | DIGIT FOUR |
| 0035 | `5` | `\textMVFive` | DIGIT FIVE |
| 0036 | `6` | `\textMVSix` | DIGIT SIX |
| 0037 | `7` | `\textMVSeven` | DIGIT SEVEN |
| 0038 | `8` | `\textMVEight` | DIGIT EIGHT |
| 0039 | `9` | `\textMVNine` | DIGIT NINE |
| 003C | `<` | `\textless` | LESS-THAN SIGN |
| 003D | `=` | `\textequals` | EQUALS SIGN |
| 003E | `>` | `\textgreater` | GREATER-THAN SIGN |
| 0040 | `@` | `\textMVAt` | COMMERCIAL AT |
| 005C | `\` | `\textbackslash` | REVERSE SOLIDUS |
| 005E | `^` | `\textasciicircum` | CIRCUMFLEX ACCENT |
| 005F | `_` | `\textunderscore` | LOW LINE |
| 0060 | ‘ | `\textasciigrave` | GRAVE ACCENT |
| 0067 | `g` | `\textg` | LATIN SMALL LETTER G |
| 007B | `{` | `\textbraceleft` | LEFT CURLY BRACKET |
| 007C | `\|` | `\textbar` | VERTICAL LINE |
| 007D | `}` | `\textbraceright` | RIGHT CURLY BRACKET |
| 007E | `~` | `\textasciitilde` | TILDE |
| 00A0 | | `\nobreakspace` | NO-BREAK SPACE |
| 00A1 | ¡ | `\textexclamdown` | INVERTED EXCLAMATION MARK |
| 00A2 | ¢ | `\textcent` | CENT SIGN |
| 00A3 | £ | `\textsterling` | POUND SIGN |
| 00A4 | ¤ | `\textcurrency` | CURRENCY SIGN |
| 00A5 | ¥ | `\textyen` | YEN SIGN |
| 00A6 | | | |

Three Generations of Italians: Interview with Mario Pantano by Norma DiLibero

Rhode Island College, Digital Commons @ RIC. Three Generations of Italians, Ethnic Heritage Studies Project. 2-20-1979. Three Generations of Italians: Interview with Mario Pantano by Norma DiLibero. Mario Pantano. Follow this and additional works at: https://digitalcommons.ric.edu/italians. Part of the Social and Cultural Anthropology Commons.

Recommended Citation: Pantano, Mario, "Three Generations of Italians: Interview with Mario Pantano by Norma DiLibero" (1979). Three Generations of Italians. 31. https://digitalcommons.ric.edu/italians/31

This Article is brought to you for free and open access by the Ethnic Heritage Studies Project at Digital Commons @ RIC. It has been accepted for inclusion in Three Generations of Italians by an authorized administrator of Digital Commons @ RIC. For more information, please contact [email protected].

Topics: Life in Italy as a child, in the service and married; Financial decisions and plans for America; Entering a new country with no money, relatives or friends; Examining the job market, saving money to send back home; Work and saving money so that the rest of the family could come; Arrival of family; Job change; Educating of children in U.S.; Death of first wife and second marriage; Fulfillment through children and grandchildren; Gratitude for living in U.S.

Oral History Interview with MARIO PANTANO, INTERVIEWEE. February 20, 1979, Providence, Rhode Island. By Norma A. DiLibero, Interviewer.

I am happy to introduce Mr. Mario Pantano, a retired insurance agent for Prudential Life Insurance Company. Mr. Pantano has led an active and rich life in America and today he is gracious enough to give us a deeper understanding of some of the struggles he encountered as a new immigrant to the shores of the United States.

Fonts for Latin Paleography

FONTS FOR LATIN PALEOGRAPHY. Capitalis elegans, capitalis rustica, uncialis, semiuncialis, antiqua cursiva romana, merovingia, insularis majuscula, insularis minuscula, visigothica, beneventana, carolina minuscula, gothica rotunda, gothica textura prescissa, gothica textura quadrata, gothica cursiva, gothica bastarda, humanistica. User's manual, 5th edition, 2 January 2017. Juan-José Marcos [email protected], Professor of Classics, Plasencia (Cáceres), Spain. Designer of fonts for ancient scripts and linguistics. ALPHABETUM Unicode font: http://guindo.pntic.mec.es/jmag0042/alphabet.html. PALEOGRAPHIC fonts: http://guindo.pntic.mec.es/jmag0042/palefont.html

TABLE OF CONTENTS: Introduction; Epigraphy and Paleography; The Roman majuscule book-hand; Square Capitals (capitalis elegans); Rustic Capitals (capitalis rustica); Uncial script (uncialis); Old Roman cursive (antiqua cursiva romana); New Roman cursive (nova cursiva romana); Half-uncial or Semi-uncial (semiuncialis); Post-Roman scripts or national hands; Germanic script (scriptura germanica); Merovingian minuscule (merovingia, luxoviensis minuscula); Visigothic minuscule (visigothica); Lombardic and Beneventan scripts (beneventana); Insular scripts; Insular Half-uncial or Insular majuscule (insularis majuscula); Insular minuscule or pointed hand (insularis minuscula); Caroline minuscule (carolingia minuscula); Gothic script (gothica prescissa, quadrata, rotunda, cursiva, bastarda); Humanist writing (humanistica antiqua); Epilogue; Bibliography and resources in the internet; Price of the paleographic set of fonts.

INTRODUCTION. The following pages will give you short descriptions and visual examples of Latin lettering which can be imitated through my package of "Paleographic fonts", closely based on historical models, and specifically designed to reproduce digitally the main Latin handwritings used from the 3rd to the 15th century.

Periodico Tchê Química

PERIÓDICO TCHÊ QUÍMICA. ORIGINAL ARTICLE. COMPARAÇÃO DE ABORDAGENS CIRÚRGICAS E NÃO CIRÚRGICAS AO TRAUMA ESPLÊNICO / COMPARISON OF SURGICAL AND NON-SURGICAL APPROACHES TO SPLENIC TRAUMA. MOUSAVIE, Seyed Hamzeh (1); BEIGI RIZI, Kamran (2); HOSSEINPOUR, Parisa (3); NEGAHI, Ali Reza* (1). (1) Hazrat Rasoul Medical Complex, Iran University of Medical Sciences, Tehran, Iran; (2) Department of Surgery, Rasool-Akram Hospital, Iran University of Medical Sciences, Tehran, Iran; (3) Student Research Committee, School of Medicine, Iran University of Medical Sciences, Tehran, Iran. * Corresponding author e-mail: [email protected]. Received 24 August 2019; received in revised form 13 January 2020; accepted 02 February 2020.

ABSTRACT. Loss of the spleen leads to an increased lifetime risk of sepsis, pyelonephritis, pneumonia, and pulmonary embolism in patients with splenic trauma. Given the sensitivity of the spleen and the importance of appropriate therapies for spastic trauma, this study aimed to determine the consequences of spinal cord trauma under different therapeutic methods. This retrospective cohort study was carried out on patients with splenic trauma who were referred to Rasool Akram Hospital in Tehran, Iran, during 2011-2017. All medical records of 133 patients with splenic trauma were collected between 2011 and 2017. Data were collected on ultrasonography and computed tomography or other diagnostic methods for the patients admitted to the surgical ward. Finally, patients with splenic trauma managed surgically were compared with those managed non-surgically. Surgical and non-surgical approaches were used in 80% (n = 104) and 20% (n = 26) of the individuals, respectively. There was a significant difference between the two groups in length of stay in the intensive care unit and in total duration of hospitalization.

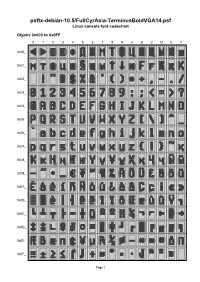

psftx-debian-10.5/FullCyrAsia-TerminusBoldVGA14.psf Linux Console Font Codechart

psftx-debian-10.5/FullCyrAsia-TerminusBoldVGA14.psf Linux console font codechart

[Glyph charts: glyphs 0x000 to 0x0FF and glyphs 0x100 to 0x1FF, each a 16 × 16 grid with columns 0 to F]

Font information

- Filename: psftx-debian-10.5/FullCyrAsia-TerminusBoldVGA14.psf
- PSF version: 1
- Glyph size: 8 × 14 pixels
- Glyph count: 512
- Unicode font: Yes (mapping table present)

Unicode mappings

- 0x000 U+25C0 BLACK LEFT-POINTING TRIANGLE
- 0x001 U+25B6 BLACK RIGHT-POINTING TRIANGLE
- 0x002 U+00A9 COPYRIGHT SIGN
- 0x003 U+00AE REGISTERED SIGN
- 0x004 U+2666 BLACK DIAMOND SUIT
- 0x005 U+0414 CYRILLIC CAPITAL LETTER DE
- 0x006 U+0416 CYRILLIC CAPITAL LETTER ZHE
- 0x007 U+041C CYRILLIC CAPITAL LETTER EM
- 0x008 U+0422 CYRILLIC CAPITAL LETTER TE
- 0x009 U+0424 CYRILLIC CAPITAL LETTER EF
- 0x015 U+00A7 SECTION SIGN
- 0x016 U+0449 CYRILLIC SMALL LETTER SHCHA
- 0x017 U+044B CYRILLIC SMALL LETTER YERU
- 0x018 U+2191 UPWARDS ARROW
- 0x019 U+2193 DOWNWARDS ARROW
- 0x01A U+044E CYRILLIC SMALL LETTER YU
- 0x01B U+0492 CYRILLIC CAPITAL LETTER GHE WITH STROKE
- 0x01C U+0493 CYRILLIC SMALL LETTER GHE WITH STROKE
- 0x01D U+0496 CYRILLIC CAPITAL LETTER ZHE WITH DESCENDER
- 0x01E U+0497 CYRILLIC SMALL LETTER ZHE WITH DESCENDER
- 0x01F U+049A CYRILLIC CAPITAL LETTER KA WITH DESCENDER
- 0x020 U+0020 SPACE
- 0x021 U+0021 EXCLAMATION MARK
- 0x022 U+0022 QUOTATION MARK
- 0x023 U+0023 NUMBER SIGN
- 0x024 U+0024 DOLLAR SIGN

Maximizing the Profit for Cache Replacement in a Transcoding Proxy

MAXIMIZING THE PROFIT FOR CACHE REPLACEMENT IN A TRANSCODING PROXY. Hao-Ping Hung and Ming-Syan Chen, Graduate Institute of Communication Engineering, National Taiwan University. E-mail: [email protected], [email protected]

ABSTRACT. Recent technology advances in multimedia communication have ushered in a new era of personal communication. Users can ubiquitously access the Internet via various mobile devices. For the mobile devices featured with lower-bandwidth network connectivity, transcoding can be used to reduce the object size by lowering the quality of a multimedia object. In this paper, we focus on the cache replacement policy in a transcoding proxy, which is a proxy server responsible for transcoding the object and reducing the network traffic. Based on the architecture in prior works, we propose a Maximum Profit Replacement algorithm, abbreviated as MPR. MPR performs cache replacement according to the content ...

... Effect) in [3] can be viewed as a greedy algorithm. Note that the greedy algorithm only performs well in the fractional knapsack problem. In order to improve the performance of AE, we propose a Maximum Profit Replacement algorithm, abbreviated as MPR. Given a specific cache size constraint, we first calculate the caching priority by considering the fetching delay, the reference rate, and the item size of the objects. By using the concept of dynamic programming [7], the caching candidate set DH, which contains the objects with high caching priority, will be determined. Extended from the conventional two running parameters in solving the 0-1 knapsack problem, the proposed procedure DP (standing for Dynamic Programming) comprises three parameters to retain the information of the cache size, the object id and the version id.

ISO/IEC 10646:2011 FDIS

Proposed Draft Amendment (PDAM) 2, ISO/IEC 10646:2012/Amd.2: 2012 (E). Information technology - Universal Coded Character Set (UCS) - AMENDMENT 2: Caucasian Albanian, Psalter Pahlavi, Old Hungarian, Mahajani, Grantha, Modi, Pahawh Hmong, Mende, and other characters

Page 22, Sub-clause 16.3 Format characters. Insert the following entry in the list of format characters: 061C ARABIC LETTER MARK; 1107F BRAHMI NUMBER JOINER.

Page 23, Sub-clause 16.5 Variation selectors and variation sequences. Remove the first sentence of the third paragraph (starting with ‘No variation sequences using characters’). Insert the following text at the end of the sub-clause.

The following list provides a list of variation sequences corresponding to the use of appropriate variation selectors with allowed pictographic symbols. The range of presentations may include a traditional black and white text style, using FE0E VARIATION SELECTOR-15, or an ‘emoji’ style, using FE0F VARIATION SELECTOR-16, whose presentation often involves color/grayscale and/or animation.

| Sequence (UID notation) | Description of sequence |
|---|---|
| `<0023, FE0E, 20E3>` `<0023, FE0F, 20E3>` | NUMBER SIGN inside a COMBINING ENCLOSING KEYCAP |
| `<0030, FE0E, 20E3>` `<0030, FE0F, 20E3>` | DIGIT ZERO inside a COMBINING ENCLOSING KEYCAP |
| `<0031, FE0E, 20E3>` `<0031, FE0F, 20E3>` | DIGIT ONE inside a COMBINING ENCLOSING KEYCAP |
| `<0032, FE0E, 20E3>` `<0032, FE0F, 20E3>` | DIGIT TWO inside a COMBINING ENCLOSING KEYCAP |
| `<0033, FE0E, 20E3>` `<0033, FE0F, 20E3>` | DIGIT THREE inside a COMBINING ENCLOSING KEYCAP |
| `<0034, FE0E, 20E3>` | DIGIT FOUR inside a COMBINING |