Differentiating Under the Integral Sign

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


MATH 305 Complex Analysis, Spring 2016 Using Residues to Evaluate Improper Integrals Worksheet for Sections 78 and 79

MATH 305 Complex Analysis, Spring 2016 Using Residues to Evaluate Improper Integrals Worksheet for Sections 78 and 79 One of the interesting applications of Cauchy's Residue Theorem is to find exact values of real improper integrals. The idea is to integrate a complex rational function around a closed contour $C$ that can be arbitrarily large. As the size of the contour becomes infinite, the piece in the complex plane (typically an arc of a circle) contributes 0 to the integral, while the part remaining covers the entire real axis (e.g., an improper integral from $-\infty$ to $\infty$). An Example Let us use residues to derive the formula $\int_0^\infty \frac{x^2}{x^4 + 1}\,dx = \frac{\sqrt{2}\,\pi}{4}$ (1). Note the somewhat surprising appearance of $\pi$ for the value of this integral. First, let $f(z) = \frac{z^2}{z^4 + 1}$ and let $C = L_R + C_R$ be the contour that consists of the line segment $L_R$ on the real axis from $-R$ to $R$, followed by the semi-circle $C_R$ of radius $R$ traversed CCW (see figure below). Note that $C$ is a positively oriented, simple, closed contour. We will assume that $R > 1$. Next, notice that $f(z)$ has two singular points (simple poles) inside $C$. Call them $z_0$ and $z_1$, as shown in the figure. By Cauchy's Residue Theorem, we have $\oint_C f(z)\,dz = 2\pi i \left( \operatorname{Res}_{z=z_0} f(z) + \operatorname{Res}_{z=z_1} f(z) \right)$. On the other hand, we can parametrize the line segment $L_R$ by $z = x$, $-R \le x \le R$, so that $\oint_C f(z)\,dz = \int_{-R}^{R} \frac{x^2}{x^4 + 1}\,dx + \int_{C_R} \frac{z^2}{z^4 + 1}\,dz$, since $C = L_R + C_R$.

Section 8.8: Improper Integrals

Section 8.8: Improper Integrals One of the main applications of integrals is to compute the areas under curves, as you know. A geometric question. But there are some geometric questions which we do not yet know how to do by calculus, even though they appear to have the same form. Consider the curve $y = 1/x^2$. We can ask, what is the area of the region under the curve and right of the line $x = 1$? We have no reason to believe this area is finite, but let's ask. Now no integral will compute this: we have to integrate over a bounded interval. Nonetheless, we don't want to throw up our hands. So note that $\int_1^b (1/x^2)\,dx = (-1/x)\big|_1^b = 1 - 1/b$. In other words, as $b$ gets larger and larger, the area under the curve and above $[1, b]$ gets larger and larger; but note that it gets closer and closer to 1. Thus, our intuition tells us that the area of the region we're interested in is exactly 1. More formally: $\lim_{b \to \infty} (1 - 1/b) = 1$. We can rewrite that as $\lim_{b \to \infty} \int_1^b (1/x^2)\,dx$. Indeed, in general, if we want to compute the area under $y = f(x)$ and right of the line $x = a$, we are computing $\lim_{b \to \infty} \int_a^b f(x)\,dx$. ASK: Does this limit always exist? Give some situations where it does not exist. They'll give something that blows up.

Conjugate Trigonometric Integrals! by R

SOME THEOREMS ON FOURIER TRANSFORMS AND CONJUGATE TRIGONOMETRIC INTEGRALS BY R. P. BOAS, JR. 1. Introduction. Functions whose Fourier transforms vanish for large values of the argument have been studied by Paley and Wiener. It is natural, then, to ask what properties are possessed by functions whose Fourier transforms vanish over a finite interval. The two classes of functions are evidently closely related, since a function whose Fourier transform vanishes on $(-A, A)$ is the difference of two suitably chosen functions, one of which has its Fourier transform vanishing outside $(-A, A)$. In this paper, however, we obtain, for a function having a Fourier transform vanishing over a finite interval, a criterion which does not seem to be trivially related to known results. It is stated in terms of a transform which is closely related to the Hilbert transform, and which may be written in the simplest (and probably most interesting) case, (1) $f^*(x) = \frac{1}{\pi} \int_0^\infty \frac{f(x+t) - f(x-t)}{t^2} \sin t \, dt$. With this notation, we shall show that a necessary and sufficient condition for a function $f(x)$ belonging to $L^2(-\infty, \infty)$ to have a Fourier transform vanishing almost everywhere on $(-1, 1)$ is that $f^{**}(x) = -f(x)$ almost everywhere; $f^{**}(x)$ is the result of applying the transform (1) to $f^*(x)$. The result holds also if $(\sin t)/t$ is replaced in the integrand in (1) by a certain more general function $H(t)$. As a preliminary result, we discuss the effect of the general transform $f^*(x)$ on a trigonometric Stieltjes integral, (2) $f(x) = \int_{-R}^{R} e^{ixt} \, d\alpha(t)$, where $\alpha(t)$ is a normalized complex-valued function of bounded variation.

Leibniz's Rule and Fubini's Theorem Associated with Hahn Difference

ISSN 2347-1921 Volume 12 Number 06 Journal of Advances in Mathematics Leibniz's rule and Fubini's theorem associated with Hahn difference operators Alaa E. Hamza, S. D. Makharesh. Jeddah University, Department of Mathematics, Saudi Arabia; Cairo University, Department of Mathematics, Giza, Egypt. E-mail: [email protected] Cairo University, Department of Mathematics, Giza, Egypt. E-mail: [email protected] ABSTRACT In 1945, Wolfgang Hahn introduced his difference operator $D_{q,\omega}$, which is defined by $D_{q,\omega} f(t) = \frac{f(qt + \omega) - f(t)}{(qt + \omega) - t}$, $t \neq \omega_0$, where $\omega_0 = \frac{\omega}{1 - q}$ with $0 < q < 1$, $\omega > 0$. In this paper, we establish Leibniz's rule and Fubini's theorem associated with this Hahn difference operator. Keywords. $q,\omega$-difference operator; $q,\omega$-integral; $q,\omega$-Leibniz rule; $q,\omega$-Fubini's theorem. 1 Introduction The Hahn difference operator is defined by $D_{q,\omega} f(t) = \frac{f(qt + \omega) - f(t)}{(qt + \omega) - t}$, $t \neq \omega_0$, (1) where $q \in (0, 1)$ and $\omega > 0$ are fixed, see [2]. This operator unifies and generalizes two well-known difference operators. The first is the Jackson $q$-difference operator, which is defined by $D_q f(t) = \frac{f(qt) - f(t)}{qt - t}$, $t \neq 0$, (2) see [3, 4, 5, 6]. The second difference operator which Hahn's operator generalizes is the forward difference operator $\Delta_\omega f(t) = \frac{f(t + \omega) - f(t)}{(t + \omega) - t}$, $t \in \mathbb{R}$, (3) where $\omega$ is a fixed positive number, see [9, 10, 13, 14].

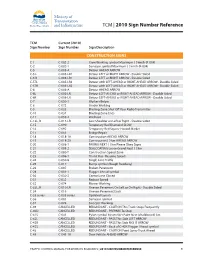

2010 Sign Number Reference for Traffic Control Manual

TCM | 2010 Sign Number Reference TCM Current (2010) Sign Number Sign Number Sign Description CONSTRUCTION SIGNS C-1 C-002-2 Crew Working symbol Maximum ( ) km/h (R-004) C-2 C-002-1 Surveyor symbol Maximum ( ) km/h (R-004) C-5 C-005-A Detour AHEAD ARROW C-5 L C-005-LR1 Detour LEFT or RIGHT ARROW - Double Sided C-5 R C-005-LR1 Detour LEFT or RIGHT ARROW - Double Sided C-5TL C-005-LR2 Detour with LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided C-5TR C-005-LR2 Detour with LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided C-6 C-006-A Detour AHEAD ARROW C-6L C-006-LR Detour LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided C-6R C-006-LR Detour LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided C-7 C-050-1 Workers Below C-8 C-072 Grader Working C-9 C-033 Blasting Zone Shut Off Your Radio Transmitter C-10 C-034 Blasting Zone Ends C-11 C-059-2 Washout C-13L, R C-013-LR Low Shoulder on Left or Right - Double Sided C-15 C-090 Temporary Red Diamond SLOW C-16 C-092 Temporary Red Square Hazard Marker C-17 C-051 Bridge Repair C-18 C-018-1A Construction AHEAD ARROW C-19 C-018-2A Construction ( ) km AHEAD ARROW C-20 C-008-1 PAVING NEXT ( ) km Please Obey Signs C-21 C-008-2 SEALCOATING Loose Gravel Next ( ) km C-22 C-080-T Construction Speed Zone C-23 C-086-1 Thank You - Resume Speed C-24 C-030-8 Single Lane Traffic C-25 C-017 Bump symbol (Rough Roadway) C-26 C-007 Broken Pavement C-28 C-001-1 Flagger Ahead symbol C-30 C-030-2 Centre Lane Closed C-31 C-032 Reduce Speed C-32 C-074 Mower Working C-33L, R C-010-LR Uneven Pavement On Left or On Right - Double Sided C-34 -

Math 1B, Lecture 11: Improper Integrals I

Math 1B, lecture 11: Improper integrals I Nathan Pflueger 30 September 2011 1 Introduction An improper integral is an integral that is unbounded in one of two ways: either the domain of integration is infinite, or the function approaches infinity in the domain (or both). Conceptually, they are no different from ordinary integrals (and indeed, for many students including myself, it is not at all clear at first why they have a special name and are treated as a separate topic). Like any other integral, an improper integral computes the area underneath a curve. The difference is foundational: if integrals are defined in terms of Riemann sums which divide the domain of integration into n equal pieces, what are we to make of an integral with an infinite domain of integration? The purpose of these next two lectures will be to settle on the appropriate foundation for speaking of improper integrals. Today we consider integrals with infinite domains of integration; next time we will consider functions which approach infinity in the domain of integration. An important observation is that not all improper integrals can be considered to have meaningful values. These will be said to diverge, while the improper integrals with coherent values will be said to converge. Much of our work will consist in understanding which improper integrals converge or diverge. Indeed, in many cases for this course, we will only ask the question: "does this improper integral converge or diverge?" The reading for today is the first part of Gottlieb §29.4 (up to the top of page 907).

Mathematics 136 – Calculus 2 Summary of Trigonometric Substitutions October 17 and 18, 2016

Mathematics 136 – Calculus 2 Summary of Trigonometric Substitutions October 17 and 18, 2016 The trigonometric substitution method handles many integrals containing expressions like $\sqrt{a^2 - x^2}$, $\sqrt{x^2 + a^2}$, $\sqrt{x^2 - a^2}$ (possibly including expressions without the square roots!) The basis for this approach is the trigonometric identities $1 = \sin^2\theta + \cos^2\theta \Rightarrow \sec^2\theta = \tan^2\theta + 1$, from which we derive other related identities: $\sqrt{a^2 - (a\sin\theta)^2} = \sqrt{a^2(1 - \sin^2\theta)} = \sqrt{a^2\cos^2\theta} = a\cos\theta$; $\sqrt{(a\tan\theta)^2 + a^2} = \sqrt{a^2(\tan^2\theta + 1)} = \sqrt{a^2\sec^2\theta} = a\sec\theta$; $\sqrt{(a\sec\theta)^2 - a^2} = \sqrt{a^2(\sec^2\theta - 1)} = \sqrt{a^2\tan^2\theta} = a\tan\theta$. (Technical note: We usually assume that $a > 0$ and $0 < \theta < \pi/2$ here so that all the trig functions take positive values.) Hence, 1. If our integral contains $\sqrt{a^2 - x^2}$, the substitution $x = a\sin\theta$ will convert this radical to the simpler form $a\cos\theta$. 2. If our integral contains $\sqrt{x^2 + a^2}$, the substitution $x = a\tan\theta$ will convert this radical to the simpler form $a\sec\theta$. 3. If our integral contains $\sqrt{x^2 - a^2}$, the substitution $x = a\sec\theta$ will convert this radical to the simpler form $a\tan\theta$. We substitute for the rest of the integral including the $dx$. For instance, if $x = a\sin\theta$, then $dx = a\cos\theta\,d\theta$. If $x = a\tan\theta$, then $dx = a\sec^2\theta\,d\theta$. If $x = a\sec\theta$, then $dx = a\sec\theta\tan\theta\,d\theta$.

Glossary of Linear Algebra Terms

INNER PRODUCT SPACES AND THE GRAM-SCHMIDT PROCESS A. HAVENS 1. The Dot Product and Orthogonality 1.1. Review of the Dot Product. We first recall the notion of the dot product, which gives us a familiar example of an inner product structure on the real vector spaces $\mathbb{R}^n$. This product is connected to the Euclidean geometry of $\mathbb{R}^n$, via lengths and angles measured in $\mathbb{R}^n$. Later, we will introduce inner product spaces in general, and use their structure to define general notions of length and angle on other vector spaces. Definition 1.1. The dot product of real n-vectors in the Euclidean vector space $\mathbb{R}^n$ is the scalar product $\cdot : \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}$ given by the rule $(u, v) = \left( \sum_{i=1}^n u_i e_i, \sum_{i=1}^n v_i e_i \right) \mapsto \sum_{i=1}^n u_i v_i$. Here $\mathcal{B}_S := (e_1, \ldots, e_n)$ is the standard basis of $\mathbb{R}^n$. With respect to our conventions on basis and matrix multiplication, we may also express the dot product as the matrix-vector product $u^t v = \begin{bmatrix} u_1 & \cdots & u_n \end{bmatrix} \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix}$. It is a good exercise to verify the following proposition. Proposition 1.1. Let $u, v, w \in \mathbb{R}^n$ be any real n-vectors, and $s, t \in \mathbb{R}$ be any scalars. The Euclidean dot product $(u, v) \mapsto u \cdot v$ satisfies the following properties. (i) The dot product is symmetric: $u \cdot v = v \cdot u$. (ii) The dot product is bilinear: • $(su) \cdot v = s(u \cdot v) = u \cdot (sv)$, • $(u + v) \cdot w = u \cdot w + v \cdot w$.

Calculus Terminology

AP Calculus BC Calculus Terminology Absolute Convergence Asymptote Continued Sum Absolute Maximum Average Rate of Change Continuous Function Absolute Minimum Average Value of a Function Continuously Differentiable Function Absolutely Convergent Axis of Rotation Converge Acceleration Boundary Value Problem Converge Absolutely Alternating Series Bounded Function Converge Conditionally Alternating Series Remainder Bounded Sequence Convergence Tests Alternating Series Test Bounds of Integration Convergent Sequence Analytic Methods Calculus Convergent Series Annulus Cartesian Form Critical Number Antiderivative of a Function Cavalieri’s Principle Critical Point Approximation by Differentials Center of Mass Formula Critical Value Arc Length of a Curve Centroid Curly d Area below a Curve Chain Rule Curve Area between Curves Comparison Test Curve Sketching Area of an Ellipse Concave Cusp Area of a Parabolic Segment Concave Down Cylindrical Shell Method Area under a Curve Concave Up Decreasing Function Area Using Parametric Equations Conditional Convergence Definite Integral Area Using Polar Coordinates Constant Term Definite Integral Rules Degenerate Divergent Series Function Operations Del Operator e Fundamental Theorem of Calculus Deleted Neighborhood Ellipsoid GLB Derivative End Behavior Global Maximum Derivative of a Power Series Essential Discontinuity Global Minimum Derivative Rules Explicit Differentiation Golden Spiral Difference Quotient Explicit Function Graphic Methods Differentiable Exponential Decay Greatest Lower Bound Differential -

Rotation Matrix - Wikipedia, the Free Encyclopedia Page 1 of 22

Rotation matrix From Wikipedia, the free encyclopedia In linear algebra, a rotation matrix is a matrix that is used to perform a rotation in Euclidean space. For example, the matrix $R = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$ rotates points in the xy-Cartesian plane counterclockwise through an angle θ about the origin of the Cartesian coordinate system. To perform the rotation, the position of each point must be represented by a column vector v, containing the coordinates of the point. A rotated vector is obtained by using the matrix multiplication Rv (see below for details). In two and three dimensions, rotation matrices are among the simplest algebraic descriptions of rotations, and are used extensively for computations in geometry, physics, and computer graphics. Though most applications involve rotations in two or three dimensions, rotation matrices can be defined for n-dimensional space. Rotation matrices are always square, with real entries. Algebraically, a rotation matrix in n dimensions is an n × n special orthogonal matrix, i.e. an orthogonal matrix whose determinant is 1: $R^T = R^{-1}$, $\det R = 1$. The set of all rotation matrices forms a group, known as the rotation group or the special orthogonal group. It is a subset of the orthogonal group, which includes reflections and consists of all orthogonal matrices with determinant 1 or -1, and of the special linear group, which includes all volume-preserving transformations and consists of matrices with determinant 1.

Elementary Calculus

Elementary Calculus Michael Corral Schoolcraft College About the author: Michael Corral is an Adjunct Faculty member of the Department of Mathematics at Schoolcraft College. He received a B.A. in Mathematics from the University of California, Berkeley, and received an M.A. in Mathematics and an M.S. in Industrial & Operations Engineering from the University of Michigan. This text was typeset in LaTeX with the KOMA-Script bundle, using the GNU Emacs text editor on a Fedora Linux system. The graphics were created using TikZ and Gnuplot. Copyright © 2020 Michael Corral. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. Preface This book covers calculus of a single variable. It is suitable for a year-long (or two-semester) course, normally known as Calculus I and II in the United States. The prerequisites are high school or college algebra, geometry and trigonometry. The book is designed for students in engineering, physics, mathematics, chemistry and other sciences. One reason for writing this text was because I had already written its sequel, Vector Calculus. More importantly, I was dissatisfied with the current crop of calculus textbooks, which I feel are bloated and keep moving further away from the subject's roots in physics. In addition, many of the intuitive approaches and techniques from the early days of calculus, which I think often yield more insights for students, seem to have been lost.

1 Sets and Set Notation. Definition 1 (Naive Definition of a Set)

LINEAR ALGEBRA MATH 2700.006 SPRING 2013 (COHEN) LECTURE NOTES 1 Sets and Set Notation. Definition 1 (Naive Definition of a Set). A set is any collection of objects, called the elements of that set. We will most often name sets using capital letters, like A, B, X, Y, etc., while the elements of a set will usually be given lower-case letters, like x, y, z, v, etc. Two sets X and Y are called equal if X and Y consist of exactly the same elements. In this case we write X = Y. Example 1 (Examples of Sets). (1) Let X be the collection of all integers greater than or equal to 5 and strictly less than 10. Then X is a set, and we may write: X = {5, 6, 7, 8, 9}. The above notation is an example of a set being described explicitly, i.e. just by listing out all of its elements. The set brackets {· · ·} indicate that we are talking about a set and not a number, sequence, or other mathematical object. (2) Let E be the set of all even natural numbers. We may write: E = {0, 2, 4, 6, 8, ...}. This is an example of an explicitly described set with infinitely many elements. The ellipsis (...) in the above notation is used somewhat informally, but in this case its meaning, that we should "continue counting forever," is clear from the context. (3) Let Y be the collection of all real numbers greater than or equal to 5 and strictly less than 10. Recalling notation from previous math courses, we may write: Y = [5, 10). This is an example of using interval notation to describe a set.