Computational Augmented Reality Eyeglasses

Recommended publications

Vuzix Corporation Annual Report 2021

Vuzix Corporation Annual Report 2021, Form 10-K (NASDAQ:VUZI). Published: March 15th, 2021. UNITED STATES SECURITIES AND EXCHANGE COMMISSION, Washington, D.C. 20549. FORM 10-K. ☑ ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934, for the fiscal year ended December 31, 2020. ☐ TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934. Commission file number: 001-35955. Vuzix Corporation (exact name of registrant as specified in its charter). State of incorporation: Delaware. I.R.S. employer identification no.: 04-3392453. Address of principal executive office: 25 Hendrix Road, West Henrietta, New York 14586. Registrant’s telephone number including area code: (585) 359-5900. Securities registered pursuant to Section 12(b) of the Act: Common Stock, par value $0.001 (title of each class); VUZI (trading symbol); Nasdaq Capital Market (name of each exchange on which registered). Securities registered pursuant to Section 12(g) of the Act: None. Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes ☐ No ☑ Indicate by check mark if the registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Exchange Act. Yes ☐ No ☑ Indicate by check mark whether the registrant: (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days.

View the Future® Vuzix Developer Newsletter

Game Developer Conference - Special Edition - March, 2010. View the Future® Vuzix Developer Newsletter. Stereoscopic 3D Eyewear – Goes From Geek to Chic.

Stereoscopic 3D is the latest trend in movies and games – which format(s) do I support? This is a question many game developers are asking themselves, as there is no single clear winner in the format wars. Until recently, Frame Sequential appeared to be the leading format for PC games, but recently Side-by-Side has made great strides. Vuzix offers end-users two options, Frame Sequential through the iWear® VR920 video eyewear or Side-by-Side through the Wrap line of video eyewear. The sunglass style Wrap™ eyewear line is the latest addition to the Vuzix eyewear line. When equipped with an optional Wrap VGA Adapter, it can be connected to any Windows based PC running XP, Vista or 7 - 32 or 64-bit versions.

Royalty Free SDK Update: Beta Release SDK Supports Side-by-Side Stereoscopic 3D Video and 6-DoF Tracker. Vuzix has been providing a royalty free SDK for its PC compatible video eyewear since 2006. A new release of the Vuzix SDK (beta release) will be available for download from the Vuzix website (www.vuzix.com) in late March, 2010. This new release expands the depth of supported stereoscopic formats and adds support for the soon to be released Wrap 6-DoF (Degree of Freedom) Tracker. The Side-by-Side stereoscopic 3D format has become popular of late and is expected to show up in other titles moving forward. This format is supported in this new SDK release and Vuzix encourages software developers to sup-
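The two stereoscopic formats named in this excerpt differ mainly in how the left-eye and right-eye images are packed. The following is a minimal sketch in plain Python, not the Vuzix SDK: the function names are hypothetical, a frame is modeled as a list of pixel rows, and it assumes the common convention that the left half of a side-by-side frame holds the left-eye view.

```python
def split_side_by_side(frame):
    """Split one side-by-side stereo frame into (left_view, right_view).

    `frame` is a list of rows; each row is a list of pixels.
    Assumption: the left half of every row belongs to the left eye.
    """
    if not frame:
        return [], []
    width = len(frame[0])
    if width % 2 != 0:
        raise ValueError("a side-by-side frame needs an even width")
    half = width // 2
    left_view = [row[:half] for row in frame]
    right_view = [row[half:] for row in frame]
    return left_view, right_view


def to_frame_sequential(side_by_side_frames):
    """Convert side-by-side frames into a frame-sequential stream.

    Frame sequential alternates whole frames: left, right, left, right...
    """
    stream = []
    for frame in side_by_side_frames:
        left_view, right_view = split_side_by_side(frame)
        stream.append(left_view)
        stream.append(right_view)
    return stream


if __name__ == "__main__":
    demo = [[1, 2, 3, 4],
            [5, 6, 7, 8]]
    left, right = split_side_by_side(demo)
    print(left)   # left-eye half of each row
    print(right)  # right-eye half of each row
```

Side-by-side packing halves the horizontal resolution available to each eye, while frame sequential keeps full resolution and halves the per-eye frame rate; the sketch only illustrates the packing, not how any particular eyewear consumes it.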

Whitepaper Head Mounted Displays & Data Glasses Applications and Systems

Whitepaper Head Mounted Displays & Data Glasses: Applications and Systems. Dr.-Ing. Dipl.-Kfm. Christoph Runde, Virtual Dimension Center (VDC) Fellbach, Auberlenstr. 13, 70736 Fellbach, www.vdc-fellbach.de © Competence Centre for Virtual Reality and Cooperative Engineering w. V. – Virtual Dimension Center (VDC). Sections: System classes, Application fields, Directions of development, Summary. Content: System classes: Head Mounted Display (HMD) – Video glasses – Data glasses. Simulator disease / Cyber Sickness. Application fields HMDs: interior inspections, training, virtual hedging engineering / ergonomics. Application fields data glasses: process support, teleservice, consistency checks, collaboration. Directions of development: technical specifications, (eye) tracking, retinal displays, light field technology, imaging depth sensors. Application preconditions: information & integration (human, IT, processes). Final remark. Head Mounted Displays (HMDs) – Overview: 1961: first HMD on market. 1965: 3D-tracked HMD by Ivan Sutherland. Since the 1970s a significant number of HMDs have been applied in the military sector (training, additional display). Table: Important HMD projects since the 1970s [Source: Li, Hua et al.: Review and analysis of avionic helmet-mounted displays. In: Optical Engineering 52(11), 110901, November 2013]. Classification HMD – Video glasses – Data glasses: Head Mounted Display

STAR 1200 & 1200XL User Guide

STAR 1200 & 1200XL Augmented Reality Systems User Guide. VUZIX CORPORATION. STAR 1200 & 1200XL – User Guide © 2012 Vuzix Corporation, 2166 Brighton Henrietta Town Line Road, Rochester, New York 14623. Phone: 585.359.5900, Fax: 585.359.4172, www.vuzix.com. Contents: 1. STAR 1200 & 1200XL OVERVIEW: System Requirements & Compatibility. 2. INSTALLATION & SETUP: Step 1: Accessory Installation; Step 2: Hardware Connections; Step 3: Adjustment; Step 4: Software Installation; Step 5: Tracker Calibration. 3. CONTROL BUTTON & ON SCREEN DISPLAY: VGA Controller; PowerPak+ Controller; OSD Display Options. 4. STAR HARDWARE: STAR Display; Nose Bridge; Focus Adjustment; Eye Separation

Augmented and Virtual Reality in Operations

Augmented and Virtual Reality in Operations: A guide for investment. Introduction. Augmented Reality and Virtual Reality (AR/VR) are not new, but recent advances in computational power, storage, graphics processing, and high-resolution displays have helped overcome some of the constraints that have stood in the way of the widespread use of these immersive technologies. Global spending on AR/VR is now expected to reach $17.8 billion in 2018, an increase of nearly 95% over the $9.1 billion estimate for 2017. Given this impetus, we wanted to understand more about the current state of play with AR/VR in the enterprise. We surveyed executives at 700+ companies, including 600 companies who are either experimenting with or implementing AR/VR; spoke with AR/VR-focused leadership at global companies, start-ups, and vendors; and analyzed over 35 use cases, with a particular focus on: • The use of AR/VR for internal industrial company operations, including, but not limited to, design, engineering, and field services • The automotive, utility, and discrete manufacturing sectors. This research focuses on organizations that have initiated their AR/VR journey, whether by experimentation or implementation, and aims to answer a number of questions: • How is AR/VR being used today? • Where should organizations invest – what are the use cases that generate the most value? • How can companies either begin or evolve their initiatives? Outside of this report, terms you will commonly find when reading about AR/VR are mixed reality, hybrid reality, or merged reality in which both the physical and the virtual or digital realities coexist or even merge.

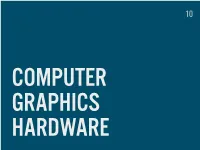

GRAPHICS HARDWARE WHAT’S in STORE GRAPHICS CARDS GRAPHICS CARDS DEDICATED (EXTERNAL) High Performance Power Consumption Heat Emission

10 COMPUTER GRAPHICS HARDWARE. WHAT’S IN STORE. GRAPHICS CARDS. DEDICATED (EXTERNAL): High performance; Power consumption; Heat emission. INTEGRATED (INTERNAL): Low power, low heat; Mobile devices, on board; Integrated with CPU. ARCHITECTURE. VERTEX SHADERS (TRANSFORM GEOMETRY). GEOMETRY SHADERS (CREATE NEW GEOMETRY). PIXEL SHADERS (COLORS, SHADOWS…). UNIFIED SHADERS: One type of processors for all operations; GTX 680 = 1536 unified shader cores. CUDA, GPGPU: General (not only graphics) performed on GPU; Parallelism (HW video encoding, numerical computations). CONNECTORS. VGA: Analog. DVI: Digital + Analog. HDMI: Digital; miniHDMI, microHDMI. DISPLAYPORT: Digital, Analog; Mini DisplayPort (Apple). MANUFACTURERS. NVIDIA: GeForce, Quadro, Tesla. AMD (FORMERLY ATI): Radeon, FirePro. INTEL: Integrated in Core CPUs. POWERVR. ARM: Mali. DISPLAYS. CATHODE RAY TUBE (CRT). ANALOG TVS. OLD COMPUTER MONITORS. MEDIUM SIZE DISPLAYS. LIGHT EMISSION: Black is black. FLICKER: 75-100 Hz for work. http://www.bthompson.net/ PLASMA (PDP, NEO-PDP). NOT SUITABLE FOR COMPUTER DISPLAYS. LARGE SCREENS (30”+). LIGHT EMISSION: Black is black. HIGH ENERGY DEMANDS. VIEW ANGLE DOESN’T MATTER. IMAGE RETENTION: Reduced for Neo-PDP. LIQUID CRYSTAL DISPLAY (LCD). CRYSTALS BLOCK LIGHT FROM BACK SOURCE: Black is hard to achieve. DIFFERENT TECHNOLOGIES (TN, IPS, MVA, …). LOW ENERGY CONSUMPTION. LARGE SCREENS. VIEW ANGLES ISSUE. LCD LED CONFUSION. OLED (ORGANIC LIGHT-EMITTING DIODE). LIGHT EMISSION. GOOD CONTRAST AND COLORS. EXPENSIVE PRODUCTION: Small screens so far. LOW ENERGY. POTENTIALLY FLEXIBLE. VIEW ANGLE

Laval Virtual's Missions Are to Gather, Inspire and Valorize Involved in This Study

The VR/AR special edition #4: health. Clinical VR, Medicine, Well Being. #EDITORIAL: How VR is changing the way women’s breast cancer is diagnosed, treated and managed. LAURENT CHRÉTIEN, DIRECTOR / LAVAL VIRTUAL.

Cancer cells live in complex communities. Just like houses in a city, each cell in a tumour is different from its neighbour, and relies on infrastructure to support its existence. And we know that there are different neighbourhoods, some worse than others. Where we have roads, tumours contain blood vessels that deliver nutrients, and act as highways for different cell types to move around. And when a tumour spreads, the cancer cells themselves use these blood ‘roads’ to migrate. What the healthcare experts need is a Google Earth-like view of a tumour. If they could make a 3D map, they would find new targets for treatment and, eventually, could use this view to track what’s going on in real time, such as in response to treatment.

They will then take all the information they collect about the cells in a tumour and use it to construct a 3D version that can be studied using virtual reality. Using virtual reality will allow scientists to immerse themselves in a tumour, meaning they can study patterns and other characteristics within it, in entirely new ways that aren’t possible in 2D. It will also allow multiple doctors and scientists to look at a tumour at the same time, meaning people at opposite ends of a country, and with different areas of expertise, can work together to help diagnose and treat patients better. And with the Covid19 crisis, the use of virtual reality to cooperate remotely is even more obvious!

Using Virtual Reality to Improve Sitting Balance

BearWorks MSU Graduate Theses Fall 2017 Using Virtual Reality to Improve Sitting Balance Alice Kay Barnes Missouri State University, [email protected] As with any intellectual project, the content and views expressed in this thesis may be considered objectionable by some readers. However, this student-scholar’s work has been judged to have academic value by the student’s thesis committee members trained in the discipline. The content and views expressed in this thesis are those of the student-scholar and are not endorsed by Missouri State University, its Graduate College, or its employees. Follow this and additional works at: https://bearworks.missouristate.edu/theses Part of the Graphics and Human Computer Interfaces Commons, Occupational Therapy Commons, and the Physical Therapy Commons Recommended Citation Barnes, Alice Kay, "Using Virtual Reality to Improve Sitting Balance" (2017). MSU Graduate Theses. 3221. https://bearworks.missouristate.edu/theses/3221 This article or document was made available through BearWorks, the institutional repository of Missouri State University. The work contained in it may be protected by copyright and require permission of the copyright holder for reuse or redistribution. For more information, please contact [email protected]. USING VIRTUAL REALITY TO IMPROVE SITTING BALANCE A Master’s Thesis Presented to The Graduate College of Missouri State University In Partial Fulfillment Of the Requirements for the Degree Master of Natural and Applied Science, Computer Science By Alice K. Barnes December 2017 Copyright 2017 by Alice Kay Barnes USING VIRTUAL REALITY TO IMPROVE SITTING BALANCE Computer Science Missouri State University, December 2017 Master of Natural and Applied Science Alice K.

Virtual Reality and Augmented Reality: A Survey from Scania’s Perspective

DEGREE PROJECT IN MECHANICAL ENGINEERING, SECOND CYCLE, 30 CREDITS, STOCKHOLM, SWEDEN 2019. Virtual Reality and Augmented Reality: A Survey from Scania’s Perspective. KARL INGERSTAM. KTH SCHOOL OF INDUSTRIAL ENGINEERING AND MANAGEMENT. Virtual Reality and Augmented Reality: A Survey from Scania’s Perspective. Karl Ingerstam, 2019. Master of Science Thesis TPRMM 2019, KTH – Industrial Engineering and Management, Production Engineering, SE-100 44 Stockholm. Abstract: Virtual reality and augmented reality are technological fields that have developed and expanded at a great pace over the last few years. A virtual reality is a digitally created environment where computer-generated elements are displayed to a user via different interfaces for the respective senses. Video is used for displaying images, creating a realistic environment, while audio is played to stimulate hearing, and other sorts of feedback are used to stimulate the sense of touch in particular. Augmented reality is a sub-category of virtual reality where the user sees the real surroundings, but computer-generated imagery is displayed on top of objects in the environment. This type of technology brings a lot of new possibilities and potential use cases in all sorts of areas, ranging from personal entertainment, communication and education to medicine and heavy industry. Scania is a global manufacturer of heavy trucks and buses, and provider of related services, based in Sweden. By studying Scania’s different departments and surveying the fields of virtual reality and augmented reality, the aim of this thesis is to identify situations and use cases where there is potential for Scania to implement virtual reality and augmented reality technology. This thesis also studies what obstacles the implementation of these technologies brings.

Vuzix Corporation

Company Overview: We are engaged in the design, manufacture, marketing and sale of augmented reality wearable display and computing devices, also referred to as head mounted displays (or HMDs, but also known as near-eye displays), in the form of Smart Glasses and Augmented Reality (AR) glasses. Our AR wearable display devices are worn like eyeglasses or attach to a head worn mount. These devices typically include a wearable computer, cameras, and sensors that enable the user to view, record and interact with video and digital content, such as computer data, the Internet, enterprise data, social media or entertainment applications. Our wearable display products integrate micro-display technology with our advanced optics to produce compact high-resolution display engines, less than half an inch diagonally, which when viewed through our Smart Glasses and AR products create virtual images that appear comparable in size to that of a computer monitor or a large-screen television. This includes the representative forms such as augmented reality (AR), mixed reality (MR) and virtual reality (VR) and the areas interpolated among them. Extended reality (XR) is a term referring to all real-and-virtual combined environments. Our wearable display products cover the entire spectrum from "the complete real" to "the complete virtual" in the concept of reality–virtuality. For AR glasses, historically, see-through HMDs displayed the real world using semi-transparent optics placed in front of the user’s eyes.

Management Team: Paul J. Travers, CEO, President and Director; Grant Russell, CFO, Executive Vice President, Treasurer and Director; Shane Porzio, Vice President Engineering; Nathaniel S. Bank, Corporate Counsel.

Vuzix Corporation, 25 Hendrix Road, Suite A, West Henrietta, NY 14586

3D - Widescreen Experience the Future of Video Eyewear

Amaze Your Eyes! 3D - Widescreen Experience. The Future of Video Eyewear. Vuzix, the world leader in video eyewear technology, continues to set the global standard for video eyewear products with its newest Wrap™ product line. Enjoy the big widescreen 2D or 3D movie experience with your own personal theater – anytime, anywhere. A full range of Wrap eyewear accessories allow you to customize your experience, keep abreast with the latest technology, and watch your favorite movies from virtually any video source. Vuzix Wrap video eyewear – View the Future®

AVAILABLE NOW

Wrap™ 920: Premium performance 2D and 3D video eyewear. The sunglass-style Wrap 920 provides a portable big-screen experience equivalent to a 67-inch screen viewed from ten feet (3 m). This crystal clear higher-resolution display supports standard 2D video as well as most common 3D video formats. Two AA batteries afford up to three hours of continuous use. Connects to virtually any portable media player or to your PC with an optional video adapter cable. As with all Wrap model eyewear, the Wrap 920 includes removable high-quality noise isolating earphones.

Wrap™ 920AR: Augmented Reality Eyewear. The world’s first consumer market augmented reality eyewear. The Wrap 920AR features a 67-inch display as seen from ten feet (3 m). Its stereo camera pair captures a 1st person view of the real world, bringing augmented reality to life. Each camera captures VGA quality images at 60 fps, enabling your real-world view to be displayed in 2D or 3D stereoscopic video, mixed with 2D or 3D computer graphics and data. Comes complete with composite and Windows graphic card connections and Vuzix

COMING – SPRING 2011
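The "67-inch screen viewed from ten feet" figure in this excerpt can be turned into an approximate viewing angle. The sketch below is a rough illustration only: it assumes a flat virtual screen viewed head-on from its center and treats 67 inches as the diagonal, so the result is a diagonal angle derived from the brochure's numbers, not a published Vuzix specification. The function name is hypothetical.

```python
import math


def angular_size_deg(size, distance):
    """Angle (in degrees) subtended by a flat object of extent `size`
    seen head-on from `distance`; both values share the same unit."""
    return math.degrees(2 * math.atan((size / 2) / distance))


if __name__ == "__main__":
    diagonal_in = 67.0       # virtual screen diagonal, from the excerpt
    distance_in = 10 * 12.0  # ten feet expressed in inches
    angle = angular_size_deg(diagonal_in, distance_in)
    print(f"Approximate diagonal viewing angle: {angle:.1f} degrees")
```

Under these assumptions the virtual screen spans roughly 31 degrees diagonally, which gives a sense of how "big screen" claims of this kind map onto the angle the image actually fills.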

Augmented Reality Smart Glasses in the Smart Factory: Product Evaluation Guidelines and Review of Available Products

Received March 29, 2017, accepted May 8, 2017, date of publication May 12, 2017, date of current version June 28, 2017. Digital Object Identifier 10.1109/ACCESS.2017.2703952 Augmented Reality Smart Glasses in the Smart Factory: Product Evaluation Guidelines and Review of Available Products ANNA SYBERFELDT, OSCAR DANIELSSON, AND PATRIK GUSTAVSSON Department of Engineering Science, University of Skövde, 54128 Skövde, Sweden Corresponding author: Anna Syberfeldt ([email protected]) ABSTRACT Augmented reality smart glasses (ARSG) are increasingly popular and have been identified as a vital technology supporting shop-floor operators in the smart factories of the future. By improving our knowledge of how to efficiently evaluate and select the ARSG for the shop-floor context, this paper aims to facilitate and accelerate the adoption of the ARSG by the manufacturing industry. The market for ARSG has exploded in recent years, and the large variety of products to select from makes it not only difficult but also time consuming to identify the best alternative. To address this problem, this paper presents an efficient step-by-step process for evaluating the ARSG, including concrete guidelines as to what parameters to consider and their recommended minimum values. Using the suggested evaluation process, manufacturing companies can quickly make optimal decisions about what products to implement on their shop floors. This paper demonstrates the evaluation process in practice, presenting a comprehensive review of currently available products along with a recommended best buy. This paper also identifies and discusses topics meriting research attention to ensure that the ARSG are successfully implemented on the industrial shop floor.