Zuse-Dokumentation, Layout 1

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Group Developed Weighing Matrices∗

AUSTRALASIAN JOURNAL OF COMBINATORICS, Volume 55 (2013), Pages 205–233. Group developed weighing matrices∗. K. T. Arasu, Department of Mathematics & Statistics, Wright State University, 3640 Colonel Glenn Highway, Dayton, OH 45435, U.S.A. Jeffrey R. Hollon, Department of Mathematics, Sinclair Community College, 444 W 3rd Street, Dayton, OH 45402, U.S.A. Abstract: A weighing matrix is a square matrix whose entries are 1, 0 or −1, such that the matrix times its transpose is some integer multiple of the identity matrix. We examine the case where these matrices are said to be developed by an abelian group. Through a combination of extending previous results and by giving explicit constructions we will answer the question of existence for 318 such matrices of order and weight both below 100. At the end, we are left with 98 open cases out of a possible 1,022. Further, some of the new results provide insight into the existence of matrices with larger weights and orders. 1 Introduction. 1.1 Group Developed Weighing Matrices. A weighing matrix $W = W(n, k)$ is a square matrix of order $n$ whose entries $w_{i,j}$ are in the set $\{-1, 0, +1\}$. This matrix satisfies $WW^t = kI_n$, where $t$ denotes the matrix transpose, $k$ is a positive integer known as the weight, and $I_n$ is the identity matrix of size $n$. Definition 1.1. Let $G$ be a group of order $n$. An $n \times n$ matrix $A = (a_{gh})$ indexed by the elements of the group $G$ (such that $g$ and $h$ belong to $G$) is said to be $G$-developed if it satisfies the condition $a_{gh} = a_{g+k,h+k}$ for all $g, h, k \in G$.
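The two definitions in this excerpt can be checked numerically. The following is a minimal sketch, assuming the cyclic group Z_7 as G and an illustrative first row that does not come from the paper; the function names are hypothetical. It builds the matrix with entry (g, h) equal to `first_row[(h - g) mod n]` and tests both the weighing condition and the G-developed condition.

```python
def circulant(first_row):
    """Build the n x n matrix with entry (g, h) = first_row[(h - g) mod n]."""
    n = len(first_row)
    return [[first_row[(h - g) % n] for h in range(n)] for g in range(n)]


def is_weighing_matrix(w, k):
    """Check that all entries are in {-1, 0, 1} and that W * W^t = k * I."""
    n = len(w)
    if any(x not in (-1, 0, 1) for row in w for x in row):
        return False
    for i in range(n):
        for j in range(n):
            dot = sum(w[i][t] * w[j][t] for t in range(n))
            if dot != (k if i == j else 0):
                return False
    return True


def is_cyclic_developed(a):
    """Check a[g][h] == a[g+k][h+k] for all g, h, k in Z_n (indices mod n)."""
    n = len(a)
    return all(
        a[g][h] == a[(g + k) % n][(h + k) % n]
        for g in range(n)
        for h in range(n)
        for k in range(n)
    )


# Illustrative first row of order 7 and weight 4 (an assumption, not from the paper).
FIRST_ROW = [-1, 1, 1, 0, 1, 0, 0]
W = circulant(FIRST_ROW)

print(is_weighing_matrix(W, 4), is_cyclic_developed(W))
```

Any matrix built this way is Z_n-developed by construction; whether it is also a weighing matrix depends entirely on the chosen first row.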

Operating Manual R&S NRP-Z22

Operating Manual Average Power Sensor R&S NRP-Z22 1137.7506.02 R&S NRP-Z23 1137.8002.02 R&S NRP-Z24 1137.8502.02 Test and Measurement 1137.7870.12-07. Dear Customer, R&S® is a registered trademark of Rohde & Schwarz GmbH & Co. KG. Trade names are trademarks of the owners. Basic Safety Instructions: Always read through and comply with the following safety instructions! All plants and locations of the Rohde & Schwarz group of companies make every effort to keep the safety standards of our products up to date and to offer our customers the highest possible degree of safety. Our products and the auxiliary equipment they require are designed, built and tested in accordance with the safety standards that apply in each case. Compliance with these standards is continuously monitored by our quality assurance system. The product described here has been designed, built and tested in accordance with the EC Certificate of Conformity and has left the manufacturer’s plant in a condition fully complying with safety standards. To maintain this condition and to ensure safe operation, you must observe all instructions and warnings provided in this manual. If you have any questions regarding these safety instructions, the Rohde & Schwarz group of companies will be happy to answer them. Furthermore, it is your responsibility to use the product in an appropriate manner. This product is designed for use solely in industrial and laboratory environments or, if expressly permitted, also in the field and must not be used in any way that may cause personal injury or property damage.

Kobbelt, Slides on Surface Representations

Surface Representations. Leif Kobbelt, RWTH Aachen University. Input Data: Range Scan, CAD, Tomography. Removal of topological and geometrical errors. Analysis of surface quality. Surface smoothing for noise removal. Parameterization. Simplification for complexity reduction. Remeshing for improving mesh quality. Freeform and multiresolution modeling. Outline • (mathematical) geometry representations – parametric vs. implicit • approximation properties • types of operations – distance queries – evaluation – modification / deformation • data structures. Mathematical Representations • parametric – range of a function – surface patch: $f : \mathbb{R}^2 \to \mathbb{R}^3$, $S_\Omega = f(\Omega)$ • implicit – kernel of a function – level set: $F : \mathbb{R}^3 \to \mathbb{R}$, $S_c = \{p : F(p) = c\}$. 2D-Example: Circle • parametric: $f : t \mapsto (r\cos(t),\ r\sin(t))$, $S = f([0, 2\pi])$ • implicit: $F(x, y) = x^2 + y^2 - r^2$, $S = \{(x, y) : F(x, y) = 0\}$. 2D-Example: Island • parametric: $f : t \mapsto (r\cos(???t),\ r\sin(???t))$, $S = f([0, 2\pi])$ • implicit: $F(x, y) = x^2 + ???y^2 - r^2$, $S = \{(x, y) : F(x, y) = 0\}$. Approximation Quality • piecewise parametric: $f : t \mapsto (r\cos(???t),\ r\sin(???t))$, $S = f([0, 2\pi])$ • piecewise implicit: $F(x, y) = x^2 + ???y^2 - r^2$, $S = \{(x, y) : F(x, y) = 0\}$. Requirements / Properties • continuity – interpolation / approximation $f(u_i, v_i) \approx p_i$ • topological consistency – manifold-ness • smoothness – C0, C1, C2, ..
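The circle example in these slides contrasts the two representations: the parametric form generates points on the curve, while the implicit form tests whether a given point lies on it. A minimal sketch of that relationship follows; the function names and the radius are illustrative assumptions, not part of the slides.

```python
import math


def circle_parametric(r, t):
    """Parametric form: f(t) = (r cos t, r sin t) for t in [0, 2*pi]."""
    return (r * math.cos(t), r * math.sin(t))


def circle_implicit(r, x, y):
    """Implicit form: F(x, y) = x^2 + y^2 - r^2, zero exactly on the circle."""
    return x * x + y * y - r * r


RADIUS = 2.0  # illustrative value

# Sample the parametric curve and evaluate the implicit function at each sample.
samples = [circle_parametric(RADIUS, 2.0 * math.pi * i / 16) for i in range(16)]
residuals = [abs(circle_implicit(RADIUS, x, y)) for x, y in samples]

# The sign of F classifies points: negative inside, positive outside.
inside = circle_implicit(RADIUS, 0.0, 0.0)
outside = circle_implicit(RADIUS, 2.0 * RADIUS, 0.0)

print(max(residuals), inside, outside)
```

Generating points is direct in the parametric form, whereas the inside/outside query is direct in the implicit form.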
-

Konrad Zuse Und Die Schweiz

Research Collection Report. Konrad Zuse und die Schweiz: Z4 relay computer at ETH Zurich; M9 calculating punch for the Swiss Remington Rand; in-house construction of the ERMETH vacuum-tube computer; eyewitness account of the Z4; unknown documents on the M9; a contribution to the beginnings of Swiss computing history. Author(s): Bruderer, Herbert. Publication Date: 2011. Permanent Link: https://doi.org/10.3929/ethz-a-006517565. Rights / License: In Copyright - Non-Commercial Use Permitted. This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library. Herbert Bruderer, ETH Zürich, Departement Informatik, Professur für Informationstechnologie und Ausbildung, Zurich, July 2011. Author's address: Herbert Bruderer, ETH Zürich, Informationstechnologie und Ausbildung, CAB F 14, Universitätsstrasse 6, CH-8092 Zürich, telephone: +41 44 632 73 83, fax: +41 44 632 13 90, [email protected], www.ite.ethz.ch; private: Herbert Bruderer, Bruderer Informatik, Seehaldenstrasse 26, Postfach 47, CH-9401 Rorschach, telephone: +41 71 855 77 11, fax: +41 71 855 72 11, [email protected]. Cover image: relay cabinets of the Z4 (left: Heinz Rutishauser, right: Ambros Speiser), ETH Zürich 1950, © ETH-Bibliothek Zürich, Bildarchiv. Back cover image: farewell speech by Konrad Zuse at the Z4 on 6 July 1950 at Zuse KG in Neukirchen (Kreis Hünfeld), © Privatarchiv Horst Zuse, Berlin. Eidgenössische Technische Hochschule Zürich, Departement Informatik, Professur für Informationstechnologie und Ausbildung, CH-8092 Zürich, www.abz.inf.ethz.ch. 1.

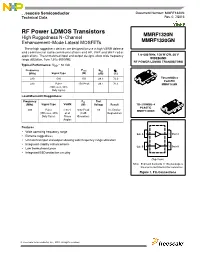

1.8-600 Mhz, 150 W CW, 50 V RF Power LDMOS Transistor Data Sheet

Freescale Semiconductor, Technical Data. Document Number: MMRF1320N, Rev. 0, 7/2015. RF Power LDMOS Transistors: High Ruggedness N-Channel Enhancement-Mode Lateral MOSFETs (MMRF1320N, MMRF1320GN). 1.8–600 MHz, 150 W CW, 50 V wideband RF power LDMOS transistors. These high ruggedness devices are designed for use in high VSWR defense and commercial radio communications and HF, VHF and UHF radar applications. The unmatched input and output designs allow wide frequency range utilization, from 1.8 to 600 MHz. Typical Performance (VDD = 50 Vdc): at 230 MHz, CW: Pout 150 W, Gps 26.3 dB, ηD 72.0%; at 230 MHz, Pulse (100 μsec, 20% Duty Cycle): Pout 150 W Peak, Gps 26.1 dB, ηD 70.3%. Load Mismatch/Ruggedness: at 230 MHz, Pulse (100 μsec, 20% Duty Cycle), VSWR > 65:1 at all Phase Angles, Pin 0.62 W Peak (3 dB Overdrive), Test Voltage 50, Result: No Device Degradation. Features: Wide operating frequency range; Extreme ruggedness; Unmatched input and output allowing wide frequency range utilization; Integrated stability enhancements; Low thermal resistance; Integrated ESD protection circuitry. Packages: TO-270WB-4 PLASTIC (MMRF1320N), TO-270WBG-4 PLASTIC (MMRF1320GN). Figure 1. Pin Connections (Top View): Gate A (3), Drain A (2), Gate B (4), Drain B (1). Note: Exposed backside of the package is the source terminal for the transistors. Freescale Semiconductor, Inc., 2015. All rights reserved. Table 1. Maximum Ratings (Rating, Symbol, Value, Unit): Drain-Source Voltage, VDSS, –0.5, +133, Vdc; Gate-Source Voltage, VGS, –6.0, +10, Vdc; Storage Temperature Range, Tstg, –65 to +150, °C; Case Operating Temperature Range, TC, –40 to +150, °C; Operating Junction Temperature Range (1,2), TJ, –40 to +225, °C; Total Device Dissipation @ TC = 25°C, PD, 952, W; Derate above 25°C, 4.76, W/°C. Table 2.
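The last two entries of Table 1 (952 W total device dissipation at a case temperature of 25°C, derated by 4.76 W/°C above 25°C) can be turned into a small calculation. The sketch below assumes the usual linear reading of a derating figure; the function name and the sample temperatures are illustrative and do not come from the data sheet.

```python
PD_AT_25C_W = 952.0            # total device dissipation at TC = 25 C (Table 1)
DERATE_W_PER_C = 4.76          # derating above 25 C (Table 1)
REFERENCE_CASE_TEMP_C = 25.0


def allowed_dissipation_w(case_temp_c):
    """Linear derating (assumed model): full rating up to 25 C, then minus 4.76 W per degree."""
    if case_temp_c <= REFERENCE_CASE_TEMP_C:
        return PD_AT_25C_W
    derated = PD_AT_25C_W - DERATE_W_PER_C * (case_temp_c - REFERENCE_CASE_TEMP_C)
    return max(0.0, derated)


# Sample case temperatures: the reference point, the top of the case operating
# range (150 C), and the top of the junction temperature range (225 C).
for temp_c in (25.0, 150.0, 225.0):
    print(temp_c, round(allowed_dissipation_w(temp_c), 1))
```

Under this linear model the allowance reaches zero at 225°C, which matches the upper limit of the operating junction temperature range in the same table.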

MRFE6VP5600H.Pdf

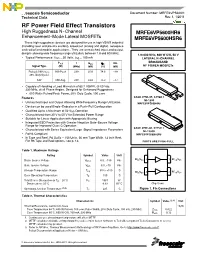

Freescale Semiconductor, Technical Data. Document Number: MRFE6VP5600H, Rev. 1, 1/2011. RF Power Field Effect Transistors: High Ruggedness N-Channel Enhancement-Mode Lateral MOSFETs (MRFE6VP5600HR6, MRFE6VP5600HSR6). 1.8-600 MHz, 600 W CW, 50 V lateral N-channel broadband RF power MOSFETs. These high ruggedness devices are designed for use in high VSWR industrial (including laser and plasma exciters), broadcast (analog and digital), aerospace and radio/land mobile applications. They are unmatched input and output designs allowing wide frequency range utilization, between 1.8 and 600 MHz. • Typical Performance (VDD = 50 Volts, IDQ = 100 mA): Pulsed (100 μsec, 20% Duty Cycle): Pout 600 W Peak, f 230 MHz, Gps 25.0 dB, ηD 74.6%, IRL -18 dB; CW: Pout 600 W Avg., f 230 MHz, Gps 24.6 dB, ηD 75.2%, IRL -17 dB • Capable of Handling a Load Mismatch of 65:1 VSWR, @ 50 Vdc, 230 MHz, at all Phase Angles, Designed for Enhanced Ruggedness • 600 Watts Pulsed Peak Power, 20% Duty Cycle, 100 μsec. Features • Unmatched Input and Output Allowing Wide Frequency Range Utilization • Device can be used Single-Ended or in a Push-Pull Configuration • Qualified Up to a Maximum of 50 VDD Operation • Characterized from 30 V to 50 V for Extended Power Range • Suitable for Linear Application with Appropriate Biasing • Integrated ESD Protection with Greater Negative Gate-Source Voltage Range for Improved Class C Operation • Characterized with Series Equivalent Large-Signal Impedance Parameters • RoHS Compliant • In Tape and Reel. R6 Suffix = 150 Units, 56 mm Tape Width, 13 inch Reel. Packages: CASE 375D-05, STYLE 1, NI-1230 (MRFE6VP5600HR6); CASE 375E-04, STYLE 1, NI-1230S (MRFE6VP5600HSR6).

Computer Simulation of an Unsprung Vehicle, Part I

Agricultural and Biosystems Engineering Publications, Agricultural and Biosystems Engineering, 1967. Computer Simulation of an Unsprung Vehicle, Part I. C. E. Goering, University of Missouri; Wesley F. Buchele, Iowa State University. Follow this and additional works at: https://lib.dr.iastate.edu/abe_eng_pubs. Part of the Agriculture Commons, and the Bioresource and Agricultural Engineering Commons. The complete bibliographic information for this item can be found at https://lib.dr.iastate.edu/abe_eng_pubs/960. For information on how to cite this item, please visit http://lib.dr.iastate.edu/howtocite.html. This Article is brought to you for free and open access by the Agricultural and Biosystems Engineering at Iowa State University Digital Repository. It has been accepted for inclusion in Agricultural and Biosystems Engineering Publications by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Abstract: The mechanics of unsprung wheel tractors has received extensive study in the last 40 years. The quantitative approach to the problem essentially began with the work of McKibben (7) in the 1920s. Twenty years later, Worthington (12) analyzed the effect of pneumatic tires on tractor stability. Later, Buchele (3) drew on land-locomotion theory to introduce soil variables into the equations for tractor stability. Differential equations were avoided in these analyses by assuming that the tractor moved with zero or constant acceleration. Thus, vibration and actual tipping of the tractor were beyond the scope of the analyses. Disciplines: Agriculture | Bioresource and Agricultural Engineering. Comments: This article is published as Goering, C.

MVME8100/MVME8105/MVME8110 Installation and Use P/N: 6806800P25O September 2019

MVME8100/MVME8105/MVME8110 Installation and Use P/N: 6806800P25O September 2019 © 2019 SMART™ Embedded Computing, Inc. All Rights Reserved. Trademarks The stylized "S" and "SMART" is a registered trademark of SMART Modular Technologies, Inc. and “SMART Embedded Computing” and the SMART Embedded Computing logo are trademarks of SMART Modular Technologies, Inc. All other names and logos referred to are trade names, trademarks, or registered trademarks of their respective owners. These materials are provided by SMART Embedded Computing as a service to its customers and may be used for informational purposes only. Disclaimer* SMART Embedded Computing (SMART EC) assumes no responsibility for errors or omissions in these materials. These materials are provided "AS IS" without warranty of any kind, either expressed or implied, including but not limited to, the implied warranties of merchantability, fitness for a particular purpose, or non-infringement. SMART EC further does not warrant the accuracy or completeness of the information, text, graphics, links or other items contained within these materials. SMART EC shall not be liable for any special, indirect, incidental, or consequential damages, including without limitation, lost revenues or lost profits, which may result from the use of these materials. SMART EC may make changes to these materials, or to the products described therein, at any time without notice. SMART EC makes no commitment to update the information contained within these materials. Electronic versions of this material may be read online, downloaded for personal use, or referenced in another document as a URL to a SMART EC website. The text itself may not be published commercially in print or electronic form, edited, translated, or otherwise altered without the permission of SMART EC. -

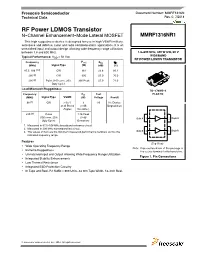

RF Power LDMOS Transistor

Freescale Semiconductor, Technical Data. Document Number: MMRF1316N, Rev. 0, 7/2014. RF Power LDMOS Transistor: N-Channel Enhancement-Mode Lateral MOSFET (MMRF1316NR1). 1.8–600 MHz, 300 W CW, 50 V wideband RF power LDMOS transistor. This high ruggedness device is designed for use in high VSWR military, aerospace and defense, radar and radio communications applications. It is an unmatched input and output design allowing wide frequency range utilization, between 1.8 and 600 MHz. Typical Performance (VDD = 50 Vdc): at 87.5-108 MHz (1,3), CW: Pout 361 W, Gps 23.8 dB, ηD 80.1%; at 230 MHz (2), CW: Pout 300 W, Gps 25.0 dB, ηD 70.0%; at 230 MHz (2), Pulse (100 μsec, 20% Duty Cycle): Pout 300 W Peak, Gps 27.0 dB, ηD 71.0%. Load Mismatch/Ruggedness (VSWR > 65:1 at all Phase Angles, Test Voltage 50, Result: No Device Degradation): at 98 MHz (1), CW: Pin 3 W (3 dB Overdrive); at 230 MHz (2), Pulse (100 μsec, 20% Duty Cycle): Pin 1.16 W Peak (3 dB Overdrive). 1. Measured in 87.5–108 MHz broadband reference circuit. 2. Measured in 230 MHz narrowband test circuit. 3. The values shown are the minimum measured performance numbers across the indicated frequency range. Features: Wide Operating Frequency Range; Extreme Ruggedness; Unmatched Input and Output Allowing Wide Frequency Range Utilization; Integrated Stability Enhancements; Low Thermal Resistance; Integrated ESD Protection Circuitry; In Tape and Reel. R1 Suffix = 500 Units, 44 mm Tape Width, 13-inch Reel. Package: TO-270WB-4 PLASTIC. Figure 1. Pin Connections (Top View): Gate A (3), Drain A (2), Gate B (4), Drain B (1). Note: Exposed backside of the package is the source terminal for the transistors.

Erste Arbeiten Mit Zuse-Computern

HERMANN FLESSNER, ERSTE ARBEITEN MIT ZUSE-COMPUTERN, BAND 1. Erste Arbeiten mit Zuse-Computern (First Work with Zuse Computers). With a foreword by Prof. Dr.-Ing. Horst Zuse. Volume 1: Calculating, Planning and Designing with Computers. By Prof. em. Dr.-Ing. Hermann Flessner, Universität Hamburg. With 166 illustrations. Prof. em. Dr.-Ing. Hermann Flessner: born 1930 in Hamburg. After the Abitur, carpentry apprenticeship in Düsseldorf, 1950–1952. 1953–1957 studied civil engineering at the Technische Hochschule Hannover. 1958–1962 structural engineer and designer at Ed. Züblin AG. 1962 research associate at the Institut für Massivbau of TH Hannover; teaching appointment there in 1964 and doctorate in 1965. In 1966, at the same institution, Professor of Electronic Computing in Civil Engineering. 1968–1978 Professor of Applied Computer Science in Engineering at Ruhr-Universität Bochum; in between, 1969–70, visiting professor at the Massachusetts Institute of Technology (M.I.T.), Cambridge, USA. From 1978 full professor of Applied Computer Science in Natural Sciences and Technology at Universität Hamburg. Emeritus status there in 1994, afterwards consulting engineer. Bibliographic information of the Deutsche Nationalbibliothek: the Deutsche Nationalbibliothek lists this publication; detailed bibliographic data are available on the Internet at http://dnb.d-nb.de under: Flessner, Hermann: Erste Arbeiten mit ZUSE-Computern, Band 1. This work is protected by copyright. The rights arising from this, in particular those of translation, reprinting, extraction of illustrations, reproduction by photomechanical or digital means, and storage in data processing systems or on data carriers of any kind, remain reserved, even where only excerpts are used. © 2016 H. Flessner, D-21029 Hamburg. 2nd edition 2017. Production and publisher: BoD – Books on Demand, Norderstedt. Text and cover design: Hermann Flessner. ISBN: 978-3-7412-2897-1. Foreword: I have known Hermann Flessner, or, more precisely, he has known me, as a 17-year-old, since approx.

MVME5100 Single Board Computer Installation and Use Manual Provides the Information You Will Need to Install and Configure Your MVME5100 Single Board Computer

MVME5100 Single Board Computer Installation and Use V5100A/IH3 April 2002 Edition © Copyright 1999, 2000, 2001, 2002 Motorola, Inc. All rights reserved. Printed in the United States of America. Motorola and the Motorola logo are registered trademarks and AltiVec is a trademark of Motorola, Inc. All other products mentioned in this document are trademarks or registered trademarks of their respective holders. Safety Summary The following general safety precautions must be observed during all phases of operation, service, and repair of this equipment. Failure to comply with these precautions or with specific warnings elsewhere in this manual could result in personal injury or damage to the equipment. The safety precautions listed below represent warnings of certain dangers of which Motorola is aware. You, as the user of the product, should follow these warnings and all other safety precautions necessary for the safe operation of the equipment in your operating environment. Ground the Instrument. To minimize shock hazard, the equipment chassis and enclosure must be connected to an electrical ground. If the equipment is supplied with a three-conductor AC power cable, the power cable must be plugged into an approved three-contact electrical outlet, with the grounding wire (green/yellow) reliably connected to an electrical ground (safety ground) at the power outlet. The power jack and mating plug of the power cable meet International Electrotechnical Commission (IEC) safety standards and local electrical regulatory codes. Do Not Operate in an Explosive Atmosphere. Do not operate the equipment in any explosive atmosphere such as in the presence of flammable gases or fumes. Operation of any electrical equipment in such an environment could result in an explosion and cause injury or damage. -

Cable Technician Pocket Guide Subscriber Access Networks

RD-24 CommScope Cable Technician Pocket Guide Subscriber Access Networks Document MX0398 Revision U © 2021 CommScope, Inc. All rights reserved. Trademarks ARRIS, the ARRIS logo, CommScope, and the CommScope logo are trademarks of CommScope, Inc. and/or its affiliates. All other trademarks are the property of their respective owners. E-2000 is a trademark of Diamond S.A. CommScope is not sponsored, affiliated or endorsed by Diamond S.A. No part of this content may be reproduced in any form or by any means or used to make any derivative work (such as translation, transformation, or adaptation) without written permission from CommScope, Inc and/or its affiliates ("CommScope"). CommScope reserves the right to revise or change this content from time to time without obligation on the part of CommScope to provide notification of such revision or change. CommScope provides this content without warranty of any kind, implied or expressed, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. CommScope may make improvements or changes in the products or services described in this content at any time. The capabilities, system requirements and/or compatibility with third-party products described herein are subject to change without notice. CommScope, Inc. CommScope (NASDAQ: COMM) helps design, build and manage wired and wireless networks around the world. As a communications infrastructure leader, we shape the always-on networks of tomorrow. For more than 40 years, our global team of greater than 20,000 employees, innovators and technologists have empowered customers in all regions of the world to anticipate what's next and push the boundaries of what's possible.