Echo & Narziss

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


PyMuPDF 1.12.2 Documentation

PyMuPDF Documentation Introduction Note on the Name fitz License Covered Version Installation Option 1: Install from Sources Step 1: Download PyMuPDF Step 2: Download and Generate MuPDF Step 3: Build / Setup PyMuPDF Option 2: Install from Binaries Step 1: Download Binary Step 2: Install PyMuPDF MD5 Checksums Targeting Parallel Python Installations Using UPX Tutorial Importing the Bindings Opening a Document Some Document Methods and Attributes Accessing Meta Data Working with Outlines Working with Pages Inspecting the Links of a Page Rendering a Page Saving the Page Image in a File Displaying the Image in Dialog Managers Extracting Text Searching Text PDF Maintenance Modifying, Creating, Re-arranging and Deleting Pages Joining and Splitting PDF Documents Saving Closing Example: Dynamically Cleaning up Corrupt PDF Documents Further Reading Classes Annot Example Colorspace Document Remarks on select() select() Examples setMetadata() Example setToC() Example insertPDF() Examples Other Examples Identity IRect Remark IRect Algebra Examples Link linkDest Matrix Remarks 1 Remarks 2 Matrix Algebra Examples Shifting Flipping Shearing Rotating Outline Page Description of getLinks() Entries Notes on Supporting Links Homologous Methods of Document and Page Pixmap Supported Input Image Types Details on Saving Images with writeImage() Pixmap Example Code Snippets Point Remark Point Algebra Examples Shape Usage Examples Common Parameters Rect Remark Rect Algebra Examples Operator Algebra for Geometry Objects -

GNUstep Frequently Asked Questions with Answers

1 GNUstep Frequently Asked Questions with Answers Last updated 1 January 2008. Please send corrections to [email protected]. Also look at the user FAQ for more user oriented questions. 1.1 Compatibility 1.1.1 Is it easy to port OPENSTEP programs to GNUstep? It is probably easy for simple programs. There are some portability tools to make this easier, or you can rewrite the Makefiles yourself. You will also have to translate the NIB files (if there are any) to GNUstep model files using the nib2gmodel program (from ftp://ftp.gnustep.org/pub/gnustep/dev-apps). 1.1.2 How about porting between Cocoa and GNUstep? It’s easier from GNUstep to Cocoa than Cocoa to GNUstep. Cocoa is constantly changing, much faster than GNUstep could hope to keep up. They have added extensions and new classes that aren’t available in GNUstep yet. Plus there are some other issues. If you start with Cocoa: • Use #ifndef GNUSTEP for Apple only code. • Do not use CoreFoundation • Do not use Objective-C++ (except with gcc 4.1 or later) • Do not use Quicktime or other proprietary extension • GNUstep should be able to read Cocoa nib files automatically, so there is no need to port these, although you might want to have GNUstep specific versions of them anyway. See also http://mediawiki.gnustep.org/index.php/Writing_portable_code for more information. 1.1.3 Tools for porting While the programming interface should be almost transparent between systems (except for the unimplemented parts, of course), there are a variety of other files and tools that are necessary for porting programs. -

Replacing C Library Code with Rust

Replacing C library code with Rust: What I learned with librsvg Federico Mena Quintero [email protected] GUADEC 2017 Manchester, UK What uses librsvg? GNOME platform and desktop: gtk+ indirectly through gdk-pixbuf thumbnailer via gdk-pixbuf eog gstreamer-plugins-bad What uses librsvg? Fine applications: gnome-games (gnome-chess, five-or-more, etc.) gimp gcompris claws-mail darktable What uses librsvg? Desktop environments mate-panel Evas / Enlightenment emacs-x11 What uses librsvg? Things you may not expect ImageMagick Wikipedia ← they have been awesome Long History ● First commit, Eazel, 2001 ● Experiment instead of building a DOM, stream in SVG by using libxml2’s SAX parser ● Renderer used libart ● Gill → Sodipodi → Inkscape ● Librsvg was being written while the SVG spec was being written ● Ported to Cairo eventually – I’ll remove the last libart-ism any day now Federico takes over ● Librsvg was mostly unmaintained in 2015 ● Took over maintenance in February 2015 ● Started the Rust port in October 2016 Pain points: librsvg’s CVEs ● CVE-2011-3146 - invalid cast of RsvgNode type, crash ● CVE-2015-7557 - out-of-bounds heap read in list-of-points ● CVE-2015-7558 - Infinite loop / stack overflow from cyclic element references (thanks to Benjamin Otte for the epic fix) ● CVE-2016-4348 - NOT OUR PROBLEM - integer overflow when writing PNGs in Cairo (rowstride computation) ● CVE-2017-11464 - Division by zero in Gaussian blur code (my bad, sorry) ● Many non-CVEs found through fuzz testing and random SVGs on the net ● https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=librsvg -

Debian Build Dependencies

Debian build dependencies Valtteri Rahkonen valtteri.rahkonen@movial.fi Revision history (version, author, description): 2004-04-29, Rahkonen, Changed title; 2004-04-20, Rahkonen, Added introduction, reorganized document; 2004-04-19, Rahkonen, Initial version. Table of Contents 1. Introduction 2. Required source packages 2.1. Build dependencies 2.2. Misc packages 3. Optional source packages 3.1. Build dependencies 3.2. Misc packages A. Required sources library dependencies B. Optional sources library dependencies -

PyMuPDF Documentation Release 1.18.19

PyMuPDF Documentation Release 1.18.19 Jorj X. McKie Sep 17, 2021 Contents 1 Introduction 1.1 Note on the Name fitz 1.2 License and Copyright 1.3 Covered Version 2 Installation 2.1 Step 1: Install MuPDF 2.2 Step 2: Download and Generate PyMuPDF 3 Tutorial 3.1 Importing the Bindings 3.2 Opening a Document 3.3 Some Document Methods and Attributes 3.4 Accessing Meta Data 3.5 Working with Outlines 3.6 Working with Pages 3.6.1 Inspecting the Links, Annotations or Form Fields of a Page 3.6.2 Rendering a Page 3.6.3 Saving the Page Image in a File 3.6.4 Displaying the Image in GUIs 3.6.4.1 wxPython 3.6.4.2 Tkinter 3.6.4.3 PyQt4, PyQt5, PySide 3.6.5 Extracting Text and Images 3.6.6 Searching for Text 3.7 PDF Maintenance 3.7.1 Modifying, -

Strong Dependencies Between Software Components

Specific Targeted Research Project Contract no.214898 Seventh Framework Programme: FP7-ICT-2007-1 Technical Report 0002 MANCOOSI Managing the Complexity of the Open Source Infrastructure Strong Dependencies between Software Components Pietro Abate ([email protected]) Jaap Boender ([email protected]) Roberto Di Cosmo ([email protected]) Stefano Zacchiroli ([email protected]) Université Paris Diderot, PPS UMR 7126, Paris, France May 24, 2009 Web site: www.mancoosi.org Contents 1 Introduction 2 Strong dependencies 3 Strong dependencies in Debian 3.1 Strong vs direct sensitivity: exceptions 3.2 Using strong dominance to cluster data 3.3 Debian is a small world 4 Efficient computation 5 Applications 6 Related works 7 Conclusion and future work 8 Acknowledgements A Case Study: Evaluation of debian structure Abstract Component-based systems often describe context requirements in terms of explicit inter-component dependencies. Studying large instances of such systems, such as free and open source software (FOSS) distributions, in terms of declared dependencies between packages is appealing. It is however also misleading when the language to express dependencies is as expressive as boolean formulae, which is often the case. In such settings, a more appropriate notion of component dependency exists: strong dependency. This paper introduces such a notion as a first step towards modeling semantic, rather than syntactic, inter-component relationships. Furthermore, a notion of component sensitivity is derived from strong dependencies, with applications to quality assurance and to the evaluation of upgrade risks. An empirical study of strong dependencies and sensitivity is presented, in the context of one of the largest freely available component-based systems. -

DA-683 Linux User's Manual

DA-683 Linux User’s Manual First Edition, January 2011 www.moxa.com/product © 2011 Moxa Inc. All rights reserved. Reproduction without permission is prohibited. DA-683 Linux User’s Manual The software described in this manual is furnished under a license agreement and may be used only in accordance with the terms of that agreement. Copyright Notice Copyright ©2011 Moxa Inc. All rights reserved. Reproduction without permission is prohibited. Trademarks The MOXA logo is a registered trademark of Moxa Inc. All other trademarks or registered marks in this manual belong to their respective manufacturers. Disclaimer Information in this document is subject to change without notice and does not represent a commitment on the part of Moxa. Moxa provides this document as is, without warranty of any kind, either expressed or implied, including, but not limited to, its particular purpose. Moxa reserves the right to make improvements and/or changes to this manual, or to the products and/or the programs described in this manual, at any time. Information provided in this manual is intended to be accurate and reliable. However, Moxa assumes no responsibility for its use, or for any infringements on the rights of third parties that may result from its use. This product might include unintentional technical or typographical errors. Changes are periodically made to the information herein to correct such errors, and these changes are incorporated into new editions of the publication. Technical Support Contact Information www.moxa.com/support Moxa Americas Moxa China (Shanghai office) Toll-free: 1-888-669-2872 Toll-free: 800-820-5036 Tel: +1-714-528-6777 Tel: +86-21-5258-9955 Fax: +1-714-528-6778 Fax: +86-21-5258-5505 Moxa Europe Moxa Asia-Pacific Tel: +49-89-3 70 03 99-0 Tel: +886-2-8919-1230 Fax: +49-89-3 70 03 99-99 Fax: +886-2-8919-1231 Table of Contents 1. -

Licensing Information User Manual Oracle Solaris 11.3 Last Updated September 2018

Licensing Information User Manual Oracle Solaris 11.3 Last Updated September 2018 Part No: E54836 September 2018 Licensing Information User Manual Oracle Solaris 11.3 Part No: E54836 Copyright © 2018, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

V2616 Linux User's Manual Introduction

V2616 Linux User’s Manual First Edition, October 2011 www.moxa.com/product © 2011 Moxa Inc. All rights reserved. V2616 Linux User’s Manual The software described in this manual is furnished under a license agreement and may be used only in accordance with the terms of that agreement. Copyright Notice © 2011 Moxa Inc. All rights reserved. Trademarks The MOXA logo is a registered trademark of Moxa Inc. All other trademarks or registered marks in this manual belong to their respective manufacturers. Disclaimer Information in this document is subject to change without notice and does not represent a commitment on the part of Moxa. Moxa provides this document as is, without warranty of any kind, either expressed or implied, including, but not limited to, its particular purpose. Moxa reserves the right to make improvements and/or changes to this manual, or to the products and/or the programs described in this manual, at any time. Information provided in this manual is intended to be accurate and reliable. However, Moxa assumes no responsibility for its use, or for any infringements on the rights of third parties that may result from its use. This product might include unintentional technical or typographical errors. Changes are periodically made to the information herein to correct such errors, and these changes are incorporated into new editions of the publication. Technical Support Contact Information www.moxa.com/support Moxa Americas Moxa China (Shanghai office) Toll-free: 1-888-669-2872 Toll-free: 800-820-5036 Tel: +1-714-528-6777 Tel: +86-21-5258-9955 Fax: +1-714-528-6778 Fax: +86-21-5258-5505 Moxa Europe Moxa Asia-Pacific Tel: +49-89-3 70 03 99-0 Tel: +886-2-8919-1230 Fax: +49-89-3 70 03 99-99 Fax: +886-2-8919-1231 Table of Contents 1. -

Is 2-D Graphics the Next Killer App for Linux?

Is 2-D graphics the next killer app for Linux? Raph Levien artofcode LLC Abstract This paper describes some of the technical issues and challenges involved in high quality 2D graphics displays. We further describe a number of projects that provide infrastructure for 2D graphics applications under Linux and other free operating systems. 1 Introduction The compelling technical strengths of Linux and other free software systems in multitasking and networking have brought them considerable success in the area of Web servers. In this presentation, I demonstrate the similar technical strengths that free software is gaining in 2D graphics, and argue that this area could be the next “killer app” for Linux. Advances in display technology present an opportunity and a challenge for 2D graphics software. As resolutions pass 140 dpi for CRTs and 200 dpi for LCDs, the user experience becomes a sharp, clear display of information rather than a field of pixels, with a dilemma between smooth but fuzzy antialiasing, or sharp but jaggy edges. These displays pose a formidable challenge to drive well. First, software must be designed to be scalable, or resolution independent. Second, at these resolutions, good antialiasing is crucial for the highest quality. Third, these displays require generating pixels at a very high bandwidth. Fortunately, between existing technology and new projects in development, Linux will meet these challenges. We will present and demonstrate four particularly instructive technologies: Ghostscript is a mature program, long having provided scalable, resolution independent rendering for both screen and printer. We will demonstrate the new transparency and blending capabilities of PDF 1.4, scheduled for the next major release. -

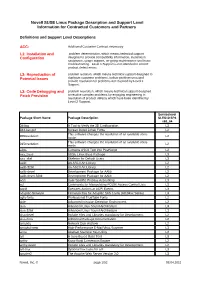

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Definitions and Support Level Descriptions
ACC: Additional Customer Contract necessary
L1: Installation and Configuration. Problem determination, which means technical support designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors.
L2: Reproduction of Potential Issues. Problem isolation, which means technical support designed to duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support.
L3: Code Debugging and Patch Provision. Problem resolution, which means technical support designed to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support.

SLES10 SP4 x86_64 (package short name: package description, service level)
3ddiag: A Tool to Verify the 3D Configuration, L3
844-ksc-pcf: Korean 8x4x4 Johab Fonts, L2
855resolution: This software changes the resolution of an available vbios mode., L2
915resolution: This software changes the resolution of an available vbios mode., L2
a2ps: Converts ASCII Text into PostScript, L2
aaa_base: SUSE Linux Base Package, L3
aaa_skel: Skeleton for Default Users, L3
aalib: An ASCII Art Library, L2
aalib-32bit: An ASCII Art Library, L2
aalib-devel: Development Package for AAlib, L2
aalib-devel-32bit: Development Package for AAlib, L2
acct: User-Specific Process Accounting, L3
acl: Commands for Manipulating POSIX Access Control Lists, L3
acpid: Executes Actions at ACPI Events, L3
adaptec-firmware: Firmware files for Adaptec SAS Cards (AIC94xx Series), L3
agfa-fonts: Professional TrueType Fonts, L2
aide: Advanced Intrusion Detection Environment, L2
alsa: Advanced Linux Sound Architecture, L3
alsa-32bit: Advanced Linux Sound Architecture, L3
alsa-devel: Include Files and Libraries mandatory for Development. -

Menu Navigation for Raspberry Pi with PiFace LCD Display

Documentation "Rasputin-4 PiFace Menü": Menu Navigation for Raspberry Pi with PiFace LCD Display. Reprinting and/or reproduction of this description is expressly permitted. Image credits for this description: the Tux with the notebook comes from the website http://www.openclipart.org/detail/60343. 1st edition. Copyright 02-2014 by Dieter Broichhagen | Humboldtstraße 106 | 51145 Köln. E-mail: [email protected] Web: http://www.broichhagen.de | http://www.illugen-collasionen.com Typesetting, layout, screenshots: Dieter Broichhagen. All rights to this description are held by the author. Overall production: Dieter Broichhagen. Errors and changes excepted! (As of: 08.02.2014) Preface. In 2012 I won a book about the ARDUINO board in a raffle at SFD-2012. I found everything you can do with the ARDUINO very interesting. A short time later I was also able to buy an ARDUINO UNO from Franzis-Verlag for 20 €, but to this day I have done nothing with it, because the RaspberryPi held me completely under its spell and still does. Since October 2012 I have been working with this minicomputer in my spare time. I also had many ideas about what one could do with it. I was not thinking of garage door openers or video shooter games, but rather of applications such as backing up an SDRAM card from a digital camera while on holiday, or an Internet radio, and so on: applications that need neither a monitor nor a keyboard.