Knowledge-Based Verification of Concatenative Programming

Recommended publications

Bit Shifts Bit Operations, Logical Shifts, Arithmetic Shifts, Rotate Shifts

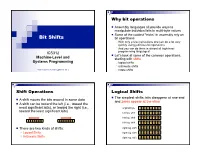

Bit Shifts. ICS312 Machine-Level and Systems Programming. Henri Casanova ([email protected])

Why bit operations

- Assembly languages all provide ways to manipulate individual bits in multi-byte values.
- Some of the coolest “tricks” in assembly rely on bit operations.
- With only a few instructions one can do a lot very quickly using judicious bit operations.
- And you can do them in almost all high-level programming languages!
- Let’s look at some of the common operations, starting with shifts: logical shifts, arithmetic shifts, rotate shifts.

Shift Operations

- A shift moves the bits around in some data.
- A shift can be toward the left (i.e., toward the most significant bits), or toward the right (i.e., toward the least significant bits).
- There are two kinds of shifts: Logical Shifts and Arithmetic Shifts.

Logical Shifts

The simplest shifts: bits disappear at one end and zeros appear at the other.

| Operation | Bits |
|---|---|
| original byte | 1 0 1 1 0 1 0 1 |
| left log. shift | 0 1 1 0 1 0 1 0 |
| left log. shift | 1 1 0 1 0 1 0 0 |
| left log. shift | 1 0 1 0 1 0 0 0 |
| right log. shift | 0 1 0 1 0 1 0 0 |
| right log. shift | 0 0 1 0 1 0 1 0 |
| right log. shift | 0 0 0 1 0 1 0 1 |

Logical Shift Instructions

- Two instructions: shl and shr.
- One specifies by how many bits the data is shifted:
  - Either by just passing a constant to the instruction
  - Or by using whatever

Shifts and Numbers

- The common use for shifts: quickly multiply and divide by powers of 2.
- In decimal, for instance:
  - multiplying 0013 by 10 amounts to doing one left shift to obtain 0130
  - multiplying by 100 = 10^2 amounts to doing two left shifts to obtain 1300
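The logical shifts listed above can be reproduced in a few lines. The following is a minimal Python sketch, not taken from the slides: the helper names `shl8` and `shr8` are hypothetical, and the `0xFF` mask is an assumption used to emulate a byte-wide register, since Python integers are unbounded.

```python
def shl8(value, count=1):
    """Logical left shift on 8 bits: high bits fall off, zeros enter on the right."""
    return (value << count) & 0xFF


def shr8(value, count=1):
    """Logical right shift on 8 bits: low bits fall off, zeros enter on the left."""
    return (value & 0xFF) >> count


original = 0b10110101

# Three successive left shifts of the original byte.
for n in range(1, 4):
    print(f"left  by {n}: {shl8(original, n):08b}")

# Three successive right shifts, continuing from the last left-shifted value.
shifted = shl8(original, 3)
for n in range(1, 4):
    print(f"right by {n}: {shr8(shifted, n):08b}")

# Shifting left by n multiplies by 2**n as long as the result fits in 8 bits.
print(shl8(13, 2))
```

The binary analogue of the decimal example is visible in the last line: two left shifts multiply 13 by 4.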

Chapter 1 Introduction to Computers, Programs, and Java

CMPS161 Class Notes (Chap 01), Kuo-pao Yang

1.1 Introduction

- The central theme of this book is to learn how to solve problems by writing a program.
- This book teaches you how to create programs by using the Java programming language.
- Java is the Internet programming language.
- Why Java? Java enables users to deploy applications on the Internet for servers, desktop computers, and small hand-held devices.

1.2 What is a Computer?

- A computer is an electronic device that stores and processes data.
- A computer includes both hardware and software.
  - Hardware is the physical aspect of the computer that can be seen.
  - Software is the invisible instructions that control the hardware and make it work.
- Computer programming consists of writing instructions for computers to perform.
- A computer consists of the following hardware components:
  - CPU (Central Processing Unit)
  - Memory (main memory)
  - Storage devices (hard disk, floppy disk, CDs)
  - Input/output devices (monitor, printer, keyboard, mouse)
  - Communication devices (modem, NIC (Network Interface Card))

FIGURE 1.1 A computer consists of a CPU, memory, hard disk, floppy disk, monitor, printer, and communication devices.

1.2.1 Central Processing Unit (CPU)

- The central processing unit (CPU) is the brain of a computer.
- It retrieves instructions from memory and executes them.

TinyOS Meets Wireless Mesh Networks

Muhammad Hamad Alizai, Bernhard Kirchen, Jó Ágila Bitsch Link, Hanno Wirtz, Klaus Wehrle. Communication and Distributed Systems, RWTH Aachen University, Germany. [email protected]

Abstract

We present TinyWifi, a nesC code base extending TinyOS to support Linux powered network nodes. It enables developers to build arbitrary TinyOS applications and protocols and execute them directly on Linux by compiling for the new TinyWifi platform. Using TinyWifi as a TinyOS platform, we expand the applicability and means of evaluation of wireless protocols originally designed for sensornets towards inherently similar Linux driven ad hoc and mesh networks.

1 Motivation

Although different in their applications and resource constraints, sensornets and Wi-Fi based multihop networks share inherent similarities: (1) They operate on the same frequency band, (2) experience highly dynamic and bursty links due to radio interferences and other physical influences resulting in unreliable routing paths, (3) each node can only communicate with nodes within its radio range forming a mesh topology

2 TinyWifi

The goal of TinyWifi is to enable direct execution of TinyOS applications and protocols on Linux driven network nodes with no additional effort. To achieve this, the TinyWifi platform extends the existing TinyOS core to provide the exact same hardware independent functionality as any other platform (see Figure 1). At the same time, it exploits the customary advantages of typical Linux driven network devices such as large memory, more processing power and higher communication bandwidth. In the following we describe the architecture of each component of our TinyWifi implementation.

2.1 Timers

The TinyOS timing functionality is based on the hardware timers present in current microcontrollers. A sensor-node platform provides multiple realtime hardware timers to specific TinyOS components at the HAL layer, such as alarms, counters, and virtualization.

Shared Sensor Networks Fundamentals, Challenges, Opportunities, Virtualization Techniques, Comparative Analysis, Novel Architecture and Taxonomy

Journal of Sensor and Actuator Networks, Review

Nahla S. Abdel Azeem 1, Ibrahim Tarrad 2, Anar Abdel Hady 3,4, M. I. Youssef 2 and Sherine M. Abd El-kader 3,*

1. Information Technology Center, Electronics Research Institute (ERI), El Tahrir st, El Dokki, Giza 12622, Egypt; [email protected]
2. Electrical Engineering Department, Al-Azhar University, Naser City, Cairo 11651, Egypt; [email protected] (I.T.); [email protected] (M.I.Y.)
3. Computers & Systems Department, Electronics Research Institute (ERI), El Tahrir st, El Dokki, Giza 12622, Egypt; [email protected]
4. Department of Computer Science & Engineering, School of Engineering and Applied Science, Washington University in St. Louis, St. Louis, MO 63130, 1045, USA; [email protected]

\* Correspondence: [email protected]

Received: 19 March 2019; Accepted: 7 May 2019; Published: 15 May 2019

Abstract: The rapid growth of today’s technological world has led us to connect every electronic device worldwide, which guides us towards the Internet of Things (IoT). The information produced is gathered by very tiny sensing devices under the umbrella of Wireless Sensor Networks (WSNs). These networks suffer from a lack of sharing among them in both hardware and software, which causes redundancy and a larger budget. Thus, the appearance of Shared Sensor Networks (SSNs) provides a real modern revolution. It targets a real change in the nature of these networks, from domain-specific networks to concurrently running domain networks. That happens by merging them with virtualization technology, which enables sharing at different levels of their hardware and software to provide optimal utilization of the deployed infrastructure at a reduced cost.

Low Power or High Performance? A Tradeoff Whose Time Has Come (and Nearly Gone)

JeongGil Ko 1, Kevin Klues 2, Christian Richter 3, Wanja Hofer 4, Branislav Kusy 3, Michael Bruenig 3, Thomas Schmid 5, Qiang Wang 6, Prabal Dutta 7, and Andreas Terzis 1

1. Department of Computer Science, Johns Hopkins University
2. Computer Science Division, University of California, Berkeley
3. Australian Commonwealth Scientific and Research Organization (CSIRO)
4. Department of Computer Science, Friedrich–Alexander University Erlangen–Nuremberg
5. Department Computer Science, University of Utah
6. Department of Control Science and Engineering, Harbin Institute of Technology
7. Division of Computer Science and Engineering, University of Michigan, Ann Arbor

Abstract. Some have argued that the dichotomy between high-performance operation and low resource utilization is false – an artifact that will soon succumb to Moore’s Law and careful engineering. If such claims prove to be true, then the traditional 8/16- vs. 32-bit power-performance tradeoffs become irrelevant, at least for some low-power embedded systems. We explore the veracity of this thesis using the 32-bit ARM Cortex-M3 microprocessor and find quite substantial progress but not deliverance. The Cortex-M3, compared to 8/16-bit microcontrollers, reduces latency and energy consumption for computationally intensive tasks as well as achieves near parity on code density. However, it still incurs a ∼2× overhead in power draw for “traditional” sense-store-send-sleep applications. These results suggest that while 32-bit processors are not yet ready for applications with very tight power requirements, they are poised for adoption everywhere else. Moore’s Law may yet prevail.

1 Introduction

The desire to operate unattended for long periods of time has been a driving force in designing wireless sensor networks (WSNs) over the past decade.

Building Intelligent Middleware for Large Scale CPS Systems

Niels Reijers, Yu-Chung Wang, Chi-Sheng Shih, Jane Y. Hsu. Intel-NTU Connected Context Computing Center, National Taiwan University, Taipei, Taiwan

Kwei-Jay Lin. Department of Electrical Eng. and Comp. Sci., University of California, Irvine, Irvine, CA, USA

Abstract: This paper presents a study on building intelligent middleware on wireless sensor network (WSN) for large-scale cyber-physical systems (LCPS). A large portion of WSN projects has focused on low-level algorithms such as routing, MAC layers, and data aggregation. At a higher level, various WSN applications with specific target environments have been developed and deployed. Most applications are built directly on top of a small OS, which is notoriously hard to work with, and results in applications that are fragile and cannot be ported to other platforms. The gap between the low level algorithms and the application development process has received significantly less attention. Our interest is to fill that gap by developing intelligent middleware for building LCPS applications.

[...] application to express globally what it really wants from the sensor network. Furthermore, since most WSN applications are specifically designed to particular scenarios and unique target environments, most behavior is hardcoded, resulting in rigid networks that are typically not very flexible at adjusting their behavior to faults or changing external conditions. A more high level vision on how large-scale cyber-physical systems (LCPS) or networks of sensors should be programmed, deployed, and configured is still missing.

Indeed, we are far from conducting Rapid Application Development (RAD) for LCPS. This project aims to fill the gap,

Language Translators

Student Notes, Theory. K Aquilina

LANGUAGE TRANSLATORS

A. HIGH AND LOW LEVEL LANGUAGES

Programming languages divide into:

- Low-Level Languages. Example: Assembly Language
- High-Level Languages. Examples: Pascal, Basic, Java

Characteristics of LOW Level Languages:

- They are machine oriented: an assembly language program written for one machine will not work on any other type of machine unless they happen to use the same processor chip.
- Each assembly language statement generally translates into one machine code instruction, therefore the program becomes long and time-consuming to create.

Example:

| Machine code | Assembly |
|---|---|
| 10100101 01110001 | LDA &71 |
| 01101001 00000001 | ADD #&01 |
| 10000101 01110001 | STA &71 |

Characteristics of HIGH Level Languages:

- They are not machine oriented: in theory they are portable, meaning that a program written for one machine will run on any other machine for which the appropriate compiler or interpreter is available.
- They are problem oriented: most high level languages have structures and facilities appropriate to a particular use or type of problem. For example, FORTRAN was developed for use in solving mathematical problems. Some languages, such as PASCAL, were developed as general-purpose languages.
- Statements in high-level languages usually resemble English sentences or mathematical expressions, and these languages tend to be easier to learn and understand than assembly language.
- Each statement in a high level language will be translated into several machine code instructions.

Example: the statement `number := number + 1;` translates into 10100101 01110001 01101001 00000001 10000101 01110001.

B. GENERATIONS OF PROGRAMMING LANGUAGES

- 4th generation: 4GLs
- 3rd generation: High Level Languages
- 2nd generation: Low-level Languages
- 1st generation: Machine Code

1. MACHINE LANGUAGE – 1ST GENERATION

In the early days of computer programming all programs had to be written in machine code.
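To make the one-statement-to-one-instruction mapping concrete, here is a small Python sketch that executes the six machine-code bytes from the example. It is an illustration only: the opcode meanings are assumed from the mnemonics in the listing (LDA loads from an address, ADD adds an immediate value, STA stores to an address), the function name `run` is hypothetical, and carry and status flags are ignored.

```python
# The six bytes from the listing: LDA &71, ADD #&01, STA &71.
PROGRAM = bytes([
    0b10100101, 0b01110001,  # LDA &71
    0b01101001, 0b00000001,  # ADD #&01
    0b10000101, 0b01110001,  # STA &71
])

LDA, ADD, STA = 0b10100101, 0b01101001, 0b10000101


def run(program, memory):
    """Execute two-byte instructions (opcode, operand) against a byte memory."""
    acc = 0
    for pc in range(0, len(program), 2):
        opcode, operand = program[pc], program[pc + 1]
        if opcode == LDA:
            acc = memory[operand]          # load accumulator from address
        elif opcode == ADD:
            acc = (acc + operand) & 0xFF   # add immediate, wrap at 8 bits
        elif opcode == STA:
            memory[operand] = acc          # store accumulator to address
        else:
            raise ValueError(f"unknown opcode {opcode:08b}")
    return memory


memory = bytearray(256)
memory[0x71] = 41          # plays the role of the variable `number`
run(PROGRAM, memory)
print(memory[0x71])
```

The three instructions together do what the single high-level statement `number := number + 1;` does, with `number` living at address &71.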

Improved Ahead-of-Time Compilation of Stack-Based JVM Bytecode on Resource-Constrained Devices

Niels Reijers, Chi-Sheng Shih. NTU-IoX Research Center, Department of Computer Science and Information Engineering, National Taiwan University

arXiv:1712.05590v2 [cs.PL] 18 Dec 2017

Abstract

Many virtual machines exist for sensor nodes with only a few KB RAM and tens to a few hundred KB flash memory. They pack an impressive set of features, but suffer from a slowdown of one to two orders of magnitude compared to optimised native code, reducing throughput and increasing power consumption. Compiling bytecode to native code to improve performance has been studied extensively for larger devices, but the restricted resources on sensor nodes mean most modern techniques cannot be applied. Simply replacing bytecode instructions with predefined sequences of native instructions is known to improve performance, but produces code several times larger than the optimised C equivalent, limiting the size of programmes that can fit onto a device. This paper identifies the major sources of overhead resulting from this basic approach, and presents optimisations to remove most of the remaining performance overhead, and over half the size overhead, reducing them to 69% and 91% respectively. While this increases the size of the VM, the break-even point at which this fixed cost is compensated for is well within the range of memory available on a sensor device, allowing us to both improve performance and load more code on a device.

1 Introduction

Internet-of-Things devices come in a wide range, with vastly different performance characteristics, cost, and power requirements.
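The basic approach the abstract mentions, replacing each bytecode instruction with a predefined sequence of native instructions, can be sketched as a table lookup. The following Python sketch is an assumption-laden illustration, not the paper's compiler: the bytecode format, the `TEMPLATES` table, the register names, and the function `translate` are all hypothetical.

```python
# Hypothetical templates: each stack bytecode maps to a fixed native sequence.
TEMPLATES = {
    "push": ["mov r0, {arg}", "push r0"],
    "add": ["pop r1", "pop r0", "add r0, r1", "push r0"],
}


def translate(bytecode):
    """Expand each (opcode, *args) tuple into its predefined native sequence."""
    native = []
    for op, *args in bytecode:
        arg = args[0] if args else ""
        for line in TEMPLATES[op]:
            native.append(line.format(arg=arg))
    return native


program = [("push", 2), ("push", 3), ("add",)]
for line in translate(program):
    print(line)
```

Three bytecode instructions expand to eight native lines here, and the values pushed by one template are immediately popped by the next. That kind of redundancy is one plausible illustration of why a naive expansion can be much larger than hand-optimised code.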

Fortran Programming Guide

Sun Studio 12: Fortran Programming Guide

Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. Part No: 819–5262

Copyright 2007 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved.

Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more U.S. patents or pending patent applications in the U.S. and in other countries.

U.S. Government Rights – Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements.

This distribution may include materials developed by third parties. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd.

Sun, Sun Microsystems, the Sun logo, the Solaris logo, the Java Coffee Cup logo, docs.sun.com, Java, and Solaris are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc.

The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry.

Using LLVM for Optimized Lightweight Binary Re-Writing at Runtime

Alexis Engelke, Josef Weidendorfer. Department of Informatics, Technical University of Munich, Munich, Germany. EMail: [email protected], [email protected]

Abstract: Providing new parallel programming models/abstractions as a set of library functions has the huge advantage that it allows for a relatively easy incremental porting path for legacy HPC applications, in contrast to the huge effort needed when novel concepts are only provided in new programming languages or language extensions. However, performance issues are to be expected with fine granular usage of library functions. In previous work, we argued that binary rewriting can bridge the gap by tightly coupling application and library functions at runtime. We showed that runtime specialization at the binary level, starting from a compiled, generic stencil code, can help in approaching the performance of a manually written, statically compiled version. In this paper, we analyze the benefits of post-processing the re-written binary code using standard compiler optimizations as provided by LLVM.

[...] helpful in reducing any overhead of provided abstractions. But with new languages, porting of existing application code is required, being a high burden for adoption with old code bases. Therefore, to provide abstractions such as PGAS for legacy codes, APIs implemented as libraries are proposed [5], [6]. Libraries have the benefit that they easily can be composed and can stay small and focused. However, libraries come with the caveat that fine granular usage of library functions in inner kernels will severely limit compiler optimizations such as vectorization and thus, may heavily reduce performance.

To this end, in previous work [7], we proposed a technique for lightweight code generation by re-combining existing

Complete Code Generation from UML State Machine

Van Cam Pham, Ansgar Radermacher, Sébastien Gérard and Shuai Li. CEA, LIST, Laboratory of Model Driven Engineering for Embedded Systems, P.C. 174, Gif-sur-Yvette, 91191, France

Keywords: UML State Machine, Code Generation, Semantics-conformance, Efficiency, Events, C++.

Abstract: An event-driven architecture is a useful way to design and implement complex systems. The UML State Machine and its visualizations are a powerful means to the modeling of the logical behavior of such an architecture. In Model Driven Engineering, executable code can be automatically generated from state machines. However, existing generation approaches and tools from UML State Machines are still limited to simple cases, especially when considering concurrency and pseudo states such as history, junction, and event types. This paper provides a pattern and tool for a complete and efficient code generation approach from UML State Machine. It extends IF-ELSE-SWITCH constructions of programming languages with concurrency support. The code generated with our approach has been executed with a set of state-machine examples that are part of a test-suite described in the recent OMG standard Precise Semantics Of State Machine. The traced execution results comply with the standard and are a good hint that the execution is semantically correct. The generated code is also efficient: it supports multi-thread-based concurrency, and the (static and dynamic) efficiency of generated code is improved compared to considered approaches.

1 INTRODUCTION

The UML State Machine (USM) (Specification and

Completeness: Existing tools and approaches mainly focus on the sequential aspect while the concurrency of state machines is limitedly supported.
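For readers unfamiliar with the IF-ELSE-SWITCH style the abstract refers to, the sketch below shows the plain sequential pattern that such generators commonly start from. It is written in Python for illustration only and is not the authors' generated code: the states, events, and the function `step` are hypothetical, and it covers none of the concurrency or pseudo-state support the paper adds.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    RUNNING = auto()


class Event(Enum):
    START = auto()
    STOP = auto()


def step(state, event):
    """Return the next state; the outer branch selects the state, the inner one the event."""
    if state is State.IDLE:
        if event is Event.START:
            return State.RUNNING
    elif state is State.RUNNING:
        if event is Event.STOP:
            return State.IDLE
    # Events with no matching transition leave the state unchanged.
    return state


state = State.IDLE
for event in (Event.START, Event.START, Event.STOP):
    state = step(state, event)
    print(event.name, "->", state.name)
```

Each state becomes one branch and each handled event one nested branch, which is why the pattern stays simple for sequential machines and needs extension once regions run concurrently.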

Chapter 1 Introduction to Computers, Programs, and Java

1.1 Introduction

- Java is the Internet programming language.
- Why Java? Java enables users to deploy applications on the Internet for servers, desktop computers, and small hand-held devices.

1.2 What is a Computer?

- A computer is an electronic device that stores and processes data.
- A computer includes both hardware and software.
  - Hardware is the physical aspect of the computer that can be seen.
  - Software is the invisible instructions that control the hardware and make it work.
- Programming consists of writing instructions for computers to perform.
- A computer consists of the following hardware components:
  - CPU (Central Processing Unit)
  - Memory (main memory)
  - Storage devices (hard disk, floppy disk, CDs)
  - Input/output devices (monitor, printer, keyboard, mouse)
  - Communication devices (modem, NIC (Network Interface Card))

FIGURE 1.1 A computer consists of a CPU, memory, hard disk, floppy disk, monitor, printer, and communication devices.

1.2.1 Central Processing Unit (CPU)

- The central processing unit (CPU) is the brain of a computer.
- It retrieves instructions from memory and executes them.
- The CPU usually has two components: a control unit and an arithmetic/logic unit (ALU).
- The control unit coordinates the actions of the other components.
- The ALU performs numeric operations (+ - / *) and logical operations (comparison).
- The CPU speed is measured by clock speed in megahertz (MHz), with 1 megahertz equaling 1 million pulses per second.
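The clock-speed definition in the last bullet is simple arithmetic, shown here as a short Python sketch. The function names `pulses_per_second` and `clock_period_ns` are hypothetical and only restate the 1 MHz = 1 million pulses per second relation from the notes.

```python
def pulses_per_second(mhz):
    """Clock pulses per second for a clock speed given in megahertz."""
    return mhz * 1_000_000


def clock_period_ns(mhz):
    """Duration of one clock pulse in nanoseconds (1 second = 1e9 ns)."""
    return 1_000 / mhz


for speed in (1, 500):
    print(speed, "MHz:", pulses_per_second(speed), "pulses/s,",
          clock_period_ns(speed), "ns per pulse")
```

A faster clock means a shorter pulse, so the time per pulse shrinks in proportion as the megahertz figure grows.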