A Decision-Making Framework to Determine the Value of On-Orbit Servicing Compared to Replacement of Space Telescopes Mark Baldes

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


DoD Space Technology Guide

Foreword

Space-based capabilities are integral to the U.S.’s national security operational doctrines and processes. Such capabilities as reliable, real-time high-bandwidth communications can provide an invaluable combat advantage in terms of clarity of command intentions and flexibility in the face of operational changes. Satellite-generated knowledge of enemy dispositions and movements can be and has been exploited by U.S. and allied commanders to achieve decisive victories. Precision navigation and weather data from space permit optimal force disposition, maneuver, decision-making, and responsiveness. At the same time, space systems focused on strategic nuclear assets have enabled the National Command Authorities to act with confidence during times of crisis, secure in their understanding of the strategic force postures.

"If our Armed Forces are to be faster, more lethal, and more precise in 2020 than they are today, we must continue to invest in and develop new military capabilities." (Joint Vision 2020)

Access to space and the advantages deriving from operating in space are being affected by technological progress throughout the world. As in other areas of technology, the advantages our military derives from its uses of space are dynamic. Current space capabilities derive from prior decades of technology development and application. Future capabilities will depend on space technology programs of today. Thus, continuing investment in space technologies is needed to maintain the “full spectrum dominance” called for by Joint Vision 2010 and 2020, and to protect freedom of access to space by all law-abiding nations. Trends in the availability and directions of technology clearly suggest that the U.S. -

Design Characteristics of Iran's Ballistic and Cruise Missiles

Design Characteristics of Iran’s Ballistic and Cruise Missiles. Last update: January 2013

Artillery*

| Missile | NATO or other name | Type/System | Length (m) | Diameter (m) | Payload (kg); warhead | Range (km) | Accuracy, CEP (m) | Propellant/Stages | Guidance |
|---|---|---|---|---|---|---|---|---|---|
| Hasib/Fajr-1 [1]* | | Rocket artillery (O) | 0.83 | 0.107 | 6; HE | 8.5 | - | Solid | Spin stabilized |
| Falaq-1 [2]* | | Rocket artillery (O) | 1.29 | 0.244 | 50; HE | 10 | | Solid | Spin stabilized |
| Falaq-2 [3]* | | Rocket artillery (O) | 1.82 | 0.333 | 120; HE | 11 | | Solid | Spin stabilized |
| Arash-1 [4]* | | Rocket artillery (O) | 2.8 | 0.122 | 18.3; HE | 21.5 | | Solid | Spin stabilized |
| Arash-2 [5]* | | Rocket artillery (O) | 3.2 | 0.122 | 18.3; HE | 30 | | Solid | Spin stabilized |
| Arash-3 [6]* | | Rocket artillery (O) | 2 | 0.122 | 18.3; HE | 18 | | Solid | Spin stabilized |
| Shahin-1 [7]* | | Rocket artillery (O) | 2.9 | 0.33 | 190; HE | 13 | | Solid | Spin stabilized |
| Shahin-2 [8]* | | Rocket artillery (O) | 3.9 | 0.33 | 190; HE | 20 | | Solid | Spin stabilized |
| Oghab [9]* | | Rocket artillery (O) | 4.82 | 0.233 | 70; HE | 40 | | Solid | Spin stabilized |
| Fajr-3 [10]* | | Rocket artillery (O) | 5.2 | 0.24 | 45; HE | 45 | | Solid | Spin stabilized |
| Fajr-5 [11]* | | Rocket artillery (O) | 6.6 | 0.33 | 90; HE | 75 | | Solid | Spin stabilized |
| Falaq-1 [12]* | | Rocket artillery (O) | 1.38 | 0.24 | 50; HE | 10 | | Solid | Spin stabilized |
| Falaq-2 [13]* | | Rocket artillery (O) | 1.8 | 0.333 | 60; HE | 11 | | Solid | Spin stabilized |
| Nazeat-6 [14]* | | Rocket artillery (O) | 6.3 | 0.355 | 150; HE | 100 | | Solid | Spin stabilized |
| Nazeat [15]* | | Rocket artillery (O) | 5.9 | 0.355 | 150; HE | 120 | | Solid | Spin stabilized |
| Zelzal-1 [16]* | Iran-130 | Rocket artillery (O) | 8.3 | 0.61 | 500-600; HE | 100-125 | | Solid | Spin stabilized |
| Zelzal-1A [17]* | Mushak-120 | Rocket artillery (O) | 8.3 | 0.61 | 500-600; HE | 160 | | Solid | Spin stabilized |
| Nazeat-10 [18]* | Mushak-160 | Rocket artillery (O) | 8.3 | 0.45 | 250; HE | 150 | | Solid | Spin stabilized |

Related content is available on the website for the Nuclear Threat Initiative, www.nti.org. -

Thesis Submitted to Florida Institute of Technology in Partial Fulfillment of the Requirements for the Degree Of

Dynamics of Spacecraft Orbital Refueling, by Casey Clark (Bachelor of Aerospace Engineering, Mechanical & Aerospace Engineering, College of Engineering, 2016). A thesis submitted to Florida Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Aerospace Engineering. Melbourne, Florida, July 2018. © Copyright 2018 Casey Clark. All Rights Reserved. The author grants permission to make single copies.

We the undersigned committee hereby approve the attached thesis, Dynamics of Spacecraft Orbital Refueling by Casey Clark: Dr. Tiauw Go, Sc.D., Associate Professor, Mechanical & Aerospace Engineering, Committee Chair; Dr. Jay Kovats, Ph.D., Associate Professor, Mathematics, Outside Committee Member; Dr. Markus Wilde, Ph.D., Assistant Professor, Mechanical & Aerospace Engineering, Committee Member; Dr. Hamid Hefazi, Ph.D., Professor and Department Head, Mechanical & Aerospace Engineering.

ABSTRACT. Title: Dynamics of Spacecraft Orbital Refueling. Author: Casey Clark. Major Advisor: Dr. Tiauw Go, Sc.D.

A quantitative collation of relevant parameters for successfully completed experimental on-orbit fluid transfers and anticipated orbital refueling future missions is performed. The dynamics of connected satellites sustaining fuel transfer are derived by treating the connected spacecraft as a rigid body and including an internal mass flow rate. An orbital refueling results in a time-varying local center of mass related to the connected spacecraft. This is accounted for by incorporating a constant mass flow rate in the inertia tensor. Simulations of the equations of motion are performed using the values of the parameters of authentic missions in an endeavor to provide conclusions regarding the effect of an internal mass transfer on the attitude of refueling spacecraft. -
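The time-varying center of mass mentioned in this abstract can be illustrated with a minimal one-dimensional sketch. It is not the thesis's model: it assumes two point masses on a line and a constant transfer rate, and every name and number in it (`com_during_transfer`, the masses, the 2 m separation, the 0.5 kg/s rate) is hypothetical.

```python
def center_of_mass(m_a, m_b, x_a, x_b):
    """Center of mass (m) of two point masses located at x_a and x_b."""
    return (m_a * x_a + m_b * x_b) / (m_a + m_b)


def com_during_transfer(m_a0, m_b0, x_a, x_b, mdot, t):
    """Center of mass at time t while mass moves from A to B.

    Assumption for illustration only: the transfer rate mdot (kg/s) is
    constant and the moved mass is lumped at the two body positions.
    """
    moved = mdot * t
    if moved > m_a0:
        raise ValueError("cannot transfer more mass than body A holds")
    return center_of_mass(m_a0 - moved, m_b0 + moved, x_a, x_b)


if __name__ == "__main__":
    # Hypothetical values: 1000 kg servicer at x = 0 m, 500 kg client at x = 2 m.
    for t in (0.0, 50.0, 100.0):
        print(t, com_during_transfer(1000.0, 500.0, 0.0, 2.0, 0.5, t))
```

Because the total mass stays constant, the center of mass in this toy model moves linearly in time at `mdot * (x_b - x_a) / (m_a0 + m_b0)` metres per second; a full treatment would carry the same shift into the inertia tensor.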

Ruimtewapens Schuldwoestijn Graffiti Without Gravity Van De Hoofdredacteur

Space Weapons, Schuldwoestijn, Graffiti without Gravity

From the editor-in-chief: We hope it has not escaped your notice that, with the arrival of the Space Studies Program (SSP) of the International Space University and a large number of events in the Delft, The Hague, Leiden and Noordwijk region, this has been a special summer for spaceflight. This ‘Sizzling Summer of Space’ has now really come to an end, however. A retrospective on SSP in the Netherlands is planned for the next issue, but in this issue you will already find, alongside, a special photo of the King with the participants during the opening ceremony and a report on a side event sponsored by the NVR. The performance ‘Fly me to the Moon’ was also supported by the NVR and was attended by a number of our members. The play made fine use of the special setting of Decos on the Space Campus in Noordwijk. Many new developments are under way on the Space Campus itself, and we want to give them attention in the near future.

In this issue you will find the first article in a series on space weapons and an article on gloveboxes that we have reprinted from the magazine Spaceflight under the cooperation agreement with the British Interplanetary Society. It is good when Dutch parties are positive about their own products, but it is of course even better when an independent foreign publication puts it in writing. It is also striking that two articles are related to lectures at the Ruimtevaart Museum in Lelystad: both the book Schuldwoestijn and the article on space weapons have been the subject of a lecture there. -

About the cover: The winning artwork of the ‘Graffiti without Gravity’ competition, by Shane Sutton. [ESA/Hague Street Art/S. Sutton]

Photo of the quarter

The Washington Institute for Near East Policy August

The Washington Institute for Near East Policy, August 2020, PN84. Photo credit: Reuters. © 2020 The Washington Institute for Near East Policy. All rights reserved.

Farzin Nadimi

On April 22, 2020, Iran’s Islamic Revolutionary Guard Corps Aerospace Force (IRGC-ASF) launched its first-ever satellite, the Nour-1, into orbit. The launch, conducted from a desert platform near Shahrud, about 210 miles northeast of Tehran, employed Iran’s new Qased (“messenger”) space-launch vehicle (SLV). In broad terms, the launch showed the risks of lifting arms restrictions on Iran, a pursuit in which the Islamic Republic enjoys support from potential arms-trade partners Russia and China. Practically, lifting the embargo could facilitate Iran’s unhindered access to dual-use materials and other components used to produce small satellites with military or even terrorist applications. Beyond this, the IRGC’s emerging military space program proves its ambition to field larger solid-propellant missiles. Britain, France, and Germany (the EU-3 signatories of the Joint Comprehensive Plan of Action, as the 2015 Iran nuclear deal is known) support upholding the arms embargo until 2023. The United States, which has withdrawn from the deal, started a process on August 20, 2020, that could lead to a snapback of all UN sanctions enacted since 2006.[1]

Photo caption: The IRGC’s Qased space-launch vehicle, shown at the Shahrud site in April.

The Qased-1, for its part, succeeded over its three stages in placing the very small Nour-1 satellite in a near circular low earth orbit (LEO) of about 425 km. The first stage involved an off-the-shelf Shahab-3/Ghadr liquid-fuel missile, although without the warhead section, produced by the Iranian Ministry of Defense.[2] According to ASF commander Gen. -

ELV Launch History (1998-2011)

[Chart: NASA-LSP Managed ELV Launch History (1998-2011). Missions are plotted by launch year (1998 to 2011) against ELV performance class (Small Class, Medium Class, Intermediate / Heavy Class), each labeled with its vehicle, range, and launch date. Legend: Athena (AT), Pegasus XL (PXL), Pegasus Hybrid (PHYB), Taurus (T), Delta II (DII), Delta II Heavy (DIIH), Delta III (DIII), Atlas II (IIA), Atlas II with Solids (IIAS), Atlas V (AV), Delta IV (DIV).]

STS-S26 Stage Set

SatCom For Net-Centric Warfare. MilsatMagazine, September/October 2010. STS-S26 stage set. Military satellites. Kodiak Island Launch Complex, photo courtesy of Alaska Aerospace Corp.

PAYLOAD

Command center: Colonel Carol P. Welsch, Commander, Space Development Group, Kirtland AFB, by MilsatMagazine Editors; Karl Fuchs, Vice President of Engineering, iDirect Government Technologies, by MilsatMagazine Editors; Brig. General Robert T. Osterhaler, U.S.A.F. (Ret.), CEO, SES WORLD SKIES, U.S. Government Solutions, by MilsatMagazine Editors.

Intel: Video Intelligence; Zombiesats & On-Orbit Servicing, by Brian Weeden; HI-CAP Satellites, by Bruce Rowe; The Orbiting Vehicle Series (OV1), by Jos Heyman; India’s Missile Defense/Anti-Satellite NEXUS, by Victoria Samson.

Focus: Warfighter-On-The-Move, by Bhumika Baksir; MILSATCOM For The Next Decade, by Chris Hazel; The First Line Of Defense, by Angie Champsaur; MILSATCOM In Harsh Conditions. -

Amsat-Sm Info 99/3

Membership magazine of AMSAT-SM, Number 3, September 1999

Cover captions: TRX4 packet transceiver for our latest satellite, UoSAT-12 (page 10); 70 cm antenna for AO-27 (page 18).

Contents: SM7ANL Silent Key; New list from AMSAT-UK; QSO via Oscar 10; Test order from AMSAT-UK; Experiments with 38k4; 70 cm band under threat; Frequency usage on 70 cm; Report from the 14th colloquium; Phase 3-D status; Satellite status; A DX summer with Oscar 10; News in brief; From the Sunday net; The solar eclipse; PSK 31; Sun Clock; What it takes to operate P3D, Part 2/2.

AMSAT-SM board:

Chair: Olle Enstam (SM0DY), Idunavägen 36, 181 61 Lidingö. Tel/fax: 08-766 51 27. E-mail: [email protected]
Secretary / HF info net: Henry Bervenmark (SM5BVF), Vallmovägen 10, 176 74 Järfälla. Tel/fax: 08-583 555 80. E-mail: [email protected]
Treasurer: Kim Pettersson (SM1TDX), Signalgatan 26B, 621 47 Visby. Tel: 0498-21 37 52. E-mail: [email protected]
Technical secretary: Bruce Lockhart (SM0TER), Rymdgatan 56, 195 58 Märsta. Tel/fax: 08-591 116 12. E-mail: [email protected]
QTC editor: Anders Svensson (SM0DZL), Blåbärsvägen 9, 761 63 Norrtälje. Tel: 0176-198 62. E-mail: [email protected]
Deputy member: Ingemar Myhrberg (SM0AIG), Århusvägen 98, 164 45 Kista. Tel/fax: 08-751 48 50. E-mail: [email protected]
Deputy member: Sven Grahn, Rättviksvägen 44, 192 71 Sollentuna. Tel: 08-754 19 04. Fax: 08-626 70 44. E-mail: [email protected]

Officers:

INFO / website: Lars Thunberg (SM0TGU), Svarvargatan 20 2tr, 112 49 Stockholm. Tel: 08-654 28 21. E-mail: [email protected]
ELMER: Göran Gerkman (SM5UFB), V:a Esplanaden 17, 591 60 Motala. Tel: 0141-575 04. E-mail: [email protected]
Printing of INFO: Leif Möller (SM0PUY), Ekebyvägen 18, 186 34 Vallentuna. Tel: 08-511 802 01. E-mail: [email protected]

Address: AMSAT-SM, c/o Lars Thunberg. Member contact: general questions about the association. INFO channels: HF info net, Sundays at -

CRONOLOGIA DE LOS SATÉLITES ARTIFICIALES DE LA

Artificial Satellites. Chapter 5, Subchapter 10. Chronology of the Artificial Satellites of the Earth.

This is a chronological list of all launches of artificial satellites of our planet, regardless of their success or failure, whether at launch or in orbit. This means that many of them never reached space and were destroyed. Listed first (on the left) is the satellite's name, followed by the launch date, the country to which the satellite belongs (which may differ from the country launching it) and the type of satellite; this last item may not be exact, since some satellites are multi-purpose. In multiple launches, each satellite is listed separately (except in cases of failure, in which they never separate) but naturally under the same date and together. Satellites carried on crewed flights are NOT included, although they are mentioned in the corresponding satellite program and in the chapter “General chronology of launches”.

| Satellite | Date (DD.MM.YYYY) | Country | Type |
|---|---|---|---|
| SPUTNIK F1 | 15.05.1957 | USSR | Experimental or technological |
| SPUTNIK F2 | 21.08.1957 | USSR | Experimental or technological |
| SPUTNIK 01 | 04.10.1957 | USSR | Experimental or technological |
| SPUTNIK 02 | 03.11.1957 | USSR | Scientific |
| VANGUARD-1A | 06.12.1957 | USA | Experimental or technological |
| EXPLORER 01 | 31.01.1958 | USA | Scientific |
| VANGUARD-1B | 05.02.1958 | USA | Experimental or technological |
| EXPLORER 02 | 05.03.1958 | USA | Scientific |
| VANGUARD-1 | 17.03.1958 | USA | Experimental or technological |
| EXPLORER 03 | 26.03.1958 | USA | Scientific |
| SPUTNIK D1 | 27.04.1958 | USSR | Geodetic |

VANGUARD-2A -

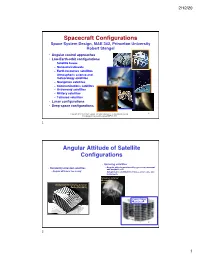

10. Spacecraft Configurations MAE 342 2016

Spacecraft Configurations
Space System Design, MAE 342, Princeton University, Robert Stengel

• Angular control approaches
• Low-Earth-orbit configurations
  – Satellite buses
  – Nanosats/cubesats
  – Earth resources satellites
  – Atmospheric science and meteorology satellites
  – Navigation satellites
  – Communications satellites
  – Astronomy satellites
  – Military satellites
  – Tethered satellites
• Lunar configurations
• Deep-space configurations

Copyright 2016 by Robert Stengel. All rights reserved. For educational use only. http://www.princeton.edu/~stengel/MAE342.html

Angular Attitude of Satellite Configurations

• Spinning satellites
  – Angular attitude maintained by gyroscopic moment
• Randomly oriented satellites and magnetic coil
  – Angular attitude is free to vary
  – Axisymmetric distribution of mass, solar cells, and instruments

Figure labels: Television Infrared Observation (TIROS-7); Orbital Satellite Carrying Amateur Radio (OSCAR-1); ESSA-2 TIROS “Cartwheel”

Attitude-Controlled Satellite Configurations

• Dual-spin satellites
  – Angular attitude maintained by gyroscopic moment and thrusters
  – Axisymmetric distribution of mass and solar cells
  – Instruments and antennas do not spin
• Attitude-controlled satellites
  – Angular attitude maintained by 3-axis control system
  – Non-symmetric distribution of mass, solar cells and instruments

Figure labels: INTELSAT-IVA; NOAA-17

Satellite Buses

Standardization of common components for a variety of missions

Figure labels: LADEE Bus Modules; Modular Common Spacecraft Bus Lander Configuration (Hine et al)

Evolution -

Guidance, Navigation and Control System for Autonomous Proximity Operations and Docking of Spacecraft

Scholars' Mine, Doctoral Dissertations, Student Theses and Dissertations, Summer 2009. Department: Mechanical and Aerospace Engineering.

Recommended citation: Lee, Daero, "Guidance, navigation and control system for autonomous proximity operations and docking of spacecraft" (2009). Doctoral Dissertations. 1942. https://scholarsmine.mst.edu/doctoral_dissertations/1942

Guidance, Navigation and Control System for Autonomous Proximity Operations and Docking of Spacecraft, by Daero Lee. A dissertation presented to the Faculty of the Graduate School of the Missouri University of Science and Technology in partial fulfillment of the requirements for the degree Doctor of Philosophy in Aerospace Engineering, 2009. Approved by Henry Pernicka (Advisor), Jagannathan Sarangapani, Ashok Midha, Robert G. Landers, and Cihan H Dagli. 2009 Daero Lee. All rights reserved.

ABSTRACT

This study develops an integrated guidance, navigation and control system for use in autonomous proximity operations and docking of spacecraft. A new approach strategy is proposed based on a modified system developed for use with the International Space Station. It is composed of three “V-bar hops” in the closing transfer phase, two periods of stationkeeping and a “straight line V-bar” approach to the docking port. -

Iran's Space Program: Timeline and Technology

Report: Iran’s Space Program: Timeline and Technology. 29 Apr. 2020

Contents: I- Iran’s Space and Satellite Program’s Timeline; II- Satellite and SLV Technology in Iran; Conclusion

Iran successfully launched its first dual-purpose (military and non-military) satellite, Noor, into orbit on April 22, 2020. Produced domestically, the satellite was launched by a new Space Launch Vehicle (SLV), the Qased. The launch publicly disclosed military aspects of Iran’s space program that were previously denied. Noor has increased international concerns over Iran’s hidden Inter-Continental Ballistic Missile (ICBM) program. Iran’s latest technological and military advance in satellites is a far cry from its early commitment to United Nations treaties and principles promoting the peaceful use of space. Iran is a founding member of the United Nations Committee on the Peaceful Uses of Outer Space (COPUOS), launched in December 1958. The following sections review the timeline and technology of Iran’s space program, to show that it had a military component that evolved over time.

I- Iran’s Space and Satellite Program’s Timeline

Set up over a decade ago, Iran’s satellite and inter-continental missile technology was launched by the Islamic Revolutionary Guard Corps (IRGC)-affiliated Self-Sufficiency Jihad Organization (SSJO). The components of the program were laid out as early as 2004, when Iran established a Supreme Space Council chaired by its sitting president, and monitored by the Iranian Ministry of Communication and Informational Technology.[1] In the early stages of Iran’s space program, communication satellites were developed jointly at lower cost with foreign space agencies.