CNTR: Lightweight OS Containers



Recommended publications


Securing Embedded Systems: Analyses of Modern Automotive Systems and Enabling Near-Real Time Dynamic Analysis

Securing Embedded Systems: Analyses of Modern Automotive Systems and Enabling Near-Real Time Dynamic Analysis Karl Koscher A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Washington 2014 Reading Committee: Tadayoshi Kohno, Chair Gaetano Borriello Shwetak Patel Program Authorized to Offer Degree: Computer Science and Engineering © Copyright 2014 Karl Koscher University of Washington Abstract Securing Embedded Systems: From Analyses of Modern Automotive Systems to Enabling Dynamic Analysis Karl Koscher Chair of the Supervisory Committee: Associate Professor Tadayoshi Kohno Department of Computer Science and Engineering Today, our life is pervaded by computer systems embedded inside everyday products. These embedded systems are found in everything from cars to microwave ovens. These systems are becoming increasingly sophisticated and interconnected, both to each other and to the Internet. Unfortunately, it appears that the security implications of this complexity and connectivity have mostly been overlooked, even though ignoring security could have disastrous consequences; since embedded systems control much of our environment, compromised systems could be used to inflict physical harm. This work presents an analysis of security issues in embedded systems, including a comprehensive security analysis of modern automotive systems. We hypothesize that dynamic analysis tools would quickly discover many of the vulnerabilities we found. However, as we will discuss, there -

Root Filesystem

Operating Systems And Applications For Embedded Systems: Root Filesystem. Name: Mariusz Naumowicz Date: 27 August 2018 Plan 1. Root Filesystem: Useful System; Filesystem Hierarchy Standard (FHS); Staging directory 2. Programs: The init program; Shell; Utilities; BusyBox; ToyBox; Libraries; Reducing size by stripping; Device nodes; The proc and sysfs filesystems; Mounting filesystems; Additional reading; Standalone ramdisk; Minimizing size; Booting with QEMU; Additional reading. Useful System * init: The program that starts everything off, usually by running a series of scripts. * shell: Needed to give you a command prompt but, more importantly, to run the shell scripts called by init and other programs. * daemons: Various server programs, started by init. * libraries: Usually, the programs mentioned so far are linked with shared libraries which must be present in the root filesystem. * Configuration files: The configuration for init and other daemons is stored in a series of ASCII text files, usually in the /etc directory. * Device nodes: The special files that give access to various device drivers. * /proc and /sys: Two pseudo filesystems that represent kernel data structures as a hierarchy of directories and files. Many programs and library functions read these files. * kernel modules: If you have configured some parts of your kernel to be modules, they will be here, usually in /lib/modules/[kernel version]. Filesystem Hierarchy Standard (FHS) * /bin: programs essential for all -

Yocto-Slides.Pdf

Yocto Project and OpenEmbedded Training © Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license. Latest update: October 6, 2021. Document updates and sources: https://bootlin.com/doc/training/yocto Corrections, suggestions, contributions and translations are welcome! Send them to [email protected] Embedded Linux and kernel engineering - Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com
Rights to copy: © Copyright 2004-2021, Bootlin. License: Creative Commons Attribution - Share Alike 3.0 https://creativecommons.org/licenses/by-sa/3.0/legalcode You are free: * to copy, distribute, display, and perform the work * to make derivative works * to make commercial use of the work. Under the following conditions: * Attribution. You must give the original author credit. * Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one. * For any reuse or distribution, you must make clear to others the license terms of this work. * Any of these conditions can be waived if you get permission from the copyright holder. Your fair use and other rights are in no way affected by the above. Document sources: https://github.com/bootlin/training-materials/
Hyperlinks in the document: There are many hyperlinks in the document * Regular hyperlinks: https://kernel.org/ * Kernel documentation links: dev-tools/kasan * Links to kernel source files and directories: drivers/input/ include/linux/fb.h * Links to the declarations, definitions and instances of kernel symbols (functions, types, data, structures): platform_get_irq() GFP_KERNEL struct file_operations
Company at a glance * Engineering company created in 2004, named "Free Electrons" until Feb. -

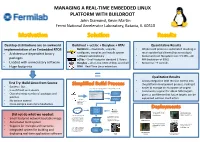

MANAGING a REAL-TIME EMBEDDED LINUX PLATFORM with BUILDROOT John Diamond, Kevin Martin Fermi National Accelerator Laboratory, Batavia, IL 60510

MANAGING A REAL-TIME EMBEDDED LINUX PLATFORM WITH BUILDROOT John Diamond, Kevin Martin Fermi National Accelerator Laboratory, Batavia, IL 60510
Desktop distributions are an awkward implementation of an Embedded RTOS: * Architecture-dependent binary packages * Loaded with unnecessary software * Huge footprints. Did not do what we needed: * Small-footprint network bootable image * Automated build system * Support for multiple architectures.
First Try: Build Linux from Source: * Success! But.. * Is as difficult as it sounds * Overwhelming number of packages and patches * No version control * Cross-compile even more headaches.
Buildroot + uClibc + Busybox + RTAI: * Buildroot: downloads, unpacks, configures, compiles and installs system software automatically * uClibc: Small-footprint standard C library * Busybox: all-in-one UNIX utilities and shell * RTAI: Real-Time Linux extensions.
Build Process: 1. Developer configures build via Buildroot's menuconfig. 2. Buildroot's menuconfig generates a package configuration file and kernel configuration file. 3. The build process pulls software packages from the internet and custom software packages from a source code repository (Git / CVS / SVN). 4. The output from the build process is a kernel bzImage file with an integrated root filesystem file.
Quantitative Results: * Whole build process is automated resulting in much quicker build times (hours not days) * Kernel and root filesystem size: 3.5 MB – 20 MB (reduction of 99%) * Boot-time: ~9 seconds.
Qualitative Results: * Allows integration with revision control into the platform development process, making it easier to manage an ecosystem of targets * Community support for x86 & ARM targets gives us confidence that future targets can be supported without much effort.
[Figure labels: Power Supply Control and Regulation for the Fermilab Linac; Quench Protection System for Tevatron Electron Lens (TEL II); ARM Cortex A-9; PC/104 AMD Geode SBC; Beam Position Monitor prototype for Fermilab; Power Supply Control and Regulation for] 5. -

Cross-Compiler Bipartite Vulnerability Search

electronics Article Cross-Compiler Bipartite Vulnerability Search Paul Black * and Iqbal Gondal Internet Commerce Security Laboratory (ICSL), Federation University, Ballarat 3353, Australia; [email protected] * Correspondence: [email protected] Citation: Black, P.; Gondal, I. Cross-Compiler Bipartite Vulnerability Search. Electronics 2021, 10, 1356. https://doi.org/10.3390/electronics10111356 Abstract: Open-source libraries are widely used in software development, and the functions from these libraries may contain security vulnerabilities that can provide gateways for attackers. This paper provides a function similarity technique to identify vulnerable functions in compiled programs and proposes a new technique called Cross-Compiler Bipartite Vulnerability Search (CCBVS). CCBVS uses a novel training process, and bipartite matching to filter SVM model false positives to improve the quality of similar function identification. This research uses debug symbols in programs compiled from open-source software products to generate the ground truth. This automatic extraction of ground truth allows experimentation with a wide range of programs. The results presented in the paper show that an SVM model trained on a wide variety of programs compiled for Windows and Linux, x86 and Intel 64 architectures can be used to predict function similarity and that the use of bipartite matching substantially improves the function similarity matching performance. Keywords: malware similarity; function similarity; binary similarity; machine-learning; bipartite matching 1. Introduction Function similarity techniques are used in the following activities: the triage of malware [1], analysis of program patches [2], identification of library functions [3], analysis of code authorship [4], the identification of similar function pairs to reduce manual analysis workload [5], plagiarism analysis [6], and for vulnerable function identification [7–9]. -

No Starch Press, Inc

Table of Contents
The Book of VMware: The Complete Guide to VMware Workstation
Chapter 1: Introduction
  Overview
  1.1 Who Should Read This Book
  1.2 Terms and Conventions
  1.3 Book Layout
  1.4 VMware Applications
    1.4.1 Quality Assurance (QA)
    1.4.2 Network Programming and Testing
    1.4.3 Operating System Development, Research, and Education
    1.4.4 Other VMware Products
Chapter 2: The VMware Virtual Machine -

Operating System Components for an Embedded Linux System

INSTITUTE FOR REAL-TIME COMPUTER SYSTEMS, TECHNISCHE UNIVERSITÄT MÜNCHEN, PROFESSOR G. FÄRBER Operating System Components for an Embedded Linux System Martin Hintermann Studienarbeit Operating System Components for an Embedded Linux System Studienarbeit Executed at the Institute for Real-Time Computer Systems Technische Universität München Prof. Dr.-Ing. Georg Färber Advisor: Prof. Dr. rer. nat. habil. Thomas Bräunl Author: Martin Hintermann Kirchberg 34 82069 Hohenschäftlarn Submitted in February 2007 Acknowledgements At first, I would like to thank my supervisor Prof. Dr. Thomas Bräunl for giving me the opportunity to take part in a really interesting project. Many thanks to Thomas Sommer, my project partner, for his contribution to our good work. I also want to thank Bernard Blackham for his assistance by email and phone at any time. In my opinion, it was a great cooperation of all persons taking part in this project. Abstract Embedded systems can be found in more and more devices. Linux as a free operating system is also becoming more and more important in embedded applications. Linux even replaces other operating systems in certain areas (e.g. mobile phones). This thesis deals with the employment of Linux in embedded systems. Various architectures of embedded systems are introduced and the characteristics of common operating systems for these devices are reviewed. The architecture of Linux is examined by looking at the particular components such as kernel, standard C libraries and POSIX tools for embedded systems. Furthermore, there is a survey of real-time extensions for the Linux kernel. The thesis also treats software development for embedded Linux ranging from the prerequisites for compiling software to the debugging of binaries. -

Run Ds Homebrew Through Ds Download Play Pc How to Homebrew a Nintendo 3DS/2DS

How to Homebrew a Nintendo 3DS/2DS. Homebrewing is the process of using various exploits to allow your device to run custom software; this can include games, emulators, and various tools. There are a wide variety of ways to homebrew your 3DS, but for this Instructable we will be using Browserhax, an exploit using the Nintendo 3DS's internet browser. This is a fairly simple and quick process that just about anyone can accomplish, and it works on all versions of the 3DS, including the 2DS. Step 1: Determine If Your 3DS Is Capable of Being Homebrewed. The firmware is the operating system that the 3DS runs on. Firmware is updated as time goes on just like any major product, with higher numbers being newer versions. The firmware version of your 3DS can easily be determined by selecting the System Settings icon and checking the bottom-right corner of the top screen. Currently, in order to use Browserhax, your firmware must be lower than 10.3.0-28. However, starting with version 9.9, the firmware will check if the system is up to date and, if not, refuse to allow you to use the browser. If you see the nag message you will not be able to use Browserhax, but it is possible to circumvent the nag message by performing the following steps: 1. Go to System Settings and change the date to January 1, 2000, 00:00. 2. Use the browser 'Initialize save data' option before any page gets loaded, which would trigger the browser version message. (Note that you can't press the HOME button to return from the browser, otherwise you will have to re-initialize the save data again. -

Buildroot Training Buildroot Training

Buildroot Training © Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license. Latest update: October 6, 2021. Document updates and sources: https://bootlin.com/doc/training/buildroot Corrections, suggestions, contributions and translations are welcome! Send them to [email protected] Embedded Linux and kernel engineering - Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com
Rights to copy: © Copyright 2004-2021, Bootlin. License: Creative Commons Attribution - Share Alike 3.0 https://creativecommons.org/licenses/by-sa/3.0/legalcode You are free: * to copy, distribute, display, and perform the work * to make derivative works * to make commercial use of the work. Under the following conditions: * Attribution. You must give the original author credit. * Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one. * For any reuse or distribution, you must make clear to others the license terms of this work. * Any of these conditions can be waived if you get permission from the copyright holder. Your fair use and other rights are in no way affected by the above. Document sources: https://github.com/bootlin/training-materials/
Hyperlinks in the document: There are many hyperlinks in the document * Regular hyperlinks: https://kernel.org/ * Kernel documentation links: dev-tools/kasan * Links to kernel source files and directories: drivers/input/ include/linux/fb.h * Links to the declarations, definitions and instances of kernel symbols (functions, types, data, structures): platform_get_irq() GFP_KERNEL struct file_operations
Company at a glance * Engineering company created in 2004, named "Free Electrons" until Feb. -

0137017839.Pdf

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The author and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The publisher offers excellent discounts on this book when ordered in quantity for bulk purchases or special sales, which may include electronic versions and/or custom covers and content particular to your business, training goals, marketing focus, and branding interests. For more information, please contact: U.S. Corporate and Government Sales (800) 382-3419 [email protected] For sales outside the United States, please contact: International Sales [email protected] Visit us on the Web: informit.com/aw Library of Congress Cataloging-in-Publication Data: Hallinan, Christopher. Embedded Linux primer : a practical real-world approach / Christopher Hallinan. p. cm. ISBN 978-0-13-701783-6 (hardback : alk. paper) 1. Linux. 2. Operating systems (Computers) 3. Embedded computer systems--Programming. I. Title. QA76.76.O63H34462 2011 005.4’32--dc22 2010032891 Copyright © 2011 Pearson Education, Inc. All rights reserved. Printed in the United States of America. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction, storage in a retrieval system, or transmission in any form or by any means, electronic, mechanical, photocopying, recording, or likewise. -

Embedded Linux Size Reduction Techniques

Embedded Linux Conference 2017 Embedded Linux size reduction techniques Michael Opdenacker free electrons [email protected] free electrons - Embedded Linux, kernel, drivers - Development, consulting, training and support. http://free-electrons.com
Michael Opdenacker * Founder and Embedded Linux engineer at free electrons * Embedded Linux expertise * Development, consulting and training * Strong open-source focus * Long time interest in embedded Linux boot time, and one of its prerequisites: small system size. * From Orange, France. Penguin from Justin Ternet (https://openclipart.org/detail/182875/pinguin)
Why reduce size? There are multiple reasons for having a small kernel and system * Run on very small systems (IoT) * Run Linux as a bootloader * Boot faster (for example on FPGAs) * Reduce power consumption: it is even conceivable to run the whole system in CPU internal RAM or cache (DRAM is power hungry and needs refreshing) * Security: reduce the attack surface * Cloud workloads: optimize instances for size and boot time. * Spare as much RAM as possible for applications and maximizing performance. See https://tiny.wiki.kernel.org/use_cases
Reasons for this talk * No talk about size since ELCE 2015 * Some projects stalled (Linux tinification, LLVM Linux...) * Opportunity to have a look at solutions I didn’t try: musl library, Toybox, gcc LTO, new gcc versions, compiling with Clang.. -

Checkpoints of GUI-Based Applications Victor C

Checkpoints of GUI-based Applications Victor C. Zandy and Barton P. Miller Computer Sciences Department University of Wisconsin – Madison, USA {zandy,bart}@cs.wisc.edu
Abstract We describe a new system, called guievict, that enables the graphical user interface (GUI) of any application to be transparently migrated to or replicated on another display without premeditative steps such as re-linking the application program binary or re-directing the application process’s window system communication through a proxy. Guievict is based on a small X window server extension that enables an application to retrieve its window session, a transportable representation of its GUI, from the window server and a library of GUI migration functionality that is injected in the application process at run time. We discuss the underlying technical issues: controlling and synchronizing the communication between the application and the window system, identifying and retrieving the GUI resources that form the window session, regenerating the window session in a new window system, and maintaining application transparency.
tion’s GUI might migrate as the application itself migrates, or independently of the application. Both types of migration require the ability to capture the application’s window session, a transportable representation of its GUI. Window sessions are difficult to capture because not all of the state they represent is resident in the application. Portions of an application’s GUI state reside in the window server, a separate system that handles the display of all GUI-based applications on the same host. Most window servers do not provide a way to extract the state of an individual application’s resources. Guievict has been implemented for the X window system [19] and has the following characteristics: ❏ Migration occurs at application granularity; users can select and move the GUI of individual applica-