CHAPTER 2 Data Representation in Computer Systems

Recommended publications

INTERSKILL MAINFRAME QUARTERLY December 2011

INTERSKILL MAINFRAME QUARTERLY December 2011. Inside This Issue: Retaining Data Center Skills and Knowledge 1; Interskill Releases - December 2011 2; Vendor Briefs 3; Taking Care of Storage 4; Learning Spotlight – Managing Projects 5; Tech-Head Knowledge Test – Utilizing ISPF 5; OPINION: The Case for a Fresh Technical Opinion 6; TECHNICAL: Lost in Translation Part 1 - EBCDIC Code Pages 7; MAINFRAME – Weird and Unusual! 10. Retaining Data Center Skills and Knowledge, by Greg Hamlyn. This is the final chapter of this four-part series that briefly explains the data center skills crisis and the pros and cons of implementing a coaching or mentoring program. In this installment we will look at some of the steps to implementing a program such as this into your data center. If you missed these earlier installments, click the links below. Part 1 – The Data Center Skills Crisis. Part 2 – How Can I Prevent Skills Loss in My Data Center? Part 3 – Barriers to Implementing a Coaching or Mentoring Program. Part Four – Implementing a Successful Coaching or Mentoring Program. The success of any project comes down to its planning. If you already believe that your data center can benefit from skills and knowledge transfer and that coaching and mentoring will assist with this, then outlining a solid ... should consider is the GROW model - Determine whether an external consultant should be used (include pros and cons) - Create a basic timeline of the project - Identify how you will measure the effectiveness of the project - Provide some basic steps describing the coaching and mentoring activities - Next phase if the pilot program is deemed successful (i.e. -

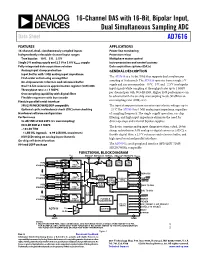

16-Channel DAS with 16-Bit, Bipolar Input, Dual Simultaneous Sampling

16-Channel DAS with 16-Bit, Bipolar Input, Dual Simultaneous Sampling ADC Data Sheet AD7616 FEATURES APPLICATIONS 16-channel, dual, simultaneously sampled inputs Power line monitoring Independently selectable channel input ranges Protective relays True bipolar: ±10 V, ±5 V, ±2.5 V Multiphase motor control Single 5 V analog supply and 2.3 V to 3.6 V VDRIVE supply Instrumentation and control systems Fully integrated data acquisition solution Data acquisition systems (DASs) Analog input clamp protection GENERAL DESCRIPTION Input buffer with 1 MΩ analog input impedance First-order antialiasing analog filter The AD7616 is a 16-bit, DAS that supports dual simultaneous On-chip accurate reference and reference buffer sampling of 16 channels. The AD7616 operates from a single 5 V Dual 16-bit successive approximation register (SAR) ADC supply and can accommodate ±10 V, ±5 V, and ±2.5 V true bipolar Throughput rate: 2 × 1 MSPS input signals while sampling at throughput rates up to 1 MSPS Oversampling capability with digital filter per channel pair with 90.5 dB SNR. Higher SNR performance can Flexible sequencer with burst mode be achieved with the on-chip oversampling mode (92 dB for an Flexible parallel/serial interface oversampling ratio (OSR) of 2). SPI/QSPI/MICROWIRE/DSP compatible The input clamp protection circuitry can tolerate voltages up to Optional cyclic redundancy check (CRC) error checking ±21 V. The AD7616 has 1 MΩ analog input impedance, regardless Hardware/software configuration of sampling frequency. The single-supply operation, on-chip Performance filtering, and high input impedance eliminate the need for 92 dB SNR at 500 kSPS (2× oversampling) driver op amps and external bipolar supplies. -

ISO Basic Latin Alphabet

ISO basic Latin alphabet The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2] ISO basic Latin alphabet Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z Contents History Terminology Name for Unicode block that contains all letters Names for the two subsets Names for the letters Timeline for encoding standards Timeline for widely used computer codes supporting the alphabet Representation Usage Alphabets containing the same set of letters Column numbering See also References History By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1] Terminology Name for Unicode block that contains all letters The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin". -

Unicode and Code Page Support

Natural for Mainframes Unicode and Code Page Support Version 4.2.6 for Mainframes October 2009 This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners. Table of Contents: 1 Unicode and Code Page Support (p. 1); 2 Introduction (p. 3); About Code Pages and Unicode (p. 4); About Unicode and Code Page Support in Natural (p. 5); ICU on Mainframe Platforms (p. 6); 3 Unicode and Code Page Support in the Natural Programming Language (p. 7); Natural Data Format U for Unicode-Based Data (p. 8); Statements (p. 9); Logical -

The CLASP Application Security Process

The CLASP Application Security Process Secure Software, Inc. Copyright (c) 2005, Secure Software, Inc. The CLASP Application Security Process The CLASP Application Security Process TABLE OF CONTENTS CHAPTER 1 Introduction 1 CLASP Status 4 An Activity-Centric Approach 4 The CLASP Implementation Guide 5 The Root-Cause Database 6 Supporting Material 7 CHAPTER 2 Implementation Guide 9 The CLASP Activities 11 Institute security awareness program 11 Monitor security metrics 12 Specify operational environment 13 Identify global security policy 14 Identify resources and trust boundaries 15 Identify user roles and resource capabilities 16 Document security-relevant requirements 17 Detail misuse cases 18 Identify attack surface 19 Apply security principles to design 20 Research and assess security posture of technology solutions 21 Annotate class designs with security properties 22 Specify database security configuration 23 Perform security analysis of system requirements and design (threat modeling) 24 Integrate security analysis into source management process 25 Implement interface contracts 26 Implement and elaborate resource policies and security technologies 27 Address reported security issues 28 Perform source-level security review 29 Identify, implement and perform security tests 30 The CLASP Application Security Process i Verify security attributes of resources 31 Perform code signing 32 Build operational security guide 33 Manage security issue disclosure process 34 Developing a Process Engineering Plan 35 Business objectives 35 Process -

Iso/Iec 8632-4:1999(E)

INTERNATIONAL STANDARD ISO/IEC 8632-4 Second edition 1999-12-01 Information technology — Computer graphics — Metafile for the storage and transfer of picture description information — Part 4: Clear text encoding Technologies de l'information — Infographie — Métafichier de stockage et de transfert des informations de description d'images — Partie 4: Codage en clair des textes Reference number ISO/IEC 8632-4:1999(E) © ISO/IEC 1999 PDF disclaimer This PDF file may contain embedded typefaces. In accordance with Adobe's licensing policy, this file may be printed or viewed but shall not be edited unless the typefaces which are embedded are licensed to and installed on the computer performing the editing. In downloading this file, parties accept therein the responsibility of not infringing Adobe's licensing policy. The ISO Central Secretariat accepts no liability in this area. Adobe is a trademark of Adobe Systems Incorporated. Details of the software products used to create this PDF file can be found in the General Info relative to the file; the PDF-creation parameters were optimized for printing. Every care has been taken to ensure that the file is suitable for use by ISO member bodies. In the unlikely event that a problem relating to it is found, please inform the Central Secretariat at the address given below. © ISO/IEC 1999 All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body in the country of the requester. -

SAS 9.3 UTF-8 Encoding Support and Related Issue Troubleshooting

SAS 9.3 UTF-8 Encoding Support and Related Issue Troubleshooting Jason (Jianduan) Liang SAS certified: Platform Administrator, Advanced Programmer for SAS 9 Agenda Introduction UTF-8 and other encodings SAS options for encoding and configuration Other Considerations for UTF-8 data Encoding issues troubleshooting techniques (tips) Introduction What is UTF-8? . A character encoding capable of encoding all possible characters Why UTF-8? . Dominant encoding of the www (86.5%) SAS system options for encoding . Encoding – instructs SAS how to read, process and store data . Locale - instructs SAS how to present or display currency, date and time, set timezone values UTF-8 and other Encodings ASCII (American Standard Code for Information Interchange) . 7-bit . 128 - character set . Examples (code point-char-hex): 32-Space-20; 63-?-3F; 64-@-40; 65-A-41 UTF-8 and other Encodings ISO 8859-1 (Latin-1) for Western European languages Windows-1252 (Latin-1) for Western European languages . 8-bit (1 byte, 256 character set) . Identical to ASCII for the first 128 chars . Extended ASCII chars examples: . 163-£-A3; 169-©-A9 . SAS option encoding value: wlatin1 (latin1) UTF-8 and other Encodings Problems . Only covers English and Western Europe languages, ISO-8859-2, …15 . Multiple encodings are required to support national languages . Same character encoded differently, same code point represents different chars Unicode . Unicode – assigns a unique code/number to every possible character of all languages . Examples of Unicode code points: o U+0020 – Space U+0041 – A o U+00A9 - © U+00FF - ÿ (UTF-8 bytes C3 BF) UTF-8 and other Encodings UTF-8 . -
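The code point, single-byte, and UTF-8 values quoted in this excerpt can be checked with a short script. This is a minimal Python sketch, not part of the SAS material; the helper name `describe` is hypothetical, and Python's `latin-1` codec stands in for the ISO 8859-1 encoding the slides mention.

```python
def describe(ch: str) -> dict:
    """Return the code point and byte encodings of a single character."""
    info = {
        "char": ch,
        "code_point": ord(ch),                # decimal code point
        "unicode": f"U+{ord(ch):04X}",        # conventional U+XXXX notation
        "utf8": ch.encode("utf-8").hex(" "),  # UTF-8 bytes as hex
    }
    try:
        # ISO 8859-1 can only represent code points 0 to 255.
        info["latin1"] = ch.encode("latin-1").hex()
    except UnicodeEncodeError:
        info["latin1"] = None
    return info


if __name__ == "__main__":
    for ch in " ?@A£©ÿ":
        print(describe(ch))
```

For characters in the 7-bit ASCII range the Latin-1 byte and the UTF-8 byte are the same; above 127 the Latin-1 byte equals the code point, while UTF-8 needs two bytes.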

Database Globalization Support Guide

Oracle® Database Database Globalization Support Guide 19c E96349-05 May 2021 Oracle Database Database Globalization Support Guide, 19c E96349-05 Copyright © 2007, 2021, Oracle and/or its affiliates. Primary Author: Rajesh Bhatiya Contributors: Dan Chiba, Winson Chu, Claire Ho, Gary Hua, Simon Law, Geoff Lee, Peter Linsley, Qianrong Ma, Keni Matsuda, Meghna Mehta, Valarie Moore, Cathy Shea, Shige Takeda, Linus Tanaka, Makoto Tozawa, Barry Trute, Ying Wu, Peter Wallack, Chao Wang, Huaqing Wang, Sergiusz Wolicki, Simon Wong, Michael Yau, Jianping Yang, Qin Yu, Tim Yu, Weiran Zhang, Yan Zhu This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. -

Basis Technology Unicode-Compatible Library Spec Sheet Character Encoding Other Names Adobe-Standard-Encoding A

Basis Technology Unicode-Compatible Library Spec Sheet Character Encoding Other Names Adobe-Standard-Encoding Adobe-Symbol-Encoding csHPPSMath Adobe-Zapf-Dingbats-Encoding csZapfDingbats Arabic ISO-8859-6, csISOLatinArabic, iso-ir-127, ECMA-114, ASMO-708 ASCII US-ASCII, ANSI_X3.4-1968, iso-ir-6, ANSI_X3.4-1986, ISO646-US, us, IBM367, csASCI big-endian ISO-10646-UCS-2, BigEndian, 68k, PowerPC, Mac, Macintosh Big5 csBig5, cn-big5, x-x-big5 Big5Plus Big5+, csBig5Plus BMP ISO-10646-UCS-2, BMPstring CCSID-1027 csCCSID1027, IBM1027 CCSID-1047 csCCSID1047, IBM1047 CCSID-290 csCCSID290, CCSID290, IBM290 CCSID-300 csCCSID300, CCSID300, IBM300 CCSID-930 csCCSID930, CCSID930, IBM930 CCSID-935 csCCSID935, CCSID935, IBM935 CCSID-937 csCCSID937, CCSID937, IBM937 CCSID-939 csCCSID939, CCSID939, IBM939 CCSID-942 csCCSID942, CCSID942, IBM942 ChineseAutoDetect csChineseAutoDetect: Candidate encodings: GB2312, Big5, GB18030, UTF32:UTF8, UCS2, UTF32 EUC-H, csCNS11643EUC, EUC-TW, TW-EUC, H-EUC, CNS-11643-1992, EUC-H-1992, csCNS11643-1992-EUC, EUC-TW-1992, CNS-11643 TW-EUC-1992, H-EUC-1992 CNS-11643-1986 EUC-H-1986, csCNS11643_1986_EUC, EUC-TW-1986, TW-EUC-1986, H-EUC-1986 CP10000 csCP10000, windows-10000 CP10001 csCP10001, windows-10001 CP10002 csCP10002, windows-10002 CP10003 csCP10003, windows-10003 CP10004 csCP10004, windows-10004 CP10005 csCP10005, windows-10005 CP10006 csCP10006, windows-10006 CP10007 csCP10007, windows-10007 CP10008 csCP10008, windows-10008 CP10010 csCP10010, windows-10010 CP10017 csCP10017, windows-10017 CP10029 csCP10029, windows-10029 CP10079 csCP10079, windows-10079 -

IS 13737 (1993): Isoinformation Technology

इंटरनेट मानक Disclosure to Promote the Right To Information Whereas the Parliament of India has set out to provide a practical regime of right to information for citizens to secure access to information under the control of public authorities, in order to promote transparency and accountability in the working of every public authority, and whereas the attached publication of the Bureau of Indian Standards is of particular interest to the public, particularly disadvantaged communities and those engaged in the pursuit of education and knowledge, the attached public safety standard is made available to promote the timely dissemination of this information in an accurate manner to the public. “जान का अधकार, जी का अधकार” “परा को छोड न 5 तरफ” Mazdoor Kisan Shakti Sangathan Jawaharlal Nehru “The Right to Information, The Right to Live” “Step Out From the Old to the New” IS 13737 (1993): ISOInformation Technology - 130 mm Rewritable optical disk cartridges for information interchange [LITD 16: Computer Hardware, Peripherals and Identification Cards] “ान $ एक न भारत का नमण” Satyanarayan Gangaram Pitroda “Invent a New India Using Knowledge” “ान एक ऐसा खजाना > जो कभी चराया नह जा सकताह ै”ै Bhartṛhari—Nītiśatakam “Knowledge is such a treasure which cannot be stolen” IS 13737 : 1993 ISO/IEC 10089 : 1991 CONTENTS Page NationalForeword..........,..........................................‘.““““’ . (vii) 1 Scope 1 2 Conformance 1 3 Normative references 1 4 Conventions and notations 1 5 List of acronyms 2 6 Definitions 2 2 6. I case 2 6.2 Clamping Zone 6.3 -

1. Background This Paper Is About a Method for Recovering Data from Floppy Disks That Are Failing Due to “Weak Bits”

A Method for Recovering Data From Failing Floppy Disks with a Practical Example Dr. Frederick B. Cohen, Ph.D. and Charles M. Preston Fred Cohen & Associates and California Sciences Institute Abstract: As floppy disks and other similar media age, they have a tendency to lose data because of the reduction in retention of electromagnetic fields over time associated with various environmental degradation factors. In attempting to read these disks, the coding techniques used to write them can be exploited along with the proposed fault mechanisms to demonstrate that repetitions of read attempts under proper conditions yield valid data subject to specific error modes. In many cases those errors can be partially or completely reversed by analysis of read results. A practical example involving a case of substantial legal value is used as an example of the application of these methods. Keywords: weak bits, floppy disks, digital forensics, coding, field density loss, the birthday problem, error inversion 1. Background This paper is about a method for recovering data from floppy disks that are failing due to “weak bits”. It describes a repetitive read technique that has successfully recovered data from failing floppy disks in forensic cases and describes analysis of the results of such repetitive reads in terms of yielding forensically sound data values. These techniques are not new or particularly unique; however, they are not widely published and analysis necessary to support their use in legal matters has not been found elsewhere. The particular matter used as an example in this analysis involved a floppy disk that was more than 15 years old and that contained the only copy of a binary assembled version of a software program that was subject to intellectual property claims of sufficient value to warrant recovery beyond the means normally used by commercial recovery firms. -

JS Character Encodings

JS Character Encodings Anna Henningsen · @addaleax · she/her 1 It’s good to be back! 2 ??? https://travis-ci.org/node-ffi-napi/get-symbol-from-current-process-h/jobs/641550176 3 So … what’s a character encoding? People are good with text, computers are good with numbers Text List of characters “Encoding” List of bytes List of integers 4 So … what’s a character encoding? People are good with text, computers are good with numbers Hello ['H','e','l','l','o'] 48 65 6c 6c 6f [72, 101, 108, 108, 111] 5 So … what’s a character encoding? People are good with text, computers are good with numbers 你好! [‘你’,’好’] ??? ??? 6 ASCII 0 0x00 <NUL> … … … 65 0x41 A 66 0x42 B 67 0x43 C … … … 97 0x61 a 98 0x62 b … … … 127 0x7F <DEL> 7 ASCII ● 7-bit ● Covers most English-language use cases ● … and that’s pretty much it 8 ISO-8859-*, Windows code pages ● Idea: Usually, transmission has 8 bit per byte available, so create ASCII-extending charsets for more languages ISO-8859-1 (Western) ISO-8859-5 (Cyrillic) Windows-1251 (Cyrillic) (aka Latin-1) … … … … 0xD0 Ð а Р 0xD1 Ñ б С 0xD2 Ò в Т … … … … 9 GBK ● Idea: Also extend ASCII, but use 2-byte for Chinese characters … … 0x41 A 0x42 B … … 0xC4 0xE3 你 0xC4 0xE4 匿 … … 10 https://xkcd.com/927/ 11 Unicode: Multiple encodings! 4d c3 bc 6c 6c (UTF-8) U+004D M “Müll” U+00FC ü 4d 00 fc 00 6c 00 6c 00 (UTF-16LE) U+006C l U+006C l 00 4d 00 fc 00 6c 00 6c (UTF-16BE) 12 Unicode ● New idea: Don’t create a gazillion charsets, and drop 1-byte/2-byte restriction ● Shared character set for multiple encodings: U+XXXX with 4 hex digits, e.g.
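The byte sequences listed in this excerpt can be reproduced with a few lines of code. The sketch below uses Python rather than the JavaScript of the original talk, and the helper names `hex_bytes` and `decode_byte` are hypothetical; it relies only on the standard codecs `ascii`, `gbk`, `utf-8`, `utf-16-le`, `utf-16-be`, `latin-1`, `iso8859_5`, and `cp1251`.

```python
def hex_bytes(text: str, encoding: str) -> str:
    """Encode text and return its bytes as space-separated hex pairs."""
    return text.encode(encoding).hex(" ")


def decode_byte(value: int, encoding: str) -> str:
    """Interpret one byte value under a single-byte character set."""
    return bytes([value]).decode(encoding)


if __name__ == "__main__":
    # One string, several encodings: the bytes differ, the text does not.
    print(hex_bytes("Hello", "ascii"))
    print(hex_bytes("你", "gbk"))
    for enc in ("utf-8", "utf-16-le", "utf-16-be"):
        print(enc, hex_bytes("Müll", enc))

    # One byte, several charsets: the same value 0xD0 maps to different letters.
    for enc in ("latin-1", "iso8859_5", "cp1251"):
        print(enc, decode_byte(0xD0, enc))
```

The second loop illustrates why a byte stream is ambiguous without knowing its charset, which is the problem the shared Unicode character set is meant to address.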