Facebook Vs. Hate

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Little Nazis

September 11, 2017 | Volume LXIX, No. 17 | $5.99 | www.nationalreview.com

The Little Nazis: Kevin D. Williamson on the childish alt-right. Plus Kyle Smith: The Great Confederate Panic; Michael Lind: The Case for Cultural Nationalism.

Contents

On the cover (page 22): Everybody is ten feet tall on the Internet, and that is why the Internet is where the alt-right really lives, one big online group-therapy session masquerading as a political movement. Kevin D. Williamson. Cover: Roman Genn.

Lucy Caldwell on Jeff Flake. The ‘N’ Word, p. 15.

Articles: 12 Divided They Stand (or Fall), by Ramesh Ponnuru. Anti-Trump Republicans are not facing their challenges.

Books, Arts & Manners: 35 The Death of Freud: E. Fuller Torrey reviews Freud: The Making of an Illusion, by Frederick Crews. 37 Illuminations: Michael Brendan Dougherty reviews Why Buddhism Is True: The Science and Philosophy of Meditation and Enlightenment, by Robert Wright. 38 Unjust Prosecution: David Bahnsen reviews The -

2017-2018 Wisconsin Blue Book: Election Results

ELECTION RESULTS

County vote for superintendent of public instruction, February 21, 2017 spring primary

| County | Tony Evers* | Lowell E. Holtz | John Humphries | Total |
|---|---|---|---|---|
| Adams | 585 | 264 | 95 | 948 |
| Ashland | 893 | 101 | 49 | 1,047 |
| Barron | 1,190 | 374 | 172 | 1,740 |
| Bayfield | 1,457 | 178 | 96 | 1,732 |
| Brown | 8,941 | 2,920 | 1,134 | 13,011 |
| Buffalo | 597 | 178 | 66 | 843 |
| Burnett | 393 | 165 | 66 | 625 |
| Calumet | 1,605 | 594 | 251 | 2,452 |
| Chippewa | 1,922 | 572 | 242 | 2,736 |
| Clark | 891 | 387 | 166 | 1,447 |
| Columbia | 2,688 | 680 | 299 | 3,670 |
| Crawford | 719 | 130 | 86 | 939 |
| Dane | 60,046 | 4,793 | 2,677 | 67,720 |
| Dodge | 2,407 | 1,606 | 306 | 4,325 |
| Door | 1,602 | 350 | 133 | 2,093 |
| Douglas | 2,089 | 766 | 809 | 3,701 |
| Dunn | 1,561 | 342 | 147 | 2,054 |
| Eau Claire | 5,437 | 912 | 412 | 6,783 |
| Florence | 97 | 52 | 18 | 167 |
| Fond du Lac | 3,151 | 1,726 | 495 | 5,388 |
| Forest | 241 | 92 | 41 | 375 |
| Grant | 2,056 | 329 | 240 | 2,634 |
| Green | 1,888 | 379 | 160 | 2,439 |
| Green Lake | 462 | 251 | 95 | 809 |
| Iowa | 1,989 | 311 | 189 | 2,498 |
| Iron | 344 | 106 | 43 | 494 |
| Jackson | 675 | 187 | 91 | 955 |
| Jefferson | 3,149 | 1,544 | 305 | 5,016 |
| Juneau | 794 | 287 | 110 | 1,195 |
| Kenosha | 4,443 | 1,757 | 526 | 6,780 |
| Kewaunee | 619 | 218 | 85 | 923 |
| La Crosse | 5,992 | 848 | 632 | 7,486 |
| Lafayette | 814 | 172 | 105 | 1,094 |
| Langlade | 515 | 201 | 103 | 820 |
| Lincoln | 843 | 280 | 117 | 1,245 |
| Manitowoc | 2,656 | 1,405 | 543 | 4,616 |

Marathon. -

Charlottesville: One Year Later

Charlottesville: One Year Later

Sections: 1 Introduction; 2 The Charlottesville Backlash; 3 Disunity and Division; 4 Unite the Right 2; 5 White Supremacy Continues to Surge

INTRODUCTION

On August 11, 2017, the world watched in horror as hundreds of torch-wielding white supremacists descended on the University of Virginia campus, chanting, “Jews will not replace us!” The next day, the streets of Charlottesville exploded in violence, ringing with the racist shouts of the neo-Nazis, Klan members and alt right agitators who gathered in an unprecedented show of unity. Their stated common cause: To protest the removal of a Confederate statue from a local park. Their true purpose: To show to the world the strength and defiance of the white supremacist movement. The promise of Unite the Right, organized primarily by alt right activist Jason Kessler, brought white supremacists of all stripes together for a weekend of protest that quickly turned to violence, culminating in the brutal murder of anti-racist counter-protester Heather Heyer. The white supremacist mayhem prevented the Saturday rally itself from actually occurring, as local and state police converged on the chaotic scene, urging everyone off the streets and away from the parks. Virginia Governor Terry McAuliffe declared a state of emergency and authorities shut down Unite the Right. Despite this, high profile white supremacists like Richard Spencer and David Duke declared Unite the Right an overall victory. -

Alt-Reality Leaves Its Mark on Presidential

Fall Dispatches > CHARLES J. SYKES

Alt-reality leaves its mark on presidential campaign

With the arrival of fall, an anxious electorate increasingly feels like the kids in the back seat asking their parents, “Are we there … yet?” Some of us are even old enough to remember when round-the-clock television commercials were the most annoying aspect of our endless political campaigns. That now seems a calmer, gentler time.

None of the above

The current mood was captured in a late August focus group held in Brookfield, Wisconsin. Reported The Washington Post: “For a small group of undecided voters here, the presidential choices this year are bleak: Hillary Clinton is a ‘liar’ with a lifetime of political skullduggery and a ruthless agenda for power, while Donald Trump is your ‘drunk uncle’ who can’t be trusted to listen even to the good advice he’s paying for. “Describing the election as a cesspool, 12 swing voters participat-

support is not a wise decision of his,” Palin continued. Palin soon was joined by such conservative luminaries as Ann Coulter and Michelle Malkin, who parachuted into Ryan’s district on behalf of his opponent, Paul Nehlen, who also enjoyed the full-throated support of the alt-reality conservative media. Foremost among Nehlen’s media cheerleaders was Breitbart.com, which headlined his momentum on a nearly daily basis. “Ann Coulter lights Wisconsin on fire for Paul Nehlen against Paul Ryan: ‘This is it, this is your last chance to save America,’ ” Breitbart headlined. On the day of the Aug. -

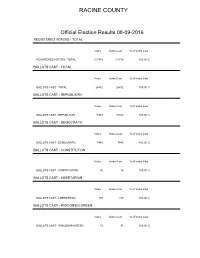

Final Summary Report 8-9-2016

RACINE COUNTY Official Election Results 08-09-2016

| Contest | Line | Votes | Votes Cast | % of Votes Cast |
|---|---|---|---|---|
| REGISTERED VOTERS - TOTAL | REGISTERED VOTERS - TOTAL | 113718 | 113718 | 100.00 % |
| BALLOTS CAST - TOTAL | BALLOTS CAST - TOTAL | 26472 | 26472 | 100.00 % |
| BALLOTS CAST - REPUBLICAN | BALLOTS CAST - REPUBLICAN | 18342 | 18342 | 100.00 % |
| BALLOTS CAST - DEMOCRATIC | BALLOTS CAST - DEMOCRATIC | 7845 | 7845 | 100.00 % |
| BALLOTS CAST - CONSTITUTION | BALLOTS CAST - CONSTITUTION | 16 | 16 | 100.00 % |
| BALLOTS CAST - LIBERTARIAN | BALLOTS CAST - LIBERTARIAN | 107 | 107 | 100.00 % |
| BALLOTS CAST - WISCONSIN GREEN | BALLOTS CAST - WISCONSIN GREEN | 31 | 31 | 100.00 % |
| RACINE COUNTY Party Preference | Republican | 16532 | 26470 | 62.46 % |
| RACINE COUNTY Party Preference | Democratic | 6295 | 26470 | 23.78 % |
| RACINE COUNTY Party Preference | Constitution | 14 | 26470 | 0.05 % |
| RACINE COUNTY Party Preference | Libertarian | 63 | 26470 | 0.24 % |
| RACINE COUNTY Party Preference | Wisconsin Green | 18 | 26470 | 0.07 % |
| RACINE COUNTY Party Preference | OVER VOTES | 7 | 26470 | 0.03 % |
| RACINE COUNTY Party Preference | UNDER VOTES | 3541 | 26470 | 13.38 % |
| United States Senator | Ron Johnson | 15284 | 18357 | 83.26 % |
| United States Senator | WRITE-IN | 0 | 18357 | 0.00 % |
| United States Senator | OVER VOTES | 0 | 18357 | 0.00 % |
| United States Senator | UNDER VOTES | 3073 | 18357 | 16.74 % |
| Representative in Congress, District 1 | Paul Ryan | 15214 | 18356 | 82.88 % |
| Representative in Congress, District 1 | Paul Nehlen | 2666 | 18356 | 14.52 % |
| Representative in Congress, District 1 | WRITE-IN | 0 | 18356 | 0.00 % |
| Representative in Congress, District 1 | OVER VOTES | 0 | 18356 | 0.00 % |
| Representative in Congress, District 1 | UNDER VOTES | 476 | 18356 | 2.59 % |
| State Senator, District 22 | WRITE-IN | 14 | 2779 | 0.50 % |
| State Senator, District 22 | OVER VOTES | 0 | 2779 | 0.00 % |

State Senator, District 22: UNDER VOTES 2765 -

Antisemitism

Antisemitism: A Persistent Threat to Human Rights. A Six-Month Review of Antisemitism’s Global Impact following the UN’s ‘Historic’ Report

ANNEX: Recent Antisemitic Incidents related to COVID-19, April 2020

North America

• Canada
  o On April 19, an online prayer service by the Shaarei Shomayim synagogue in Toronto was ‘Zoombombed’ by a number of individuals who yelled antisemitic insults at participants and used their screens to show pornography. Immigration Minister Marco Mendicino has condemned the incident, saying anti-Semitism, hatred and division have no place anywhere in Canada. A Toronto police spokesperson said the incident was being investigated as a possible hate crime. [1]
• United States
  o Colorado: On November 1, 2019, the FBI arrested a 27-year-old man with white supremacist beliefs who had expressed antisemitic hatred on Facebook for attempting to bomb a synagogue in Pueblo, Colorado. [2]
  o Massachusetts: On April 2, police discovered a homemade incendiary device at Ruth’s House, a Jewish assisted living residence in Longmeadow, Massachusetts. On April 15, police arrested John Michael Rathbun and charged him with two counts of attempted arson. It has been reported that a white supremacist organization operating on two unnamed social media platforms had specified Ruth’s House as one of two possible locations for committing a mass killing, with one user referring to it as “that jew nursing home in longmeadow massachusetts,” and that a calendar event potentially created by the same user listed April 3, 2020 as “jew killing day.” [3]
  o Missouri: Timothy Wilson, a white supremacist who was active on two neo-Nazi channels on Telegram and had very recently posted that the COVID-19 pandemic “was engineered by Jews as a power grab,” [4] was shot and killed on March 24 as FBI agents attempted to arrest him for plotting to blow up a hospital treating patients of the virus. -

Antisemitism in Today's America

Alvin H. Rosenfeld

Antisemitism in Today’s America

Leonard Dinnerstein’s Antisemitism in America, published in 1994, remains the most comprehensive and authoritative study of its subject to date. In his book’s final sentence, however, Dinnerstein steps out of his role as a reliable guide to the past and ventures a prediction about the future that has proven to be seriously wrong. Antisemitism, he concludes, “has declined in potency and will continue to do so for the foreseeable future.”¹ In the years since he formulated this optimistic view, antisemitism in America, far from declining, has been on the rise, as I will aim to demonstrate.

I begin with a personal anecdote. During a lecture visit to Boca Raton, Florida, in January 2017, I attended religious services at one of the city’s large synagogues and was surprised to see heavy security outside and inside the building. “What’s going on?” I asked a fellow worshipper. “Nothing special,” he replied, “having these guys here is just normal these days.” It didn’t strike me as normal, especially in America. From visits to synagogues in Europe, I am used to seeing security guards in place, mostly policemen but, in France, sometimes also soldiers. As targets of ongoing threats, Europe’s Jews need such protection and have come to rely on it. Why such need exists is clear: Europe has a long history of antisemitism, and, in recent years, it has become resurgent, in many cases violently so.

European Jews are doing, then, what they can and must do to defend themselves against the threats they face. Some, fearing still worse to come, have left their home countries for residence elsewhere; others are thinking about doing the same. Most remain, but apprehensively, and some have adopted ways to mute their Jewish identities to avert attention from themselves. -

The Proud Boys: A Republican Party Street Gang; In Search of New Frames: Q&A with the Authors of Producers, Parasites, Patriots; Editor’s Letter

SPRING 2019

The Public Eye

In this issue: The Intersectional Right: A Roundtable on Gender and White Supremacy; Aberration or Reflection? How to Understand Changes on the Political Right; The Proud Boys: A Republican Party Street Gang; In Search of New Frames: Q&A with the Authors of Producers, Parasites, Patriots

THE PUBLIC EYE QUARTERLY. Publisher: Tarso Luís Ramos. Editor: Kathryn Joyce. Cover art: Danbee Kim. Printing: Red Sun Press. The Public Eye is published by Political Research Associates. Tarso Luís Ramos, Executive Director. Frederick Clarkson, Senior Research Analyst.

editor’s letter

Last November, PRA worked with writer, professor, and longtime advocate Loretta Ross to convene a conversation about the relationship between gender and White supremacy. For decades, Ross says, too many fight-the-Right organizations neglected to pay attention to this perverse, right-wing version of intersectionality, although its impacts were numerous: evident in overlaps between White supremacist and anti-abortion violence; in family planning campaigns centered on myths of overpopulation; in concepts of White womanhood used to further repression and bigotry; and in how White women themselves formed the backbone of segregationist movements. By contrast, today there is a solid core of researchers and activists working on this issue. At November’s meeting, PRA spoke to a number of them (pg. 3) about their work, the current stakes, and the way forward.

Our second feature this issue, by Carolyn Gallaher, looks at another dynamic situation: how to understand changes on the political Right (pg. 9). Since Trump came to power, numerous conservative commentators (mostly “never Trumpers”) have predicted (or declared) the death of the Republican Party. -

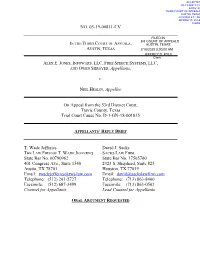

Appellants' Reply Brief

ACCEPTED 03-19-00811-CV 40902128 THIRD COURT OF APPEALS, AUSTIN, TEXAS 2/18/2020 4:11 AM JEFFREY D. KYLE, CLERK

FILED IN 3rd COURT OF APPEALS, AUSTIN, TEXAS 2/18/2020 8:00:00 AM JEFFREY D. KYLE, Clerk

NO. 03-19-00811-CV

IN THE THIRD COURT OF APPEALS, AUSTIN, TEXAS

ALEX E. JONES, INFOWARS, LLC, FREE SPEECH SYSTEMS, LLC, AND OWEN SHROYER, Appellants, v. NEIL HESLIN, Appellee

On Appeal from the 53rd District Court, Travis County, Texas. Trial Court Cause No. D-1-GN-18-001835

APPELLANTS’ REPLY BRIEF

T. Wade Jefferies, THE LAW FIRM OF T. WADE JEFFERIES, State Bar No. 00790962, 401 Congress Ave., Suite 1540, Austin, TX 78701. Email: [email protected] Telephone: (512) 201-2727. Facsimile: (512) 687-3499. Counsel for Appellants

David J. Sacks, SACKS LAW FIRM, State Bar No. 17505700, 2323 S. Shepherd, Suite 825, Houston, TX 77019. Email: [email protected] Telephone: (713) 863-8400. Facsimile: (713) 863-0502. Lead Counsel for Appellants

ORAL ARGUMENT REQUESTED

TABLE OF CONTENTS

TABLE OF CONTENTS
INDEX OF AUTHORITIES
INTRODUCTION
ARGUMENT
I. APPELLEE’S DEFAMATION CAUSE OF ACTION SHOULD BE DISMISSED UNDER THE TCPA. -

Investplus: State-Accredited Training School No. 1650 - Individual Exercises on PC - Course Materials and Documentation - Employment, Flexibility

Le Chiffre d’Affaires

Friday 3 - Saturday 4 May 2019 | No. 2585 | Price: 10 DA | The Algerian daily of economics and finance, founded 9 April 2009 | www.lechiffredaffaires.com

Brent loses 6% of its record value in a week: OPEC countries under strong American pressure. The price of oil will end the week with a further drop estimated at 2%, in reaction to the American plan, which provides not only for an increase... NEWS > PAGE 24

Government meeting: Draft executive decrees approved. At its meeting held on Thursday and chaired by Prime Minister Noureddine Bedoui, the government approved draft executive decrees and heard presentations on the finance and health sectors... NEWS > PAGE 24

Latest composition of DZAIRINDEX

| Stock | Open | Close | Change % | Free-float capitalization |
|---|---|---|---|---|
| ALLIANCE ASSURANCES | 421,00 | 4220,00 | 0,10 | 767 894 620,00 |
| BIOPHARM | 1140,00 | 1140,00 | 0,00 | 5 819 591 700,00 |
| EGH EL AURASSI | 530,60 | 520,08 | 9,00 | 679 356 160,00 |
| NCA-ROUIBA | 320,10 | 320,00 | -0,97 | 679 356 160,00 |
| SAIDAL | 669,00 | 660,00 | -6,25 | 1 320 000 000,00 |

Ranging from 1.5 to 6% starting in May: Retirement pensions increased. By Zahir R.

World Press Freedom Day: Some progress, but still lagging behind. Every year, World Press Freedom Day is an occasion to celebrate the fundamental principles of press freedom, to assess the... -

2020 Audit of Antisemitic Incidents All Incidents Have Been Corroborated

2020 Audit of Antisemitic Incidents

All incidents have been corroborated

The Jewish Community Relations Council’s 2020 Audit of Antisemitic Incidents indicates an overall increase in incidents and some troubling trends. The audit includes 99 reported and corroborated incidents, which reflects a 36% increase in incidents from 2019.

Geographical Considerations: Our reporting includes events that occur in the State of Wisconsin, are committed by persons residing in the State of Wisconsin, relate to Wisconsin institutions, relate to or specifically respond to Wisconsin persons, and/or would otherwise go unreported if not reported within our audit.

Trends

• Expression (82% increase)
• Conspiracy theories (90% increase)
• Hate group activity (20% increase)
• Pejorative references to Israel and Zionism in an antisemitic context (61% increase)
• College and University activity (75% increase)
• Social media activity (100% increase)
• Middle School activity (71% decrease)

Expression: There was a significant spike in expressions of antisemitism this year in comparison to years prior (18 in 2017, 21 in 2018, 34 in 2019, and 62 in 2020). Part of this spike may be attributed to COVID-19 pushing some hateful expression online instead of in person, further illustrated by the spike in social media incidents. Some examples:

• Bend the Arc: Jewish Action Milwaukee sent out texts about a post-election meeting; one response stated, “No Jews are the problems in this country they all in Hollywood they own all the banks they own all the media they own Jew York Do f*** off”.
• During a virtual high school history class, a student stated, "I hate Jews".
• A Wisconsin Mayor received an email after a local antisemitic incident: "Lol, the man was right, his neighbor is a dirty Jew and Jews are responsible for organizing violent protests all over the country. -

The Boogaloo Movement

The Boogaloo Movement

Key Points

• The boogaloo movement is an anti-government extremist movement that formed in 2019. In 2020, boogalooers increasingly engaged in real world activities as well as online activities, showing up at protests and rallies around gun rights, pandemic restrictions and police-related killings.
• The term “boogaloo” is a slang reference to a future civil war, a concept boogalooers anticipate and even embrace.
• The ideology of the boogaloo movement is still developing but is primarily anti-government, anti-authority and anti-police in nature.
• Most boogalooers are not white supremacists, though one can find white supremacists within the movement.
• The boogalooers’ anti-police beliefs prompted them to participate widely in the Black Lives Matter protests following the killing of George Floyd by Minneapolis police in May 2020.
• Boogalooers rely on memes and in-jokes, as well as gear and apparel, to create a sense of community and share their ideology.
• Boogalooers have been arrested for crimes up to and including murder and terrorist plots.

I. Origins: From Slang Term to Movement

The boogaloo movement is a developing anti-government extremist movement that arose in 2019 and features a loose anti-government and anti-police ideology. The participation of boogaloo adherents in 2020’s anti-lockdown and Black Lives Matter protests has focused significant attention on the movement, as have the criminal and violent acts committed by some of its adherents. Before there was a boogaloo movement, there was the concept of “the boogaloo” itself: a slang phrase used as a shorthand reference for a future civil war that became popular in various fringe circles in late 2018.