Time-Based Signal Processing and Shape in Alternative Rock Recordings

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Excesss Karaoke Master by Artist

XS Master by ARTIST Artist Song Title Artist Song Title (hed) Planet Earth Bartender TOOTIMETOOTIMETOOTIM ? & The Mysterians 96 Tears E 10 Years Beautiful UGH! Wasteland 1999 Man United Squad Lift It High (All About 10,000 Maniacs Candy Everybody Wants Belief) More Than This 2 Chainz Bigger Than You (feat. Drake & Quavo) [clean] Trouble Me I'm Different 100 Proof Aged In Soul Somebody's Been Sleeping I'm Different (explicit) 10cc Donna 2 Chainz & Chris Brown Countdown Dreadlock Holiday 2 Chainz & Kendrick Fuckin' Problems I'm Mandy Fly Me Lamar I'm Not In Love 2 Chainz & Pharrell Feds Watching (explicit) Rubber Bullets 2 Chainz feat Drake No Lie (explicit) Things We Do For Love, 2 Chainz feat Kanye West Birthday Song (explicit) The 2 Evisa Oh La La La Wall Street Shuffle 2 Live Crew Do Wah Diddy Diddy 112 Dance With Me Me So Horny It's Over Now We Want Some Pussy Peaches & Cream 2 Pac California Love U Already Know Changes 112 feat Mase Puff Daddy Only You & Notorious B.I.G. Dear Mama 12 Gauge Dunkie Butt I Get Around 12 Stones We Are One Thugz Mansion 1910 Fruitgum Co. Simon Says Until The End Of Time 1975, The Chocolate 2 Pistols & Ray J You Know Me City, The 2 Pistols & T-Pain & Tay She Got It Dizm Girls (clean) 2 Unlimited No Limits If You're Too Shy (Let Me Know) 20 Fingers Short Dick Man If You're Too Shy (Let Me 21 Savage & Offset &Metro Ghostface Killers Know) Boomin & Travis Scott It's Not Living (If It's Not 21st Century Girls 21st Century Girls With You 2am Club Too Fucked Up To Call It's Not Living (If It's Not 2AM Club Not -

Half Light Press

HALF LIGHT PRESS The Lost Album (2015) Three Imaginary Girls Earlier this summer, I haphazardly found myself observing Half Light during their CD release party that took place at The Comet Tavern in Seattle. At the time I didn’t know anything about them, but I was certainly impressed by the atmospheric and reverb-laden sound that emanated from their amplifiers. Almost two months later, I acquired a copy of their debut album, entitled Sleep More Take More Drugs Do Whatever We Want, and this disc does not disappoint. In fact, it is the best release that I have heard by a Seattle band this year. The members of Half Light are big fans of acoustic and electric based space rock such as Mojave 3, Slowdive, Mazzy Star and The Church. Impressively, they managed to convince Tim Powles, drummer of The Church, to produce this recording. His wonderful production style that weaves a hazy, shimmering cacophony suits this band perfectly as they balance the line between beautiful and chaotic. In Powles’ own words, Half Light "certainly has what many bands dream of, a sound." A unique and memorable sound collage is what they offer their listeners, and the nine songs featured on Sleep More are all one needs to be convinced of this point. Vocalist Dayna Loeffler comes across with a soft and bashful voice that has a slightly raspy quality, which sounds akin to Allison Shaw of Cranes at times. On the track "Barbs," these innate tones are augmented with a heavy reverb production effect. The guitars are also drenched in delay and generous amounts of echo, making the whole thing take on a truly drugged-out in space effect. -

Epilogue 231

UvA-DARE (Digital Academic Repository) Noise resonance Technological sound reproduction and the logic of filtering Kromhout, M.J. Publication date 2017 Document Version Other version License Other Link to publication Citation for published version (APA): Kromhout, M. J. (2017). Noise resonance: Technological sound reproduction and the logic of filtering. General rights It is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), other than for strictly personal, individual use, unless the work is under an open content license (like Creative Commons). Disclaimer/Complaints regulations If you believe that digital publication of certain material infringes any of your rights or (privacy) interests, please let the Library know, stating your reasons. In case of a legitimate complaint, the Library will make the material inaccessible and/or remove it from the website. Please Ask the Library: https://uba.uva.nl/en/contact, or a letter to: Library of the University of Amsterdam, Secretariat, Singel 425, 1012 WP Amsterdam, The Netherlands. You will be contacted as soon as possible. UvA-DARE is a service provided by the library of the University of Amsterdam (https://dare.uva.nl) Download date:30 Sep 2021 NOISE RESONANCE | EPILOGUE 231 Epilogue: Listening for the event | on the possibility of an ‘other music’ 6.1 Locating the ‘other music’ Throughout this thesis, I wrote about phonographs and gramophones, magnetic tape recording and analogue-to-digital converters, dual-ended noise reduction and dither, sine waves and Dirac impulses; in short, about chains of recording technologies that affect the sound of their output with each irrepresentable technological filtering operation. -

My Bloody Valentine's Loveless David R

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2006 My Bloody Valentine's Loveless David R. Fisher Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY COLLEGE OF MUSIC MY BLOODY VALENTINE’S LOVELESS By David R. Fisher A thesis submitted to the College of Music In partial fulfillment of the requirements for the degree of Master of Music Degree Awarded: Spring Semester, 2006 The members of the Committee approve the thesis of David Fisher on March 29, 2006. Charles E. Brewer, Professor Directing Thesis; Frank Gunderson, Committee Member; Evan Jones, Outside Committee Member. The Office of Graduate Studies has verified and approved the above named committee members. TABLE OF CONTENTS: List of Tables; Abstract; 1. THE ORIGINS OF THE SHOEGAZER; 2. A BIOGRAPHICAL ACCOUNT OF MY BLOODY VALENTINE; 3. AN ANALYSIS OF MY BLOODY VALENTINE’S LOVELESS; 4. LOVELESS AND ITS LEGACY; BIBLIOGRAPHY -

In BLACK CLOCK, Alaska Quarterly Review, the Rattling Wall and Trop, and She Is Co-Organizer of the Griffith Park Storytelling Series

BLACK CLOCK no. 20 SPRING/SUMMER 2015 2 EDITOR Steve Erickson SENIOR EDITOR Bruce Bauman MANAGING EDITOR Orli Low ASSISTANT MANAGING EDITOR Joe Milazzo PRODUCTION EDITOR Anne-Marie Kinney POETRY EDITOR Arielle Greenberg SENIOR ASSOCIATE EDITOR Emma Kemp ASSOCIATE EDITORS Lauren Artiles • Anna Cruze • Regine Darius • Mychal Schillaci • T.M. Semrad EDITORIAL ASSISTANTS Quinn Gancedo • Jonathan Goodnick • Lauren Schmidt Jasmine Stein • Daniel Warren • Jacqueline Young COMMUNICATIONS EDITOR Chrysanthe Tan SUBMISSIONS COORDINATOR Adriana Widdoes ROVING GENIUSES AND EDITORS-AT-LARGE Anthony Miller • Dwayne Moser • David L. Ulin ART DIRECTOR Ophelia Chong COVER PHOTO Tom Martinelli AD DIRECTOR Patrick Benjamin GUIDING LIGHT AND VISIONARY Gail Swanlund FOUNDING FATHER Jon Wagner Black Clock © 2015 California Institute of the Arts Black Clock: ISBN: 978-0-9836625-8-7 Black Clock is published semi-annually under cover of night by the MFA Creative Writing Program at the California Institute of the Arts, 24700 McBean Parkway, Valencia CA 91355 THANK YOU TO THE ROSENTHAL FAMILY FOUNDATION FOR ITS GENEROUS SUPPORT Issues can be purchased at blackclock.org Editorial email: [email protected] Distributed through Ingram, Ingram International, Bertrams, Gardners and Trust Media. Printed by Lightning Source 3 Norman Dubie The Doorbell as Fiction Howard Hampton Field Trips to Mars (Psychedelic Flashbacks, With Scones and Jam) Jon Savage The Third Eye Jerry Burgan with Alan Rifkin Wounds to Bind Kyra Simone Photo Album Ann Powers The Sound of Free Love Claire -

Direct-To-Master Recording

Direct-To-Master Recording J. I. Agnew S. Steldinger Magnetic Fidelity http://www.magneticfidelity.com info@magneticfidelity.com July 31, 2016 Abstract Direct-to-Master Recording is a method of recording sound, where the music is performed entirely live and captured directly onto the master medium. This is usually done entirely in the analog domain using either magnetic tape or a phonograph disk as the recording medium. The result is an intense and realistic sonic image of the performance with an outstanding dynamic range. 1 The evolution of sound recording technology Sound recording technology has greatly evolved since the 1940's, when Direct-To-Master recording was not actually something special, but more like one of the few options for recording music. This evolution has enabled us to do things that would be unthinkable in those early days, such as multitrack recording, which allows different instruments to be recorded at different times, and mixed later to create what sounds like a performance by many instruments at the same time. [...] tracks can now also be edited note by note to compile a solid performance that can be altered or "improved" at will. This technological progress has made it possible for far less competent musicians to make a more or less competent sounding album and for washed out rock stars who, if all put in the same room at the same time, would probably murder each other, to make an album together. Or, at least almost together. This ability, however, comes at a certain cost. The recording process has been broken up into several stages, per-

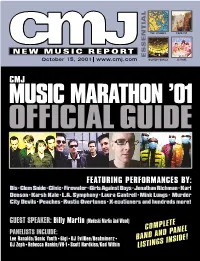

Complete Band and Panel Listings Inside!

THE STROKES FOUR TET NEW MUSIC REPORT ESSENTIAL October 15, 2001 www.cmj.com DILATED PEOPLES LE TIGRE CMJ MUSIC MARATHON ’01 OFFICIAL GUIDE FEATURING PERFORMANCES BY: Bis•Clem Snide•Clinic•Firewater•Girls Against Boys•Jonathan Richman•Karl Denson•Karsh Kale•L.A. Symphony•Laura Cantrell•Mink Lungs•Murder City Devils•Peaches•Rustic Overtones•X-ecutioners and hundreds more! GUEST SPEAKER: Billy Martin (Medeski Martin And Wood) PANELISTS INCLUDE: Lee Ranaldo/Sonic Youth•Gigi•DJ EvilDee/Beatminerz•DJ Zeph•Rebecca Rankin/VH-1•Scott Hardkiss/God Within COMPLETE BAND AND PANEL LISTINGS INSIDE! IN STORES TUESDAY, SEPTEMBER 4. SYSTEM OF A DOWN AND SLIPKNOT CO-HEADLINING “THE PLEDGE OF ALLEGIANCE TOUR” BEGINNING SEPTEMBER 14, 2001 SEE WEBSITE FOR DETAILS CONTACT: STEVE THEO COLUMBIA RECORDS 212-833-7329 [email protected] PRODUCED BY RICK RUBIN AND DARON MALAKIAN CO-PRODUCED BY SERJ TANKIAN MANAGEMENT: VELVET HAMMER MANAGEMENT, DAVID BENVENISTE "COLUMBIA" AND W REG. U.S. PAT. & TM. OFF. MARCA REGISTRADA./© 2001 SONY MUSIC ENTERTAINMENT INC./© 2001 THE AMERICAN RECORDING COMPANY, LLC. WWW.SYSTEMOFADOWN.COM 10/15/2001 Issue 735 • Vol 69 • No 5 CMJ MUSIC MARATHON 2001 Festival Guide Thousands of music professionals, artists and fans converge on New York City every year for CMJ Music Marathon to celebrate today's music and chart its future. In addition to keynote speaker Billy Martin and an exhibition area with a live performance stage, the event features dozens of panels covering topics affecting all corners of the music industry. Here’s our complete guide to all the convention’s featured events, including College Day, listings of panels by topic, day and nighttime performances, guest speakers, exhibitors, Filmfest screenings, hotel and subway maps, venue listings, band descriptions — everything you need to make the most of your time in the Big Apple. -

Document Sur La Redirection D’imprimante Dans Le Bureau à

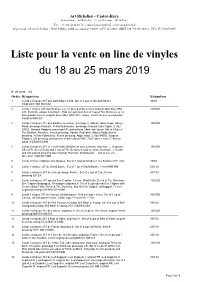

Art Richelieu - Castor-Hara Informations : Art Richelieu - 51, rue Decamps - 75116 Paris Tel : + 33 (0)1 42 24 80 76 - [email protected] - www.art-richelieu.fr Siège Social : 25, rue Le Peletier - 75009 PARIS - SARL au capital de 9 000 € - OVV 107-2018 - SIRET 834 790 958 000 13 - TVA N°17834790958 Liste pour la vente on line de vinyles du 18 au 25 mars 2019 N° de vente : 162 Ordre Désignation Estimation 1 Lot de 2 disques 33T des Aphrodite's Child. Set of 2 Lps of the Aphrodite's 30/60 Child.VG+/ NM EX/ NM 2 Lot de 1 disque 33T des Beatles Les 14 plus grands succes original label bleu OSX 400/600 231, Pochette unique à la france. Etat exceptionnel Set of 1 Lp of The Beatles Les 14 plus grands succes, original blue label, OSX 231, unique french sleeve. Exceptional condition.NM/ EX 3 Lot de 4 disques 33T des Beatles. Revolver, pressage fr, Odeon, label rouge. Abbey 100/200 Road, pressage français. Yellow Submarine, pressage français, label Apple, C 062- 04002. Sergent Peppers, pressage UK, parlophone, label noir/ jaune. Set of 4 Lps of The Beatles. Revolver, french pressing, Odeon, Red label. Abbey Road, french pressing. Yellow Submarine, french pressing, Apple label, C 062-04002. Sergent Peppers, UK pressing, parlophone, black/ yellow PMC 7027 label + inner ( Tear on spine )VG/NM EX/NM 4 Lot de 5 disques 33T et 1 coffret des Beatles en solo (Lennon, Harrison...). Originaux 100/200 US et FR. Set of 5 Lps and 1 box of The Beatles in solo (Lennon, Harrison...), french and US original pressing.Sauf George Harrison, Wonderwall : etat G sur une face.VG+ / NM VG+/NM 5 Lot de 3 livres originaux des Beatles. -

In the Studio: the Role of Recording Techniques in Rock Music (2006)

21 In the Studio: The Role of Recording Techniques in Rock Music (2006) John Covach I want this record to be perfect. Meticulously perfect. Steely Dan-perfect. -Dave Grohl, commencing work on the Foo Fighters 2002 record One by One When we speak of popular music, we should speak not of songs but rather, of recordings, which are created in the studio by musicians, engineers and producers who aim not only to capture good performances, but more, to create aesthetic objects. (Zak 2001, xvi-xvii) In this "interlude" John Covach, Professor of Music at the Eastman School of Music, provides a clear introduction to the basic elements of recorded sound: ambience, which includes reverb and echo; equalization; and stereo placement. He also describes a particularly useful means of visualizing and analyzing recordings. The student might begin by becoming sensitive to the three dimensions of height (frequency range), width (stereo placement) and depth (ambience), and from there go on to consider other special effects. One way to analyze the music, then, is to work backward from the final product, to listen carefully and imagine how it was created by the engineer and producer. To illustrate this process, Covach provides analyses of two songs created by famous producers in different eras: Steely Dan's "Josie" and Phil Spector's "Da Doo Ron Ron." Records, tapes, and CDs are central to the history of rock music, and since the mid-1990s, digital downloading and file sharing have also become significant factors in how music gets from the artists to listeners. Live performance is also important, and some groups-such as the Grateful Dead, the Allman Brothers Band, and more recently Phish and Widespread Panic-have been more oriented toward performances that change from night to night than with authoritative versions of tunes that are produced in a recording studio. -

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

Case 2:21-cv-04806-RSWL-SK Document 1 Filed 06/14/21 Page 1 of 12 Page ID #:1 COHEN MUSIC LAW Evan S. Cohen (SBN 119601) [email protected] 1180 South Beverly Drive, Suite 510 Los Angeles, CA 90035-1157 (310) 556-9800 BYRNES HIRSCH P.C. Bridget B. Hirsch (SBN 257015) [email protected] 2272 Colorado Blvd., #1152 Los Angeles, CA 90041 (323) 387-3413 Attorneys for Plaintiffs UNITED STATES DISTRICT COURT CENTRAL DISTRICT OF CALIFORNIA WESTERN DIVISION JAMES REID, an individual, and WILLIAM REID, an individual, both doing business as The Jesus and Mary Chain, Plaintiffs, v. WARNER MUSIC GROUP CORP., a Delaware Corporation; and DOES 1 through 10, inclusive, Defendants. Case No.: 2:21-cv-04806 COMPLAINT FOR COPYRIGHT INFRINGEMENT AND DECLARATORY RELIEF JURY TRIAL DEMANDED Plaintiffs JAMES REID and WILLIAM REID allege as follows: I NATURE OF THE ACTION 1. This is an action brought by James Reid and William Reid, two of the founding members of the successful and critically acclaimed alternative musical group The Jesus and Mary Chain (“JAMC”), formed in their native Scotland in 1983, along with Douglas Hart (“Hart”), against Warner Music Group Corp. (“WMG”), the third-largest record company conglomerate in the world, for willful copyright infringement and declaratory relief. -

Mediated Music Makers. Constructing Author Images in Popular Music

Laura Ahonen Mediated music makers Constructing author images in popular music Academic dissertation to be publicly discussed, by due permission of the Faculty of Arts at the University of Helsinki in auditorium XII, on the 10th of November, 2007 at 10 o’clock. Finnish Society for Ethnomusicology Publ. 16. © Laura Ahonen Layout: Tiina Kaarela, Federation of Finnish Learned Societies ISBN 978-952-99945-0-2 (paperback) ISBN 978-952-10-4117-4 (PDF) ISSN 0785-2746. Contents: Acknowledgements; INTRODUCTION – UNRAVELLING MUSICAL AUTHORSHIP; Background – On authorship in popular music; Underlying themes and leading ideas – The author and the work; Theoretical framework – Constructing the image; Specifying the image types – Presented, mediated, compiled; Research material – Media texts and online sources; Methodology – Social constructions and discursive readings; Context and focus – Defining the object of study; Research questions, aims and execution – On the work at hand; I STARRING THE AUTHOR – IN THE SPOTLIGHT AND UNDERGROUND; 1. The author effect – Tracking down the source; The author as the point of origin; Authoring identities and celebrity signs; Tracing back the Romantic impact; Leading the way – The case of Björk; Media texts and present-day myths; Pieces of stardom; Single authors with distinct features; Between nature and technology; The taskmaster and her crew. -

A Passing Fancy

A Passing Fancy Patricia Walker ArtAge supplies books, plays, and materials to older performers around the world. Directors and actors have come to rely on our 30+ years of experience in the field to help them find useful materials and information that makes their productions stimulating, fun, and entertaining. ArtAge’s unique program has been featured in Wall Street Journal, LA Times, Chicago Tribune, American Theatre, Time Magazine, Modern Maturity, on CNN, NBC, and in many other media sources. ArtAge is more than a catalog. We also supply information, news, and trends on our top-rated website, www.seniortheatre.com. We stay in touch with the field with our very popular e-newsletter, Senior Theatre Online. Our President, Bonnie Vorenberg, is asked to speak at conferences and present workshops that supplement her writing and consulting efforts. We’re here to help you be successful in Senior Theatre! We help older performers fulfill their theatrical dreams! ArtAge Publications Bonnie L. Vorenberg, President PO Box 19955 Portland OR 97280 503-246-3000 or 800-858-4998 [email protected] www.seniortheatre.com NOTICE Copyright: This play is fully protected under the Copyright Laws of the United States of America, Canada, and all other countries of the Universal Copyright Convention. The laws are specific regarding the piracy of copyrighted materials. Sharing the material with other organizations or persons is prohibited. Unlawful use of a playwright’s work deprives the creator of his or her rightful income.