Firewalls and Virtual Private Networks Acknowledgements

Total Pages: 16

File Type: pdf, Size: 1020 Kb

Recommended publications

2013 Annual Telecommunications Monitoring Report

ISSN 1179-724X Project no. 13.3/10147 Public Annual Telecommunications Monitoring Report 2013 Telecommunications monitoring report Date: May 2014

CONTENTS • Executive Summary • Introduction • Purpose of this report • Data sources • Market overview • TelstraClear disappears • UFB drives up telecommunications investment • Broadband connections continue to grow • Calling volumes converging • Total revenue rise not sustained • Fixed-line and mobile markets show similar levels of concentration • Retail fixed-line market • Market overview • Fall in local calling accelerates • Most fixed-line revenues continue to fall • Telecom’s retail voice share continues to slip, including wholesale • Consolidation in broadband market • Lift in average broadband speed • Retail mobile market • Market overview • Rising data revenues underpin modest growth • Mobile voice minutes resume upward trend • Off-net calling becomes more popular • Texting appears to have peaked • Competition progressing in prepay but not much in business • The telecommunications consumer – from smartphone to smart living • How much appetite do users have for data and speed? • What drives consumption? • What is the impact on citizens’ welfare and New Zealand’s economic growth? • Challenges of digital life • The 2013 year in review • List of defined terms and abbreviations

Executive Summary This is the Commerce Commission’s seventh annual telecommunications market monitoring report. It is produced as part of the Commission’s on-going monitoring of the evolution of competition in the telecommunications sector in New Zealand. We have included two infographics showing how the telecommunications market is delivering more to consumers and helping to drive the economy.

The World Internet Project International Report 6th Edition

THE WORLD INTERNET PROJECT International Report - Sixth Edition Jeffrey I. Cole, Ph.D. Director, USC Annenberg School Center for the Digital Future Founder and Organizer, World Internet Project Michael Suman, Ph.D., Research Director Phoebe Schramm, Associate Director Liuning Zhou, Ph.D., Research Associate Interns: Negin Aminian, Hany Chang, Zoe Covello, Ryan Eason, Grace Marie Laffoon‐Alejanre, Eunice Lee, Zejun Li, Cheechee Lin, Guadalupe Madrigal, Mariam Manukyan, Lauren Uba, Tingxue Yu Written by Monica Dunahee and Harlan Lebo WORLD INTERNET PROJECT – International Report Sixth Edition Copyright © 2016 University of Southern California COPIES You are welcome to download additional copies of The World Internet Project International Report for research or individual use. However, this report is protected by copyright and intellectual property laws, and cannot be distributed in any way. By acquiring this publication you agree to the following terms: this copy of the sixth edition of the World Internet Project International Report is for your exclusive use. Any abuse of this agreement or any distribution will result in liability for its illegal use. To download the full text and graphs in this report, go to www.digitalcenter.org. ATTRIBUTION Excerpted material from this report can be cited in media coverage and institutional publications. Text excerpts should be attributed to The World Internet Project. Graphs should be attributed in a source line to: The World Internet Project International Report (sixth edition) USC Annenberg School Center for the Digital Future REPRINTING Reprinting this report in any form other than brief excerpts requires permission from the USC Annenberg School Center for the Digital Future at the address below.

Cross-Media News Repertoires in New Zealand

Volume 14, Issue 2 November 2017 Shopping in a narrow field: Cross-media news repertoires in New Zealand Craig Hight, University of Newcastle, Australia Arezou Zalipour, University of Waikato, New Zealand Abstract: This article reports on the New Zealand case study within a larger project investigating cross-media news repertoires within (and across) national audiences. Six key news media repertoires emerged in this case study (heavy news consumers; hybrid browsers; digital browsers; ambivalent networkers; mainstream multiplatformers; and casual and connected). Despite a range of news media outlets available within New Zealand, particularly across digital platforms, participants consistently noted a relatively narrow social, cultural and political discursive field for news content in the country. Within this context, the news repertoires identified within this case study highlighted the high value placed by news consumers on national daily newspapers (print and online), and the continued salience of television and radio news broadcasting for some audience segments. But findings also offered a snapshot of the ways these are being supplemented or replaced, for some audience segments, by digital news outlets (even as these also generated dissatisfaction from many participants). Keywords: news repertoires, New Zealand, Q-methodology, news consumption, cross-cultural Introduction This article reports on the New Zealand case study within a larger project investigating patterns of news repertoires (Schrøder 2015) within (and across) national audiences, at a time of broadening forms of distribution of news content across a variety of media platforms. The overall project involved 12 countries and used a Q-sort methodology (Kobbernagel & Schrøder, 2016) to analyze and examine cross-media news consumption among audiences.

IT Acronyms.Docx

List of computing and IT abbreviations /.—Slashdot 1GL—First-Generation Programming Language 1NF—First Normal Form 10B2—10BASE-2 10B5—10BASE-5 10B-F—10BASE-F 10B-FB—10BASE-FB 10B-FL—10BASE-FL 10B-FP—10BASE-FP 10B-T—10BASE-T 100B-FX—100BASE-FX 100B-T—100BASE-T 100B-TX—100BASE-TX 100BVG—100BASE-VG 286—Intel 80286 processor 2B1Q—2 Binary 1 Quaternary 2GL—Second-Generation Programming Language 2NF—Second Normal Form 3GL—Third-Generation Programming Language 3NF—Third Normal Form 386—Intel 80386 processor 1 486—Intel 80486 processor 4B5BLF—4 Byte 5 Byte Local Fiber 4GL—Fourth-Generation Programming Language 4NF—Fourth Normal Form 5GL—Fifth-Generation Programming Language 5NF—Fifth Normal Form 6NF—Sixth Normal Form 8B10BLF—8 Byte 10 Byte Local Fiber A AAT—Average Access Time AA—Anti-Aliasing AAA—Authentication Authorization, Accounting AABB—Axis Aligned Bounding Box AAC—Advanced Audio Coding AAL—ATM Adaptation Layer AALC—ATM Adaptation Layer Connection AARP—AppleTalk Address Resolution Protocol ABCL—Actor-Based Concurrent Language ABI—Application Binary Interface ABM—Asynchronous Balanced Mode ABR—Area Border Router ABR—Auto Baud-Rate detection ABR—Available Bitrate 2 ABR—Average Bitrate AC—Acoustic Coupler AC—Alternating Current ACD—Automatic Call Distributor ACE—Advanced Computing Environment ACF NCP—Advanced Communications Function—Network Control Program ACID—Atomicity Consistency Isolation Durability ACK—ACKnowledgement ACK—Amsterdam Compiler Kit ACL—Access Control List ACL—Active Current -

Reference Information

APPENDIX B Software Field Description Tables The window field description tables for the following are included in this section: • “Flow Record Match and Collect Field Descriptions” • “Configure Filter Window Fields” • “Configure Records Window Fields” • “Configure Collector Window Fields” • “Configure Exporter Window Fields” • “Configure Monitor Window Fields” • “Application ID Collect Field Information” Table B-1 lists the match and collect field descriptions for IPv4, IPv6, and Layer 2 flow records in the user interface, as well as the CLI.

Table B-1 Flow Record Match and Collect Field Descriptions (GUI and CLI)

| Match Fields (keys of the flow record) | IPv4 | IPv6 | Layer 2 |
|---|---|---|---|
| CoS | X | X | X |
| Ethertype | X | X | X |
| Input SNMP Interface | X | X | X |
| IP Protocol | X | X | |
| IPv4 Destination Address | X | | |
| IPv4 Source Address | X | | |
| IPv4 TOS | X | | |
| IPv4 TTL | X | | |
| IPv6 Destination Address | | X | |
| IPv6 Hop Limit | | X | |
| IPv6 Source Address | | X | |
| IPv6 Traffic Class | | X | |
| Layer 4 Destination Port | X | X | |
| Layer 4 Source Port | X | X | |
| MAC Destination Address | X | X | X |
| MAC Source Address | X | X | X |
| MPLS Label | X | X | X |
| Output SNMP Interface | X | X | X |
| VLAN ID | X | X | X |

| Collect Fields | IPv4 | IPv6 | Layer 2 |
|---|---|---|---|
| Application ID¹ | X | X | X |
| Byte Count | X | X | X |
| First Timestamp | X | X | X |
| Flow Label | | X | |
| IPv4 ICMP Code | X | | |
| IPv4 ICMP Type | X | | |
| IPv6 ICMP Code | | X | |
| IPv6 ICMP Type | | X | |
| Last Timestamp | X | X | X |
| Network Encapsulation | X | X | X |
| Packet Count | X | X | X |
| TCP Header Flags | X | X | X |

Cisco NetFlow Generation Appliance User Guide B-1

1.

Troubleshooting TCP/IP

Chapter 5 Troubleshooting TCP/IP In This Chapter • Troubleshooting TCP/IP in Windows 2000 Server • TCP/IP troubleshooting steps • Defining which is the best TCP/IP troubleshooting tool to solve your problem • Mastering basic TCP/IP utilities Troubleshooting is, it seems, an exercise in matrix mathematics. That is, we use a variety of approaches and influences to successfully solve our problems, almost in a mental columns-and-rows format. These approaches may include structured methodologies, inductive and deductive reasoning, common sense, experience, and luck. And this is what troubleshooting is made of. Troubleshooting TCP/IP problems is really no different from troubleshooting other Windows 2000 Server problems mentioned in this book, such as installation failures described in Chapter 2. Needless to say, Windows 2000 Server offers several TCP/IP-related tools and utilities to assist us, but more on the specifics in a moment. TCP/IP Troubleshooting Basics The goal in TCP/IP troubleshooting is very simple: fix the problem. Too often, it is easy to become overly concerned about why something happened instead of just fixing the problem. And by fixing the problem, I mean cost effectively. 1. Be cost effective. Don’t forget that several hours’ worth of MCSE-level consulting could more than pay for the additional bandwidth that may easily solve your TCP/IP WAN-related problem. Don’t overlook such an easy fix when struggling to make a WAN connection between sites utilizing Windows 2000 Server. Too little bandwidth is typically the result of being penny wise and pound foolish.

09-Mobile Networking

Mobile Networking Programming for Engineers Winter 2015 Andreas Zeller, Saarland University Today’s Topics • Mobile Networking • HTTP • HTML • Webserver! The aim of a computer network is to have computers communicate with each other. Murray Leinster, “A Logic Named Joe” (1946): The computer ... manages the spreading of ninety-four percent of all TV programs, conveys all information about weather, air traffic, special deals… and records every business conversation, every contract… Computers have changed the world. Computers are the civilisation. If we turn them off, we will fall back to a kind of civilisation, of which we have forgotten how it even works. Murray Leinster, 1896–1975 The Internet: Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005. Wireless Internet • WLAN = Wireless Local Area Network • Allows “local” computers to communicate Wireless Modem: The Arduino ESP8266 shield allows the Arduino to connect to networks, and also to set up its own network. Controlling a Modem: The modem is controlled by so-called AT commands. Modems receive • data to be sent • commands to control them Connecting the Modem: View from above – connectors are at the bottom. (Programming for Engineers WS15/16, Prof. Dr. Andreas Zeller, Software Engineering Chair, Resp. Tutor: Curd Becker, Saarland University, computer science, Assignment Sheet 6) The connectors for the ESP8266 wireless module are shown in more detail here.

ANNUAL TELECOMMUNICATIONS MONITORING REPORT 2010 April 2011

ANNUAL TELECOMMUNICATIONS MONITORING REPORT 2010 April 2011 TABLE OF CONTENTS • INTRODUCTION • KEY MARKET TRENDS AND OBSERVATIONS FOR 2010 • MARKET OVERVIEW • Investment in Telecommunications Markets • Investment Initiatives • Fixed Line and Mobile Comparisons • Call Volumes • Revenue from Telecommunications Services • THE FIXED LINE MARKET • Market Overview • Fixed Line Voice • Fixed Line Data • Development of Unbundling • Broadband Quality • MOBILE MARKET

DoS Amplification Attacks – Protocol-Agnostic Detection of

DoS Amplification Attacks – Protocol-Agnostic Detection of Service Abuse in Amplifier Networks Timm Böttger1, Lothar Braun1, Oliver Gasser1, Felix von Eye2, Helmut Reiser2, and Georg Carle1 1 Technische Universität München, Munich, Germany {boettget,braun,gasser,carle}@net.in.tum.de 2 Leibniz Supercomputing Centre, Munich, Germany {voneye,reiser}@lrz.de Abstract. For many years Distributed Denial-of-Service attacks have been known to be a threat to Internet services. Recently a configuration flaw in NTP daemons led to attacks with traffic rates of several hundred Gbit/s. For those attacks a third party, the amplifier, is used to significantly increase the volume of traffic reflected to the victim. Recent research revealed more UDP-based protocols that are vulnerable to amplification attacks. Detecting such attacks from an abused amplifier network’s point of view has only rarely been investigated. In this work we identify novel properties which characterize amplification attacks and allow to identify the illegitimate use of arbitrary services. Their suitability for amplification attack detection is evaluated in large high-speed research networks. We prove that our approach is fully capable of detecting attacks that were already seen in the wild as well as capable of detecting attacks we conducted ourselves exploiting newly discovered vulnerabilities. 1 Introduction Denial-of-Service attacks aim at making services unavailable to their intended users. Attackers can use different methods to consume bandwidth or deplete other resources of the victim. One method to exhaust bandwidth is called Distributed Reflection Denial-of-Service (DRDoS) attack: an attacker sends forged requests to several servers with the victim’s spoofed source address.

Vulnerability Report TLP: White

Vulnerability Report TLP: White Contents • 1 Introduction • 2 Overview of Scanning Projects • 2.1 SSL POODLE Scan • 2.2 HTTP Scan • 2.3 FTP Scan • 2.4 RDP • 2.5 mDNS • 2.6 SNMP • 2.7 AFP • 2.8 NTP • 2.9 Telnet • 2.10 SSL Freak • 2.11 Port Mapper • 2.12 VNC • 2.13 DNS • 2.14 Netbios • 2.15 SSDP • 2.16 ISAKMP • 2.17 TFTP • 2.18 RSYNC • 2.19 SMB • 2.20 CWMP

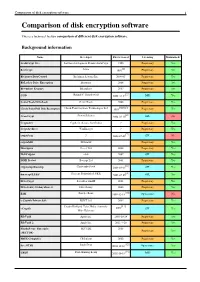

Comparison of Disk Encryption Software

Comparison of disk encryption software This is a technical feature comparison of different disk encryption software.

Background information

| Name | Developer | First released | Licensing | Maintained? |
|---|---|---|---|---|
| ArchiCrypt Live | Softwaredevelopment Remus ArchiCrypt | 1998 | Proprietary | Yes |
| BestCrypt | Jetico | 1993 [1] | Proprietary | Yes |
| BitArmor DataControl | BitArmor Systems Inc. | 2008-05 | Proprietary | Yes |
| BitLocker Drive Encryption | Microsoft | 2006 | Proprietary | Yes |
| Bloombase Keyparc | Bloombase | 2007 | Proprietary | Yes |
| CGD | Roland C. Dowdeswell | 2002-10-04 [2] | BSD | Yes |
| CenterTools DriveLock | CenterTools | 2008 | Proprietary | Yes |
| Check Point Full Disk Encryption | Check Point Software Technologies Ltd | 1999 [3][4][5] | Proprietary | Yes |
| CrossCrypt | Steven Scherrer | 2004-02-10 [6] | GPL | No |
| Cryptainer | Cypherix (Secure-Soft India) | ? | Proprietary | Yes |
| CryptArchiver | WinEncrypt | ? | Proprietary | Yes |
| cryptoloop | ? | 2003-07-02 [7] | GPL | No |
| cryptoMill | SEAhawk | | Proprietary | Yes |
| Discryptor | Cosect Ltd. | 2008 | Proprietary | Yes |
| DiskCryptor | ntldr | 2007 | GPL | Yes |
| DISK Protect | Becrypt Ltd | 2001 | Proprietary | Yes |
| cryptsetup/dmsetup | Christophe Saout | 2004-03-11 [8] | GPL | Yes |
| dm-crypt/LUKS | Clemens Fruhwirth (LUKS) | 2005-02-05 [9] | GPL | Yes |
| DriveCrypt | SecurStar GmbH | 2001 | Proprietary | Yes |
| DriveSentry GoAnywhere 2 | DriveSentry | 2008 | Proprietary | Yes |
| E4M | Paul Le Roux | 1998-12-18 [10] | Open source | No |
| e-Capsule Private Safe | EISST Ltd. | 2005 | Proprietary | Yes |
| eCryptfs | Dustin Kirkland, Tyler Hicks, (formerly Mike Halcrow) | 2005 [11] | GPL | Yes |
| FileVault | Apple Inc. | 2003-10-24 | Proprietary | Yes |
| FileVault 2 | Apple Inc. | 2011-7-20 | Proprietary | |

The Internet, the Law, and Privacy in New Zealand: Dignity with Liberty?

International Journal of Communication 6 (2012), 127–143 1932–8036/20120127 The Internet, the Law, and Privacy in New Zealand: Dignity with Liberty? JONATHAN BARRETT LUKE STRONGMAN Open Polytechnic of New Zealand Early participants in the Internet experienced very little legal or social pressure with respect to either data privacy or regulation. However, the innovations of Web 2.0 are symptomatic of a re-creation of cyberspace from an original “free for all,” in which websites had no normative constraints, toward a significant shift to website management that addresses privacy concerns. If the laws of the non-virtual world are difficult to apply to the online world, must the non-virtual world create new laws to control the online world? Should a balance be made between laws of the non-virtual and virtual worlds, or should a new set of laws be created specifically to govern the Internet? Concordant with this dilemma is the issue that although precedent may create new laws, when the law changes with the possibilities for uses and abuses of new online technologies, to what extent can it be said to either perpetuate or create to any internally consistent system? Introduction For many of its early participants, the Internet presented the opportunity for a benignly anarchic and anomic space for freedom of expression that, in principle and practice, should be liberated from established legal traditions and social pressures. Its debt to the military notwithstanding (see Morozov, 2011b), Web 1.0 was typically conceived as a virtual realm that could be created, grown, and governed— or non-governed—by its participants.