Digital Correction of Lens Aberrations in Light Field Photography

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Alternative Processes a Few Essentials Introduction

Alternative Processes: A Few Essentials. Introduction. Chapter 1. Capture Techniques, from Alternative Photographic Processes: Crafting Handmade Images. Chapter 2. Digital Negatives for Gum, from Gum Printing: A Step-by-Step Manual, Highlighting Artists and Their Creative Practice. Chapter 3. Fugitive and Not-So-Fugitive Printing, from Jill Enfield's Guide to Photographic Alternative Processes: Popular Historical and Contemporary Techniques. Featured Books on Alternative Process Photography from Routledge | Focal Press. Use discount code FLR40 to take 20% off all Routledge titles. Simply visit www.routledge.com/photography to browse and purchase books of interest.

Introduction. A young art though it may be, photography already has a rich history. As media moves full steam ahead into the digital revolution and beyond, it is a natural instinct to look back at where we've come from. With more artists rediscovering photography's historical processes, the practice of photography continually redefines and re-contextualizes itself. The creative possibilities of these historical processes are endless, spawning a growing arena of practice, alternative processes, which combines past, present and everything in between in the creation of art. This collection is an introduction to and a sample of these processes and possibilities. With Alternative Photographic Processes, Brady Wilks demonstrates techniques for manipulating photographs, negatives and prints, emphasizing the "hand-made" touch. Bridging the gap between the simplest of processes and the most complex, Wilks' introduction demonstrates image manipulation pre-capture, allowing the artist to get intimate with his or her images long before development. In the newly-released Gum Printing, leading gum expert Christina Z.

Modeling of Microlens Arrays with Different Lens Shapes Abstract

Modeling of Microlens Arrays with Different Lens Shapes (www.LightTrans.com)

Abstract: Microlens arrays are useful in many applications, such as imaging, wavefront sensing, and light homogenizing. Depending on the fabrication technique or process, the microlenses may have different shapes. In this example, microlens arrays with two typical lens shapes, square and round, are modeled. Because the aperture shapes differ, the focal spots also differ due to diffraction. The change of the focal spot distribution with respect to the aberration imposed on the input field is demonstrated.

Modeling task: Input field with wavelength 633nm, diameter 1.5mm, uniform amplitude, and one of four phase distributions: 1) no aberration, 2) spherical aberration, 3) coma aberration, 4) trefoil aberration. How to calculate the field on the focal plane behind different types of microlens arrays, and how does the spot distribution change with the input field aberration? [Figure: system layout with labeled dimensions 1.24mm, 4.356mm and 150µm.]

Results, no aberration: [Figure: wavefront error [λ] and focal-plane spots for the square and round microlens arrays; axes x [mm], y [mm]; color saturation at 1/3 maximum.] The spots show diffraction due to the square aperture and diffraction due to the round aperture.

Results, spherical aberration: [Figure: wavefront error [λ] and focal-plane spots for the square and round microlens arrays; axes x [mm], y [mm].] A fully physical-optics simulation of the system containing the microlens array takes less than 10 seconds.

Results, coma aberration: [Figure: wavefront error [λ] and focal-plane spots for the square and round microlens arrays; axes x [mm], y [mm].] The focal spot distribution changes with respect to the aberration of the input field.

A New Era in Optics

A NEW ERA IN OPTICS EXPERIENCE AN UNPRECEDENTED LEVEL OF PERFORMANCE NIKKOR Z Lens Category Map New-dimensional S-Line Other lenses optical performance realized NIKKOR Z NIKKOR Z NIKKOR Z Lenses other than the with the Z mount 14-30mm f/4 S 24-70mm f/2.8 S 24-70mm f/4 S S-Line series will be announced at a later date. NIKKOR Z 58mm f/0.95 S Noct The title of the S-Line is reserved only for NIKKOR Z lenses that have cleared newly NIKKOR Z NIKKOR Z established standards in design principles and quality control that are even stricter 35mm f/1.8 S 50mm f/1.8 S than Nikon’s conventional standards. The “S” can be read as representing words such as “Superior”, “Special” and “Sophisticated.” Top of the S-Line model: the culmination Versatile lenses offering a new dimension Well-balanced, of the NIKKOR quest for groundbreaking in optical performance high-performance lenses Whichever model you choose, every S-Line lens achieves richly detailed image expression optical performance These lenses bring a higher level of These lenses strike an optimum delivering a sense of reality in both still shooting and movie creation. It offers a new This lens has the ability to depict subjects imaging power, achieving superior balance between advanced in ways that have never been seen reproduction with high resolution even at functionality, compactness dimension in optical performance, including overwhelming resolution, bringing fresh before, including by rendering them with the periphery of the image, and utilizing and cost effectiveness, while an extremely shallow depth of field. -

Irix 45mm f/1.4

Explore the magic of medium format photography with the Irix 45mm f/1.4 lens equipped with the native mount for Fujifilm GFX cameras! The Irix Lens brand introduces a standard 45mm wide-angle lens with a dedicated mount that can be used with Fujifilm GFX series cameras equipped with medium format sensors. Digital medium format is a nod to traditional analog photography and a return to the roots that defined the vividness and quality of the image captured in photos. Today, the Irix brand offers creators who seek iconic image quality combined with mystical vividness a tool that will allow them to realize their wildest creative visions: the Irix 45mm f/1.4 G-mount lens. It is an innovative product because, as a precursor, it paves the way for standard wide-angle lenses with a low f-number that are able to cope with medium format sensors. The maximum aperture of f/1.4 and the sensor size of Fujifilm GFX series cameras ensure not only a shallow depth of field, but also smooth transitions between individual focus areas and a high dynamic range. The wide f/1.4 aperture enables you to capture a clear background separation and work in low light conditions. Thanks to its excellent optical performance, which consists of high sharpness, a negligible amount of chromatic aberration and great microcontrast, this lens can become the most commonly used accessory that will help you create picturesque shots. The Irix 45mm f/1.4 GFX is a professional lens designed for Fujifilm GFX cameras. It has a high-quality construction, based on the knowledge Irix Lens engineers gained during the design and production of full-frame lenses.

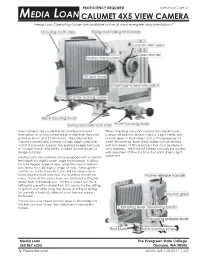

Basic View Camera

PROFICIENCY REQUIRED. Media Loan Operating Guide for CALUMET 4X5 VIEW CAMERA. Media Loan Operating Guides are available online at www.evergreen.edu/medialoan/

View cameras are usually tripod mounted and lend themselves to a more contemplative style than the more portable 35mm and 2 1/4 formats. The Calumet 4x5 Standard model view camera is a lightweight, portable tool that produces superior, fine grained images because of its large format and ability to adjust for a minimum of image distortion.

When checking out a 4x5 camera from Media Loan, patrons will need to obtain a tripod, a light meter, one or both types of film holders, and a changing bag for sheet film loading. Each sheet holder can be loaded with two sheets of film, a process that must be done in total darkness. The Polaroid holders can only be loaded with one sheet of film at a time, but each sheet is light protected.

Media Loan's 4x5 cameras come equipped with a 150mm lens, which is a slightly wider angle than normal. It allows for a 44 degree angle of view, while the normal 165mm lens allows for a 40 degree angle of view. Although the controls on each of Media Loan's 4x5 lenses may vary in terms of placement and style, the functions remain the same. Some of the lenses have an additional setting for strobe flash or flashbulb use. On these lenses, use the X setting for use with a strobe flash (it's crucial for the setting to remain on X while using the studio) and the M setting for use with a flashbulb (Media Loan does not support flashbulbs).
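The two angles of view quoted in the guide can be checked with the usual thin-lens relation, angle = 2·atan(d / 2f), for a lens focused at infinity. The sketch below is only an illustration: the function name is hypothetical, and the 120mm film dimension is an assumption (roughly the long side of the usable 4x5 image area), not a figure from the guide.

```python
import math

# Assumption: long side of the usable 4x5 image area, in millimetres.
# The guide does not state which film dimension its angles refer to.
FILM_LONG_SIDE_MM = 120.0


def angle_of_view_deg(focal_length_mm, film_dimension_mm=FILM_LONG_SIDE_MM):
    """Angle of view in degrees for a lens focused at infinity."""
    half_angle = math.atan(film_dimension_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)


for focal_length in (150.0, 165.0):
    angle = angle_of_view_deg(focal_length)
    print(f"{focal_length:.0f}mm lens: {angle:.1f} degrees")
```

Under that assumption the results round to the guide's 44 and 40 degrees, which suggests the quoted angles are measured along the long side of the sheet.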

Lens Openings and Shutter Speeds

Illustrations courtesy Life Magazine Encyclopedia of Photography Lens Openings & Shutter Speeds Controlling Exposure & the Rendering of Space and Time Equal Lens Openings/ Double Exposure Time Here is an analogy to photographic exposure. The timer in this illustration represents the shutter speed portion of the exposure. The faucet represents the lens openings. The beaker represents the complete "filling" of the sensor chip or the film, or full exposure. You can see that with equal "openings" of the "lens" (the faucet) the beaker on the left is half full (underexposed) in 2 seconds, and completely full in 4 seconds... Also note that the capacity of the beaker is analogous to the ISO or light sensitivity setting on the camera. A large beaker represents a "slow" or less light sensitive setting, like ISO 100. A small beaker is analogous to a "fast" or more light sensitive setting, like ISO 1200. Doubling or halving the ISO number doubles or halves the sensitivity, effectively the same scheme as for f/stops and shutter speeds. 200 ISO film is "one stop faster" than ISO 100. Equal Time One f/stop down In the second illustration, the example on the left, the faucet (lens opening, f-stop, or "aperture"- all the same meaning here) is opened twice as much as the example on the right- or one "stop." It passes twice as much light as the one on the right, in the same period of time. Thus by opening the faucet one "stop," the beaker will be filled in 2 seconds. In the right example, with the faucet "stopped down" the film is underexposed by half- or one "stop." On the left, the "lens" is "opened by one stop." Equivalent Exposure: Lens Open 1 f/stop more & 1/2 the Time In this illustration, we have achieved "equivalent exposure" by, on the left, "opening the lens" by one "stop" exposing the sensor chip fully in 2 seconds. -
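The faucet-and-beaker analogy can be written as a small calculation: each stop the lens is opened doubles the rate at which light arrives, and each doubling of ISO halves the amount of light needed. The sketch below is a minimal illustration; the function name is hypothetical, and the 4-second baseline at ISO 100 is taken from the analogy rather than from any real camera setting.

```python
def fill_time_seconds(stops_opened=0.0, iso=100.0, base_time=4.0, base_iso=100.0):
    """Time needed for full exposure, relative to a baseline setting.

    stops_opened: how many stops the lens is opened (+) or stopped down (-).
    iso: sensitivity; doubling it halves the light required (a smaller beaker).
    base_time: seconds for full exposure at the baseline opening and base_iso.
    """
    light_rate = 2.0 ** stops_opened   # faucet: one stop doubles the flow
    sensitivity = iso / base_iso       # beaker: higher ISO means less to fill
    return base_time / (light_rate * sensitivity)


print(fill_time_seconds())                  # baseline opening
print(fill_time_seconds(stops_opened=1))    # lens opened one stop
print(fill_time_seconds(iso=200))           # film one stop faster
```

Opening the lens one stop and switching to film one stop faster both halve the required time, which is the "equivalent exposure" idea in the text.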

Tessar and Dagor Lenses

Tessar and Dagor lenses. Lens Design OPTI 517, Prof. Jose Sasian.

Important basic lens forms: Petzval (little stress), double Gauss (stressed with low high-order aberrations), Cooke triplet (stressed with high high-order aberrations).

Measuring lens sensitivity to surface tilts: [Equations: expressions for W131 and W222 under surface tilt, and the per-surface coma and astigmatism sensitivities cs and as with their totals CS and AS; not recoverable from the extracted text.]

Lens sensitivity comparison:
• Coma sensitivity 0.32, astigmatism sensitivity 0.27
• Coma sensitivity 2.87, astigmatism sensitivity 0.92
• Coma sensitivity 0.99, astigmatism sensitivity 0.18

Actual tough and easy to align designs (off-the-shelf relay at F/6):
• Coma sensitivity 0.54, astigmatism sensitivity 0.78
• Coma sensitivity 0.14, astigmatism sensitivity 0.21
Improper opto-mechanics leads to tough alignment.

Tessar lens:
• More degrees of freedom
• Can be thought of as a re-optimization of the PROTAR
• Sharper than Cooke triplet (low index)
• Compactness
• Tessar, Greek, four
• 1902, Paul Rudolph
• New achromat reduces lens stress

Tessar:
• The front component has very little power and acts as a corrector of the rear component new achromat
• The cemented interface of the new achromat: 1) reduces zonal spherical aberration, 2) reduces oblique spherical aberration, 3) reduces zonal astigmatism
• It is a compact lens

Merte's Patent of 1932: faster Tessar lens, F/5.6

Cooke Triplet

Cooke triplet. Lens Design OPTI 517, Prof. Jose Sasian.

Cooke triplet:
• A new design
• Enough variables to correct all third-order aberrations
• Thought of as an afocal front and an imaging rear
• 1896
• Harold Dennis Taylor

Cooke triplet field-speed trade-offs: 24 deg @ f/4.5, 27 deg @ f/5.6

Aberration correction:
• Powers, glass, and separations for: power, axial chromatic, field curvature, lateral color, and distortion. Lens bendings for spherical aberration, coma, and astigmatism. Symmetry.
• Power: $$y_a\phi_a + y_b\phi_b + y_c\phi_c = y_a\phi$$
• Axial color: $$\frac{y_a^2\phi_a}{V_a} + \frac{y_b^2\phi_b}{V_b} + \frac{y_c^2\phi_c}{V_c} = 0$$
• Lateral color: $$\frac{y_a\bar{y}_a\phi_a}{V_a} + \frac{y_b\bar{y}_b\phi_b}{V_b} + \frac{y_c\bar{y}_c\phi_c}{V_c} = 0$$
• Field curvature: $$\frac{\phi_a}{n_a} + \frac{\phi_b}{n_b} + \frac{\phi_c}{n_c} = 0$$
• Crossing of the sagittal and tangential field is an indication of the balancing of third-order and fifth-order astigmatism, field curvature, and defocus.

The strong power of the first positive lens leads to spherical aberration of the pupil, which changes the chief ray height, thereby inducing significant higher-order aberrations:

$$Y = y + a y^3, \qquad Y^2 = y^2 + 2 a y^4 + a^2 y^6$$

$$W_{222} Y^2 \;\Rightarrow\; W_{422} \propto a\, W_{222}$$

Cooke triplet example from Geiser OE: 5 waves scale, visible, F1 = 34 mm, F2 = -17 mm, F3 = 24 mm, f/4 at +/- 20 deg.

| Surface | Type | Radius | Thickness | Glass |
|---|---|---|---|---|
| 1 | STANDARD | 23.713 | 4.831 | LAK9 |
| 2 | STANDARD | 7331.288 | 5.86 | |
| STO | STANDARD | -24.456 | 0.975 | SF5 |
| 4 | STANDARD | 21.896 | 4.822 | |
| 5 | STANDARD | 86.759 | 3.127 | LAK9 |
| 6 | STANDARD | -20.4942 | 41.10346 | |
| IMA | STANDARD | Infinity | | |

Design and Fabrication of Flexible Naked-Eye 3D Display Film Element Based on Microstructure

Micromachines, Article. Design and Fabrication of Flexible Naked-Eye 3D Display Film Element Based on Microstructure. Axiu Cao, Li Xue, Yingfei Pang, Liwei Liu, Hui Pang, Lifang Shi * and Qiling Deng. Institute of Optics and Electronics, Chinese Academy of Sciences, Chengdu 610209, China. * Correspondence: Tel.: +86-028-8510-1178. Received: 19 November 2019; Accepted: 7 December 2019; Published: 9 December 2019.

Abstract: The naked-eye three-dimensional (3D) display technology without wearing equipment is an inevitable future development trend. In this paper, the design and fabrication of a flexible naked-eye 3D display film element based on a microstructure have been proposed to achieve a high-resolution 3D display effect. The film element consists of two sets of key microstructures, namely, a microimage array (MIA) and microlens array (MLA). By establishing the basic structural model, the matching relationship between the two groups of microstructures has been studied. Based on 3D graphics software, a 3D object information acquisition model has been proposed to achieve a high-resolution MIA from different viewpoints, recording without crosstalk. In addition, lithography technology has been used to realize the fabrications of the MLA and MIA. Based on nanoimprint technology, a complete integration technology on a flexible film substrate has been formed. Finally, a flexible 3D display film element has been fabricated, which has a light weight and can be curled.

Big Bertha/Baby Bertha

Big Bertha/Baby Bertha by Daniel W. Fromm

Contents
1 Big Bertha As She Was Spoke
2 Dreaming of a Baby Bertha
3 Baby Bertha conceived
4 Baby Bertha's gestation
5 Baby cuts her teeth - solve one problem, find another – and final catastrophe
6 Building Baby Bertha around a 2x3 Cambo SC reconsidered
7 Mistakes/good decisions
8 What was rescued from the wreckage

1 Big Bertha As She Was Spoke

American sports photographers used to shoot sporting events, e.g., baseball games, with specially made fixed lens Single Lens Reflex (SLR) cameras. These were made by fitting a Graflex SLR with a long lens - 20" to 60" - and a suitable focusing mechanism. They shot 4x5 or 5x7 and were quite heavy. One such camera made by Graflex is figured in the first edition of Graphic Graflex Photography. Another, used by the Fort Worth, Texas, Star-Telegram, can be seen at http://www.lurvely.com/photo/6176270759/FWST_Big_Bertha_Graflex/ and http://www.flickr.com/photos/21211119@N03/6176270759

Long lens SLRs that incorporate a Graflex are often called "Big Berthas" but the name isn't applied consistently. For example, there's a 4x5 Bertha in the George Eastman House collection (http://geh.org/fm/mees/htmlsrc/mG736700011_ful.html) identified as a "Little Bertha." "Big Bertha" has also been applied to regular production Graflexes, e.g., a 5x7 Press Graflex (http://www.mcmahanphoto.com/lc380.html) and a 4x5 Graflex that I can't identify (http://www.avlispub.com/garage/apollo_1_launch.htm). These cameras lack the usual Bertha attributes of long lens, usually but not always a telephoto, and rapid focusing.

Microlens Array Grid Estimation, Light Field Decoding, and Calibration

Microlens array grid estimation, light field decoding, and calibration. Maximilian Schambach and Fernando Puente León, Senior Member, IEEE. Karlsruhe Institute of Technology, Institute of Industrial Information Technology, Hertzstr. 16, 76187 Karlsruhe, Germany.

Abstract: We quantitatively investigate multiple algorithms for microlens array grid estimation for microlens array-based light field cameras. Explicitly taking into account natural and mechanical vignetting effects, we propose a new method for microlens array grid estimation that outperforms the ones previously discussed in the literature. To quantify the performance of the algorithms, we propose an evaluation pipeline utilizing application-specific ray-traced white images with known microlens positions. Using a large dataset of synthesized white images, we thoroughly compare the performance of the different estimation algorithms. As an example, we apply our results to the decoding and calibration of light fields taken with a Lytro Illum camera. We observe that decoding as well as calibration benefit from a more accurate, vignetting-aware grid estimation, especially in peripheral subapertures of the light field.

[Introduction, excerpt] lenslet images, while others perform calibration utilizing the raw images directly [4]. In either case, this includes multiple non-trivial pre-processing steps, such as the detection of the projected microlens (ML) centers and estimation of a regular grid approximating the centers, alignment of the lenslet image with the sensor, slicing the image into a light field and, in the case of hexagonal MLAs, resampling the light field onto a rectangular grid. These steps have a non-negligible impact on the quality of the decoded light field and camera calibration. Hence, a quantitative evaluation is necessary where possible. Here, we will focus on the estimation of the MLA grid parameters (which we refer to as pre-calibration), which
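The step of "estimating a regular grid approximating the centers" can be illustrated with an ordinary least-squares fit. The sketch below is not the vignetting-aware method the paper proposes. It assumes, hypothetically, that microlens centers have already been detected and assigned integer lattice indices (i, j), and it fits an origin plus two basis vectors so that center ≈ origin + i·a + j·b. Because the basis vectors need not be orthogonal, the same model covers rectangular and hexagonal layouts. The function name and the synthetic test values are invented for illustration.

```python
import numpy as np


def fit_regular_grid(indices, centers):
    """Least-squares fit of centers ~ origin + i * a + j * b.

    indices: (N, 2) integer lattice indices (i, j), assumed already known.
    centers: (N, 2) detected microlens centers in pixel coordinates.
    Returns the origin and the two lattice basis vectors a and b.
    """
    indices = np.asarray(indices, dtype=float)
    centers = np.asarray(centers, dtype=float)
    # Design matrix with columns [1, i, j]; one linear system per coordinate.
    design = np.column_stack([np.ones(len(indices)), indices])
    params, *_ = np.linalg.lstsq(design, centers, rcond=None)
    origin, a, b = params
    return origin, a, b


# Synthetic example: a slightly rotated grid with a small detection error.
rng = np.random.default_rng(0)
true_origin = np.array([5.0, 7.0])
true_a = np.array([14.0, 0.1])
true_b = np.array([-0.1, 14.0])
idx = np.array([(i, j) for i in range(5) for j in range(5)])
pts = true_origin + idx[:, :1] * true_a + idx[:, 1:] * true_b
pts_noisy = pts + rng.normal(scale=0.05, size=pts.shape)

origin, a, b = fit_regular_grid(idx, pts_noisy)
print("origin:", origin, "a:", a, "b:", b)
```

A fit like this treats every detected center equally, so systematically shifted detections near the sensor edge would bias the grid. That is the kind of error the abstract attributes to vignetting.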

Scanning Confocal Microscopy with a Microlens Array

Scanning Confocal Microscopy with a Microlens Array. Antony Orth* and Kenneth Crozier†, School of Engineering and Applied Sciences, Harvard University, Cambridge, Massachusetts 02138, USA. (*, † corresponding authors)

Abstract: Scanning confocal fluorescence microscopy is performed with a refractive microlens array. We simultaneously obtain an array of 3000 20µm x 20µm images with a lateral resolution of 645nm and observe low power optical sectioning. OCIS codes: (180.0180) Microscopy; (180.1790) Confocal microscopy; (350.3950) Micro-optics.

High throughput fluorescence imaging of cells and tissues is an indispensable tool for biological research [1]. Even low-resolution imaging of fluorescently labelled cells can yield important information that is not resolvable in traditional flow cytometry [2]. Commercial systems typically raster scan a well plate under a microscope objective to generate a large field of view (FOV). In practice, the process of scanning and refocusing limits the speed of this approach to one FOV per second [3]. We demonstrate an imaging modality that has the potential to speed up fluorescent imaging over a large field of view, while taking advantage of the background rejection inherent in confocal imaging.

Figure 1: a) Experimental setup. Inset: Microscope photograph of an 8x8 sub-section of the microlens array. Scale bar is 80µm. b) A subset of the 3000, 20µm x 20µm fields of view, each acquired by a separate microlens. Inset: Zoom-in of one field of view showing a pile of 2µm beads.

The experimental geometry is shown in Figure 1. A collimated laser beam (5mW output power, λex = 532nm) is focused into a focal spot array on the fluorescent sample by a refractive microlens array.