AT&T Research at TRECVID 2009 Content-Based Copy Detection

Load more

Recommended publications

-

Before the Federal Communications Commission Washington, DC 20554

Before the Federal Communications Commission Washington, DC 20554 In the Matter of DIRECTV Enterprises, LLC File No. SAT-MOD- _____________ Application to Modify Authorization for SPACEWAY 1 (S2191) APPLICATION OF DIRECTV ENTERPRISES, LLC TO MODIFY AUTHORIZATION FOR SPACEWAY 1 DIRECTV Enterprises, LLC (“DIRECTV”), pursuant to Section 25.117 of the rules of the Federal Communications Commission (“Commission” or “FCC”), 47 C.F.R. § 25.117, hereby seeks to modify its authorization for the SPACEWAY 1 satellite (Call Sign S2191). Specifically, this modification application seeks authority to relocate SPACEWAY 1 from 102.925° W.L. to 138.9° W.L. as early as the first quarter of 2018 and to extend its license term for an additional five years, through December 31, 2025. In accordance with the Commission’s rules,1 this application has been filed electronically as an attachment to FCC Form 312. DIRECTV provides the technical information relating to the proposed modification on Schedule S and in the attached Engineering Statement.2 The remainder of the technical information on file with the Commission for the SPACEWAY 1 satellite is unchanged and incorporated by reference.3 To the extent necessary, DIRECTV 1 47 C.F.R. § 25.117(c). 2 47 C.F.R. § 25.114. 3 See File Nos. SAT-MOD-20091009-00108, SAT-MOD-20070626-00087, SAT-MOD- 20041122-00211, SAT-MOD-20040614-00114. requests that previously granted technical waivers continue to apply to operation of SPACEWAY 1 at 138.9° W.L.4 I. PROPOSED MODIFICATIONS A. Relocation to 138.9° W.L. DIRECTV requests authority to relocate SPACEWAY 1 to, and operate the satellite at, 138.9° W.L. -

Surface Temperature

OSI SAF SST Products and Services Pierre Le Borgne Météo-France/DP/CMS (With G. Legendre, A. Marsouin, S. Péré, S. Philippe, H. Roquet) 2 Outline Satellite IR radiometric measurements From Brightness Temperatures to Sea Surface Temperature SST from Metop – Production – Validation SST from MSG (GOES-E) – Production – Validation Practical information OSI-SAF SST, EUMETRAIN 2011 3 Satellite IR Radiometric measurements IR Radiometric Measurement = (1) Emitted by surface + (2) Emitted by atmosphere and reflected to space + (3) Direct atmosphere contribution (emission+ absorption) (3) (2) (1) OSI-SAF SST, EUMETRAIN 2011 4 Satellite IR Radiometric measurements From SST to Brightness Temperature (BT) A satellite radiometer measures a radiance Iλ : Iλ= τλ ελ Bλ (Ts) + Ir + Ia Emitted by surface Reflected Emitted by atmosphere – Ts: surface temperature Clear sky only! – Bλ: Planck function – λ : wavelength IR: 0.7 to 1000 micron – ελ: surface emissivity – τλ: atmospheric transmittance -2 -1 -1 – Iλ: measured radiance (w m μ ster ) –1 Measured Brightness Temperature: BT = B λ (Iλ) OSI-SAF SST, EUMETRAIN 2011 5 Satellite IR Radiometric measurements From BT to SST Transmittance τλ 12.0 μm Which channel? 3.7 μm 8.7 μm 10.8 μm OSI-SAF SST, EUMETRAIN 2011 6 FROM BT to SST SST OSI-SAF SST, EUMETRAIN 2011 7 FROM BT to SST BT 10.8μ OSI-SAF SST, EUMETRAIN 2011 8 FROM BT to SST BT 12.0μ OSI-SAF SST, EUMETRAIN 2011 9 FROM BT to SST SST - BT10.8μ OSI-SAF SST, EUMETRAIN 2011 10 FROM BT to SST BT10.8μ - BT12.0μ OSI-SAF SST, EUMETRAIN 2011 11 FROM BT to -

CE Router CCG for AT&T IP Flexible Reach and IP Toll-Free Over AVPN

AT&T IP Flexible Reach Service and/or AT&T IP Toll-Free on AT&T VPN Customer Edge Router Customer Configuration Guide (November 25, 2015, Version 2.6) AT&T IP Flexible Reach Service and AT&T IP Toll-Free on AT&T VPN Service Customer Edge Router (CER) Customer Configuration Guide for AT&T IP Flexible Reach Service and AT&T IP Toll-Free on AT&T VPN Service as the Underlying Transport Service Cisco ISR G1, 7200 and 7300 Platforms November 25, 2015 Version 2.6 © 2014 AT&T Intellectual Property. All rights reserved. AT&T, the AT&T logo and all other AT&T marks contained herein are trademarks of AT&T Intellectual Property and/or AT&T affiliated companies. All other marks contained herein are the property of their respective owners. Page 1 AT&T IP Flexible Reach Service and/or AT&T IP Toll-Free on AT&T VPN Customer Edge Router Customer Configuration Guide (November 25, 2015, Version 2.6) Table of Contents 1 INTRODUCTION ......................................................................................................................................... 4 1.1 OVERVIEW .............................................................................................................................................. 4 1.2 NETWORK TOPOLOGY ............................................................................................................................. 7 1.2.1 CER combined with TDM Gateway................................................................................................... 8 1.2.2 AT&T Certified IP-PBX’s ................................................................................................................ -

59864 Federal Register/Vol. 85, No. 185/Wednesday, September 23

59864 Federal Register / Vol. 85, No. 185 / Wednesday, September 23, 2020 / Rules and Regulations FEDERAL COMMUNICATIONS C. Congressional Review Act II. Report and Order COMMISSION 2. The Commission has determined, A. Allocating FTEs 47 CFR Part 1 and the Administrator of the Office of 5. In the FY 2020 NPRM, the Information and Regulatory Affairs, Commission proposed that non-auctions [MD Docket No. 20–105; FCC 20–120; FRS Office of Management and Budget, funded FTEs will be classified as direct 17050] concurs that these rules are non-major only if in one of the four core bureaus, under the Congressional Review Act, 5 i.e., in the Wireline Competition Assessment and Collection of U.S.C. 804(2). The Commission will Bureau, the Wireless Regulatory Fees for Fiscal Year 2020 send a copy of this Report & Order to Telecommunications Bureau, the Media Congress and the Government Bureau, or the International Bureau. The AGENCY: Federal Communications indirect FTEs are from the following Commission. Accountability Office pursuant to 5 U.S.C. 801(a)(1)(A). bureaus and offices: Enforcement ACTION: Final rule. Bureau, Consumer and Governmental 3. In this Report and Order, we adopt Affairs Bureau, Public Safety and SUMMARY: In this document, the a schedule to collect the $339,000,000 Homeland Security Bureau, Chairman Commission revises its Schedule of in congressionally required regulatory and Commissioners’ offices, Office of Regulatory Fees to recover an amount of fees for fiscal year (FY) 2020. The the Managing Director, Office of General $339,000,000 that Congress has required regulatory fees for all payors are due in Counsel, Office of the Inspector General, the Commission to collect for fiscal year September 2020. -

Brocade Mainframe Connectivity Solutions

PART 1: BROCADE MAINFRAME CHAPTER 2 CONNECTIVITY SOLUTIONS The modern IBM mainframe, also known as IBM zEnterprise, has a distinguished 50-year history BROCADEMainframe I/O and as the leading platform for reliability, availability, serviceability, and scalability. It has transformed Storage Basics business and delivered innovative, game-changing technology that makes the extraordinary possible, and has improved the way the world works. For over 25 of those years, Brocade, MAINFRAME the leading networking company in the IBM mainframe ecosystem, has provided non-stop The primary purpose of any computing system is to networks for IBM mainframe customers. From parallel channel extension to ESCON, FICON, process data obtained from Input/Output devices. long-distance FCIP connectivity, SNA/IP, and IP connectivity, Brocade has been there with IBM CONNECTIVITY and our mutual customers. Input and Output are terms used to describe the SOLUTIONStransfer of data between devices such as Direct This book, written by leading mainframe industry and technology experts from Brocade, discusses Access Storage Device (DASD) arrays and main mainframe SAN and network technology, best practices, and how to apply this technology in your storage in a mainframe. Input and Output operations mainframe environment. are typically referred to as I/O operations, abbreviated as I/O. The facilities that control I/O operations are collectively referred to as the mainframe’s channel subsystem. This chapter provides a description of the components, functionality, and operations of the channel subsystem, mainframe I/O operations, mainframe storage basics, and the IBM System z FICON qualification process. STEVE GUENDERT DAVE LYTLE FRED SMIT Brocade Bookshelf www.brocade.com/bookshelf i BROCADE MAINFRAME CONNECTIVITY SOLUTIONS STEVE GUENDERT DAVE LYTLE FRED SMIT BROCADE MAINFRAME CONNECTIVITY SOLUTIONS ii © 2014 Brocade Communications Systems, Inc. -

Overview of Chinese First C Band Multi-Polarization SAR Satellite GF-3

Overview of Chinese First C Band Multi-Polarization SAR Satellite GF-3 ZHANG Qingjun, LIU Yadong China Academy of Space Technology, Beijing 100094 Abstract: The GF-3 satellite, the first C band and multi-polarization Synthetic Aperture Radar (SAR) satellite in China, achieved breakthroughs in a number of key technologies such as multi-polarization and the design of a multi- imaging mode, a multi-polarization phased array SAR antenna, and in internal calibration technology. The satellite tech- nology adopted the principle of “Demand Pulls, Technology Pushes”, creating a series of innovation firsts, reaching or surpassing the technical specifications of an international level. Key words: GF-3 satellite, system design, application DOI: 10. 3969/ j. issn. 1671-0940. 2017. 03. 003 1 INTRODUCTION The GF-3 satellite, the only microwave remote sensing phased array antenna technology; high precision SAR internal imaging satellite of major event in the National High Resolution calibration technique; deployable mechanism for a large phased Earth Observation System, is the first C band multi-polarization array SAR antenna; thermal control technology of SAR antenna; and high resolution synthetic aperture radar (SAR) in China. pulsed high power supply technology and satellite control tech- The GF-3 satellite has the characteristics of high resolution, nology with star trackers. The GF-3 satellite has the following wide swath, high radiation precision, multi-imaging modes, long characteristics: design life, and it can acquire global land and ocean informa- -

FCC-21-49A1.Pdf

Federal Communications Commission FCC 21-49 Before the Federal Communications Commission Washington, DC 20554 In the Matter of ) ) Assessment and Collection of Regulatory Fees for ) MD Docket No. 21-190 Fiscal Year 2021 ) ) Assessment and Collection of Regulatory Fees for MD Docket No. 20-105 Fiscal Year 2020 REPORT AND ORDER AND NOTICE OF PROPOSED RULEMAKING Adopted: May 3, 2021 Released: May 4, 2021 By the Commission: Comment Date: June 3, 2021 Reply Comment Date: June 18, 2021 Table of Contents Heading Paragraph # I. INTRODUCTION...................................................................................................................................1 II. BACKGROUND.....................................................................................................................................3 III. REPORT AND ORDER – NEW REGULATORY FEE CATEGORIES FOR CERTAIN NGSO SPACE STATIONS ....................................................................................................................6 IV. NOTICE OF PROPOSED RULEMAKING .........................................................................................21 A. Methodology for Allocating FTEs..................................................................................................21 B. Calculating Regulatory Fees for Commercial Mobile Radio Services...........................................24 C. Direct Broadcast Satellite Regulatory Fees ....................................................................................30 D. Television Broadcaster Issues.........................................................................................................32 -

Federal Register/Vol. 86, No. 91/Thursday, May 13, 2021/Proposed Rules

26262 Federal Register / Vol. 86, No. 91 / Thursday, May 13, 2021 / Proposed Rules FEDERAL COMMUNICATIONS BCPI, Inc., 45 L Street NE, Washington, shown or given to Commission staff COMMISSION DC 20554. Customers may contact BCPI, during ex parte meetings are deemed to Inc. via their website, http:// be written ex parte presentations and 47 CFR Part 1 www.bcpi.com, or call 1–800–378–3160. must be filed consistent with section [MD Docket Nos. 20–105; MD Docket Nos. This document is available in 1.1206(b) of the Commission’s rules. In 21–190; FCC 21–49; FRS 26021] alternative formats (computer diskette, proceedings governed by section 1.49(f) large print, audio record, and braille). of the Commission’s rules or for which Assessment and Collection of Persons with disabilities who need the Commission has made available a Regulatory Fees for Fiscal Year 2021 documents in these formats may contact method of electronic filing, written ex the FCC by email: [email protected] or parte presentations and memoranda AGENCY: Federal Communications phone: 202–418–0530 or TTY: 202–418– summarizing oral ex parte Commission. 0432. Effective March 19, 2020, and presentations, and all attachments ACTION: Notice of proposed rulemaking. until further notice, the Commission no thereto, must be filed through the longer accepts any hand or messenger electronic comment filing system SUMMARY: In this document, the Federal delivered filings. This is a temporary available for that proceeding, and must Communications Commission measure taken to help protect the health be filed in their native format (e.g., .doc, (Commission) seeks comment on and safety of individuals, and to .xml, .ppt, searchable .pdf). -

Emcing135150714filingmainstpdf

Notice of Exempt Modification Stamford Central 555 Main Street Stamford, CT 06901 New Cingular Wireless PCS, LLC ("AT&T") submits this Notice of Exempt Modification to the Connecticut Siting Council ("Council") pursuant to Sections 16-50j-73 and 16-50j-72(b) of the Regulations of Connecticut State Agencies (“Regulations”) in connection with AT&T’s planned modification of antennas and associated equipment on an existing self support tower located on a rooftop with an address of 555 Main Street, in the City of Stamford, Connecticut. More particularly, AT&T plans to upgrade this site by adding LTE technology to its facilities. The proposed modifications will not increase the tower height, cause a significant adverse change or alteration in the physical or environmental characteristics of the site, extend the boundaries of the tower site, increase noise levels at the tower site boundary by six (6) decibels, add radio frequency sending or receiving capability which increases the total radio frequency electromagnetic radiation power density measured at the tower site boundary to or above the standard adopted by the Federal Communications Commission pursuant to Section 704 of the Telecommunications Act of 1996, as amended, and the State Department of Energy and Environmental Protection, pursuant to Section 22a-162 of the Connecticut General Statutes, or impair the structural integrity of the facility, as determined in a certification provided by a professional engineer licensed in Connecticut. To better meet the growing voice and data demands of its wireless customers, AT&T is upgrading their network nationwide to include LTE technology, which will provide faster service and better overall performance. -

The Sea Surface Temperature Product Algorithm of the Ocean Color and Temperature Scanner (OCTS) and Its Accuracy

Journal of Oceanography, Vol. 54, pp. 437 to 442. 1998 The Sea Surface Temperature Product Algorithm of the Ocean Color and Temperature Scanner (OCTS) and Its Accuracy 1 2 3 3 4 FUTOKI SAKAIDA , MASAO MORIYAMA , HIROSHI MURAKAMI , HIROMI OAKU , YASUSHI MITOMI , 4 5 AKIRA MUKAIDA and HIROSHI KAWAMURA 1Ocean Mechanical Engineering Chair, Kobe University of Mercantile Marine, 5-1-1, Fukae-Minami-machi, Kobe 658-0022, Japan 2Computer and Information Science Department, Faculty of Engineering, Nagasaki University, 1-14 Bunkyo-machi, Nagasaki 852-8521, Japan 3Earth Observation Research Center (EORC), National Space Development Agency of Japan (NASDA), Roppongi First Building, 1-9-9, Roppongi, Minato-ku, Tokyo 106-0032, Japan 4Remote Sensing Technology Center of Japan (RESTEC), Roppongi First Building, 1-9-9, Roppongi, Minato-ku, Tokyo 106-0032, Japan 5Center for Atmospheric and Oceanic Studies, Faculty of Science, Tohoku University, Aoba, Sendai 980-8578, Japan (Received 29 January 1998; in revised form 7 May 1998; accepted 12 May 1998) The Ocean Color and Temperature Scanner (OCTS) aboard the Advanced Earth Keywords: Observing Satellite (ADEOS) can observe ocean color and sea surface temperature (SST) ⋅ OCTS/ADEOS, simultaneously. This paper explains the algorithm for the OCTS SST product in the ⋅ SST. NASDA OCTS mission. In the development of the latest, third version (V3) algorithm, the OCTS match-up dataset plays an important role, especially when the coefficients required in the MCSST equation are derived and the equation form is adjusted. As a result of the validation using the OCTS match-up dataset, the algorithm has improved the root mean square (rms) error of the OCTS SST up to 0.698°C although some problems remain in the match-up dataset used in the present study. -

FCC-21-98A1.Pdf

Federal Communications Commission FCC 21-98 Before the Federal Communications Commission Washington, D.C. 20554 In the Matter of ) ) Assessment and Collection of Regulatory Fees for ) MD Docket No. 21-190 Fiscal Year 2021 ) ) REPORT AND ORDER AND NOTICE OF PROPOSED RULEMAKING Adopted: August 25, 2021 Released: August 26, 2021 Comment Date: [30 days after date of publication in the Federal Register] Reply Comment Date: [45 days after date of publication in the Federal Register] By the Commission: Acting Chairwoman Rosenworcel and Commissioners Carr and Simington issuing separate statements. TABLE OF CONTENTS Heading Paragraph # I. INTRODUCTION...................................................................................................................................1 II. BACKGROUND.....................................................................................................................................2 III. REPORT AND ORDER..........................................................................................................................6 A. Allocating Full-time Equivalents......................................................................................................7 B. Commercial Mobile Radio Service Regulatory Fees Calculation ..................................................27 C. Direct Broadcast Satellite Fees .......................................................................................................28 D. Full-Service Television Broadcaster Fees ......................................................................................36 -

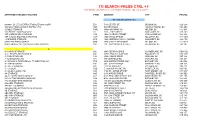

To Search Press Ctrl + F ***Information Subject to Change Without Notification***

TO SEARCH PRESS CTRL + F ***INFORMATION SUBJECT TO CHANGE WITHOUT NOTIFICATION*** APPROVED STORAGE FACILITIES CODE ADDRESS CITY POSTAL BRITISH COLUMBIA (BC) 0938481 BC LTD & EXTRA STORAGE NOW CORP EX1 7111 BEATTY ST. MISSION, BC V2V 6C4 100 MILE FEED & RANCH SUPPLY LTD. 1MF EXETER ROAD 100 MILE HOUSE, BC V0K 2E0 12 MILE STORAGE TWE 4691A HIGHWAY 3A NELSON, BC V1L 6N3 12th STREET MINI STORAGE 12T 1255 - 12th STREET KAMLOOPS, BC V2B 3C8 1ST AVENUE MINI STORAGE 1AM 1428 CHARLES STREET VANCOUVER, BC V5L 2S8 1ST CHOICE MOVING & STORAGE 1CM 1005 ETHEL STREET KELOWNA, BC V1Y 2W3 4 CORNERS STORAGE 4CS 1298 KOOTENAY WAY - CLOSED KAMLOOPS, BC V2H 1K2 6 MILE RV AND STORAGE 6MS 2878 6 MILE LAKES ROAD NELSON, BC V1L 6W3 604 STORAGE INC. and GREAT DANE MOVING 604 120 - 1651 PATRICK STREET RICHMOND, BC V6V 3A7 A A & A MINI STORAGE A20 9400 CEDAR AVENUE SUMMERLAND, BC VOH 1Z2 A & L SHOPS AND STORAGE A&L 38959 PRODUCTION WAY SQUAMISH, BC V0N 3G0 A A MINI STORAGE A19 2399 CIENAR DRIVE NANAIMO, BC V9T 3L6 A TO B MOVING LTD AT1 878 VIEWFIELD RD VICTORIA, BC V9A 4V1 A1 MOVING & STORAGE div. TR WESTCAN INC. TR5 8035 NORTH FRASER WAY BURNABY, BC V5J 5M8 A-1 MINI STORAGE A1E 361 - 317TH AVENUE KIMBERLEY, BC V8A 3J8 A-1 SELF STORAGE A30 1721 ISLAND HWY VICTORIA, BC V9B 1J1 A-1 U-LOCK AUS 5-1209 ALPHA LAKE RD WHISTLER, BC V0N 1B1 A1 U LOCK (2009) LTD. A1U 3190 ST. JOHNS STREET PORT MOODY, BC V3H 2C7 AA STORAGE AAS 2139 AIRSIDE DRIVE CAMPBELL RIVER, BC V9H 0E2 AA-1 CLASSIC MINI STORAGE A23 4155 SPALLUMCHEEN PLACE ARMSTRONG, BC V0E 1B6 AAA ACTION MOVERS (2008) INC AA4 #112 7450 LOWLAND DR.