Analysis of Song/Artist Latent Features and Its Application for Song Search

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Excesss Karaoke Master by Artist

XS Master by ARTIST Artist Song Title Artist Song Title (hed) Planet Earth Bartender TOOTIMETOOTIMETOOTIM ? & The Mysterians 96 Tears E 10 Years Beautiful UGH! Wasteland 1999 Man United Squad Lift It High (All About 10,000 Maniacs Candy Everybody Wants Belief) More Than This 2 Chainz Bigger Than You (feat. Drake & Quavo) [clean] Trouble Me I'm Different 100 Proof Aged In Soul Somebody's Been Sleeping I'm Different (explicit) 10cc Donna 2 Chainz & Chris Brown Countdown Dreadlock Holiday 2 Chainz & Kendrick Fuckin' Problems I'm Mandy Fly Me Lamar I'm Not In Love 2 Chainz & Pharrell Feds Watching (explicit) Rubber Bullets 2 Chainz feat Drake No Lie (explicit) Things We Do For Love, 2 Chainz feat Kanye West Birthday Song (explicit) The 2 Evisa Oh La La La Wall Street Shuffle 2 Live Crew Do Wah Diddy Diddy 112 Dance With Me Me So Horny It's Over Now We Want Some Pussy Peaches & Cream 2 Pac California Love U Already Know Changes 112 feat Mase Puff Daddy Only You & Notorious B.I.G. Dear Mama 12 Gauge Dunkie Butt I Get Around 12 Stones We Are One Thugz Mansion 1910 Fruitgum Co. Simon Says Until The End Of Time 1975, The Chocolate 2 Pistols & Ray J You Know Me City, The 2 Pistols & T-Pain & Tay She Got It Dizm Girls (clean) 2 Unlimited No Limits If You're Too Shy (Let Me Know) 20 Fingers Short Dick Man If You're Too Shy (Let Me 21 Savage & Offset &Metro Ghostface Killers Know) Boomin & Travis Scott It's Not Living (If It's Not 21st Century Girls 21st Century Girls With You 2am Club Too Fucked Up To Call It's Not Living (If It's Not 2AM Club Not -

2ANGLAIS.Pdf

ANGLAIS domsdkp.com HERE WITHOUT YOU 3 DOORS DOWN KRYPTONITE 3 DOORS DOWN IN DA CLUB 50 CENT CANDY SHOP 50 CENT WHAT'S UP ? 4 NON BLONDES TAKE ON ME A-HA MEDLEY ABBA MONEY MONEY MONEY ABBA DANCING QUEEN ABBA FERNANDO ABBA THE WINNER TAKES IT ALL ABBA TAKE A CHANCE ON ME ABBA I HAVE A DREAM ABBA CHIQUITITA ABBA GIMME GIMME GIMME ABBA WATERLOO ABBA KNOWING ME KNOWING YOU ABBA TAKE A CHANCE ON ME ABBA THANK YOU FOR THE MUSIC ABBA SUPER TROUPER ABBA VOULEZ VOUS ABBA UNDER ATTACK ABBA ONE OF US ABBA HONEY HONEY ABBA HAPPY NEW YEAR ABBA HIGHWAY TO HELL AC DC HELLS BELLS AC DC BACK IN BLACK AC DC TNT AC DC TOUCH TOO MUCH AC DC THUNDERSTRUCK AC DC WHOLE LOTTA ROSIE AC DC LET THERE BE ROCK AC DC THE JACK AC DC YOU SHOOK ME ALL NIGHT LONG AC DC WAR MACHINE AC DC PLAY BALL AC DC ROCK OR DUST AC DC ALL THAT SHE WANTS ACE OF BASE MAD WORLD ADAM LAMBERT ROLLING IN THE DEEP ADELE SOMEONE LIKE YOU ADELE DON'T YOU REMEMBER ADELE RUMOUR HAS IT ADELE ONLY AND ONLY ADELE SET FIRE TO THE RAIN ADELE TURNING TABLES ADELE SKYFALL ADELE WALK THIS WAY AEROSMITH WALK THIS WAY AEROSMITH BECAUSE I GOT HIGH AFROMAN RELEASE ME AGNES LONELY AKON EYES IN THE SKY ALAN PARSON PROJECT THANK YOU ALANIS MORISSETTE YOU LEARN ALANIS MORISSETTE IRONIC ALANIS MORISSETTE THE BOY DOES NOTHING ALESHA DIXON NO ROOTS ALICE MERTON FALLIN' ALICIA KEYS NO ONE ALICIA KEYS Page 1 IF I AIN'T GOT YOU ALICIA KEYS DOESN'T MEAN ANYTHING ALICIA KEYS SMOOTH CRIMINAL ALIEN ANT FARM NEVER EVER ALL SAINTS SWEET FANTA DIALO ALPHA BLONDY A HORSE WITH NO NAME AMERICA KNOCK ON WOOD AMII STEWART THIS -

Song Lyrics ©Marshall Mitchell All Rights Reserved

You Don’t Know - Song Lyrics ©Marshall Mitchell All Rights Reserved Freckled Face Girl - Marshall Mitchell © All Rights Reserved A freckled face girl in the front row of my class Headin’ Outta Wichita - Harvey Toalson/Marshall Mitchell On the playground she runs way too fast © All Rights Reserved And I can’t catch her so I can let her know I think she is pretty and I love her so Headin’ outta Wichita; we’re headed into Little Rock tonight And we could’ve had a bigger crowd but I think the band was sounding pretty tight She has pigtails and ribbons in her hair With three long weeks on this road there ain’t nothing left to do, you just listen to the radio When she smiles my heart jumps into the air And your mind keeps a’reachin’ back to find that old flat land you have the nerve to call your home And all through high school I wanted her to know That I thought she was pretty and I loved her so Now, you can’t take time setting up because before you know it’s time for you to play And you give until you’re giving blood then you realize they ain’t a’listenin’ anyway I could yell it in assembly And then you tell yourself that it can’t get worse and it does, and all you can think about is breaking down I could write it on the wall And then you find yourself back on the road following a dotted line to another town Because when I am around her I just cannot seem to speak at all I wanna see a starlit night and I wanna hold my baby tight again While in college I saw her now and then And I’m really tired of this grind and I’d sure like to see the face -

SMYC 2013 CD Booklet

SPECIAL MULTISTAKE YOUTH CONFERENCE 2013 MUSIC SOUNDTRACK Audio CD_Booklet.indd 1 10/18/2013 9:04:08 AM Stand Chorus Chorus: Joy of Life Singer: Megan Sackett No, I can’t say that I know for certain, but, Singers: Savanna Liechty & Hayden Gillies Every choice, every step, I’m feelin’ somethin’ deep inside my heart Morning sun fill the earth, every moment, I wanna say that I know for certain, Sunlight breaking through the shadows fill the shadows, point my course, set my path but for now it’s a good start. stand lighting all we see fill my soul with light. make me ready. taking in each single moment Sacred light, holy house, I’ve been trying things out a bit, with the air we breath Steady flame, steady guide, loving Father, wanting to know what’s real still so much that lies before us steady voice of peace bind my heart, fill my life I don’t know what will come of it but still so much within our reach lead me through the night. with this promise to stand… if I keep it up I will. of life joy Chorus: Won’t give in, won’t give up And be not moved, together I know there’s someone who after all I can do Every day won’t give out at all Stand makes it all alright brings another moment ‘til my heart is right. In a holy place Then I hear a voice that whispers to me every time Stand… Oh Stand that I’ve known it all my life. -

Californication Biography Song List What We Play

Californication Biography about us Established in early 2004, Californication the all new Red Hot Chili Peppers Show, aims to deliver Australia’s tribute to one of the world’s most popular and distinguishable bands: the Red Hot Chili Peppers. Californication recreates an authentic and live experience that is, the Red Hot Chili Peppers. The Chili Peppers themselves first came to prominence 20 years ago by fusing funk, rock and rap music styles through their many groundbreaking albums. A typical 90 minute show performed by Californication includes all the big hits and smash anthems that have made the Chili Peppers such an enduring global act. From their breakthrough cover of Stevie Wonder’s Higher Ground to the multiplatinum singles Give it Away, Under the Bridge and Breaking the Girl; as well as Aeroplane, Scar Tissue, Otherside and Californication; fans of the Chili Peppers’ music will never walk away disappointed. Fronted by Johnny, the vocalist of the Red Hot Chili Peppers Show captures all the stage antics and high energy required to emulate Anthony Kiedis, while the rest of the band carry out the very essence of the Red Hot Chili Peppers through the attention of musical detail and a stunning visual performance worthy of the famous LA-based quartet. And now, exclusively supported by the exciting, upcoming party band Big Way Out, you can be guaranteed an enjoyable night’s worth of entertainment. Be prepared to experience Californication the Red Hot Chili Peppers Show. Coming soon to a venue near you! Song List what we play Mothers -

Complaint Against Home Depot, Et

Case 2:12-cv-05386-RSWL-RZ Document 1 Filed 06/21/12 Page 1 of 16 Page ID #:4 1 RUSSELL J. FRACKMAN (SBN 49087) msk.com 2 STINE LEPERA (pro hac vice motion forthcoming) msk.com 3 STINA E. DJORDJEVICH (SBN 262721) n-3 msk.com 4 HELL SILBERBERG & KNUPP LLP 11377 West Olympic Boulevard 5 Los Angeles, California 90064-1683 Telephone: (310) 312-2000 6 Facsimile: (310) 312-3100 7 Attorneys for Plaintiffs • • c_n Cr) 8 9 UNITED STATES DISTRICT COURT 10 CENTRAL DISTRICT OF CALIFORNIA 11 i 12 DANIEL AUERBACH and PATRICK CASE p 12-5386 CARNEY (collectively and 13 professionally known as "THE BLACK COMPLAINT FOR YS"); TFM BLACK KEYS 14 PARTNERSHIP d/b/a MCMOORE COPYRIGHT INFRINGEMENT MCLESST PUBLISHING; and BRIAN 15 BURTON p/k/a DANGER MOUSE individually and d/b/a SWEET DEMAND FOR JURY TRIAL 16 SCIENCE, 17 Plaintiffs, 18 V. 19 THE HOME DEPOT, INC., a Delaware corporation; and DOES 1 — 10, 20 inclusive, 21 Defendants. 22 23 24 Plaintiffs Daniel Auerbach ("Auerbach") and Patrick Carney ("Carney") 25 (collectively and professionally known as "The Black Keys"), Plaintiff The Black 26 Keys Partnership d/b/a McMoore McLesst Publishing and Plaintiff Brian Burton 27 p/k/a Danger Mouse d/b/a Sweet Science ("Burton") (collectively, "Plaintiffs") 28 aver as follows: Mitchell Silberberg & Knupp LLP COMPLAINT FOR COPYRIGHT INFRINGEMENT 82400.1 / 42943-00000 Case 2:12-cv-05386-RSWL-RZ Document 1 Filed 06/21/12 Page 2 of 16 Page ID #:5 1 PRELIMINARY STATEMENT 2 I. Plaintiffs bring this action seeking to put an immediate stop to, and to 3 obtain redress for, Defendants' blatant and purposeful infringement of the 4 copyright in Plaintiffs' musical composition entitled "Lonely Boy." 5 2. -

Project VIP Spring2020 Manuscript

PARENTS (& KIDS) JUST DON’T UNDERSTAND PERIOD Friends Forever #BLM PARENTS (& KIDS) JUST DON’T UNDERSTAND Table of Contents I. Ensemble Photos pg 2 II. Directors’ Note pg 3 III. Scripts: 1. Some Things We Wish Our Parents Would Have Told Us pg 4 2. Period Piece pg 5 3. Sister Sister pg 10 4. What I Wish I could Tell You pg 12 5. Wear What I Want pg 13 6. Party At A Friend’s pg 19 7. Things Heard During Covid-19 Quarantine pg 23 Written by the Ensemble: Jendayi Benjamin, Sariya Western Boyd, Aniyah Campos, Ashanti Campos, Shea Davis, Deshante Deberry, Atseda Johnson, Annalise Rios Mercado, Jaslene Rios Mercado, Tia Sledge & Nakayla Teagle 1 Dear Aniyah, Annalise, Ashante, Atseda, Deshante, Jaslene, Jendayi, Nakayla, Sariya, Shea & Tia: What an interesting semester we have had! We came in talking about parents and all the craziness we had to endure with them … remember watching Will Smith’s video for Parents Just Don’t Understand, and then the corny (but funny) song “Kids” from Bye Bye Birdie? And improvising (hilarious) inner voice/outer voice conversations? And then our world turned upside down. We’ve never had a semester like this where so much of our work was completed online. No rehearsals, no more theatre games, no more seeing each other in person. Yet despite all this we came up with some great scripts and poems to show how parent and child relationships work in 2020. We loved the energy you brought to the group. Thank you for all your hard work in class and online. -

Radio Essentials 2012

Artist Song Series Issue Track 44 When Your Heart Stops BeatingHitz Radio Issue 81 14 112 Dance With Me Hitz Radio Issue 19 12 112 Peaches & Cream Hitz Radio Issue 13 11 311 Don't Tread On Me Hitz Radio Issue 64 8 311 Love Song Hitz Radio Issue 48 5 - Happy Birthday To You Radio Essential IssueSeries 40 Disc 40 21 - Wedding Processional Radio Essential IssueSeries 40 Disc 40 22 - Wedding Recessional Radio Essential IssueSeries 40 Disc 40 23 10 Years Beautiful Hitz Radio Issue 99 6 10 Years Burnout Modern Rock RadioJul-18 10 10 Years Wasteland Hitz Radio Issue 68 4 10,000 Maniacs Because The Night Radio Essential IssueSeries 44 Disc 44 4 1975, The Chocolate Modern Rock RadioDec-13 12 1975, The Girls Mainstream RadioNov-14 8 1975, The Give Yourself A Try Modern Rock RadioSep-18 20 1975, The Love It If We Made It Modern Rock RadioJan-19 16 1975, The Love Me Modern Rock RadioJan-16 10 1975, The Sex Modern Rock RadioMar-14 18 1975, The Somebody Else Modern Rock RadioOct-16 21 1975, The The City Modern Rock RadioFeb-14 12 1975, The The Sound Modern Rock RadioJun-16 10 2 Pac Feat. Dr. Dre California Love Radio Essential IssueSeries 22 Disc 22 4 2 Pistols She Got It Hitz Radio Issue 96 16 2 Unlimited Get Ready For This Radio Essential IssueSeries 23 Disc 23 3 2 Unlimited Twilight Zone Radio Essential IssueSeries 22 Disc 22 16 21 Savage Feat. J. 
Cole a lot Mainstream RadioMay-19 11 3 Deep Can't Get Over You Hitz Radio Issue 16 6 3 Doors Down Away From The Sun Hitz Radio Issue 46 6 3 Doors Down Be Like That Hitz Radio Issue 16 2 3 Doors Down Behind Those Eyes Hitz Radio Issue 62 16 3 Doors Down Duck And Run Hitz Radio Issue 12 15 3 Doors Down Here Without You Hitz Radio Issue 41 14 3 Doors Down In The Dark Modern Rock RadioMar-16 10 3 Doors Down It's Not My Time Hitz Radio Issue 95 3 3 Doors Down Kryptonite Hitz Radio Issue 3 9 3 Doors Down Let Me Go Hitz Radio Issue 57 15 3 Doors Down One Light Modern Rock RadioJan-13 6 3 Doors Down When I'm Gone Hitz Radio Issue 31 2 3 Doors Down Feat. -

Tt Newsletter / Issue 4 : May 2010

OFFICIAL ISLE OF MAN TT NEWSLETTER / ISSUE 4 : MAY 2010 Welcome Contents 01 MONSTER ENERGY TO FUEL TT RACES 02 VIEW FROM THE GRID WITH GUY MARTIN 03 NEWS FROM OUR PARTNERS: TT PROGRAMME NEW LOOK WEBSITE SURE LAUNCH TT TEXTLINE 04 TT MARSHALS ASSOCIATION NEWS Above: A number of TT stars were on hand to help launch the 2010 TT Races with new 05 TT ZERO NEWS presenting sponsor Monster Energy. 06 FESTIVAL NEWS: SPANISH DUO TO VISIT TT 2010 PRESS DAYS 07 FESTIVAL NEWS: THREE HEADLINE MUSIC ACTS CONFIRMED THE LAST WORD Above: Current King of the Mountain John McGuinness gives a helping hand at the Monster Energy Launch. NEW PRESENTING SPONSOR Monster Energy on board to fuel the 2010 TT Races An exciting new partner has been confirmed world’s most talented motorcycle racers and it is a privilege to be for the 2010 TT Races: associated with it.” As part of their sponsorship, the brand will live up to its reputation Energy drink Monster Energy is the latest top brand to back the TT for putting on a show in the form of music and high profile races, coming on board as the overall presenting sponsor. As part of appearances from Monster Energy endorsed ambassadors. the deal they will also endorse the Supersport Races. The TT Races will now carry the credit ‘fuelled by Monster Energy’. The company will look to bring in the Monster Army Camp, DJs as well as feeding the spectacle on the promenade alongside the Monster Energy, who also sponsor Valentino Rossi, Ken Block already scheduled entertainment programme. -

English Song Booklet

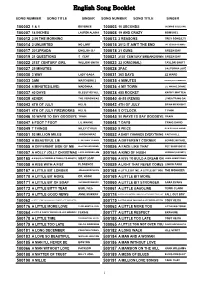

English Song Booklet SONG NUMBER SONG TITLE SINGER SONG NUMBER SONG TITLE SINGER 100002 1 & 1 BEYONCE 100003 10 SECONDS JAZMINE SULLIVAN 100007 18 INCHES LAUREN ALAINA 100008 19 AND CRAZY BOMSHEL 100012 2 IN THE MORNING 100013 2 REASONS TREY SONGZ,TI 100014 2 UNLIMITED NO LIMIT 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ 100017 2012PRADA ENGLISH DJ 100018 21 GUNS GREEN DAY 100019 21 QUESTIONS 5 CENT 100021 21ST CENTURY BREAKDOWN GREEN DAY 100022 21ST CENTURY GIRL WILLOW SMITH 100023 22 (ORIGINAL) TAYLOR SWIFT 100027 25 MINUTES 100028 2PAC CALIFORNIA LOVE 100030 3 WAY LADY GAGA 100031 365 DAYS ZZ WARD 100033 3AM MATCHBOX 2 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE 100034 4 MINUTES(LIVE) MADONNA 100036 4 MY TOWN LIL WAYNE,DRAKE 100037 40 DAYS BLESSTHEFALL 100038 455 ROCKET KATHY MATTEA 100039 4EVER THE VERONICAS 100040 4H55 (REMIX) LYNDA TRANG DAI 100043 4TH OF JULY KELIS 100042 4TH OF JULY BRIAN MCKNIGHT 100041 4TH OF JULY FIREWORKS KELIS 100044 5 O'CLOCK T PAIN 100046 50 WAYS TO SAY GOODBYE TRAIN 100045 50 WAYS TO SAY GOODBYE TRAIN 100047 6 FOOT 7 FOOT LIL WAYNE 100048 7 DAYS CRAIG DAVID 100049 7 THINGS MILEY CYRUS 100050 9 PIECE RICK ROSS,LIL WAYNE 100051 93 MILLION MILES JASON MRAZ 100052 A BABY CHANGES EVERYTHING FAITH HILL 100053 A BEAUTIFUL LIE 3 SECONDS TO MARS 100054 A DIFFERENT CORNER GEORGE MICHAEL 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND 100056 A FACE LIKE THAT PET SHOP BOYS 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM 500164 A KIND OF HUSH HERMAN'S HERMITS 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG 100058 A KISS WITH A FIST FLORENCE 100059 A LIGHT THAT NEVER COMES LINKIN PARK 500167 A LITTLE BIT LONGER JONAS BROTHERS 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES 500170 A LITTLE BIT MORE DR. -

Keith Carlock

WIN A PRIZE PACKAGE FROM SABIAN WORTH OVER … REASONS TO LOVE BILL BRUFORD • NEON TREES March The World's Drum Magazine GET GOOD: THE DRUMMERS OF ALICIA KEYS, DANGER MOUSE, COHEED, PLANT/KRAUSS ON STUDIO SOUNDS START YOUR OWN TEACHING PRACTICE Steely Dan/Wayne Krantz's KEITH CARLOCK: WHY HE'S THE MAN TO WATCH • LOU REED'S TONY "THUNDER" SMITH • JAKI LIEBEZEIT OF CAN • DOUBLE BASS SUBSTITUTE REVIEWED MODERNDRUMMER.COM GRETSCH PUREWOOD BEECH, SOULTONE OLD SCHOOLS, JOYFUL NOISE ELITE SNARES, EVANS TOM POWERCENTERS Volume 35, Number 3 • Cover photo by Rick Malkin CONTENTS 48 31 GET GOOD: STUDIO SOUNDS Four of today’s most skilled recording drummers, whose tones Rick Malkin have graced the work of Gnarls Barkley, Alicia Keys, Robert Plant & Alison Krauss, and Coheed And Cambria, among many others, share their thoughts on getting what you’re after. 40 TONY “THUNDER” SMITH Lou Reed’s sensitive powerhouse traveled a long and twisting musical path to his current destination. He might not have realized it at the time, but the lessons and skills he learned along the way prepared him perfectly for Reed’s relentlessly exploratory rock ’n’ roll. 48 KEITH CARLOCK The drummer behind platinum-selling records and SRO tours reveals his secrets on his first-ever DVD, The Big Picture: Phrasing, Improvisation, Style & Technique. Modern Drummer gets the inside scoop. 31 40 12 UPDATE 7 Walkers’ BILL KREUTZMANN EJ DeCoske STEWART COPELAND’s World Percussion Concerto Neon Trees’ ELAINE BRADLEY 16 GIMME 10! Hot Hot Heat’s PAUL HAWLEY 82 PORTRAITS The Black Keys’ PATRICK CARNEY 84 9 REASONS TO LOVE Paul La Raia BILL BRUFORD 82 84 96 -

Hyperreality in Radiohead's the Bends, Ok Computer

HYPERREALITY IN RADIOHEAD’S THE BENDS, OK COMPUTER, AND KID A ALBUMS: A SATIRE TO CAPITALISM, CONSUMERISM, AND MECHANISATION IN POSTMODERN CULTURE A Thesis Presented as Partial Fulfillment of the Requirements for the Attainment of the Sarjana Sastra Degree in English Language and Literature By: Azzan Wafiq Agnurhasta 08211141012 STUDY PROGRAM OF ENGLISH LANGUAGE AND LITERATURE DEPARTMENT OF ENGLISH LANGUAGE EDUCATION FACULTY OF LANGUAGES AND ARTS YOGYAKARTA STATE UNIVERSITY 2014 APPROVAL SHEET HYPERREALITY IN RADIOHEAD’S THE BENDS, OK COMPUTER, AND KID A ALBUMS: A SATIRE TO CAPITALISM, CONSUMERISM, AND MECHANISATION IN POSTMODERN CULTURE A THESIS By Azzan Wafiq Agnurhasta 08211141012 Approved on 11 June 2014 By: First Consultant Second Consultant Sugi Iswalono, M. A. Eko Rujito Dwi Atmojo, M. Hum. NIP 19600405 198901 1 001 NIP 19760622 200801 1 003 ii RATIFICATION SHEET HYPERREALITY IN RADIOHEAD’S THE BENDS, OK COMPUTER, AND KID A ALBUMS: A SATIRE TO CAPITALISM, CONSUMERISM, AND MECHANISATION IN POSTMODERN CULTURE A THESIS By: Azzan Wafiq Agnurhasta 08211141012 Accepted by the Board of Examiners of Faculty of Languages and Arts of Yogyakarta State University on 14 July 2014 and declared to have fulfilled the requirements for the attainment of the Sarjana Sastra degree in English Language and Literature. Board of Examiners Chairperson : Nandy Intan Kurnia, M. Hum. _________________ Secretary : Eko Rujito D. A., M. Hum. _________________ First Examiner : Ari Nurhayati, M. Hum. _________________ Second Examiner : Sugi Iswalono, M. A. _________________