Patient Safety for Ellen Patient Safety

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

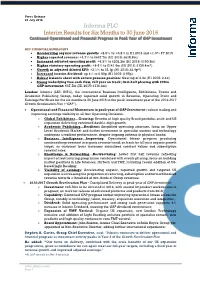

Informa PLC Interim Results for Six Months to 30 June 2016 Continued Operational and Financial Progress in Peak Year of GAP Investment

Press Release 28 July 2016 Informa PLC Interim Results for Six Months to 30 June 2016 Continued Operational and Financial Progress in Peak Year of GAP investment KEY FINANCIAL HIGHLIGHTS Accelerating organic revenue growth: +2.5% vs +0.2% in H1 2015 and +1.0% FY 2015 Higher reported revenue: +4.7% to £647.7m (H1 2015: £618.8m) Increased adjusted operating profit: +6.3% to £202.2m (H1 2015: £190.3m) Higher statutory operating profit: +8.6% to £141.6m (H1 2015: £130.4m*) Growth in adjusted diluted EPS: +3.1% to 23.1p (H1 2015: 22.4p*) Increased interim dividend: up 4% to 6.80p (H1 2015: 6.55p) Robust balance sheet with secure pension position: Gearing of 2.4x (H1 2015: 2.4x) Strong underlying free cash flow, full year on track; first-half phasing with £20m GAP investment: £67.7m (H1 2015: £116.4m) London: Informa (LSE: INF.L), the international Business Intelligence, Exhibitions, Events and Academic Publishing Group, today reported solid growth in Revenue, Operating Profit and Earnings Per Share for the six months to 30 June 2016 in the peak investment year of the 2014-2017 Growth Acceleration Plan (“GAP”). Operational and Financial Momentum in peak year of GAP Investment – robust trading and improving earnings visibility in all four Operating Divisions: o Global Exhibitions…Growing: Benefits of high quality Brand portfolio, scale and US expansion delivering continued double-digit growth; o Academic Publishing…Resilient: Simplified operating structure, focus on Upper Level Academic Market and further investment in specialist content -

Annual Copyright License for Corporations

Responsive Rights - Annual Copyright License for Corporations Publisher list Specific terms may apply to some publishers or works. Please refer to the Annual Copyright License search page at http://www.copyright.com/ to verify publisher participation and title coverage, as well as any applicable terms. Participating publishers include: Advance Central Services Advanstar Communications Inc. 1105 Media, Inc. Advantage Business Media 5m Publishing Advertising Educational Foundation A. M. Best Company AEGIS Publications LLC AACC International Aerospace Medical Associates AACE-Assn. for the Advancement of Computing in Education AFCHRON (African American Chronicles) AACECORP, Inc. African Studies Association AAIDD Agate Publishing Aavia Publishing, LLC Agence-France Presse ABC-CLIO Agricultural History Society ABDO Publishing Group AHC Media LLC Abingdon Press Ahmed Owolabi Adesanya Academy of General Dentistry Aidan Meehan Academy of Management (NY) Airforce Historical Foundation Academy Of Political Science Akademiai Kiado Access Intelligence Alan Guttmacher Institute ACM (Association for Computing Machinery) Albany Law Review of Albany Law School Acta Ecologica Sinica Alcohol Research Documentation, Inc. Acta Oceanologica Sinica Algora Publishing ACTA Publications Allerton Press Inc. Acta Scientiae Circumstantiae Allied Academies Inc. ACU Press Allied Media LLC Adenine Press Allured Business Media Adis Data Information Bv Allworth Press / Skyhorse Publishing Inc Administrative Science Quarterly AlphaMed Press 9/8/2015 AltaMira Press American -

“Class 200: New Studies in Religion”

V NEWS n New Book Series: “Class 200: New Studies in Religion” The University of Chicago Press has recently launched a new book series: “Class 200: New Studies in Religion.” The editors of the series are Kathryn Lofton (Department of Religious Studies, Yale University) and John Lardas Modern (Department of Religious Studies, Franklin & Marshall College). According to the editors, “Class 200 offers the most innovative works in the study of religion today. Resting on a generation of critical scholarship that reevaluated the central categories of the field, the series aims to surpass that good work by rebuilding the vocabulary of, and establishing new questions for, religious studies. “The series will publish authors who understand descriptions of religion to be always bound up in explanations for it. It will nurture authorial reflexivity, documentary intensity, and genealogical responsibility. The series presumes no inaugurating definition of religion other than what it is not: it is not reducible to demographics, doctrines, or cognitive mechanics. It is more than a discursive concept or cultural idiom. It is something that can be named only with a precise and poetic wrestling with the nature of its naming. “Class 200 seeks to renew the study of religion as a field of inquiry that is open in terms of disciplinary affiliation, relishes archival and ethnographic immersion, and is scrupulous in its use of categories. The series is not defined by topics but by certain shared fundamentals: rigor, an investment in language, an awareness of authority, and a strategy regarding the politics of truth claims in any archival or anthropological situation.” More information can be found at the University of Chicago Press’s website: http://www. -

Performing Video Games: Approaching Games as Musical Instruments

i PERFORMING VIDEO GAMES: APPROACHING GAMES AS MUSICAL INSTRUMENTS A Thesis Submitted to the Faculty of Purdue University by Remzi Yagiz Mungan In Partial Fulfillment of the Requirements for the Degree of Master of Fine Arts August 2013 Purdue University West Lafayette, Indiana ii to Selin iii ACKNOWLEDGEMENTS I read that the acknowledgment page might be the most important page of a thesis and dissertation and I do agree. First, I would like to thank to my committee co‐chair, Prof. Fabian Winkler, whom welcomed me to ETB with open arms when I first asked him about the program more than three years ago. In these three years, I have learned a lot from him about art and life. Second, I want to express my gratitude to my committee co‐chair, Prof. Shannon McMullen, whom helped when I got lost and supported me when I got lost again. I will remember her care for the students when I teach. Third, I am thankful to my committee member Prof. Rick Thomas for having me along the ride to Prague, teaching many things about sound along the way and providing his insightful feedback. I was happy to be around a group of great people from many areas in Visual and Performing Arts. I specially want to thank the ETB people Jordan, Aaron, Paul, Mara, Oren, Esteban and Micah for spending time with me until night in FPRD. I also want to thank the Sound Design people Ryan, Mike and Ian for our time in the basement or dance studios of Pao Hall. -

Social Media in Travel, Tourism and Hospitality New Directions in Tourism Analysis Series Editor: Dimitri Ioannides, E-TOUR, Mid Sweden University, Sweden

SOCIAL MEDIA IN TRAVEL, TOURISM AND HOSPITALITY New Directions in Tourism Analysis Series Editor: Dimitri Ioannides, E-TOUR, Mid Sweden University, Sweden Although tourism is becoming increasingly popular as both a taught subject and an area for empirical investigation, the theoretical underpinnings of many approaches have tended to be eclectic and somewhat underdeveloped. However, recent developments indicate that the field of tourism studies is beginning to develop in a more theoretically informed manner, but this has not yet been matched by current publications. The aim of this series is to fill this gap with high quality monographs or edited collections that seek to develop tourism analysis at both theoretical and substantive levels using approaches which are broadly derived from allied social science disciplines such as Sociology, Social Anthropology, Human and Social Geography, and Cultural Studies. As tourism studies covers a wide range of activities and sub fields, certain areas such as Hospitality Management and Business, which are already well provided for, would be excluded. The series will therefore fill a gap in the current overall pattern of publication. Suggested themes to be covered by the series, either singly or in combination, include – consumption; cultural change; development; gender; globalisation; political economy; social theory; sustainability. Also in the series Tourists, Signs and the City The Semiotics of Culture in an Urban Landscape Michelle M. Metro-Roland ISBN 978-0-7546-7809-0 Stories of Practice: Tourism -

Humanities Section (463KB)

UNIVERSITY OF SAN CARLOS Josef Baumgartner Learning Resource Center Humanities Section Acquisitions List FIRST SEMESTER, SY 2015 – 2016 THE ARTS Alread, Jason. (2014). Design-tech: building science for architects. 2nd edition. New York: Routledge. [720 Al77] Anderson, Kjell. (2014). Design energy simulation for architects: guide to 3D graphics. New York: Routledge. [720.472028566] Bailey, Sarah. (2014). Visual merchandising for fashion. London: Bloomsbury Academic. [746.920688 B15] Ballinger, Barbara. (2014). The kitchen bible: designing the perfect culinary space. Australia: Images Publishing. [747.797 B21] Barlis, Alan. (2013). Classic and modern: signature and styles. [San Rafael, California]: ORO Editions. [728 B24] Binggeli, Corky. (2014). Materials for interior environments. 2nd edition. Hoboken: Wiley. [729 B51] Bonda, Penny. (2014). Sustainable commercial interiors. 2nd edition. Hoboken, New Jersey: John Wiley and Sons, Inc. [725.23047 B64] Bradbury, Dominic. (2014). New Brazilian house: with 303 colour illustrations. New York, N.Y.: Thames and Hudson. [720.0981 B72] Broto, Charles. (2014). Social housing: architecture and design. Barcelona: Links International. [728.1 B79] Bluyssen, Philomena. (2014). The healthy indoor environment: how to assess occupants’ wellbeing in buildings. New York: Taylor & Francis Group. [720.286 B62] Burkhalter, Thomas. (2013). Local music scenes and globalization: transnational platforms in Beirut. New York: Routledge. [780.956925 B91] Cairns, Stephen. (2014). Buildings must die: a perverse view of architecture. Massachusetts: The MIT Press. [720.1 C12] Camuffo, Dario. (2014). Microclimate for cultural heritage: conservation, restoration, and maintenance of indoor and outdoor monuments. 2nd edition. Amsterdam: Elsevier. [702.9 C15] Cantor, Jeremy. (2014). Secrets of CG short filmmakers. Boston: Cengage Learning PTR. [777.7 C16] Cline, Lydia Sloan. -

Cosplay Culture: the Development of Interactive and Living Art Through Play

Ryerson University Digital Commons @ Ryerson Theses and dissertations 1-1-2012 Cosplay Culture: The Development of Interactive and Living Art through Play Ashley Lotecki Ryerson University, [email protected] Follow this and additional works at: http://digitalcommons.ryerson.ca/dissertations Part of the Critical and Cultural Studies Commons, and the Sociology Commons Recommended Citation Lotecki, Ashley, "Cosplay Culture: The Development of Interactive and Living Art through Play" (2012). Theses and dissertations. Paper 806. This Major Research Paper is brought to you for free and open access by Digital Commons @ Ryerson. It has been accepted for inclusion in Theses and dissertations by an authorized administrator of Digital Commons @ Ryerson. For more information, please contact [email protected]. COSPLAY CULTURE: THE DEVELOPMENT OF INTERACTIVE AND LIVING ART THROUGH PLAY by Ashley Lotecki BDes, Fashion Communications, Ryerson University, Canada, 2006 A Major Research Paper presented to Ryerson University in partial fulfillment of the requirements for the degree of Master of Arts in the Program of Fashion Toronto, Ontario, Canada, 2012 © Ashley Lotecki 2012 Author’s Declaration I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I authorize Ryerson University to lend this thesis to other institutions or individuals for the purpose of scholarly research. I further authorize Ryerson University to reproduce this thesis by photocopying or by other means, in total or in part, at the request of other institutions or individuals for the purpose of scholarly research. I understand that my thesis may be made electronically available to the public. -

Instructor Textbooks

Updated: 12/4/15 PLEASE NOTE: Now Available – E-Book version at CourseSmart Login to http://instructors.coursesmart.com to create an account to acquire free e-book desk copies. (The majority of textbooks are available at this site) Publishers Listing Academic Press – See Elsevier Addison-Wesley – See Pearson Education Allyn & Bacon/Longman – See Pearson Education Amacom – Division of American Management Association 800-250-5308 600 AMA Way, Saranac Lake, NY 12983-5534 FAX: 518-891-2372 Email: [email protected] Website: www.amacombooks.org American Psychological Association 800-374-2721 750 First Street NE, Washington, DC, 20002-4242 202-336-5500 Website: www.apa.org FAX: 202-336-5502 American Safety Training, Inc. 563-322-4942 317 W. 4th St., Davenport, IA 52801 FAX: 563-323-0804 ArTech House 800-225-9977 685 Canton Street, Norwood, MA 02062 FAX: 781-769-6334 Email: [email protected] Website: www.artechouse.com Instructor copies: complete form - http://www.artechhouse.com/Instructor.aspx Ashgate Publishing Co. 800-535-9544 P.O. Box 2225, Williston, VT 05494-2225 FAX: 802-864-7626 Website: www.ashgate.com Desk Copies: Susan Sprague [email protected] 802-276-3162 x302 Avalon Publishing Group – See Perseus Books Group Aviation Shops (AKA McGraw-Hill Aviation Week) 800-525-5003 Website: www.aviationweek.com FAX: 888-385-1428 Aviation Supplies & Academics (ASA) 800-272-2359 7005 132nd Place SE, Newcastle, WA 98059-3153 425-235-1500 Website: www.asa2fly.com Barron’s Educational Series 800-645-3476 250 Wireless Blvd., Hauppauge, NY 11788 FAX: 631-434-3723 Email contact site: [email protected] Website: www.barronseduc.com Bedford/St. -

Evolving the Information Experience

evolving the information experience InfoTools Define Explain Locate Translate Search Document... Search All Documents... Search Web Search Catalog Highlight Add to Bookshelf Copy Text... Copy Bookmark Print... Print Again Toggle Automenu Preferences... Help About ebrary Reader... Acquire and Share, Enhance Your Integrate every Customize Archive and End-user document in the your collection Distribute your Experience ebrary system of e-books and own digitized through with other digital other materials materials on our InfoTools™ and resources and from leading servers or yours other powerful information on publishers features for easily the web personalizing and organizing information Acquire and Customize your e-book selection SUBSCRIBE TO GROWING E-BOOK DATABASES WITH SIMULTANEOUS, MULTI-USER ACCESS. Academic Databases Publisher Databases Partial Publisher List for Subscription Databases Academic Complete includes all • Datamonitor academic databases listed below. • D&B International Business Reports • Ashgate Publishing • Beacon Press • Business & Economics • IGI Global InfoSci-Books • Berg Publishers • Computers & IT • Brill Academic Publishers • Education Other Databases • Brookings Institution Press • Engineering & Technology • Community College (for U.S. • Columbia University Press • History & Political Science and Canada) • Continuum International Publishing Group • Humanities • Public Library Complete • Emerald Group • Interdisciplinary & Area Studies • Online Sheet Music • Greenwood Publishing • Language, -

SPS Accepted Journals and Publishers

ANNEX A: Accepted non-ISI Journals Acta Medica Philippina Journal of the Geological Society of the Philippines Journal of the Philippine Society for Microbiology and Infectious Diseases Philippine Health Systems Research Philippine Journal of Internal Medicine Philippine Journal of Nutrition Philippine Nuclear Journal Philippine Population Review Science Diliman The Philippine Journal of Pediatrics Acta Horticulturae Geological Society of Malaysia Bulletin Journal of Environmental Science and Management Journal of Japan Society on Water Environment Journal of Southeast Asian Education LaMer Malaysian Journal of Nutrition Media Asia Nutrition Today Oceans Southeast Asian Journal on Open and Distance Learning The Journal of Maternal-Fetal and Neonatal Medicine UPV Journal of Natural Sciences ANNEX B: List of local publishers Ateneo de Manila University Press De la Salle University Press U.P. Press ANNEX C: List of international publishers A.A. Balkema Publishers (Leiden, Netherlands) American Mathematical Society American Medical Association Press AOCS Press (Champaign, Illinois) Artspace Visual Arts Centre Ltd. Ash Institute for Democratic Governance and Innovation, JFK School of Government, Harvard University Ashgate Publishing Company Asian Development Bank Aunt Lute Books (San Francisco) Backhuys Publishers (Leiden, Netherlands) Birkhauser Verlag Blackwell Publishers Ltd. Brookings Institution Press CABI Publishing (Oxon, UK) Cambridge University Press Eastern Universities Press, Singapore Elsevier Amsterdam Geological Society of London -

The Prospectus

IMPORTANT: You must read the following disclaimer before continuing. The following disclaimer applies to the attached document and you are therefore advised to read this carefully before reading, accessing or making any other use of the attached document. In accessing the document, you agree to be bound by the following terms and conditions, including any modifications to them from time to time, each time you receive any information from Informa PLC (the “Company”), Barclays Bank PLC (“Barclays”), Merrill Lynch International (“BofA Merrill Lynch”), HSBC Bank plc (“HSBC”), Banco Santander, S.A. (“Banco Santander”), BNP Paribas or Commerzbank Aktiengesellschaft, London Branch (“Commerzbank”) (each an “Underwriter” and together the “Underwriters”) as a result of such access. You acknowledge that this electronic transmission and the delivery of the attached document is confidential and intended only for you and you agree you will not forward, reproduce, copy, download or publish this electronic transmission or the attached document (electronically or otherwise) to any other person. The document and the offer when made are only addressed to and directed at persons in member states of the European Economic Area (“EEA”) who are “qualified investors” within the meaning of Article 2(1)(e) of the Prospectus Directive (Directive 2003/71/EC and amendments thereto, including Directive 2010/73/EU, to the extent implemented in the relevant Member State of the European Economic Area) and any implementing measure in each relevant Member -

INFORMA PLC (Incorporated As a Public Limited Company in England and Wales)

BASE LISTING PARTICULARS INFORMA PLC (incorporated as a public limited company in England and Wales) Guaranteed by certain other companies in the Enlarged Group £2,500,000,000 Euro Medium Term Note Programme Under the Euro Medium Term Note Programme described in this Base Listing Particulars (the "Base Listing Particulars") (the "Programme"), Informa PLC (the "Issuer"), subject to compliance with all relevant laws, regulations and directives, may from time to time issue euro medium term notes (the "Notes"). Application has been made to The Irish Stock Exchange plc trading as Euronext Dublin ("Euronext Dublin") for the Notes issued under the Programme during the period of twelve months from the date hereof to be admitted to the official list (the "Official List") and to trading on the Global Exchange Market of Euronext Dublin (the "GEM"). This offering circular has been approved by Euronext Dublin as a Base Listing Particulars. References in this Base Listing Particulars to Notes being "listed" (and all related references) shall mean that such Notes have been admitted to the Official List and to trading on the GEM. The Programme provides that Notes may be listed on such other or further stock exchange(s) as may be agreed between Issuer and the relevant Dealer (as defined below). This Base Listing Particulars does not constitute a prospectus for the purposes of Article 5 of Directive 2003/71/EC (as such directive may be amended from time to time, the "Prospectus Directive"). The Issuer is not offering the Notes in any jurisdiction in circumstances that would require a prospectus to be prepared pursuant to the Prospectus Directive.