Copyright by Linda Lou Brown 2009

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


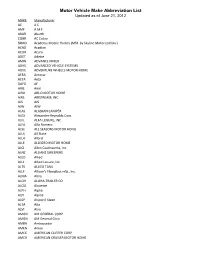

Motor Vehicle Make Abbreviation List Updated as of June 21, 2012

Motor Vehicle Make Abbreviation List, updated as of June 21, 2012

| MAKE | Manufacturer |
| --- | --- |
| AC | A C |
| AMF | A M F |
| ABAR | Abarth |
| COBR | AC Cobra |
| SKMD | Academy Mobile Homes (Mfd. by Skyline Motorized Div.) |
| ACAD | Acadian |
| ACUR | Acura |
| ADET | Adette |
| AMIN | ADVANCE MIXER |
| ADVS | ADVANCED VEHICLE SYSTEMS |
| ADVE | ADVENTURE WHEELS MOTOR HOME |
| AERA | Aerocar |
| AETA | Aeta |
| DAFD | AF |
| ARIE | Airel |
| AIRO | AIR-O MOTOR HOME |
| AIRS | AIRSTREAM, INC |
| AJS | AJS |
| AJW | AJW |
| ALAS | ALASKAN CAMPER |
| ALEX | Alexander-Reynolds Corp. |
| ALFL | ALFA LEISURE, INC |
| ALFA | Alfa Romeo |
| ALSE | ALL SEASONS MOTOR HOME |
| ALLS | All State |
| ALLA | Allard |
| ALLE | ALLEGRO MOTOR HOME |
| ALCI | Allen Coachworks, Inc. |
| ALNZ | ALLIANZ SWEEPERS |
| ALED | Allied |
| ALLL | Allied Leisure, Inc. |
| ALTK | ALLIED TANK |
| ALLF | Allison's Fiberglass mfg., Inc. |
| ALMA | Alma |
| ALOH | ALOHA-TRAILER CO |
| ALOU | Alouette |
| ALPH | Alpha |
| ALPI | Alpine |
| ALSP | Alsport/ Steen |
| ALTA | Alta |
| ALVI | Alvis |
| AMGN | AM GENERAL CORP |
| AMGN | AM General Corp. |
| AMBA | Ambassador |
| AMEN | Amen |
| AMCC | AMERICAN CLIPPER CORP |
| AMCR | AMERICAN CRUISER MOTOR HOME |
| AEAG | American Eagle |
| AMEL | AMERICAN ECONOMOBILE HILIF |
| AMEV | AMERICAN ELECTRIC VEHICLE |
| LAFR | AMERICAN LA FRANCE |
| AMI | American Microcar, Inc. |
| AMER | American Motors |
| AMER | AMERICAN MOTORS GENERAL BUS |
| AMER | AMERICAN MOTORS JEEP |
| AMPT | AMERICAN TRANSPORTATION |
| AMRR | AMERITRANS BY TMC GROUP, INC |
| AMME | Ammex |
| AMPH | Amphicar |
| AMPT | Amphicat |
| AMTC | AMTRAN CORP |
| FANF | ANC MOTOR HOME TRUCK |
| ANGL | Angel |
| API | API |
| APOL | APOLLO HOMES |
| APRI | APRILIA |
| NEWM | AR CORP. |
| ARCA | Arctic Cat |
| ARGO | Argonaut State Limousine |
| ARGS | ARGOSY TRAVEL TRAILER |
| AGYL | Argyle |
| ARIT | Arista |
| ARIS | ARISTOCRAT MOTOR HOME |
| ARMR | ARMOR MOBILE SYSTEMS, INC |
| ARMS | Armstrong Siddeley |
| ARNO | Arnolt-Bristol |
| ARRO | ARROW |
| ARTI | Artie |
| ASA | ASA |
| ARSC | Ascort |
| ASHL | Ashley |
| ASPS | Aspes |
| ASVE | Assembled Vehicle |
| ASTO | Aston Martin |
| ASUN | Asuna |
| CAT | CATERPILLAR TRACTOR CO |
| ATK | ATK America, Inc. |

The Washington August 2020

The Washington, August 2020. Greater Seattle Chapter SDC, founded in 1969. Volume 50, Number 8.

Not much to report this month either. "Cancelled" seems to be the slogan these days.

The editor is continuing on his 51 project, but going slow. Got the trunk lid straightened out and painted. It had a lot more small dents and imperfections than I realized. It is on the car but still has a hinge problem, which seems to be common with worn hinges. For now I have put it aside to work on another day. Most of my time has been taken up with things around the house, like fence repair to keep deer and rabbits out of the garden. We really prefer to eat the vegetables ourselves. And then there was pressure washing and painting decks and patios, not any of my fun projects. The painting was hired out to Greta, and she did a good job on the deck: 4 gallons' worth.

Your editor (temporary, that is)

From Gary Finch: the dealer that Dad managed, Packer's Corner, owned by Don Packer, a family-owned Studebaker dealer since 1924.

The Washington President. AUGUST MEETING CANCELLED.

2020 Greater Seattle Chapter Upcoming Events

| Month | Date | Event | Location | Time | Information & Contact |
| --- | --- | --- | --- | --- | --- |
| Aug | 16 | BBQ and car show | 14810 SE Jones Pl, Renton | | CANCELLED |
| Sep | 5 | Cruise and car show | Bickleton, WA | | CANCELLED |
| Oct | 11 | Fall Color Tour | | | Don Albrecht 425-392-7611 |
| Nov | 15 | Election Meeting | 29902 176 Ave SE, Kent | | Noller's hosting |
| Dec | 6 | Christmas Party | Sizzler, South Center | 1PM | GSC hosting |

Upcoming swap meets

| Month | Date | Location | Information & Contact |
| --- | --- | --- | --- |
| Sep | 5 | Bickleton, WA | Flea market and car show, CANCELLED |
| Sep | 25-26 | Chehalis, WA | Swap meet |
| Oct | 10-11 | Monroe | Fall swap meet |
| Nov | 7-8 | Bremerton | Swap meet |

Internationals: August 5-8, 2020, 56th SDC International, Chattanooga, Tenn. CANCELLED.

Karl E. Ludvigsen Papers, 1905-2011. Archival Collection 26

Karl E. Ludvigsen papers, 1905-2011. Archival Collection 26. Miles Collier Collections.

Title: Karl E. Ludvigsen papers, 1905-2011.

Creator: Ludvigsen, Karl E.

Call Number: Archival Collection 26

Quantity: 931 cubic feet (514 flat archival boxes, 98 clamshell boxes, 29 filing cabinets, 18 record center cartons, 15 glass plate boxes, 8 oversize boxes).

Abstract: The Karl E. Ludvigsen papers, 1905-2011, contain his extensive research files, photographs, and prints on a wide variety of automotive topics. The papers reflect the complexity and breadth of Ludvigsen's work as an author, researcher, and consultant. Approximately 70,000 of his photographic negatives have been digitized and are available on the Revs Digital Library. Thousands of undigitized prints in several series are also available, but the copyright status of many of the images is unclear. Ludvigsen's research files are divided into two series, Subjects and Marques, each focusing on technical aspects. The files were mostly clipped or copied from newspapers, trade publications, and manufacturers' literature, but there are occasional blueprints and photographs. Some of the files include Ludvigsen's consulting research and the records of his Ludvigsen Library.

Scope and Content Note: The Karl E. Ludvigsen papers are organized into eight series. The series largely reflect Ludvigsen's original filing structure for paper and photographic materials.

Series 1. Subject Files [11 filing cabinets and 18 record center cartons]: The Subject Files contain documents compiled by Ludvigsen on a wide variety of automotive topics, and are in general alphabetical order.

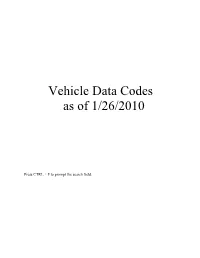

H:\My Documents\Article.Wpd

Vehicle Data Codes as of 1/26/2010

Press CTRL + F to prompt the search field.

VEHICLE DATA CODES TABLE OF CONTENTS

1--LICENSE PLATE TYPE (LIT) FIELD CODES

1.1 LIT FIELD CODES FOR REGULAR PASSENGER AUTOMOBILE PLATES

1.2 LIT FIELD CODES FOR AIRCRAFT

1.3 LIT FIELD CODES FOR ALL-TERRAIN VEHICLES AND SNOWMOBILES

1.4 SPECIAL LICENSE PLATES

1.5 LIT FIELD CODES FOR SPECIAL LICENSE PLATES

2--VEHICLE MAKE (VMA) AND BRAND NAME (BRA) FIELD CODES

2.1 VMA AND BRA FIELD CODES

2.2 VMA, BRA, AND VMO FIELD CODES FOR AUTOMOBILES, LIGHT-DUTY VANS, LIGHT-DUTY TRUCKS, AND PARTS

2.3 VMA AND BRA FIELD CODES FOR CONSTRUCTION EQUIPMENT AND CONSTRUCTION EQUIPMENT PARTS

2.4 VMA AND BRA FIELD CODES FOR FARM AND GARDEN EQUIPMENT AND FARM EQUIPMENT PARTS

2.5 VMA AND BRA FIELD CODES FOR MOTORCYCLES AND MOTORCYCLE PARTS

2.6 VMA AND BRA FIELD CODES FOR SNOWMOBILES AND SNOWMOBILE PARTS

2.7 VMA AND BRA FIELD CODES FOR TRAILERS AND TRAILER PARTS

2.8 VMA AND BRA FIELD CODES FOR TRUCKS AND TRUCK PARTS

2.9 VMA AND BRA FIELD CODES ALPHABETICALLY BY CODE

3--VEHICLE MODEL (VMO) FIELD CODES

3.1 VMO FIELD CODES FOR AUTOMOBILES, LIGHT-DUTY VANS, AND LIGHT-DUTY TRUCKS

3.2 VMO FIELD CODES FOR ASSEMBLED VEHICLES

3.3 VMO FIELD CODES FOR AIRCRAFT

3.4 VMO FIELD CODES FOR ALL-TERRAIN VEHICLES

3.5 VMO FIELD CODES FOR CONSTRUCTION EQUIPMENT

3.6 VMO FIELD CODES FOR DUNE BUGGIES

3.7 VMO FIELD CODES FOR FARM AND GARDEN EQUIPMENT

3.8 VMO FIELD CODES FOR GO-CARTS

3.9 VMO FIELD CODES FOR GOLF CARTS

3.10 VMO FIELD CODES FOR MOTORIZED RIDE-ON TOYS

3.11 VMO FIELD CODES FOR MOTORIZED WHEELCHAIRS

3.12

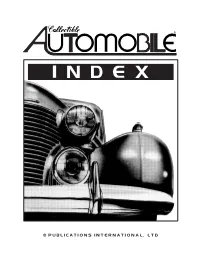

COLLECTIBLE AUTOMOBILE® INDEX Current Through Volume 37 Number 6, April 2021

COLLECTIBLE AUTOMOBILE® INDEX, current through Volume 37 Number 6, April 2021. © Publications International, Ltd.

CONTENTS: Features; Photo Features; Future Collectibles; Cheap Wheels; Collectible Commercial Vehicles; Collectible Canadian Vehicles; Neoclassics; Special Articles; Styling Studies; Personality Profiles, Interviews; Museum Pass; Collectible Automobilia; Reflected Light; Book Reviews; Videos.

FEATURES

| Feature | Author | Pg. | Vol. | Date |
| --- | --- | --- | --- | --- |
| Alfa Romeo: 1954-65 Giulia and Giulietta | Ray Thursby | 58 | 19#6 | Apr 03 |
| Allard: 1949-54 J2 and J2-X | Dean Batchelor | 28 | 7#6 | Apr 91 |
| Allstate: 1952-53 | Richard M. Langworth | 66 | 9#2 | Aug 92 |
| AMC: 1959-82 Foreign Markets | Patrick Foster | 58 | 22#1 | Jun 05 |
| AMC: 1965-67 Marlin | John A. Conde | 60 | 5#1 | Jun 88 |
| AMC: 1967-68 Ambassador | Patrick Foster | 48 | 20#1 | Jun 03 |
| AMC: 1967-70 Rebel | Patrick Foster | 56 | 29#6 | Apr 13 |
| AMC: 1968-70 AMX | John A. Conde | 26 | 1#2 | Jul 84 |
| AMC: 1968-74 Javelin | Richard M. | | | |
| Buick: 1962-96 V-6 | Richard Popely | 24 | 12#4 | Dec 95 |
| Buick: 1963-65 Riviera | James W. Howell & Dick Nesbitt | 8 | 2#1 | May 85 |
| Buick: 1964-67 Special/Skylark | Don Keefe | 42 | 32#2 | Aug 15 |
| Buick: 1964-72 Sportwagon and Oldsmobile Vista-Cruiser | John Heilig | 8 | 21#5 | Feb 05 |
| Buick: 1965-66 | John Heilig | 26 | 20#6 | Apr 04 |
| Buick: 1965-67 Gran Sport | John Heilig | 8 | 18#5 | Feb 02 |
| Buick: 1966-70 Riviera | Michael Lamm | 8 | 9#3 | Oct 92 |

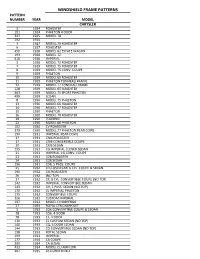

Windshield Frame Patterns

WINDSHIELD FRAME PATTERNS PATTERN NUMBER YEAR MODEL CHRYSLER 3 1924 ROADSTER 191 1924 PHAETON 4 DOOR 347 1925 MODEL 70 192 1925 3 1927 MODEL 70 ROADSTER 6 1927 ROADSTER 450 1928 MODEL 62 ESTATE WAGON 193 1928 MODEL 72 416 1928 IMPERIAL 3 1928 MODEL 72 ROADSTER 7 1929 MODEL 75 ROADSTER 8 1929 MODEL 75 CONV. COUPE 9 1929 PHAETON 10 1929 MODEL 65 ROADSTER 11 1929 PHAETON TONNEAU FRAME 12 1929 MODEL 77 TONNEAU FRAME 128 1929 MODEL 65 ROADSTER 463 1929 MODEL 75 SPORT PHAETON 499 1929 SEDAN 8 1930 MODEL 75 PHAETON 13 1930 MODEL 66 ROADSTER 14 1930 MODEL 77 ROADSTER 15 1930 PHAETON 16 1930 MODEL 70 ROADSTER 19 1930 TOURING 22 1930 MODEL 66 PHAETON 102 1930 CV ROADSTER 379 1930 MODEL 77 PHAETON REAR COWL 194 1931 IMPERIAL REAR COWL 17 1931 CM6 ROADSTER 17 1931 CM6 CONVERTIBLE COUPE 20 1931 CM6 SEDAN 195 1931 CG IMPERIAL CLOSED SEDAN 21 1931 IMPERIAL CG CONV. COUPE 23 1931 CD8 ROADSTER 24 1931 CD8 SEDAN 196 1931 CD8, 5 PASS. COUPE 25 1932 CI CLOSED CAR, 6 CYL. COUPE & SEDAN 190 1932 CI6 ROADSTER 26 1932 (NO TOP) 37 1932 CP, 8 CYL. CONVERTIBLE COUPE (NO TOP) 142 1932 IMPERIAL CONVERTIBLE SEDAN 143 1932 CH, 5 PASS. SEDAN (NO TOP) 170 1932 CL IMPERIAL PHAETON 175 1932 CONVERTIBLE COUPE 326 1932 CUSTOM IMPERIAL 197 1932 MODEL CH IMPERIAL 27 1933 ROYAL CT8 CABRIOLET 5 1933 CO6 CONVERTIBLE COUPE & SEDAN 78 1933 CO6, 4 DOOR 78 1933 CT, 4 DOOR 130 1933 CL CUSTOM SEDAN (NO TOP) 140 1933 CQ, 4 DOOR SEDAN 144 1933 CO CONVERTIBLE SEDAN (NO TOP) 198 1933 ROYAL SEDAN 199 1933 IMPERIAL 177 1934 CA COUPE 200 1934 CA SEDAN 433 1934 MODEL CU AIRFLOW 207 1935 C6 CONVERTIBLE 201 1935 C6 AIRSTREAM COUPE 202 1937 MODEL C15 203 1938 CONVERTIBLE COUPE PLYMOUTH 204 1929 ROADSTER 102 1929 MODEL 65 ROADSTER 19 1930 PA SEDAN 117 1930 MODEL U ROADSTER 155 1 1931 MODEL PA COUPE & SEDAN 17 1931 MODEL PA ROADSTER 17 1931 CONVERTIBLE COUPE 20 1931 COUPE & SEDAN 1 1932 MODEL PB COUPE, CONV. -

Appraisal Report

APPRAISAL REPORT: 1937 Ford 1/2-Ton Pickup

Prepared for: Ford 1/2-Ton Client

A professional valuation service for all types of collector vehicles. ©2006 Auto Appraisal Network, Inc. All rights reserved. 19 Spectrum Pointe Dr., Ste. 605, Lake Forest, CA 92630. (949) 387-7774 or fax (949) 387-7775.

3/29/2013

Ford 1/2-Ton Client, 123 Main St, Tulsa, OK 74136

APPRAISAL REPORT: 1937 Ford 1/2-Ton Pickup Truck

To Whom It May Concern:

In compliance with the recent request, we have appraised the above-referenced vehicle. We have enclosed the limited appraisal report that constitutes our analysis and conclusion of the research completed on this vehicle. The Replacement Value of this vehicle, as of the date specified herein, was $35,700.00.

Thank you very much for your business. If you have any further questions, please do not hesitate to call.

Very truly yours,

7TO13491955

PURPOSE AND SCOPE OF REPORT

PURPOSE: The purpose of this document is to report to the customer the appraiser's opinion of the overall condition of the subject vehicle.

MINIMUM DIMINISHED VALUE: The Minimum Diminished Value of the vehicle is the minimum amount of money that an automobile is estimated to have depreciated in value due to a particular accident, mishap, event, or circumstance. It is based on wholesale market pricing and the standard sliding scale established by the International Society of Automotive Appraisers, or on a fair market offer to purchase after the date of loss.

VTR-249 Standard Abbreviations for Vehicle Makes and Body Styles

Standard Abbreviations for Vehicle Makes and Body Styles

Automobiles, Buses, and Light Trucks

| Make | Code | Make | Code | Make | Code |
| --- | --- | --- | --- | --- | --- |
| ACURA | ACUR | FERRARI | FERR | MINI | MNNI |
| ALFA ROMEO | ALFA | FIAT | FIAT | MITSUBISHI | MITS |
| ALL STATE | ALLS | FISKER | FSKR | MONARCH | MONA |
| AMBASSADOR | AMER | FORD | FORD | MORGAN | MORG |
| AMERICAN | AMER | FORMERLY YUGO | ZCZY | MORRIS | MORR |
| ASSEMBLED | ASVE | FRAZIER | FRAZ | NASH | NASH |
| ASTON-MARTIN | ASTO | GEO | GEO | NISSAN | NISS |
| AUBURN | AUBU | GMC | GMC | OLDSMOBILE | OLDS |
| AUDI | AUDI | HILLMAN | HILL | OPEL | OPEL |
| AUSTIN | AUST | HOMEMADE | HMDE | PACKARD | PACK |
| AUSTIN-HEALY | AUHE | HONDA | HOND | PEUGEOT | PEUG |
| AUTOCAR | AUTO | HUDSON | HUDS | PIERCE ARROW | PRCA |
| AVANTI | AVTI | | | | |

Applications & Specifications Utilisations Et Spe

Principal Applications & Specifications / Utilisations et spécifications principales / Aplicaciones y especificaciones principales

English: A – Fastest moving items; B – Fast moving items; C – Good moving items; D – Fair moving items; N – New; R – Racing; S – Specialized applications; W – Slow moving items; L – Availability limited to existing inventory. Consult your representative for details.

French: A – Articles à plus forte rotation; B – Articles à forte rotation; C – Articles à rotation normale; D – Articles à rotation acceptable; N – Nouveaux articles; R – Compétition; S – Applications spécialisées; W – Articles à faible rotation; L – La disponibilité se limite au stock existant. Consultez votre représentante ou représentant pour tous les détails.

Spanish: A – Artículos de salida más rápida; B – Artículos de salida rápida; C – Artículos de salida constante; D – Artículos de salida intermedia; N – Nuevo; R – Competición; S – Aplicaciones especializadas; W – Artículos de salida lenta; L – Disponibilidad limitada al inventario existente. Consulte con su representante para obtener mayores detalles.

Table column headings (English): POP; Spark Plug No.; Principal Application; Thread Diameter; Reach; Hex; Seat; Tip; Resistor/Non-Resistor/Suppressor; Heat Range Chart; Special Features.

Table column headings (French): POP; Numéro de bougie; Applications principales; Diamètre du filetage; Longueur du filetage; Écrou hexagonal; Siège; Pointe; Résistance/Sans résistance/Antiparasitage; Tableau des températures; Caractéristiques spéciales.

Table column headings (Spanish): Número; Aplicaciones principales; Diámetro de; Alcance; Hex; Asiento; Punta; Resistencia/Sin-Resistor/; Tabla; Características

Report No. Cl36735-1 Michigan Department of Licensing

REPORT NO. CL36735-1: 'C' SALES BY DESCENDING SALES

Michigan Department of Licensing & Regulatory Affairs, Liquor Control Commission. Period covered: Jan thru Dec 2017 (01/01/2017 thru 12/31/2017). Date produced: 03/08/2018.

| License | Bus ID | City | Name of Business | Sales |
| --- | --- | --- | --- | --- |
| 01-002944 | 1885 | Grand Rapids | THE B.O.B. | 752,977.98 |
| 01-280984 | 249412 | Detroit | LITTLE CAESARS ARENA | 693,284.23 |
| 01-250700 | 239108 | Dearborn | THE PANTHEION CLUB | 532,028.60 |
| 01-249170 | 238177 | Detroit | LEGENDS DETROIT | 504,695.69 |
| 01-005386 | 3518 | Detroit | FLOOD'S | 488,822.05 |
| 01-256665 | 234992 | Detroit | STANDBY DETROIT, THE SKIP | 459,768.53 |
| 01-074686 | 129693 | Detroit | COMERICA PARK COMPLEX AND BALL PARK | 447,909.90 |
| 01-256954 | 240691 | Detroit | THE COLISEUM | 425,835.72 |
| 01-176176 | 211480 | Southfield | .7 BAR GRILL | 410,974.43 |
| 01-171141 | 208056 | Walled Lake | UPTOWN GRILLE, LLC | 410,096.90 |
| 01-205709 | 190692 | Detroit | THE PENTHOUSE CLUB | 403,166.39 |
| 01-009249 | 6026 | West Bloomfield | SHENANDOAH GOLF & COUNTRY CLUB | 395,079.24 |
| 01-212753 | 225737 | Ferndale | WOODWARD IMPERIAL | 381,516.39 |
| 01-222359 | 223327 | Royal Oak | FIFTH AVENUE | 376,272.34 |
| 01-244491 | 236827 | Detroit | PUNCH BOWL SOCIAL-DETROIT | 362,291.41 |
| 01-252261 | 238977 | Detroit | TOWNHOUSE | 336,972.86 |
| 01-230927 | 232496 | Birmingham | MARKET NORTH END | 331,212.36 |
| 01-192070 | 223065 | Detroit | RUB BBQ | 330,264.52 |
| 01-187582 | 214921 | Detroit | STARTERS BAR & GRILL AT STUDIO ONE | 322,071.46 |
| 01-007005 | 4566 | Detroit | NIKKI'S PIZZA | 321,873.10 |
| 01-000586 | 381 | Detroit | GOLDEN FLEECE | 320,830.50 |
| 01-107843 | 132852 | Royal Oak | O'TOOLES TAVERN | 317,264.91 |
| 01-267109 | 244173 | Southfield | DUO | |

SPAIN COUNTRY READER TABLE of CONTENTS William C. Trimble 1931-1932 Consular Practice, Seville Murat Williams 1939 Private Secre

SPAIN COUNTRY READER

TABLE OF CONTENTS

| Name | Years | Position |
| --- | --- | --- |
| William C. Trimble | 1931-1932 | Consular Practice, Seville |
| Murat Williams | 1939 | Private Secretary to U.S. Ambassador to Spain, Madrid |
| Niles W. Bond | 1942-1946 | Political Officer, Madrid |
| William B. Dunham | 1945-1954 | Country Specialist, Washington, DC |
| Thomas J. Corcoran | 1948-1950 | Consular Officer, Barcelona |
| James N. Cortada | 1949-1951 | Consular Officer, Barcelona |
| John Wesley Jones | 1949-1953 | First Secretary, Political Officer, Madrid |
| Herbert Thompson | 1949-1954 | Rotation Officer, Madrid |
| Terrence George Leonhardy | 1949-1955 | Economic Officer, Madrid |
| Carl F. Norden | 1952 | Commercial Counselor, Madrid |
| Stuart W. Rockwell | 1952-1955 | Political Section Chief, Madrid |
| Edward S. Little | 1952-1956 | Foreign Service Reserve Officer, Madrid |
| John F. Correll | 1952-1956 | Labor Attaché, Madrid |
| Roy R. Rubottom, Jr. | 1953-1956 | Economic Counselor, Madrid |
| Joseph McEvoy | 1954-1959 | Public Affairs Officer, USIS, Madrid |
| William W. Lehfeldt | 1955-1957 | Vice Consul, Bilbao |
| Stanley J. Donovan | 1955-1960 | Strategic Air Command, Madrid |
| John Edgar Williams | 1956-1960 | Visa/Economic Officer, Madrid |
| William K. Hitchcock | 1956-1960 | Special Assistant to Ambassador, Madrid |
| Milton Barall | 1957-1960 | Economic Counselor, Madrid |
| Harry Haven Kendall | 1957-1961 | Information Officer, USIS, Madrid |
| Charles W. Grover | 1958-1960 | Vice Consul, Valencia |
| Selwa Roosevelt | 1958-1961 | Spouse of Archie Roosevelt, Station Chief, Madrid |
| Phillip W. Pillsbury, Jr. | 1959-1960 | Junior Officer Trainee, USIS, Madrid |
| Frederick H. Sacksteder | 1959-1961 | Aide to Ambassador, Madrid |
| Elinor Constable | 1959-1961 | Spouse of Peter Constable, Vice Consul, Vigo |
| Peter Constable | 1959-1961 | Vice Consul, Vigo |
| Allen C. | | |

Automobile Quarterly Index

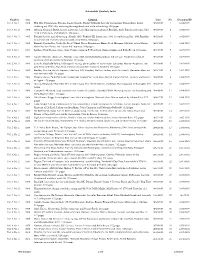

Automobile Quarterly Index

| Number | Year | Contents | Date | No. | DocumentID |
| --- | --- | --- | --- | --- | --- |
| Vol. 1 No. 1 | 1962 | Phil Hill, Pininfarina's Ferraris, Luigi Chinetti, Barney Oldfield, Lincoln Continental, Duesenberg, Leslie Saalburg art, 1750 Alfa, motoring thoroughbreds and art in advertising. 108 pages. | 1962:03:01 | 1 | 1962.03.01 |
| Vol. 1 No. 2 | 1962 | Sebring, Ormond Beach, luxury motorcars, Lord Montagu's museum at Beaulieu, early French motorcars, New York to Paris races and Montaut. 108 pages. | 1962:06:01 | 2 | 1962.06.01 |
| Vol. 1 No. 3 | 1962 | Packard history and advertising, Abarth, GM's Firebird III, dream cars, 1963 Corvette Sting Ray, 1904 Franklin race, Cord and Harrah's Museum with art portfolio. 108 pages. | 1962:09:01 | 3 | 1962.09.01 |
| Vol. 1 No. 4 | 1962 | Renault; Painter Roy Nockolds; Front Wheel Drive; Pininfarina; Henry Ford Museum; Old 999; Aston Martin; fiction by Ken Purdy: the "Green Pill" mystery. 108 pages. | 1962:12:01 | 4 | 1962.12.01 |
| Vol. 2 No. 1 | 1963 | LeMans, Ford Racing, Stutz, Char-Volant, Clarence P. Hornburg, three-wheelers and Rolls-Royce. 116 pages. | 1963:03:01 | 5 | 1963.03.01 |
| Vol. 2 No. 2 | 1963 | Stanley Steamer, steam cars, Hershey swap meet, the Duesenberg Special, the GT Car, Walter Gotschke art portfolio, duPont and tire technology. 126 pages. | 1963:06:01 | 6 | 1963.06.01 |
| Vol. 2 No. 3 | 1963 | Lincoln, Ralph De Palma, Indianapolis racing, photo gallery of Indy racers, Lancaster, Haynes-Apperson, the Jack Frost collection, Fiat, Ford, turbine cars and the London to Brighton 120 pages. | 1963:09:01 | 7 | 1963.09.01 |