Autotuning Programs with Algorithmic Choice by Jason Ansel

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Role of Ansel in Tracy Letts' Killer Joe: a Production Thesis in Acting

Louisiana State University LSU Digital Commons LSU Master's Theses Graduate School 2003 The oler of Ansel in Tracy Letts' Killer Joe: a production thesis in acting Ronald William Smith Louisiana State University and Agricultural and Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_theses Part of the Theatre and Performance Studies Commons Recommended Citation Smith, Ronald William, "The or le of Ansel in Tracy Letts' Killer Joe: a production thesis in acting" (2003). LSU Master's Theses. 3197. https://digitalcommons.lsu.edu/gradschool_theses/3197 This Thesis is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Master's Theses by an authorized graduate school editor of LSU Digital Commons. For more information, please contact [email protected]. THE ROLE OF ANSEL IN TRACY LETTS’ KILLER JOE: A PRODUCTION THESIS IN ACTION A Thesis Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the Requirements for the degree of Master of Fine Arts in The Department of Theatre by Ronald William Smith B.S., Clemson University, 2000 May 2003 Acknowledgements I would like to thank my professors, Bob and Annmarie Davis, Jo Curtis Lester, Nick Erickson and John Dennis for their knowledge, dedication, and care. I would like to thank my classmates: Deb, you are a friend and I’ve learned so much from your experiences. Fire, don’t ever lose the passion you have for life and art, it is beautiful and contagious. -

FY16 Purchase Orders

PO Numbe PO Date Quantity Unit Price PO Description Vendor Name Vendor Address1 Vendor City Vendor StateVendor Zip 16000001 07/01/2015 1.00$ 135.00 Chesapeake Waste CHESAPEAKE WASTE IND LLC PO BOX 2695 SALISBURY MD 21802 16000002 07/01/2015 1.00$ 204.67 FOR BID AWARD MAILING SERVICES MAIL MOVERS PO BOX 2494 SALISBURY MD 21802-2494 16000002 07/01/2015 1.00$ 7,000.00 FOR BID AWARD MAILING SERVICES MAIL MOVERS PO BOX 2494 SALISBURY MD 21802-2494 16000003 07/01/2015 1.00$ 260.00 BLANKET PO FOR MEMBERSHIP DUES CLIENT PROTECTION FUND OF THE BAR OF MD 200 HARRY S TRUMAN PKWY ANNAPOLIS MD 21401 16000004 07/01/2015 1.00$ 5,000.00 BLANKET PO FOR INSURANCE DEDUC LOCAL GOVERNMENT INS TRUST 7225 PARKWAY DR HANOVER MD 21076 16000005 07/01/2015 1.00$ 350.00 BLANKET PO FOR MEMBERSHIP DUES MD STATE BAR ASSOCIATION PO BOX 64747 BALTIMORE MD 21264-4747 16000006 07/01/2015 1.00$ 115,433.00 Replacement Chiller THE TRANE COMPANY PO BOX 406469 ATLANTA GA 30384 16000007 07/01/2015 1.00$ 500.00 BLANKET PO FOR LEGAL ADVERTISI THE DAILY TIMES PO BOX 742621 CINCINNATI OH 45274-2621 16000008 07/01/2015 1.00$ 5,000.00 BLANKET PO FOR INSURANCE DEDUC TRAVELERS 13607 COLLECTIONS CENTER DR CHICAGO IL 60693 16000009 07/01/2015 1.00$ 6,325.00 BLANKET PO FOR LEGAL DATABASE THOMSON REUTERS-WEST PUBLISHING CORP WEST PAYMENT CENTER CAROL STREAM IL 60197-6292 16000010 07/01/2015 1.00$ 100.00 BLANKET PO FOR MEMBERSHIP DUES WICOMICO COUNTY BAR ASSOCIATION PO BOX 4394 SALISBURY MD 21803-0389 16000011 07/01/2015 1.00$ 555.00 BLANKET PO FOR MEMBERSHIP DUES IMLA - INTERNATIONAL MUNICIPAL -

Permitting Activity 01/01/2020 Thru 8/2/2020 Date of Report: 08/03/2020 L-BUILD ONLY - Grouped by Type Then Subtype Page 1 of 228

Permitting Activity 01/01/2020 Thru 8/2/2020 Date of Report: 08/03/2020 L-BUILD ONLY - Grouped by Type then Subtype Page 1 of 228 Issued App. Permit Sq- Total Fees Date Status Number Sub Type Type Valuation Feet Contractor Owner Address Total Fees Due Commercial Permits: Addition Permits: LB2000140 Addition Commercial $30,000.00 518 L&L CONSTRUCTION SERVICESBANNERMAN LLC CROSSINGS II6668 LLC Thomasville Rd $287.46 $0.00 3/12/20 Issued LB2000383 Addition Commercial $95,000.00 2800 COMMERCIAL REPAIR & RENOVATIONSWESTON TRAWICK INC. INC 5392 Tower Rd $1,954.57 $0.00 5/7/20 Issued LB2000386 Addition Commercial $17,000.00 748 ADVANCED AWNING & DESIGN,JNC REALTY LLC ENTERPRISES6800 INC Thomasville Rd, Ste 106 $412.88 $0.00 4/20/20 Issued Addition Totals: 3 $142,000.00 $2,654.91 $0.00 Alteration Permits: LB1801185 Alteration Commercial $12,000.00 BETACOM INCORPORATEDTALL TIMBERS RESEARCH, 4000INC County Rd 12 $224.17 $0.00 2/10/20 Issued LB1802065 Alteration Commercial $40,000.00 4021 HILL DEVELOPMENT MANAGEMENT,NGUYEN LOAN LLC T 4979 Blountstown Hwy $3,917.67 $0.00 3/11/20 Issued LB1900627 Alteration Commercial $75,000.00 THE DEEB COMPANIES CAMELLIA OAKS LLC 4330 Terebinth Trl $2,377.76 $0.00 5/5/20 Certificate of Occupancy LB1901330 Alteration Commercial $250,000.00 SOUTHEAST INDUSTRIAL RENTALCOLE CS AND TALLAHASSEE CLEANING FL INC 4067LLC Lagniappe Way $3,090.38 $0.00 2/3/20 Issued LB1901550 Alteration Commercial $200,000.00 WSD ENGINEERING GLOBAL SIGNAL ACQUISITIONS2008 IV Mckee LLC Rd $2,548.65 $0.00 6/9/20 Issued LB1901889 Alteration Commercial $56,050.00 1120 TAYLOR CONSTRUCTION &3219 RENOVATION TALLAHASSEE LLC 3219 N Monroe St $1,981.57 $0.00 2/17/20 Issued LB1902034 Alteration Commercial $20,632.00 2008 CHAPMAN CANOPY INCORPORATEDRIVERS RD EXPRESS #15 LLC6330 Crawfordville Rd $1,827.13 $0.00 2/5/20 Issued LB1902154 Alteration Commercial $20,000.00 SAC WIRELESS LLC CROWN CASTLE USA 1478 Capital Cir NW $568.64 $0.00 2/26/20 Issued LB1902168 Alteration Commercial $400,000.00 OLIVER SPERRY RENOVATIONLEON AND COUNTY CONSTRUCTION,1583 INC. -

Ansel Adams in the Human and Natural Environments of Yosemite

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Journal of the National Collegiate Honors Council --Online Archive National Collegiate Honors Council Spring 2005 Seeing Nature: Ansel Adams in the Human and Natural Environments of Yosemite Megan McWenie University of Arizona, [email protected] Follow this and additional works at: https://digitalcommons.unl.edu/nchcjournal Part of the Higher Education Administration Commons McWenie, Megan, "Seeing Nature: Ansel Adams in the Human and Natural Environments of Yosemite" (2005). Journal of the National Collegiate Honors Council --Online Archive. 161. https://digitalcommons.unl.edu/nchcjournal/161 This Article is brought to you for free and open access by the National Collegiate Honors Council at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Journal of the National Collegiate Honors Council --Online Archive by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. Portz-Prize-Winning Essay, 2004 55 SPRING/SUMMER 2005 56 JOURNAL OF THE NATIONAL COLLEGIATE HONORS COUNCIL MEGAN MCWENIE Portz Prize-Winning Essay, 2004 Seeing Nature: Ansel Adams in the Human and Natural Environments of Yosemite MEGAN MCWENIE UNIVERSITY OF ARIZONA allace Stegner once hailed the legacy of Ansel Adams as bringing photography Wto the world of art as a unique “way of seeing.”1 What Adams saw through the lens of his camera, and what audiences see when looking at one of his photographs, does indeed constitute a particular way of seeing the world, a vision that is almost always connected to the natural environment. An Ansel Adams photograph evokes more than an aesthetic response to his work—it also stirs reflections about his involvement in the natural world that was so often his subject. -

Sorting Algorithm 1 Sorting Algorithm

Sorting algorithm 1 Sorting algorithm A sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) which require input data to be in sorted lists; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions: 1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order); 2. The output is a permutation (reordering) of the input. Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2006). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and upper and lower bounds. Classification Sorting algorithms are often classified by: • Computational complexity (worst, average and best behavior) of element comparisons in terms of the size of the list (n). For typical serial sorting algorithms good behavior is O(n log n), with parallel sort in O(log2 n), and bad behavior is O(n2). -

Ansel Adams Wilderness Bass Lake Ranger District

PACIFIC SOUTHWEST REGION Restoring, Enhancing and Sustaining Forests in California, Hawaii and the Pacific Islands Sierra National Forest Ansel Adams Wilderness Bass Lake Ranger District The Ansel Adams Wilderness is a diverse and Southern portions of the Wilderness provide forests spectacular area comprised of 228,500 acres of huge pine and fir where few people visit. draped along the crest of the Sierra Nevada Highway 41 and 168 access western slope trail- within the Sierra and Inyo National Forests. This heads while Highways 120 (Tioga Pass through Yo- wilderness ecosystem includes a number of lake semite National Park) and Highway 395 access trail- and stream systems that are the headwaters of the heads on the East side of the Sierra. San Joaquin River. Vegetation is mixed conifer- Commercial pack stations provide services from ous and deciduous forests of pine and oak in low Agnew and Reds Meadow (Inyo National Forest) on elevation, and sub-alpine forests of lodgepole the eastern side of the range, and from Miller pine, mountain hemlock and red fir. Alpine Meadow and Florence and Edison Lakes (Sierra Na- meadows grace the higher elevations with wild- tional Forest) on the western slope. flowers and crystal streams. Elevations range from hot dry canyons at REGULATIONS AND PERMIT REQUIRE- 3,500 feet in the San Joaquin River gorge to MENTS 13,157 foot Mount Ritter. Precipitation is from A wilderness Visitor Permit is required for all over- 18 to 50 inches, with snow depth averages about night trips into the wilderness. Important travel in- 171 inches. formation is available concerning bear encounters, The John Muir Trail, which starts in Yosem- fire danger, current weather, snow and hazard condi- ite National Park, crosses Donahue Pass (11,056 tions, and to assist visitors in understanding how to feet), into the Ansel Adams Wilderness and south properly use the wilderness and leave no trace. -

Automatic Algorithm Recognition Based on Programming Schemas and Beacons Aalto University

Department of Computer Science and Engineering Aalto- Ahmad Taherkhani Taherkhani Ahmad DD 17 Automatic Algorithm / 2013 2013 Recognition Based on Automatic Algorithm Recognition Based on Programming Schemas and Beacons and Beacons Schemas Programming on Based Recognition Algorithm Automatic Programming Schemas and Beacons A Supervised Machine Learning Classification Approach Ahmad Taherkhani 9HSTFMG*aejijc+ ISBN 978-952-60-4989-2 BUSINESS + ISBN 978-952-60-4990-8 (pdf) ECONOMY ISSN-L 1799-4934 ISSN 1799-4934 ART + ISSN 1799-4942 (pdf) DESIGN + ARCHITECTURE University Aalto Aalto University School of Science SCIENCE + Department of Computer Science and Engineering TECHNOLOGY www.aalto.fi CROSSOVER DOCTORAL DOCTORAL DISSERTATIONS DISSERTATIONS Aalto University publication series DOCTORAL DISSERTATIONS 17/2013 Automatic Algorithm Recognition Based on Programming Schemas and Beacons A Supervised Machine Learning Classification Approach Ahmad Taherkhani A doctoral dissertation completed for the degree of Doctor of Science (Technology) (Doctor of Philosophy) to be defended, with the permission of the Aalto University School of Science, at a public examination held at the lecture hall T2 of the school on the 8th of March 2013 at 12 noon. Aalto University School of Science Department of Computer Science and Engineering Learning + Technology Group Supervising professor Professor Lauri Malmi Thesis advisor D. Sc. (Tech) Ari Korhonen Preliminary examiners Professor Jorma Sajaniemi, University of Eastern Finland, Finland Dr. Colin Johnson, University of Kent, United Kingdom Opponent Professor Tapio Salakoski, University of Turku, Finland Aalto University publication series DOCTORAL DISSERTATIONS 17/2013 © Ahmad Taherkhani ISBN 978-952-60-4989-2 (printed) ISBN 978-952-60-4990-8 (pdf) ISSN-L 1799-4934 ISSN 1799-4934 (printed) ISSN 1799-4942 (pdf) http://urn.fi/URN:ISBN:978-952-60-4990-8 Unigrafia Oy Helsinki 2013 Finland Publication orders (printed book): The dissertation can be read at http://lib.tkk.fi/Diss/ Abstract Aalto University, P.O. -

PART III Basic Data Types

PART III Basic Data Types 186 Chapter 7 Using Numeric Types Two kinds of number representations, integer and floating point, are supported by C. The various integer types in C provide exact representations of the mathematical concept of “integer” but can represent values in only a limited range. The floating-point types in C are used to represent the mathematical type “real.” They can represent real numbers over a very large range of magnitudes, but each number generally is an approximation, using a limited number of decimal places of precision. In this chapter, we define and explain the integer and floating-point data types built into C and show how to write their literal forms and I/O formats. We discuss the range of values that can be stored in each type, how to perform reliable arithmetic computations with these values, what happens when a number is converted (or cast) from one type to another, and how to choose the proper data type for a problem. We would like to think of numbers as integer values, not as patterns of bits in memory. This is possible most of the time when working with C because the language lets us name the numbers and compute with them symbolically. Details such as the length (in bytes) of the number and the arrangement of bits in those bytes can be ignored most of the time. However, inside the computer, the numbers are just bit patterns. This becomes evident when conditions such as integer overflow occur and a “correct” formula produces a wrong and meaningless answer. -

GEDCOM 5.5.2 With

THE GEDCOM SPECIFICATION Includes GEDXML format DRAFT Release 5.6 Prepared by the Family History Department The Church of Jesus Christ of Latter-day Saints 18 December 2000 Suggestions and Correspondence: Email: Mail: [email protected] Family History Department GEDCOM Coordinator—3T Telephone: 50 East North Temple Street 801-240-4534 Salt Lake City, UT 84150 801-240-3442 USA Copyright © 2000 by The Church of Jesus Christ of Latter-day Saints. This document may be copied for purposes of review or programming of genealogical software, provided this notice is included. All other rights reserved. TABLE OF CONTENTS Introduction ................................................................................. 5 Purpose and Content of The GEDCOM Standard ................................................. 5 Purposes for Version 5.x ................................................................... 6 Modifications in Version 5.6 ................................................................ 6 Chapter 1 Data Representation Grammar ............................................................... 9 Concepts ............................................................................... 9 Grammar .............................................................................. 10 Description of Grammar Components ........................................................ 14 Chapter 2 Lineage-Linked Grammar ................................................................. 19 Record Structures of the Lineage-Linked Form ................................................ -

Ansi Z39.47-1985

ANSI® * Z39.47-1985 ISSN: 8756-0860 American National Standard for Information Sciences - Extended Latin Alphabet Coded Character Set for Bibliographic Use Secretariat Council of National Library and Information Associations Approved September 4, 1984 American National Standards Institute, Inc Abstract This standard establishes computer codes for an extended Latin alphabet character set to be used in bibliographic work when handling non-English items. This standard establishes the 7-bit and 8-bit code values. 3 sawvwn nkhhoiw jo umMm im Generated on 2013-01-28 18:16 GMT / http://hdl.handle.net/2027/mdp.39015079886241 Public Domain, Google-digitized / http://www.hathitrust.org/access_use#pd-google Por^XA/OrH fW* Foreword is not part of American National Standard Z39.47-1985.) This standard for the extended Latin alphabet coded character set for bibliographic use l\ <-. was developed by Subcommittee N on Coded Character Sets for Bibliographic Informa- tion Interchange of the American National Standards Committee on Library and Informa- tion Sciences and Related Publishing Practices, Z39, now the National Information Stan- . dards Organization (Z39). / L O I The codes given in this standard may be identified by the use of the notation ANSEL. The notation ANSEL should be taken to mean the codes prescribed by the latest edition of this standard. To explicitly designate a particular edition, the last two digits of the year of the issue may be appended, as in ANSEL 85. The standard establishes both the 7-bit and the 8-bit code values for the computer codes for characters used in bibliographic work when handling non-English items. -

Applied C and C++ Programming

Applied C and C++ Programming Alice E. Fischer David W. Eggert University of New Haven Michael J. Fischer Yale University August 2018 Copyright c 2018 by Alice E. Fischer, David W. Eggert, and Michael J. Fischer All rights reserved. This manuscript may be used freely by teachers and students in classes at the University of New Haven and at Yale University. Otherwise, no part of it may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the authors. 192 Part III Basic Data Types 193 Chapter 7 Using Numeric Types Two kinds of number representations, integer and floating point, are supported by C. The various integer types in C provide exact representations of the mathematical concept of \integer" but can represent values in only a limited range. The floating-point types in C are used to represent the mathematical type \real." They can represent real numbers over a very large range of magnitudes, but each number generally is an approximation, using a limited number of decimal places of precision. In this chapter, we define and explain the integer and floating-point data types built into C and show how to write their literal forms and I/O formats. We discuss the range of values that can be stored in each type, how to perform reliable arithmetic computations with these values, what happens when a number is converted (or cast) from one type to another, and how to choose the proper data type for a problem. -

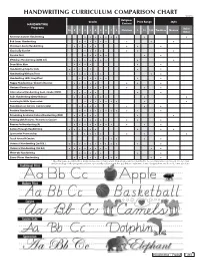

Handwriting Curriculum Comparison Chart

HANDWRITING CURRICULUM COMPARISON CHART ©2018 Religious Grades Price Range Style HANDWRITING Content Programs Italic/ PK K 1 2 3 4 5 6 7 8 Christian $ $$ $$$ Tradition Modern Other American Cursive Handwriting • • • • • • • • • Bob Jones Handwriting • • • • • • • • • Christian Liberty Handwriting • • • • • • • • Classically Cursive • • • • • • • Cursive First • • • • • • • D'Nealian Handwriting (2008 ed.) • • • • • • • • • Draw Write Now • • • • • • Handwriting Help for Kids • • • • • • • Handwriting Without Tears • • • • • • • • • Handwriting Skills Simplified • • • • • • • • Happy Handwriting / Cheerful Cursive • • • • • • • • Horizons Penmanship • • • • • • • • • International Handwriting Cont. Stroke (HMH) • • • • • • • Italic Handwriting (Getty-Dubay) • • • • • • • • • Learning to Write Spencerian • • • • • • • • • New American Cursive (cursive only) • • • • • • • Pentime Handwriting • • • • • • • • • • • Preventing Academic Failure Handwriting (PAF) • • • • • • • Printing with Pictures / Pictures in Cursive • • • • • • • • • • Reason for Handwriting (A) • • • • • • • • • • • Sailing Through Handwriting • • • • • • • • • Spencerian Penmanship • • • • • • • Teach Yourself Cursive • • • • • • Universal Handwriting (2nd Ed.) • • • • • • • • • • • • Universal Handwriting (3rd Ed.) • • • • • • • • • • Write-On Handwriting • • • • • • • Zaner-Bloser Handwriting • • • • • • • • • • • *This chart was assembled by Rainbow Resource Curriculum Consultants and is intended to be a comparative tool based on our own understanding of the programs