The Need for Cognition Scale and Its Use with Adolescents by Laura

Recommended publications

Janet and John: Here We Go

Mabel O'Donnell, Rona Munro | 40 pages | 03 Sep 2007 | Summersdale Publishers | 9781840246131 | English | Chichester, United Kingdom. As part of a trend in publishing nostalgic facsimiles of old favourites, Summersdale Publishers reissued two of the original Janet and John books, Here We Go and Off to Play.

Readability and Understandability: A Look at Mission Statements of Selected Asian Companies

Journal of the Research Society of Pakistan, Volume No. 54, Issue No. 2 (July - December, 2017). Muhammad Khalid Khan*, Ghulam Ali Bhatti**, Ishfaq Ahmed***, Talat Islam****. READABILITY AND UNDERSTANDABILITY: A LOOK AT MISSION STATEMENTS OF SELECTED ASIAN COMPANIES. Purpose: Mission statements are an important corporate communication tool for organizations. To serve their purpose, they should be comprehensible and easy to understand. Considering the importance of mission statement readability, this research aims to judge the level of readability and understandability of the mission statements of Asian companies listed in the Fortune 500 for the year 2015. Design/Methodology: Asian companies listed in the Global Fortune 500 for 2014-2015 were taken. There were 197 Asian companies in that listing. To obtain the mission statements of those companies, their websites were visited from February 2016 to April 2016. Mission statements were analyzed for readability and understandability by counting total sentences, total words and the number of words per sentence (simple counting technique) and by applying the following tests: automated readability index, Coleman-Liau index, Flesch Reading Ease test and Gunning fog index. Findings: The study shows that the mission statements of Asian companies are long and difficult to read and understand. The trading sector is found to have the shortest mission statements, which are also the easiest to read and understand when compared to other sectors. Research Limitations/Future Directions: Future researchers should use other sophisticated evaluation tools, and the analysis should also include the influence of country culture on the contents and traits of mission statements. * Dr. Muhammad Khalid Khan, Assistant Professor, Registrar & Director HR, University of the Punjab, Lahore, Pakistan, [email protected].
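
The counting technique and two of the tests named in this abstract can be sketched in a few lines of Python. This is a minimal sketch, not the authors' procedure: the tokenization rules (sentences split on `.`, `!` or `?`; words taken as runs of letters, digits and apostrophes) and all function names are assumptions made for illustration. The automated readability index and the Coleman-Liau index are shown because both depend only on character, word and sentence counts; the coefficients are the standard published ones.

```python
import re


def text_counts(text):
    """Return (sentences, words, letters) using simple, assumed tokenization."""
    # Assumption: a sentence ends at one or more of . ! ?
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Assumption: a word is a run of letters, digits or apostrophes.
    words = re.findall(r"[A-Za-z0-9']+", text)
    # Count only letters and digits, not apostrophes.
    letters = sum(ch.isalnum() for w in words for ch in w)
    return len(sentences), len(words), letters


def words_per_sentence(text):
    """The 'simple counting technique': average sentence length in words."""
    sentences, words, _ = text_counts(text)
    return words / sentences


def automated_readability_index(text):
    """ARI = 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43."""
    sentences, words, letters = text_counts(text)
    return 4.71 * (letters / words) + 0.5 * (words / sentences) - 21.43


def coleman_liau_index(text):
    """CLI = 0.0588 * L - 0.296 * S - 15.8, with L and S per 100 words."""
    sentences, words, letters = text_counts(text)
    letters_per_100 = letters / words * 100
    sentences_per_100 = sentences / words * 100
    return 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8


if __name__ == "__main__":
    # Hypothetical mission statement, not taken from the study.
    sample = "We make good cars. We serve people."
    print(text_counts(sample))
    print(words_per_sentence(sample))
    print(automated_readability_index(sample))
    print(coleman_liau_index(sample))
```

Both indices estimate a school grade level, so higher values mean harder text. Very short inputs such as the sample above can produce values below zero, and empty input is not handled in this sketch.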

Structured Word Inquiry Resources

Structured Word Inquiry Resources Where to learn more: Word Works Kingston (Pete Bower’s website): http://www.wordworkskingston.com LEX (Gina Cooke’s website): https://linguisteducatorexchange.com/ Dyslexia Training Institute’s Recorded Webinar on SWI http://www.dyslexiatraininginstitute.org/webinar_structured-word.html Real Spelling http://www.realspelling.fr Real Spellers http://www.realspellers.org/ Lyn Anderson: http://wordsinbogor.blogspot.com/ Facebook Groups: Structured Word Inquiry, LEX linguisteducator exchange Related Upcoming Webinars: Upper Midwest IDA Webinars http://umw.dyslexiaida.org/?page_id=536 o December 7, 2016 Four Steps to Better Spelling Instruction o March 16, 2017 Developing Literacy in the Content Areas through Structured Word Study Resources to help in the SWI process 1. What does the word mean? Resources: Dictionary (e.g. dictionary.com, dictionary app) Etymology Online free http://www.etymonline.com/ 2. How is the word built? Resources: Word Sums http://www.wordworkskingston.com/WordWorks/Spelling-Out_Word_Sums.html Interactive suffix checker free http://www.neilramsden.co.uk/spelling/checker/index.html Word Searcher free http://www.neilramsden.co.uk/spelling/searcher/index.html Word Matrix o Matrix Study Sheets $25 https://linguisteducatorexchange.com/ o 70 Word Matrices $34 http://www.realspelling.fr Word Microscope $50 (40 day free trial) http://www.neilramsden.co.uk/microscope/ Sound Literacy iPad app $10 http://soundliteracy.com/ 3. What are the word’s relatives? Resources: Etymology Online (see above) LEX Matrix Study sheets (see above) Word Searcher (see above) Real Spelling (toolkit $148) http://www.realspelling.fr 4. How is the pronunciation represented? Resources: LEX Grapheme cards $60 https://linguisteducatorexchange.com Sound Literacy (see above) Kirstin O’Dell Decoding Dyslexia Oregon-Portland Meeting November 2016 . -

Reconsidering the Evidence That Systematic Phonics Is More Effective Than Alternative Methods of Reading Instruction

Running head: SYSTEMATIC PHONICS 1. Reconsidering the evidence that systematic phonics is more effective than alternative methods of reading instruction. Jeffrey S. Bowers, University of Bristol. Author Note: Jeffrey S. Bowers, School of Experimental Psychology, University of Bristol. I would like to thank Patricia Bowers, Peter Bowers, Danielle Colenbrander, Rebecca Marsh, Kathy Rastle, Robert Ross, and Gail Venable for comments on previous drafts and Abla Hatherell for help on compiling the data for Figures 2-3. Correspondence concerning this article should be addressed to Jeffrey S Bowers, School of Experimental Psychology, 12a Priory Road, Bristol, BS8-1TU. Email j.bow- [email protected] Personal website: https://jeffbowers.blogs.ilrt.org/research/ Abstract: There is a widespread consensus in the research community that reading instruction in English should first systematically teach children letter (grapheme) to sound (phoneme) correspondences rather than meaning-based reading approaches such as whole language instruction. That is, initial reading instruction should emphasize systematic phonics. In this systematic review I show this conclusion is not justified. First, I review and critique experimental studies that have assessed the efficacy of systematic phonics as summarized in 12 meta-analyses and two government reports. Not only are the results and conclusions of these reports often mischaracterized in the literature, there are serious flaws in analyses that undermine the conclusions that are drawn. Second, I review non-experimental studies that have been used to support the conclusion that systematic phonics is most effective. Again, I show the conclusions are not justified. These findings should not be taken as an argument in support of whole language and related methods, but rather, highlight the need for alternative approaches to reading instruction.

Structured Word Inquiry: Developing Literacy and Critical Thinking by Scientific Inquiry About How Spelling Works

Structured Word Inquiry: Developing literacy and critical thinking by scientific inquiry about how spelling works. Structured Word Inquiry (Scientific Word Investigation): The Joy of Understanding Spelling. struct + ure/ + ed → structured; in + struct + ion → instruction. Peter Bowers, PhD, WordWorks Literacy Centre, 2020. Instruction which builds understanding of word structure as a tool for investigating the interrelation of spelling and meaning. www.WordWorksKingston.com Guides and some basic terms for Structured Word Inquiry. A model of English orthography from Real Spelling. Guiding Principles of Structured Word Inquiry: The primary function of English spelling is to represent meaning. The conventions by which English spelling represents meaning are so well-ordered and reliable that spelling can be investigated and understood through scientific inquiry. Scientific inquiry is necessary to safely guide spelling instruction and understanding. Scientific inquiry is the only means by which a learning community can safely accept or reject hypotheses about how spelling works. Explore the Real Spelling Tool Box 2 (on-line) for a remarkable linguistic reference to study English orthography. Explore the “Morphology Album” in the archive of videos to learn more about these and many other terms and concepts. The film on “Connecting Vowel Letters” is a particularly rich way to make sense of this term that is absent from most teacher resources. This newly available reference is one you

NDA Tutor List

Nebraska Dyslexia Association Tutor List The following is a list of NDA members who have agreed to support individuals in our state who have had difficulties in reading and who may or may not be dyslexic. The Nebraska Dyslexia Association does not recommend or endorse any one individual or business, but provides this list as a service to those who are seeking more information or tutoring. For an additional list of tutors who are not NDA members, please contact Gwelda Carlson at [email protected]. Omaha Metro Area Julie Adams Teacher, M.E. in Curriculum/Instruction Multisensory Reading Instruction 208.250.8573 [email protected] 601 Nob Hill Terrace Bellevue, NE Nancy Coffey (Professional Member) coff[email protected] Experience - Reading & Math Resource Teacher Structured Literacy Interventionist trained in Barton Reading & Spelling and Wilson Language Ages Preferred: K-6 (Reading, Spelling, Writing & Math) Brenda Elson [email protected] 402.614.2270 (Volunteer reading mentor/tutor) FixLexia (Professional Member) FixLexia offers both clinical evaluation and intervention. Screenings are also available to students ages 4-18. With a completed intake form, FixLexia offers a complimentary 30-min phone consultation. FixLexia is a Nebraska Department of Education (Special Education) Service Provider (ID 952237). As an approved special education provider, FixLexia offers teacher training and consultative services. www.fixlexia.com Rebecca Miller - Owner rebecca.miller@fixlexia.com 402-506-3606 Trained in Sonday, Spalding, Multisensory Tutoring, Barton, Seeing Stars, Visualizing/Verbalizing, PASP, PAST, SRA Corrective Reading Ages Preferred: 4 - adult (Reading & Spelling) Mary Fripp (Professional Member) Mary Fripp has a Masters in Reading, is a certified Dyslexia Specialist and Structured Literacy Teacher.

No Evidence for Phonology First

No evidence for phonology first 1. There is no evidence to support the hypothesis that systematic phonics should precede morphological instruction: Response to Rastle and colleagues. Jeffrey S. Bowers, University of Bristol; Peter N. Bowers, WordWorks Literacy Centre. Jeffrey S. Bowers, School of Experimental Psychology, University of Bristol, https://jeffbowers.blogs.bristol.ac.uk/; Peter N. Bowers, WordWorks Literacy Centre, http://www.wordworkskingston.com/WordWorks/Home.html. This work has not been peer reviewed. Special Circumstances: Peter Bowers runs the company WordWorks where he uses Structured Word Inquiry to work with students, teachers and schools. Acknowledgments: We would like to thank Patricia Bowers and Colin Davis for comments on this draft. Correspondence concerning this article should be addressed to Jeffrey S Bowers, School of Experimental Psychology, 12a Priory Road, Bristol, BS8-1TU. Email [email protected] Abstract: In a series of four articles Rastle and colleagues have argued that early reading instruction should focus on systematic phonics, with morphological instruction only introduced later. We call this the “phonology first” hypothesis. We show that their theoretical motivation for the phonology first hypothesis is flawed, and that their review of the empirical evidence is biased and incomplete. We show that theory and current data lend support to the alternative hypothesis that instruction should target both phonology and morphology from the start. Rastle and colleagues have recently published a series of four articles where they hypothesize that initial reading instruction should first focus on systematic phonics.

Progress in Reading Instruction Requires a Better Understanding of the English Spelling System

Current Directions in Psychological Science, 1–6. © The Author(s) 2018. Association for Psychological Science. DOI: https://doi.org/10.1177/0963721418773749. Progress in Reading Instruction Requires a Better Understanding of the English Spelling System. Jeffrey S. Bowers (School of Psychological Science, University of Bristol) and Peter N. Bowers (WordWorks Literacy Centre, Wolfe Island, Ontario, Canada). Abstract: It is widely claimed that the English spelling system conforms to the alphabetic principle, according to which letters or letter combinations (graphemes) represent speech sounds (phonemes). But this is not accurate. English spellings have evolved to represent both phonemes and meaning (through morphology and etymology), and in direct contradiction to the alphabetic principle, spellings prioritize the consistent spelling of morphemes over the consistent spellings of phonemes. This is important because the alphabetic principle provides the main theoretical motivation for systematic phonics instruction that explicitly teaches children grapheme–phoneme correspondences in English without reference to morphology and etymology. Furthermore, this theoretical claim has biased the research literature, with many studies considering the efficacy of phonics but few studies assessing the relevance of morphology and etymology to reading

Structured Word Inquiry

Structured Word Inquiry. <struct> matrix from Real Spelling. Integrating Morphology and Inquiry as Guiding Principles for Reading, Vocabulary and Spelling Instruction: Reconciling the Common Core Standards with Reading Research. Handout for Peter Bowers’ presentation for Symposium: Wednesday, Oct. 24, 2012, Baltimore. “Teachers who comprehend the origins of the English language along with the primary structural patterns within words can improve their assessment skills, enhance their understanding of reading and spelling curricula, communicate clearly about specific features of language, and effectively teach useful strategies to their students.” Marcia Henry (2010, p. 39), “Unlocking Literacy: Effective Decoding & Spelling Instruction”. struct + ure/ + ed → structured; in + struct + ion → instruction. Instruction which builds understanding of word structure as a tool for investigating the interrelation of spelling and meaning. www.WordWorksKingston.com A Basic Assumption of Literacy Instruction: Learners deserve instruction that represents how their writing system works. Common Core State Standards stress the importance of… “fostering students’ understanding and working knowledge of...the basic conventions of the English writing system” (p. 15). Becoming literate means... “learning how to use the conventional forms of printed language to obtain meaning from words.” It logically follows that...“the child learning how to read needs to learn how his or her writing system works [emphasis added].” Rayner, Foorman, Perfetti, Pesetsky, & Seidenberg, 2001, p. A morphophonemic language (Venezky, 1999; C. Chomsky, 1970): “The simple fact is that the present orthography is not merely a letter-to-sound system riddled with imperfections, but instead, a more complex and more regular relationship wherein phoneme and morpheme share leading roles.” Venezky, 1967, p. 77. “…[T]eachers and students who do not understand [morphology] are not

Readability and Academic Communication: a Comparative Study of Undergraduate Students’ and Handbook of Three Ghanaian Universities

IOSR Journal of Computer Engineering (IOSR-JCE), e-ISSN: 2278-0661, p-ISSN: 2278-8727, Volume 13, Issue 6 (Jul. - Aug. 2013), PP 41-50, www.iosrjournals.org. Readability and Academic Communication: A Comparative Study of Undergraduate Students’ and Handbook of Three Ghanaian Universities. William Kodom Gyasi, Department of Communication Studies, University of Cape Coast, Ghana. Abstract: This study investigated the readability of the handbooks of three reputable universities in Ghana, namely the University of Ghana, Kwame Nkrumah University of Science and Technology and the University of Cape Coast. The study conducted an in-depth investigation into the readability of these handbooks, using seven readability indexes: Flesch-Kincaid Grade Level, Flesch Reading Ease, Gunning Fog Index, Coleman-Liau Index, SMOG Index, Automated Readability Index and Linsear Write Formula. The readability consensus for the seven indexes showed that these handbooks are very difficult to comprehend, and that they were generally written to be understood by university graduates and in some cases were even above the reading level of university graduates. The study also established that there are no statistically significant differences across the mean readability scores of the three handbooks. It is therefore recommended that the texts contained in students’ handbooks should be sensitive to students’ reading level. It is believed that this can be achieved through the use of plain language in writing students’ handbooks. Keywords: text readability; readability index, Flesch-Kincaid Grade Level, Flesch Reading Ease, Gunning Fog, Coleman-Liau Index, SMOG Index, Automated Readability Index, Linsear Write Formula and Ghanaian Universities Students’ Handbook.
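
To make the grade-level reading of these indexes concrete, here is a minimal Python sketch of two of the seven: Flesch Reading Ease and Flesch-Kincaid Grade Level. It is an illustration under stated assumptions, not the tool used in the study: the syllable counter is a rough heuristic (one syllable per run of vowels), the tokenization is simplistic, and the function names are hypothetical. Only the formula coefficients are the standard published ones.

```python
import re


def count_syllables(word):
    """Rough heuristic (an assumption): one syllable per run of vowels."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_scores(text):
    """Return (Flesch Reading Ease, Flesch-Kincaid Grade Level) for text."""
    # Assumption: sentences end at . ! or ?; words are runs of letters.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)

    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)

    # Higher reading ease means easier text.
    reading_ease = 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
    # Grade level approximates the years of schooling needed to follow the text.
    grade_level = 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
    return reading_ease, grade_level


if __name__ == "__main__":
    # Hypothetical handbook-style sentence, not taken from the study.
    sample = "Students must register for all courses before the deadline."
    ease, grade = flesch_scores(sample)
    print(round(ease, 2), round(grade, 2))
```

Longer sentences and longer words push Reading Ease down and Grade Level up, which is the pattern the abstract describes for the handbooks. Because the syllable heuristic miscounts words with silent vowels, scores from this sketch should be read as approximate.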

Structured Word Inquiry: Developing Literacy and Critical Thinking by Scientific Inquiry About How Spelling Works

Structured Word Inquiry: Developing literacy and critical thinking by scientific inquiry about how spelling works. Structured Word Inquiry: The Joy of Understanding Spelling. struct + ure/ + ed → structured; in + struct + ion → instruction. Peter Bowers, PhD, WordWorks Literacy Centre, 2016. Instruction which builds understanding of word structure as a tool for investigating the interrelation of spelling and meaning. www.WordWorksKingston.com Guides and some basic terms for Structured Word Inquiry. A model of English orthography from www.realspelling.fr Guiding Principles of Structured Word Inquiry: The primary function of English spelling is to represent meaning. The conventions by which English spelling represents meaning are so well-ordered and reliable that spelling can be investigated and understood through scientific inquiry. Scientific inquiry is necessary to safely guide spelling instruction and understanding. Scientific inquiry is the only means by which a learning community can safely accept or reject hypotheses about how spelling works. Go to the Real Spelling Gallery and explore the “Morphology Album” to learn more about these terms. The film on “Connecting Vowel Letters” is a particularly rich way to make sense of this term that is absent from most teacher resources. “Handy” Guides for Instruction of the Written Word: Harness the Kinesthetic Memory. For just one example, read the section under the title “Structured word inquiry from the start” on page 4 in this WW Newsletter to see a story of how the fist and two fingers association with bases and affixes was so effective in the learning in a preschool classroom. kinesthetic (adj.)

2016-17 California Assessment of Student Progress and Monitoring

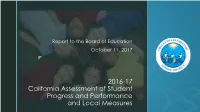

Report to the Board of Education, October 11, 2017. 2016-17 California Assessment of Student Progress and Performance and Local Measures. 2016-17 CAASPP System: English Language Arts/Literacy and Mathematics (Smarter Balanced Summative Assessments; California Alternate Assessment); Science, Grades 5 & 8 (California Standards Test; California Alternate Assessment). Smarter Balanced Assessment, English Language Arts: Reading; Writing; Speaking and Listening; Research and Inquiry. Smarter Balanced Assessment, Math: Concepts and Procedures; Problem Solving and Data Analysis; Communicating Reasoning. Achievement Level Descriptors: Standard Exceeded (demonstrates advanced progress toward mastery); Standard Met (demonstrates progress toward mastery); Standard Nearly Met (may require further development for success in future coursework); Standard Not Met (needs substantial improvement for success in future coursework). [Chart: CAASPP Grades 3-6, Percent of Students Who Met & Exceeded Standards, English Language Arts; bars for CVESD, County and State.] [Chart: CAASPP Grades 3-6, All Student Comparison: ELA, Percent of Students Who Met & Exceeded Standards; groups: All Students, Socioeconomically Disadvantaged, Students with Disabilities, English Learners w/ RFEP, Foster Youth; years 2014-15, 2015-16, 2016-17.] [Chart: CAASPP Grades 3-6, Percent of Students Who Met & Exceeded Standards, Math; bars for CVESD, County and State.]