Doing Sound Slaten Dissertation Deposit

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Surround Sound Auteurs and the Fragmenting of Genre

Alan Williams: Surround Sound Auteurs and the Fragmenting of Genre. Proceedings of the 12th Art of Record Production Conference Mono: Stereo: Multi. Williams, A. (2019). Surround Sound Auteurs and the Fragmenting of Genre. In J.-O. Gullö (Ed.), Proceedings of the 12th Art of Record Production Conference Mono: Stereo: Multi (pp. 319-328). Stockholm: Royal College of Music (KMH) & Art of Record Production. Abstract: Multi-channel sonic experience is derived from a myriad of technological processes, shaped by market forces, configured by creative decision makers and translated through audience taste preferences. From the failed launch of quadrophonic sound in the 1970s, through the currently limited, yet sustained niche market for 5.1 music releases, a select number of mix engineers and producers established paradigms for defining expanded sound stages. Whereas stereophonic mix practices in popular music became ever more codified during the 1970s, the relative paucity of multi-channel releases has preserved the individual sonic fingerprint of mixers working in surround sound. Moreover, market forces have constricted their work to musical genres that appeal to the audiophile community that supports the format. This study examines the work of Elliot Scheiner, Bob Clearmountain, Giles Martin, and Steven Wilson to not only analyze the sonic signatures of their mixes, but to address how their conceptions of the soundstage become associated with specific genres, and serve to establish micro-genres of their own. I conclude by arguing that auteurs such as Steven Wilson have amassed an audience for their mixes, with a catalog that crosses genre boundaries, establishing a mode of listening that in itself represents an emergent genre – surround rock.

UW System Requests $30 Surcharge

Inside: Block that sun, P.3. Yoko is surprise, P.4. Women's buckets win opener, P.5. Unique gift guide, special insert. Vol. 25, No. 30, December 2, 1980.
UW System requests $30 surcharge, by James E. Piekarski of The Post staff.
The UW Board of Regents is expected to approve a $30 surcharge for the second semester at its meeting this week, according to UW System Vice President Reuben Lorenz. The surcharge, which would raise tuition for a semester to $516 for a full-time resident undergraduate student, is based on the anticipation of no further budget cutbacks, despite the state's worsened financial forecast for the biennium
not seek a general tax increase when the legislature reconvenes in January.
Committee referral. If the Regents approve the $30 surcharge, it must then be approved by the legislature's Joint Committee on Finance. At a November meeting, the Regents approved a resolution, with only one objection, that authorized UW System President Robert O'Neil to file a request with the Joint Committee on Finance for the surcharge by its Nov. 10 deadline.
The letter also cited the "uncertainties" caused by litigation challenging the 4.4 percent budget cutback to municipalities and school districts. The State Supreme Court has ordered the return of almost $18 million to municipalities, and the school district case, which seeks the return of $29 million, is still pending.
Chancellor Frank Horton, who will attend the Board of Regents meeting in Madison on Thursday and Friday, said that based on the experience of the first

Making Musical Magic Live

Making Musical Magic Live: Inventing modern production technology for human-centric music performance. Benjamin Arthur Philips Bloomberg. Bachelor of Science in Computer Science and Engineering, Massachusetts Institute of Technology, 2012. Master of Sciences in Media Arts and Sciences, Massachusetts Institute of Technology, 2014. Submitted to the Program in Media Arts and Sciences, School of Architecture and Planning, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Media Arts and Sciences at the Massachusetts Institute of Technology, February 2020. © 2020 Massachusetts Institute of Technology. All Rights Reserved. Signature of Author: Benjamin Arthur Philips Bloomberg, Program in Media Arts and Sciences, 17 January 2020. Certified by: Tod Machover, Muriel R. Cooper Professor of Music and Media, Thesis Supervisor, Program in Media Arts and Sciences. Accepted by: Tod Machover, Muriel R. Cooper Professor of Music and Media, Academic Head, Program in Media Arts and Sciences. Abstract: Fifty-two years ago, Sergeant Pepper’s Lonely Hearts Club Band redefined what it meant to make a record album. The Beatles revolutionized the recording process using technology to achieve completely unprecedented sounds and arrangements. Until then, popular music recordings were simply faithful reproductions of a live performance.
Over the past fifty years, recording and production techniques have advanced so far that another challenge has arisen: it is now very difficult for performing artists to give a live performance that has the same impact, complexity and nuance as a produced studio recording.

Nagy Rock 'N' Roll Könyv

Szakács Gábor: Nagy rock ʼnʼ roll könyv (Big Rock 'n' Roll Book).
"As Szakács Gábor says in his Nagy rock'n'roll book, which is unique in its genre: ...we have to decide whether we want to hear noise or music." (Juhász Kristóf, Magyar Idők, 7 March 2017)
Ozzy Osbourne: "When, at my son's rehabilitation, I learned about the depth of these substances, and that this is similar to heroin, I was completely stunned. I am 55 years old, and in the rest of my life I have to rethink many things, because I also harmed myself for an incredibly long time."
The author gives special thanks to the musicians of the album Attila ifjúsága. Kecskés együttes: L. Kecskés András, Kecskés Péter, Herczegh László, Lévai Péter, Nagyné Bartha Anna, ifj. Csoóri Sándor. Rock: Bernáth Tibor, Berkes Károly, Bóta Zsolt, Horváth János, Horváth Menyhért, Papp Gyula, Sárdy Barbara, Szász Ferenc.
The author thanks Valkóczi József for correcting the book.
© Szakács Gábor, June 2004, February 2021. ISBN 963 216 329 X.
Photos: Antal Orsolya, Ágg Károly, Becskereki Dusi, Dávid Zsolt, Galambos Anita, Knapp Zoltán, Rásonyi Mária, Tóth Tibor. Cover design, image processing: Fartek Zsolt. Layout editor: Székely-Magyari Hunor. Publisher and printer: Holoprint Kft. • 1163 Bp., Veres Péter út 37. Tel.: 403-4470, fax: 402-0229, www.holoprint.hu
Nagy Rock ʼnʼ Roll Könyv. Table of contents: Foreword. How it began. Writers, poets, opinion leaders. Families, children, descendants. Starting out, getting noticed, arriving.

Table of Contents

Table of Contents
From the Editors
From the President
From the Executive Director
The Sound Issue
“Overtures”
Music, the “Jew” of Jewish Studies: Updated Readers’ Digest (Edwin Seroussi)
To Hear the World through Jewish Ears (Judah M. Cohen)
“The Sound of Music”
The Birth and Demise of Vocal Communities (Ruth HaCohen)
Brass Bands, Jewish Youth, and the Sonorities of a Global Perspective (Maureen Jackson)
How to Get out of Here: Sounding Silence in the Jewish Cabaretesque (Philip V. Bohlman)
Listening Contrapuntally; or What Happened When I Went Bach to the Archives (Amy Lynn Wlodarski)
The Trouble with Jewish Musical Genres: The Orquesta Kef in the Americas (Lillian M. Wohl)
Singing a New Song (Joshua Jacobson)
“Sounds of a Nation”
When Josef (Tal) Laughed; Notes on Musical (Mis)representations (Assaf Shelleg)
From “Ha-tikvah” to KISS; or, The Sounds of a Jewish Nation (Miryam Segal)
An Issue in Hebrew Poetic Rhythm: A Cognitive-Structuralist Approach (Reuven Tsur)
Words, Melodies, Hands, and Feet: Musical Sounds of a Kerala Jewish Women’s Dance (Barbara C. Johnson)
Sound and Imagined Border Transgressions in Israel-Palestine (Michael Figueroa)
The Siren’s Song: Sound, Conflict, and the Politics of Public Space in Tel Aviv (Abigail Wood)
“Surround Sound”
Sensory History, Deep Listening, and Field Recording (Kim Haines-Eitzen)
Remembering Sound (Alanna E. Cooper)
Some Things I Heard at the Yeshiva (Jonathan Boyarin)
The Questionnaire
What are ways that you find most useful to incorporate sound, images, or other nontextual media into your Jewish Studies classrooms?
Read AJS Perspectives Online at perspectives.ajsnet.org
AJS Perspectives: The Magazine of the Association for Jewish Studies. perspectives.ajsnet.org. Editors. President: Pamela Nadell, American University. Vice President / Program. Please direct correspondence to: Association for Jewish Studies, Center for Jewish History, 15 West 16th Street, New York, NY 10011.
From the Editors. Dear Colleagues, Sounds surround us.

Downbeat.Com March 2014 U.K. £3.50

DOWNBEAT, MARCH 2014. U.K. £3.50. DOWNBEAT.COM. DIANNE REEVES /// LOU DONALDSON /// GEORGE COLLIGAN /// CRAIG HANDY /// JAZZ CAMP GUIDE. VOLUME 81 / NUMBER 3.
President: Kevin Maher. Publisher: Frank Alkyer. Editor: Bobby Reed. Associate Editor: Davis Inman. Contributing Editor: Ed Enright. Designer: Ara Tirado. Bookkeeper: Margaret Stevens. Circulation Manager: Sue Mahal. Circulation Assistant: Evelyn Oakes. Editorial Intern: Kathleen Costanza. Design Intern: LoriAnne Nelson.
ADVERTISING SALES. Record Companies & Schools: Jennifer Ruban-Gentile, 630-941-2030, [email protected]. Musical Instruments & East Coast Schools: Ritche Deraney, 201-445-6260, [email protected]. Advertising Sales Associate: Pete Fenech, 630-941-2030, [email protected].
OFFICES. 102 N. Haven Road, Elmhurst, IL 60126–2970. 630-941-2030 / Fax: 630-941-3210. http://downbeat.com [email protected]
CUSTOMER SERVICE. 877-904-5299 / [email protected]
CONTRIBUTORS. Senior Contributors: Michael Bourne, Aaron Cohen, John McDonough. Atlanta: Jon Ross; Austin: Kevin Whitehead; Boston: Fred Bouchard, Frank-John Hadley; Chicago: John Corbett, Alain Drouot, Michael Jackson, Peter Margasak, Bill Meyer, Mitch Myers, Paul Natkin, Howard Reich; Denver: Norman Provizer; Indiana: Mark Sheldon; Iowa: Will Smith; Los Angeles: Earl Gibson, Todd Jenkins, Kirk Silsbee, Chris Walker, Joe Woodard; Michigan: John Ephland; Minneapolis: Robin James; Nashville: Bob Doerschuk; New Orleans: Erika Goldring, David Kunian, Jennifer Odell; New York: Alan Bergman, Herb Boyd, Bill Douthart, Ira Gitler, Eugene

Keeping the Tradition • In Memoriam Billy Bang 1947-2011 • Cooper-Moore • Orrin Evans • Edition Records • Event Calendar

June 2011 | No. 110. Your FREE Guide to the NYC Jazz Scene. nycjazzrecord.com. Dee Dee Bridgewater: Keeping The Tradition. In Memoriam Billy Bang 1947-2011. Cooper-Moore • Orrin Evans • Edition Records • Event Calendar.
It’s always a fascinating process choosing coverage each month. We’d like to think that in a highly partisan modern world, we actually live up to the credo: “We Report, You Decide”. No segment of jazz or improvised music or avant garde or whatever you call it is overlooked, since only as a full quilt can we keep out the cold of commercialism. Sometimes it is more difficult, especially during the bleak winter months, to put together a good mixture of feature subjects but we quickly forget about that when June rolls around. It’s an embarrassment of riches, really, this first month of summer. Just like everyone pulls out shorts and skirts and sandals and flipflops, the city unleashes concert after concert, festival after festival. This month we have the Vision Fest; a mini-iteration of the Festival of New Trumpet Music (FONT); the inaugural Blue Note Jazz Festival taking place at the titular club as well as other city venues; the always-overwhelming Undead Jazz Festival, this year expanded to four days, two boroughs and ten venues and the 4th annual Red Hook Jazz Festival in sight of the Statue of Liberty.
Contents. New York@Night. Interview: Cooper-Moore, by Kurt Gottschalk. Artist Feature: Orrin Evans, by Terrell Holmes. On The Cover: Dee Dee Bridgewater, by Marcia Hillman. Encore: Lest We Forget:

Lisa Mansell Cardiff, Wales May 2007

FORM OF FIX: TRANSATLANTIC SONORITY IN THE MINORITY. Lisa Mansell. Cardiff, Wales. May 2007.
UMI Number: U584943. All rights reserved. INFORMATION TO ALL USERS: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. Dissertation Publishing. UMI U584943. Published by ProQuest LLC 2013. Copyright in the Dissertation held by the Author. Microform Edition © ProQuest LLC. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC, 789 East Eisenhower Parkway, P.O. Box 1346, Ann Arbor, MI 48106-1346.
For 25 centuries Western knowledge has tried to look upon the world. It has failed to understand that the world is not for beholding. It is for hearing [...]. Now we must learn to judge a society by its noise. (Jacques Attali)
DECLARATION. This work has not previously been accepted in substance for any degree and is not concurrently submitted in candidature for any degree. Signed .......... (candidate) Date ..........
STATEMENT 1. This thesis is being submitted in partial fulfillment of the requirements for the degree of .......... (insert MCh, MD, MPhil, PhD etc, as appropriate). Signed .......... (candidate) Date ..........
STATEMENT 2. This thesis is the result of my own independent work/investigation, except where otherwise stated. Other sources are acknowledged by explicit references. Signed .......... (candidate) Date ..........
STATEMENT 3. I hereby give consent for my thesis, if accepted, to be available for photocopying and for inter-library loan, and for the title and summary to be made available to outside organisations.

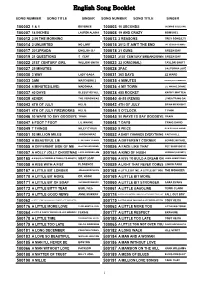

English Song Booklet

English Song Booklet
SONG NUMBER, SONG TITLE, SINGER
100002 1 & 1 BEYONCE
100003 10 SECONDS JAZMINE SULLIVAN
100007 18 INCHES LAUREN ALAINA
100008 19 AND CRAZY BOMSHEL
100012 2 IN THE MORNING
100013 2 REASONS TREY SONGZ,TI
100014 2 UNLIMITED NO LIMIT
100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ
100017 2012PRADA ENGLISH DJ
100018 21 GUNS GREEN DAY
100019 21 QUESTIONS 5 CENT
100021 21ST CENTURY BREAKDOWN GREEN DAY
100022 21ST CENTURY GIRL WILLOW SMITH
100023 22 (ORIGINAL) TAYLOR SWIFT
100027 25 MINUTES
100028 2PAC CALIFORNIA LOVE
100030 3 WAY LADY GAGA
100031 365 DAYS ZZ WARD
100033 3AM MATCHBOX 2
100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE
100034 4 MINUTES(LIVE) MADONNA
100036 4 MY TOWN LIL WAYNE,DRAKE
100037 40 DAYS BLESSTHEFALL
100038 455 ROCKET KATHY MATTEA
100039 4EVER THE VERONICAS
100040 4H55 (REMIX) LYNDA TRANG DAI
100043 4TH OF JULY KELIS
100042 4TH OF JULY BRIAN MCKNIGHT
100041 4TH OF JULY FIREWORKS KELIS
100044 5 O'CLOCK T PAIN
100046 50 WAYS TO SAY GOODBYE TRAIN
100045 50 WAYS TO SAY GOODBYE TRAIN
100047 6 FOOT 7 FOOT LIL WAYNE
100048 7 DAYS CRAIG DAVID
100049 7 THINGS MILEY CYRUS
100050 9 PIECE RICK ROSS,LIL WAYNE
100051 93 MILLION MILES JASON MRAZ
100052 A BABY CHANGES EVERYTHING FAITH HILL
100053 A BEAUTIFUL LIE 3 SECONDS TO MARS
100054 A DIFFERENT CORNER GEORGE MICHAEL
100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND
100056 A FACE LIKE THAT PET SHOP BOYS
100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM
500164 A KIND OF HUSH HERMAN'S HERMITS
500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF
500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG
100058 A KISS WITH A FIST FLORENCE
100059 A LIGHT THAT NEVER COMES LINKIN PARK
500167 A LITTLE BIT LONGER JONAS BROTHERS
500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES
500170 A LITTLE BIT MORE DR.

AES 123Rd Convention Program October 5 – 8, 2007 Jacob Javits Convention Center, New York, NY

AES 123rd Convention Program, October 5 – 8, 2007, Jacob Javits Convention Center, New York, NY
Special Event: LIVE SOUND SYMPOSIUM: SURROUND LIVE V, Delivering the Experience
Thursday, October 4, 9:00 am – 4:00 pm, Broad Street Ballroom, 41 Broad Street, New York, NY 10004
Preconvention Special Event; additional fee applies
Chair: Frederick J. Ampel, Technology Visions, Overland Park, KS, USA
Panelists: Kurt Graffy, Fred Aldous, Randy Conrod, Jim Hilson, Michael Nunan, Michael Pappas, Tom Sahara, beyerdynamic, Neural Audio, and others
Once again the extremely popular Surround Live event returns to AES’s 123rd Convention in New York City. Created and Produced by Frederick Ampel of Technology Visions with major support from the Sports Video Group, this marks the event’s fifth consecutive workshop exclusively provided to the AES.
Program:
8:15 am – Registration and Continental Breakfast
9:00 am – Event Introduction – Frederick Ampel
9:10 am – Andrew Goldberg – K&H System Overview
9:20 am – The Why and How of Surround – Kurt Graffy, Arup
9:50 am – Coffee Break
10:00 am – Neural Audio Overview
10:10 am – TiMax Overview and Demonstration
10:20 am – Fred Aldous – Fox Sports
10:55 am – Jim Hilson – Dolby Labs
11:40 am – Mike Pappas – KUVO Radio
12:25 pm – Lunch
1:00 pm – Tom Sahara – Turner Networks
1:40 pm – Sports Video Group Panel – Ken Kirschenbaumer
2:45 pm – Afternoon Break
3:00 pm – beyerdynamic – Headzone Overview
3:10 pm – Mike Nunan – CTV Specialty Television, Canada
4:00 pm – Q&A; Closing Remarks
PLEASE NOTE: PROGRAM SUBJECT TO CHANGE PRIOR TO THE EVENT. FINAL PROGRAM WILL

John Bailey Randy Brecker Paquito D'rivera Lezlie Harrison

192496_HH_June_0 5/25/18 10:36 AM Page 1
June 2018. www.hothousejazz.com. Where To Go & Who To See Since 1982. Festival & Outdoor Concert Guide, pages 30-41. THE LATIN SIDE OF HOT HOUSE, P42. Smoke Jazz & Supper Club, Page 17. Blue Note, Page 19. Jazz Forum, Page 10. Smalls Jazz Club, Page 10. Lezlie Harrison, Paquito D'Rivera, Randy Brecker, John Bailey.
WINNING SPINS, By George Kanzler
TRUMPET PLAYERS ARE BASICALLY extroverts, confident and proud with a sound and tone to match. That's true of the two trumpeters whose albums comprise this Winning Spins: John Bailey and Randy Brecker. Both are veterans of the jazz scene, but with very different career arcs. John has toiled as a first-call trumpeter for big bands and recording ses-
outing on soprano sax. Live 1988, Randy Brecker Quintet (MVDvisual, DVD & CD), features the reissue of a long out-of-print album as a CD, accompanying a previously unreleased DVD of the live date, at Greenwich Village's Sweet Basil, one of New York's most prominent jazz clubs in the 1980s and 1990s.

AD GUITAR INSTRUCTION** 943 Songs, 2.8 Days, 5.36 GB

**AD GUITAR INSTRUCTION** 943 songs, 2.8 days, 5.36 GB
Columns: Name, Time, Album, Artist
1 I Am Loved 3:27 The Golden Rule Above the Golden State
2 Highway to Hell TUNED 3:32 AD Tuned Files and Edits AC/DC
3 Dirty Deeds Tuned 4:16 AD Tuned Files and Edits AC/DC
4 TNT Tuned 3:39 AD Tuned Files and Edits AC/DC
5 Back in Black 4:20 Back in Black AC/DC
6 Back in Black Too Slow 6:40 Back in Black AC/DC
7 Hells Bells 5:16 Back in Black AC/DC
8 Dirty Deeds Done Dirt Cheap 4:16 Dirty Deeds Done Dirt Cheap AC/DC
9 It's A Long Way To The Top ( If You… 5:15 High Voltage AC/DC
10 Who Made Who 3:27 Who Made Who AC/DC
11 You Shook Me All Night Long 3:32 AC/DC
12 Thunderstruck 4:52 AC/DC
13 TNT 3:38 AC/DC
14 Highway To Hell 3:30 AC/DC
15 For Those About To Rock (We Sal… 5:46 AC/DC
16 Rock n' Roll Ain't Noise Pollution 4:13 AC/DC
17 Blow Me Away in D 3:27 AD Tuned Files and Edits AD Tuned Files
18 F.S.O.S. in D 2:41 AD Tuned Files and Edits AD Tuned Files
19 Here Comes The Sun Tuned and… 4:48 AD Tuned Files and Edits AD Tuned Files
20 Liar in E 3:12 AD Tuned Files and Edits AD Tuned Files
21 LifeInTheFastLaneTuned 4:45 AD Tuned Files and Edits AD Tuned Files
22 Love Like Winter E 2:48 AD Tuned Files and Edits AD Tuned Files
23 Make Damn Sure in E 3:34 AD Tuned Files and Edits AD Tuned Files
24 No More Sorrow in D 3:44 AD Tuned Files and Edits AD Tuned Files
25 No Reason in E 3:07 AD Tuned Files and Edits AD Tuned Files
26 The River in E 3:18 AD Tuned Files and Edits AD Tuned Files
27 Dream On 4:27 Aerosmith's Greatest Hits Aerosmith
28 Sweet Emotion