Thought and Knowledge

Recommended publications

The Devil's Picture-Books: A History of Playing Cards

PREFACE. "The Devil's Books" was the name bestowed upon Playing-cards by the Puritans and other pious souls who were probably in hopes that this name would alarm timid persons and so prevent their use. Whether or not his Satanic Majesty originated Playing-cards, we have no means of discovering; but it is more probable that he only inspired their invention, and placed them in the hands of mankind, who have eagerly adopted this simple means of amusing themselves, and have used it according to the good or evil which predominated in their own breasts. Many learned men have written books or treatises on Playing-cards, and I am indebted for a large part of the information contained in this history to "Les Cartes à Jouer," by M. Paul la Croix; "Facts and Speculations about Playing-cards," by Mr. Chatto; "The History of Playing-cards," by the Rev. Edward Taylor; and "The History of Playing-cards," by Mr. Singer. These books are now out of print, and somewhat difficult to obtain; and I hope, by bringing into a small compass the principal features set forth in them, I shall be able to place before a number of readers interesting facts that would be otherwise unobtainable. Hearty thanks are due to the custodians of the National Museum in Washington, who have aided me in every way in their power, and also to the many kind friends who have sought far and wide for unique and uncommon packs of cards, and helped materially by gathering facts relating to them for me.

The Reasoning Skills and Thinking Dispositions of Problem Gamblers: a Dual-Process Taxonomy

Journal of Behavioral Decision Making J. Behav. Dec. Making, 20: 103–124 (2007) Published online 22 September 2006 in Wiley InterScience (www.interscience.wiley.com) DOI: 10.1002/bdm.544 The Reasoning Skills and Thinking Dispositions of Problem Gamblers: A Dual-Process Taxonomy MAGGIE E. TOPLAK1*, ELEANOR LIU2, ROBYN MACPHERSON3, TONY TONEATTO2 and KEITH E. STANOVICH3 1York University, Canada 2Centre for Addiction and Mental Health, Toronto, Canada 3University of Toronto, Canada ABSTRACT We present a taxonomy that categorizes the types of cognitive failure that might result in persistent gambling. The taxonomy subsumes most previous theories of gambling behavior, and it defines three categories of cognitive difficulties that might lead to gambling problems: The autonomous set of systems (TASS) override failure, missing TASS output, and mindware problems. TASS refers to the autonomous set of systems in the brain (which are executed rapidly and without volition, are not under conscious control, and are not dependent on analytic system output). Mindware consists of rules, procedures, and strategies available for explicit retrieval. Seven of the eight tasks administered to pathological gamblers, gamblers with subclinical symptoms, and control participants were associated with problem gambling, and five of the eight were significant predictors in analyses that statistically controlled for age and cognitive competence. In a commonality analysis, an indicator from each of the three categories of cognitive difficulties explained significant unique variance in problem gambling, indicating that each of the categories had some degree of predictive specificity. Copyright © 2006 John Wiley & Sons, Ltd.
key words dual-process theories; problem gambling; human reasoning; intuitive and analytic processing Pathological gambling is defined as "persistent and recurrent maladaptive gambling behavior that disrupts personal, family, and vocational pursuits" (DSM-IV-TR, American Psychiatric Association, 2000, p. -

Meta-Effectiveness Excerpts from Cognitive Productivity: Using Knowledge to Become Profoundly Effective

Meta-effectiveness Excerpts from Cognitive Productivity: Using Knowledge to Become Profoundly Effective Luc P. Beaudoin, Ph.D. (Cognitive Science) Adjunct Professor of Education Adjunct Professor of Cognitive Science Simon Fraser University EDB 7505, 8888 University Drive Burnaby, BC V5A 1S6 Canada [email protected] Skype: LPB2ha http://sfu.ca/~lpb/ Last Revised: 2015–05–31 (See revision history) I recently wrote a blog post containing some important concepts for understanding adult development of competence (including "learning to learn"). The overarching concept of that topic, and Cognitive Productivity, is meta-effectiveness, i.e., the abilities and dispositions (or "mindware") to use knowledge to become more effective. Effectance is a component of meta-effectiveness. The concepts of meta-effectiveness and effectance being both subtle and important, I am publishing in this document a few excerpts from my book (Cognitive Productivity) to elucidate them. You will notice that I am critical of the position espoused by Dennett (e.g., in Inside Jokes) that the tendency to think is pleasure-seeking in disguise. Also, I've modernized White's concept of effectance, aligning it with Sloman's concept of architecture-based motivation. I have also updated David Perkins' concept of mindware. Acknowledgements Footnotes Revision History 2015–05–31. First revision. Cognitive Productivity Using Knowledge to Become Profoundly Effective Luc P. Beaudoin This book is for sale at http://leanpub.com/cognitiveproductivity This version was published on 2015-04-12 This is a Leanpub book. Leanpub empowers authors and publishers with the Lean Publishing process. Lean Publishing is the act of publishing an in-progress ebook using lightweight tools and many iterations to get reader feedback, pivot until you have the right book and build traction once you do. -

Keith Stanovich Curriculum Vitae

CURRICULUM VITAE PERSONAL INFORMATION: Name: Keith E. Stanovich Date of Birth: December 13, 1950 Marital Status: Married Address: Department of Human Development and Applied Psychology Ontario Institute for Studies in Education University of Toronto 252 Bloor Street West Toronto, Ontario Canada M5S 1V6 Phone: 503-297-3912 E-mail [email protected] EDUCATION: The Ohio State University, 1969-1973 - B.A., 1973 (Major - Psychology) The University of Michigan, 1973-1977 - M. A., Ph.D. (Major - Psychology) POSITIONS HELD: Professor, University of Toronto, 1991-present Department of Human Development and Applied Psychology Member, Centre for Applied Cognitive Science Program Head, Human Development and Education, 1996 - 2001 Department of Human Development and Applied Psychology Canada Research Chair of Applied Cognitive Science, 2002-2005 Professor of Psychology and Education, Oakland University, 1987-1991 Associate Professor of Psychology and Education, Oakland University 1985 - 1987 Associate Professor of Psychology, Oakland University 1982 - 1985 Assistant Professor of Psychology, Oakland University 1977 - 1982 AWARDS AND NATIONAL COMMITTEES: Distinguished Scientific Contributions Award, Society for the Scientific Study of Reading, July, 2000. Distinguished Researcher Award, Special Education Research SIG, American Educational Research Association, March, 2008 Oscar S. Causey Award, National Reading Conference, 1996 Silvia Scribner Award, Division C, American Educational Research Association, March, 1997 Elected, -

The BG News September 13, 1991

Bowling Green State University ScholarWorks@BGSU BG News (Student Newspaper) University Publications 9-13-1991 The BG News September 13, 1991 Bowling Green State University Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation Bowling Green State University, "The BG News September 13, 1991" (1991). BG News (Student Newspaper). 5249. https://scholarworks.bgsu.edu/bg-news/5249 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. The BG News FRIDAY, SEPTEMBER 13, 1991 BOWLING GREEN, OHIO VOLUME 74, ISSUE 13 Briefly Inside The party's over: The past, the present and the future of East Merry Madness. See page two. Goal!: BG hosts weekend soccer tourney. See page ten. Sing along with In- Divisions endanger reformists MOSCOW (AP) - Soviet reformers told Secretary of State James A. Baker III Thursday that disarray in their ranks and ancient ethnic tensions are the greatest threats to a peaceful transition to democracy in the Soviet Union. Moscow Mayor Gavrill Popov said, too, that the forces that backed the three-day coup against Soviet President Mikhail S. Gorbachev in August "will al- Officials endeavor to better relations by Julie Potter city reporter In an effort to improve relations between the city and students, city officials went door-to-door to visit students last Thursday. -

The New World Witchery Guide to CARTOMANCY

The New World Witchery Guide to CARTOMANCY The Art of Fortune-Telling with Playing Cards By Cory Hutcheson, Proprietor, New World Witchery ©2010 Cory T. Hutcheson Copyright Notice All content herein subject to copyright © 2010 Cory T. Hutcheson. All rights reserved. Cory T. Hutcheson & New World Witchery hereby authorizes you to copy this document in whole or in part for non-commercial use only. In consideration of this authorization, you agree that any copy of these documents which you make shall retain all copyright and other proprietary notices contained herein. Each individual document published herein may contain other proprietary notices and copyright information relating to that individual document. Nothing contained herein shall be construed as conferring, by implication or otherwise any license or right under any patent or trademark of Cory T. Hutcheson, New World Witchery, or any third party. Except as expressly provided above nothing contained herein shall be construed as conferring any license or right under any copyright of the author. This publication is provided "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, OR NON-INFRINGEMENT. Some jurisdictions do not allow the exclusion of implied warranties, so the above exclusion may not apply to you. The information provided herein is for ENTERTAINMENT and INFORMATIONAL purposes only. Any issues of health, finance, or other concern should be addressed to a professional within the appropriate field. The author takes no responsibility for the actions of readers of this material. This publication may include technical inaccuracies or typographical errors. -

The Psychology of Problem Solving Edited by Janet E

Cambridge University Press 978-0-521-79333-9 - The Psychology of Problem Solving Edited by Janet E. Davidson and Robert J. Sternberg The Psychology of Problem Solving Problems are a central part of human life. The Psychology of Problem Solving organizes in one volume much of what psychologists know about problem solving and the factors that contribute to its success or failure. There are chapters by leading experts in this field, including Miriam Bassok, Randall Engle, Anders Ericsson, Arthur Graesser, Norbert Schwarz, Keith Stanovich, and Barry Zimmerman. The Psychology of Problem Solving is divided into four parts. Following an introduction that reviews the nature of problems and the history and methods of the field, Part II focuses on individual differences in, and the influence of, the abilities and skills that humans bring to problem situations. Part III examines motivational and emotional states and cognitive strategies that influence problem-solving performance, while Part IV summarizes and integrates the various views of problem solving proposed in the preceding chapters. Janet E. Davidson is Associate Professor of Psychology at Lewis & Clark College. She conducts research on several aspects of problem solving, including the roles that insight and metacognitive skills play in problem solving. Robert J. Sternberg is IBM Professor of Psychology and Education at Yale University and Director of the Yale Center for the Psychology of Abilities, Competencies and Expertise (PACE Center). Professor Sternberg is Editor of Contemporary Psychology and past Editor of Psychological Bulletin. Together, Professors Davidson and Sternberg have edited two previous books, Conceptions of Giftedness (Cambridge, 1986) and The Nature of Insight (1995). -

June 23, 1932

„ municipality alm-fiic) rate _l'l.ll> (VlVMIS. M \|Iti:t( .2.1 M-n m BARRINGTQ>, IIJJN018, THI11SI>AV. JINK *», 1188 James Doyle Carries dp Find Relief From Summer ^te«n Committees Summer and Season's on i$ Flower Garden HSat at Bar ring ton's Pool; Plan Local Park Bo 175 At tend Annual for Departed Friend Fhat Sweltering Heat Appointed by Lions Swimming andDiving Instruction Wave Arrive Together A man who devotes his time Votes 26 Per Cen Alumni Banquet at and Interest to u Jtlyilntf memorial Sun mer arrived at 10:23 a, in. -,t Monday Meeting to n departed, frhjml is James The nrrlvi 1 of summer attended by port was voted'by. the nark district riic»d|y and brought, with It a Itayle, for many years an infl- warmer weaklier that drove the mer eoinmlsslonera at a meeting last night. Appropriation Cut constantly rising temperature School Auditorium mate friend and keen admirer of cury up intc (he OO'H made the North It will, be in the form of a ¢2.50 that teached its maximum late -j A. W. Meyer who died Wti sum- Sid*1 park sv intnilng- pool a.mecca for mtnthly ticket Which will entitle the Wednesday afternoon whon the i ' -— , ilt.i„w -Kleports Wntht on , roer. thoNe scekim relief from the. swelter pewon to w 10m it is issued admission Commissioners Lead Munici lk«rm)metfv registered 08 de Dancing Is Added to Usual („n\i'ntio0 Held ing hent. \ 'eilnesday, when the heat to the pool as often and as many grees In the shade, according to • vt.itt Mr. -

NGA | 2014 Annual Report

NATIONAL GALLERY OF ART 2014 ANNUAL REPORT ART & EDUCATION Juliet C. Folger BOARD OF TRUSTEES COMMITTEE Marina Kellen French (as of 30 September 2014) Frederick W. Beinecke Morton Funger Chairman Lenore Greenberg Earl A. Powell III Rose Ellen Greene Mitchell P. Rales Frederic C. Hamilton Sharon P. Rockefeller Richard C. Hedreen Victoria P. Sant Teresa Heinz Andrew M. Saul Helen Henderson Benjamin R. Jacobs FINANCE COMMITTEE Betsy K. Karel Mitchell P. Rales Linda H. Kaufman Chairman Mark J. Kington Jacob J. Lew Secretary of the Treasury Jo Carole Lauder David W. Laughlin Frederick W. Beinecke Sharon P. Rockefeller Frederick W. Beinecke Sharon P. Rockefeller LaSalle D. Leffall Jr. Chairman President Victoria P. Sant Edward J. Mathias Andrew M. Saul Robert B. Menschel Diane A. Nixon AUDIT COMMITTEE John G. Pappajohn Frederick W. Beinecke Sally E. Pingree Chairman Tony Podesta Mitchell P. Rales William A. Prezant Sharon P. Rockefeller Diana C. Prince Victoria P. Sant Robert M. Rosenthal Andrew M. Saul Roger W. Sant Mitchell P. Rales Victoria P. Sant B. Francis Saul II TRUSTEES EMERITI Thomas A. Saunders III Julian Ganz, Jr. Leonard L. Silverstein Alexander M. Laughlin Albert H. Small David O. Maxwell Benjamin F. Stapleton John Wilmerding Luther M. Stovall Alexa Davidson Suskin EXECUTIVE OFFICERS Christopher V. Walker Frederick W. Beinecke Diana Walker President William L Walton Earl A. Powell III John R. West Director Andrew M. Saul John G. Roberts Jr. Dian Woodner Chief Justice of the Franklin Kelly United States Deputy Director and Chief Curator HONORARY TRUSTEES’ William W. McClure COUNCIL Treasurer (as of 30 September 2014) Darrell R. -

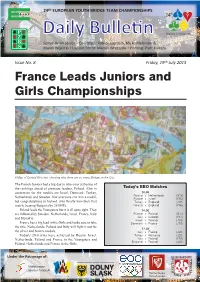

Daily Bulletin

24th EUROPEAN YOUTH BRIDGE TEAM CHAMPIONSHIPS Daily Bulletin Editor: Brian Senior • Co-Editors: Patrick Jourdain, Micke Melander & Marek Wojcicki • Lay-out Editor: Maciek Wreczycki • Printing: Piotr Kulesza Issue No. 8 Friday, 19th July 2013 France Leads Juniors and Girls Championships A Map of Central Wroclaw, showing why there are so many Bridges in the City The French Juniors had a big day to take over at the top of the rankings ahead of previous leaders, Poland. Also in contention for the medals are Israel, Denmark, Turkey, Netherlands and Sweden. Not everyone can win a medal, but congratulations to Ireland, who finally won their first match, beating Bulgaria by 28 IMPs. Poland leads the Youngsters but it is all quite tight. They are followed by Sweden, Netherlands, Israel, France, Italy and Slovakia. France has a big lead in the Girls and looks sure to take the title. Netherlands, Poland and Italy will fight it out for the silver and bronze medals. Today's 20-0 wins were achieved by Russia, Israel, Netherlands, Poland and France in the Youngsters and Poland, Netherlands and France in the Girls. Today's BBO Matches 10.00 Poland v Netherlands (G16) France v Israel (Y16) Turkey v England (J18) Poland v England (Y16) 14.00 France v Poland (G17) Italy v Sweden (Y17) Israel v Poland (J19) Denmark v France (J19) 17.20 Italy v France (J20) Turkey v Romania (J20) Italy v Poland (Y18) England v Poland (J20) Under the Patronage of: Ministerstwo Sportu i Turystyki 24th EUROPEAN BRIDGE YOUTH TEAM CHAMPIONSHIPS • Wrocław, Poland 11–20 July 2013 Results – Junior Teams Round 16 Rankings after 17 Rounds IMPs VPs Rank Team VPs Table Home Team Visiting Team Home Visit. -

Than 50 Effects Using the Romero Box

LIMITLESS MORE THAN 50 EFFECTS USING THE ROMERO BOX Prologue Anthony Blake This book is dedicated to Antonio Ferragut, Master of the art of wit. TITLE Limitless. More than 50 effects using the Romero Box. CREATED BY Romero Magic. COVER DESIGN Sansón y Dalila BOOK LAYOUT Belén Romero - Sansón y Dalila PHOTOGRAPHER Concepción Jurado PRINTED BY Sansón y Dalila TRANSLATED BY Fernando Rosal. Madrid, July 2014. 4 Index 6 Prologue 8 Foreword 10 The Box 11 Basic technique. 12 Loading cards. 14 Types of effects. I•15 Romero Box Classics 16 Card prediction/mind reading. 19 Torn and restored card. 23 Card at number. 25 Do as I do. 28 Double coincidence. II•31 Transpositions 32 Card to card box. 35 Signed card to card box. 38 Card to any location. 41 Card to cell phone. 43 Houdini’s escape. 45 Two card transposition. 47 Transposition with a twist. III•49 Coins 50 Coin through card box. 53 Copper-silver transposition. 55 Copper-silver finale. IV•61 Mentalism and other mysteries 62 Business Card revelation. 65 Divination of the serial number of a bank note. 67 The magazine test. 70 The newspaper divination. 71 The matches. 73 The living/dead test. 75 The puzzle. 76 Open prediction. 81 ESP coincidence. V•83 Transformations and strange phenomena. 84 Transformation of a card into another. 86 Transformation of a business card. -

119 ^6L95 \ 37.9 Mill Tax Jlaje Adopted by Directors

m o H • ' ’ •’ ■■fR6 K. v*»Wnrfi*.**M.**»A* V' •* TIIURSDAY, MAY 4, 1967 PAGE TWENTY-FOUR E u m n g Average Dhlly Net Priess Run The Weather For The Week Ended Fair, coktt tonight, low 88- heavy In spots; In fact a check if you were famlUex wWi the April 28, 1967 A luncheon for past presi of orchestrad personnel Bhfcwed score. ^ F R E E 40; ffdr tomorrow, high In mid About Town dents of Vetelrans of World War Flutist, Tenor, String Group Hartt Offers as many cellos as first violins. Since hardly anybody In ^ | I Auxiliary wiU_be held Safur- This represented one more coUo audience wop famdllf with D ELIV ER Y 15,134 - S t Bridget Roeairy Society day, May 13 at 2 p.m. at the In , Top Effort and one less vloUn than Strauss the score to this ^ tancheater-r-A City of Village Cltarm WW sponsor a Runtvmai^ Sale Green Dolphin, 926 S. Main St, Concert hy Chuminade _ , , . , envisioned, and the orcbeertstt- suppose it reaily m a ffe d . ARTHUR DRUO B atu r^y at 10 . a.m. ‘ in the Cheshire. Reservations close I "A i«i g tlon is so caaefully wrought that Pairanov was just unf^unate m (Classified Advertising ~«ll Page 21) PRICE SEVEN CENTS baiseniient of the church. The Monday and may be made with Ohamdnade Musical Club will x & x effect was quite noticeaible having mte in the audience. VOL. LXXXVI, NO. 183 (TWENTY-FOUR PAGES—TWO SECTIONS) MANCHESTER, CONN., FRIDAY, MAY 5, 1967 event is open to the pubHc.