STAT 830: Statistical Theory I


Recommended publications

Computing the Oja Median in R: The Package OjaNP

Daniel Fischer (Natural Resources Institute Finland (Luke), Finland, and School of Health Sciences, University of Tampere, Finland); Karl Mosler (Institute of Econometrics and Statistics, University of Cologne, Germany); Jyrki Möttönen (Department of Social Research, University of Helsinki, Finland); Klaus Nordhausen (Department of Mathematics and Statistics, University of Turku, Finland, and School of Health Sciences, University of Tampere, Finland); Oleksii Pokotylo (Cologne Graduate School, University of Cologne, Germany); Daniel Vogel (Institute of Complex Systems and Mathematical Biology, University of Aberdeen, United Kingdom). June 27, 2016. arXiv:1606.07620v1 [stat.CO] 24 Jun 2016. Abstract: The Oja median is one of several extensions of the univariate median to the multivariate case. It has many nice properties, but is computationally demanding. In this paper, we first review the properties of the Oja median and compare it to other multivariate medians. Afterwards we discuss four algorithms to compute the Oja median, which are implemented in our R-package OjaNP. Besides these algorithms, the package also contains functions to compute Oja signs, Oja signed ranks, Oja ranks, and the related scatter concepts. To illustrate their use, the corresponding multivariate one- and C-sample location tests are implemented. Keywords: Oja median, Oja signs, Oja signed ranks, Oja ranks, R, C, C++. 1 Introduction: The univariate median is a popular location estimator. It is, however, not straightforward to generalize it to the multivariate case since no known generalization retains all properties of the univariate estimator, and therefore different generalizations emphasize different properties of the univariate median.

Annual Report of the Center for Statistical Research and Methodology Research and Methodology Directorate Fiscal Year 2017

[Figure: CSRM expertise for collaboration and research (missing data, edit, and imputation; survey sampling: estimation and modeling; record linkage; small area estimation; time series and seasonal adjustment; experimentation and modeling; simulation, data visualization, and modeling) and its customers (Decennial, Demographic, Economic, and Field Directorates; other internal and external customers).] Since August 1, 1933: “… As the major figures from the American Statistical Association (ASA), Social Science Research Council, and new Roosevelt academic advisors discussed the statistical needs of the nation in the spring of 1933, it became clear that the new programs, in particular the National Recovery Administration, would require substantial amounts of data and coordination among statistical programs. Thus in June of 1933, the ASA and the Social Science Research Council officially created the Committee on Government Statistics and Information Services (COGSIS) to serve the statistical needs of the Agriculture, Commerce, Labor, and Interior departments … COGSIS set … goals in the field of federal statistics … (It) wanted new statistical programs, for example to measure unemployment and address the needs of the unemployed … (It) wanted a coordinating agency to oversee all statistical programs, and (it) wanted to see statistical research and experimentation organized within the federal government … In August 1933 Stuart A. Rice, President of the ASA and acting chair of COGSIS, … (became) assistant director of the (Census) Bureau. Joseph Hill (who had been at the Census Bureau since 1900 and who provided the concepts and early theory for what is now the methodology for apportioning the seats in the U.S. ...

The Theory of the Design of Experiments

D.R. Cox, Honorary Fellow, Nuffield College, Oxford, UK, and N. Reid, Professor of Statistics, University of Toronto, Canada. Chapman & Hall/CRC, Boca Raton, London, New York, Washington, D.C. Library of Congress Cataloging-in-Publication Data: Cox, D. R. (David Roxbee). The theory of the design of experiments / D. R. Cox, N. Reid. p. cm. -- (Monographs on statistics and applied probability ; 86). Includes bibliographical references and index. ISBN 1-58488-195-X (alk. paper). 1. Experimental design. I. Reid, N. II. Title. III. Series. QA279 .C73 2000. 001.4'34--dc21. 00-029529 CIP. This book contains information obtained from authentic and highly regarded sources. Reprinted material is quoted with permission, and sources are indicated. A wide variety of references are listed. Reasonable efforts have been made to publish reliable data and information, but the author and the publisher cannot assume responsibility for the validity of all materials or for the consequences of their use. Neither this book nor any part may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, microfilming, and recording, or by any information storage or retrieval system, without prior permission in writing from the publisher. The consent of CRC Press LLC does not extend to copying for general distribution, for promotion, for creating new works, or for resale. Specific permission must be obtained in writing from CRC Press LLC for such copying. Direct all inquiries to CRC Press LLC, 2000 N.W. ...

Statistical Theory

Prof. Gesine Reinert, November 23, 2009
Aim: To review and extend the main ideas in Statistical Inference, both from a frequentist viewpoint and from a Bayesian viewpoint. This course serves not only as background to other courses; it will also provide a basis for developing novel inference methods when faced with a new situation which includes uncertainty. Inference here includes estimating parameters and testing hypotheses.
Overview
• Part 1: Frequentist Statistics
  - Chapter 1: Likelihood, sufficiency and ancillarity. The Factorization Theorem. Exponential family models.
  - Chapter 2: Point estimation. When is an estimator a good estimator? Covering bias and variance, information, efficiency. Methods of estimation: Maximum likelihood estimation, nuisance parameters and profile likelihood; method of moments estimation. Bias and variance approximations via the delta method.
  - Chapter 3: Hypothesis testing. Pure significance tests, significance level. Simple hypotheses, Neyman-Pearson Lemma. Tests for composite hypotheses. Sample size calculation. Uniformly most powerful tests, Wald tests, score tests, generalised likelihood ratio tests. Multiple tests, combining independent tests.
  - Chapter 4: Interval estimation. Confidence sets and their connection with hypothesis tests. Approximate confidence intervals. Prediction sets.
  - Chapter 5: Asymptotic theory. Consistency. Asymptotic normality of maximum likelihood estimates, score tests. Chi-square approximation for generalised likelihood ratio tests. Likelihood confidence regions. Pseudo-likelihood tests.
• Part 2: Bayesian Statistics
  - Chapter 6: Background. Interpretations of probability; the Bayesian paradigm: prior distribution, posterior distribution, predictive distribution, credible intervals. Nuisance parameters are easy.
  - Chapter 7: Bayesian models. Sufficiency, exchangeability. De Finetti's Theorem and its interpretation in Bayesian statistics.
  - Chapter 8: Prior distributions. Conjugate priors.

Chapter 5 Statistical Inference

CHAPTER OUTLINE: Section 1 Why Do We Need Statistics? Section 2 Inference Using a Probability Model. Section 3 Statistical Models. Section 4 Data Collection. Section 5 Some Basic Inferences. In this chapter, we begin our discussion of statistical inference. Probability theory is primarily concerned with calculating various quantities associated with a probability model. This requires that we know what the correct probability model is. In applications, this is often not the case, and the best we can say is that the correct probability measure to use is in a set of possible probability measures. We refer to this collection as the statistical model. So, in a sense, our uncertainty has increased; not only do we have the uncertainty associated with an outcome or response as described by a probability measure, but now we are also uncertain about what the probability measure is. Statistical inference is concerned with making statements or inferences about characteristics of the true underlying probability measure. Of course, these inferences must be based on some kind of information; the statistical model makes up part of it. Another important part of the information will be given by an observed outcome or response, which we refer to as the data. Inferences then take the form of various statements about the true underlying probability measure from which the data were obtained. These take a variety of forms, which we refer to as types of inferences. The role of this chapter is to introduce the basic concepts and ideas of statistical inference. The most prominent approaches to inference are discussed in Chapters 6, 7, and 8.

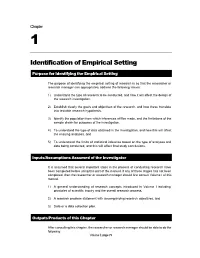

Identification of Empirical Setting

Chapter 1. Purpose for Identifying the Empirical Setting: The purpose of identifying the empirical setting of research is to enable the researcher or research manager to do the following: 1) understand the type of research to be conducted, and how it will affect the design of the research investigation; 2) establish clearly the goals and objectives of the research, and how these translate into testable research hypotheses; 3) identify the population about which inferences will be made, and the limitations of the sample drawn for purposes of the investigation; 4) understand the type of data obtained in the investigation, and how this will affect the ensuing analyses; and 5) understand the limits of statistical inference based on the type of analyses and data involved, and how this will affect final study conclusions. Inputs/Assumptions Assumed of the Investigator: It is assumed that several important steps in the process of conducting research have been completed before using this part of the manual. If any of these stages has not been completed, then the researcher or research manager should first consult Volume I of this manual. The assumed inputs are: 1) a general understanding of research concepts introduced in Volume I, including principles of scientific inquiry and the overall research process; 2) a research problem statement with accompanying research objectives; and 3) data or a data collection plan. Outputs/Products of this Chapter: After consulting this chapter, the researcher or research ...

Statistical Inference: Paradigms and Controversies in Historic Perspective

Jostein Lillestøl, NHH, 2014
1. Five paradigms
We will cover the following five lines of thought:
1. Early Bayesian inference and its revival: Inverse probability – Non-informative priors – “Objective”. Bayes (1763), Laplace (1774), Jeffreys (1931), Bernardo (1975)
2. Fisherian inference: Evidence oriented – Likelihood – Fisher information – Necessity. Fisher (1921 and later)
3. Neyman-Pearson inference: Action oriented – Frequentist/Sample space – Objective. Neyman (1933, 1937), Pearson (1933), Wald (1939), Lehmann (1950 and later)
4. Neo-Bayesian inference: Coherent decisions – Subjective/personal. De Finetti (1937), Savage (1951), Lindley (1953)
5. Likelihood inference: Evidence based – likelihood profiles – likelihood ratios. Barnard (1949), Birnbaum (1962), Edwards (1972)
Classical inference as it has been practiced since the 1950s is really none of these in its pure form. It is more like a pragmatic mix of 2 and 3, in particular with respect to testing of significance, pretending to be both action and evidence oriented, which is hard to fulfill in a consistent manner. To keep our minds on track we do not single this out as a separate paradigm, but will discuss it at the end. A main concern through the history of statistical inference has been to establish a sound scientific framework for the analysis of sampled data. Concepts were initially often vague and disputed, but even after their clarification, various schools of thought have at times been in strong opposition to each other. When we try to describe the approaches here, we will use the notions of today. All five paradigms of statistical inference are based on modeling the observed data x given some parameter or “state of the world” θ, which essentially corresponds to stating the conditional distribution f(x|θ) (or making some assumptions about it).

Topics of Statistical Theory for Register-Based Statistics

Zhang, Li-Chun, Statistics Norway, Kongensgt. 6, Pb 8131 Dep, N-0033 Oslo, Norway. 1. Introduction: For some decades now administrative registers have been an important data source for official statistics alongside survey sampling and population census. Not only do they provide frames and valuable auxiliary information for sample surveys and censuses; systems of inter-linked statistical registers (i.e. registers for statistical uses) have also been developed on the basis of various available administrative registers to produce a wide range of purely register-based statistics (e.g. Statistics Denmark, 1995; Statistics Finland, 2004; Wallgren and Wallgren, 2007). A summary of the development of some of the key statistical registers in the Nordic countries is given in UNECE (2007, Table on page 5), the earliest of which already came into existence in the late 1960s and early 1970s. Statistics Denmark was the first to conduct a register-based census in 1981, and the next census in 2011 will be completely register-based in all Nordic countries, whereas the so-called virtual census (e.g. Schulte Nordholt, 2005), which combines data from registers and various sample surveys in a more ‘traditional’ way, will be implemented in a number of European countries. The major advantages associated with the statistical use of administrative registers include reduction of response burden, long-term cost efficiency, as well as potential for detailed spatial-demographic and longitudinal statistics. The trend towards more extensive use of administrative data has long been recognized by statisticians around the world (e.g. ...

Lecture Notes on Statistical Theory

Ryan Martin, Department of Mathematics, Statistics, and Computer Science, University of Illinois at Chicago, www.math.uic.edu/~rgmartin, January 8, 2015
Note: These notes are meant to supplement the lectures for Stat 411 at UIC given by the author. The course roughly follows the text by Hogg, McKean, and Craig, Introduction to Mathematical Statistics, 7th edition, 2012, henceforth referred to as HMC. The author makes no guarantees that these notes are free of typos or other, more serious errors.
Contents
1 Statistics and Sampling Distributions
  1.1 Introduction
  1.2 Model specification
  1.3 Two kinds of inference problems
    1.3.1 Point estimation
    1.3.2 Hypothesis testing
  1.4 Statistics
  1.5 Sampling distributions
    1.5.1 Basics
    1.5.2 Asymptotic results
    1.5.3 Two numerical approximations
  1.6 Appendix
    1.6.1 R code for Monte Carlo simulation in Example 1.7
    1.6.2 R code for bootstrap calculation in Example 1.8
2 Point Estimation Basics
  2.1 Introduction
  2.2 Notation and terminology
  2.3 Properties of estimators
    2.3.1 Unbiasedness
    2.3.2 Consistency
    2.3.3 Mean-square error
  2.4 Where do estimators come from?
3 Likelihood and Maximum Likelihood Estimation
  3.1 Introduction
  3.2 Likelihood
  3.3 Maximum likelihood estimators (MLEs)
  3.4 Basic properties
    3.4.1 Invariance
    3.4.2 Consistency
  ...

Statistical Inference: A Work in Progress

Ronald Christensen, Department of Mathematics and Statistics, University of New Mexico. Copyright © 2019. Statistical Inference: A Work in Progress. Springer. Seymour and Wes. Preface: “But to us, probability is the very guide of life.” Joseph Butler (1736). The Analogy of Religion, Natural and Revealed, to the Constitution and Course of Nature, Introduction. https://www.loc.gov/resource/dcmsiabooks.analogyofreligio00butl_1/?sp=41 Normally, I wouldn’t put anything this incomplete on the internet but I wanted to make parts of it available to my Advanced Inference Class, and once it is up, you have lost control. Seymour Geisser was a mentor to Wes Johnson and me. He was Wes’s Ph.D. advisor. Near the end of his life, Seymour was finishing his 2005 book Modes of Parametric Statistical Inference and needed some help. Seymour asked Wes and Wes asked me. I had quite a few ideas for the book but then I discovered that Seymour hated anyone changing his prose. That was the end of my direct involvement. The first idea for this book was to revisit Seymour’s. (So far, that seems only to occur in Chapter 1.) Thinking about what Seymour was doing was the inspiration for me to think about what I had to say about statistical inference. And much of what I have to say is inspired by Seymour’s work as well as the work of my other professors at Minnesota, notably Christopher Bingham, R. Dennis Cook, Somesh Das Gupta, Morris L. Eaton, Stephen E. Fienberg, Narish Jain, F. Kinley Larntz, Frank B. ...

Principles of Statistical Inference

In this important book, D. R. Cox develops the key concepts of the theory of statistical inference, in particular describing and comparing the main ideas and controversies over foundational issues that have rumbled on for more than 200 years. Continuing a 60-year career of contribution to statistical thought, Professor Cox is ideally placed to give the comprehensive, balanced account of the field that is now needed. The careful comparison of frequentist and Bayesian approaches to inference allows readers to form their own opinion of the advantages and disadvantages. Two appendices give a brief historical overview and the author’s more personal assessment of the merits of different ideas. The content ranges from the traditional to the contemporary. While specific applications are not treated, the book is strongly motivated by applications across the sciences and associated technologies. The underlying mathematics is kept as elementary as feasible, though some previous knowledge of statistics is assumed. This book is for every serious user or student of statistics – in particular, for anyone wanting to understand the uncertainty inherent in conclusions from statistical analyses. Principles of Statistical Inference, D.R. Cox, Nuffield College, Oxford. Cambridge University Press: Cambridge, New York, Melbourne, Madrid, Cape Town, Singapore, São Paulo. Cambridge University Press, The Edinburgh Building, Cambridge CB2 8RU, UK. Published in the United States of America by Cambridge University Press, New York. www.cambridge.org Information on this title: www.cambridge.org/9780521866736 © D. R. Cox 2006. This publication is in copyright. Subject to statutory exception and to the provision of relevant collective licensing agreements, no reproduction of any part may take place without the written permission of Cambridge University Press.

Optimal Design for A/B Testing in the Presence of Covariates and Network Connection

Optimal Design for A/B Testing in the Presence of Covariates and Network Connection. Qiong Zhang (School of Mathematical and Statistical Sciences, Clemson University) and Lulu Kang (Department of Applied Mathematics, Illinois Institute of Technology). arXiv:2008.06476v1 [stat.ME] 14 Aug 2020. Abstract: A/B testing, also known as controlled experiments, refers to the statistical procedure of conducting an experiment to compare two treatments applied to different testing subjects. For example, many companies offering online services frequently conduct A/B testing on their users, who are connected in social networks. Since two connected users usually share some similar traits, we assume that their measurements are related to their network adjacency. In this paper, we assume that the users, or the test subjects of the experiments, are connected on an undirected network. The subjects’ responses are affected by the treatment assignment, the observed covariate features, as well as the network connection. We include the variation from these three sources in a conditional autoregressive model. Based on this model, we propose a design criterion on treatment allocation that minimizes the variance of the estimated treatment effect. Since the design criterion depends on an unknown network correlation parameter, we propose a Bayesian optimal design method and a hybrid solution approach to obtain the optimal design. Examples via synthetic and real social networks are shown to demonstrate the performance of the proposed approach. Keywords: A/B testing; Conditional autoregressive model; Controlled experiments; Bayesian optimal design; D-optimal design; Network correlation. 1 Introduction: A/B testing, also known as the controlled experiments approach, has been widely used in agricultural, clinical trials, engineering and science studies, marketing research, etc.