Lessons from the English Auxiliary System

Recommended publications

Alignment in Syntax: Quotative Inversion in English

Alignment in Syntax: Quotative Inversion in English. Benjamin Bruening, University of Delaware. Rough draft, February 5, 2014; comments welcome.

Abstract: This paper explores the idea that many languages have a phonological Align(ment) constraint that requires alignment between the tensed verb and C. This Align constraint is what is behind verb-second and many types of inversion phenomena generally. Numerous facts about English subject-auxiliary inversion and French stylistic inversion fall out from the way this Align constraint is stated in each language. The paper arrives at the Align constraint by way of a detailed re-examination of English quotative inversion. The syntactic literature has overwhelmingly accepted Collins and Branigan’s (1997) conclusion that the subject in quotative inversion is low, within the VP. This paper re-examines the properties of quotative inversion and shows that Collins and Branigan’s analysis is incorrect: quotative inversion subjects are high, in Spec-TP, and what moves is a full phrase, not just the verb. The constraints on quotative inversion, including the famous transitivity constraint, fall out from two independently necessary constraints: (1) a constraint on what can be stranded by phrasal movement like VP fronting, and (2) the aforementioned Align constraint which requires alignment between V and C. This constraint can then be seen to derive numerous seemingly unrelated facts in a single language, as well as across languages.

Keywords: Generalized Alignment, quotative inversion, subject-in-situ generalization, transitivity restrictions, subject-auxiliary inversion, stylistic inversion, verb second, phrasal movement

1 Introduction

This paper explores the idea that the grammar of many languages includes a phonological Align(ment) constraint that requires alignment between the tensed verb and C.

Topicalization in English and the Trochaic Requirement Augustin Speyer

University of Pennsylvania Working Papers in Linguistics, Volume 10, Issue 2: Selected Papers from NWAVE 32, Article 19 (1-1-2005). Topicalization in English and the Trochaic Requirement. Augustin Speyer. This paper is posted at ScholarlyCommons: http://repository.upenn.edu/pwpl/vol10/iss2/19

1 The loss of topicalization

The verb-second constraint (V2), which is at work in all other modern Germanic languages (e.g. Haeberli 2000:109), was lost in English in the course of the Middle English period. In other words: the usual word order in today's English is as shown in example (1). In earlier stages of English, however, one could also form sentences like (2a). This sentence shows V2: the verb is in second position, and is preceded by some constituent which can be something other than the subject. At a certain time sentences like (2a) became ungrammatical and were replaced by examples like (2b), which follow a new constraint, namely that the subject must precede the verb. Sentences like (1) are unaffected, not because they observe the V2 constraint but because they happen also to observe the subject-before-verb constraint.

(1) John hates beans.

Meaning and Linguistic Variation

Meaning and Linguistic Variation

Linguistic styles, particularly variations in pronunciation, carry a wide range of meaning – from speakers’ socioeconomic class to their mood or stance in the moment. This book examines the development of the study of sociolinguistic variation, from early demographic studies to a focus on the construction of social meaning in stylistic practice. It traces the development of the “Third Wave” approach to sociolinguistic variation, uncovering the stylistic practices that underlie broad societal patterns of change. Eckert charts the development of her thinking and of the emergence of a theoretical community around the “Third Wave” approach to social meaning. Featuring new material alongside earlier seminal work, it provides a coherent account of the social meaning of linguistic variation.

PENELOPE ECKERT is the Albert Ray Lang Professor of Linguistics and Anthropology at Stanford University. She is author of Jocks and Burnouts (1990) and Linguistic Variation as Social Practice (2000), co-editor of Style and Sociolinguistic Variation (Cambridge, 2002) with John R. Rickford and co-author of Language and Gender (Cambridge, 2003, 2013) with Sally McConnell-Ginet.

Meaning and Linguistic Variation: The Third Wave in Sociolinguistics. Penelope Eckert, Stanford University, California.

University Printing House, Cambridge CB2 8BS, United Kingdom; One Liberty Plaza, 20th Floor, New York, NY 10006, USA; 477 Williamstown Road, Port Melbourne, VIC 3207, Australia; 314–321, 3rd Floor, Plot 3, Splendor Forum, Jasola District Centre, New Delhi – 110025, India; 79 Anson Road, #06-04/06, Singapore 079906. Cambridge University Press is part of the University of Cambridge. It furthers the University’s mission by disseminating knowledge in the pursuit of education, learning, and research at the highest international levels of excellence.

Verbs Show an Action (Eg to Run)

VERBS

Verbs show an action (e.g. to run). The tricky part is that sometimes the action can't be seen on the outside (like run) – it is done on the inside (e.g. to decide). Sometimes the action even has to do with just existing, in other words to be (e.g. is, was, are, were, am).

Tenses

The three basic tenses are past, present and future.

Past – has already happened
Present – is happening
Future – will happen

To change a word to the past tense, add "-ed" (e.g. walk → walked). ***Watch out for the words that don't follow this (or any) rule (e.g. swim → swam)***

To change a word to the future tense, add "will" before the word (e.g. walk → will walk BUT not will walks).

Exercise 1

For each word, write down the correct tense in the appropriate space in the table below.

Table columns: Past, Present, Future
Words given: dance, will plant, looked, runs, shone, will lose, put, dig

Spelling note: when "-ed" is added to a word ending in "y", the "y" becomes an "i", e.g. try → tried.

These are the basic tenses. You can break present tense up into simple present (e.g. walk), present perfect (e.g. has walked) and present continuous (e.g. is walking). Both past and future tenses can also be broken up into simple, perfect and continuous. You do not need to know these categories, but be aware that each tense can be found in different forms.

Is it a verb?

Can it be acted out?
    yes: It's a verb!
    no: Can it change tense?
        yes: It's a verb!
        no: It's NOT a verb!

Exercise 2

In groups, decide which of the words your teacher gives you are verbs using the above diagram.

Modals and Auxiliaries 12

Verbs: Modals and Auxiliaries

An auxiliary is a helping verb. It is used with a main verb to form a verb phrase. For example:

• She was calling her friend.

Here the word calling is the main verb and the word was is an auxiliary verb. The words be, have, do, can, could, may, might, shall, should, must, will, would, used, need, dare, ought are called auxiliaries. The verbs be, have and do are often referred to as primary auxiliaries. They have a grammatical function in a sentence. The rest in the above list are called modal auxiliaries, which are also known as modals. They express attitude like permission, possibility, etc.

Note the forms of the primary auxiliaries.

| Auxiliary verbs | Present tense | Past tense |
|---|---|---|
| be | am, is, are | was, were |
| do | do, does | did |
| have | has, have | had |

The table below illustrates the application of these primary verbs.

| Primary auxiliary | Function | Example |
|---|---|---|
| be | used in the formation of continuous tenses | She is sewing a dress. I am leaving tomorrow. |
| be | in sentences where the action is more important than the subject | The missing child was found. |
| be | when followed by an infinitive, it is used to indicate a plan or an arrangement | We are to leave next week. |
| be | denotes command | You are to see the Principal right now. |
| have | used to form the perfect tenses | The carpenter has worked well. |
| have | used with the infinitive to indicate some kind of obligation | I had to work that day. |
| do | used to form the … | He doesn’t work at all. |

Introducing Sign-Based Construction Grammar IVA N A

September 4, 2012

1 Introducing Sign-Based Construction Grammar

IVAN A. SAG, HANS C. BOAS, AND PAUL KAY

1 Background

Modern grammatical research,[1] at least in the realms of morphosyntax, includes a number of largely nonoverlapping communities that have surprisingly little to do with one another. One – the Universal Grammar (UG) camp – is mainly concerned with a particular view of human languages as instantiations of a single grammar that is fixed in its general shape. UG researchers put forth highly abstract hypotheses making use of a complex system of representations, operations, and constraints that are offered as a theory of the rich biological capacity that humans have for language.[2] This community eschews writing explicit grammars of individual languages in favor of offering conjectures about the ‘parameters of variation’ that modulate the general grammatical scheme. These proposals are motivated by small data sets from a variety of languages. A second community, which we will refer to as the Typological (TYP) camp, is concerned with descriptive observations of individual languages, with particular concern for idiosyncrasies and complexities. Many TYP researchers eschew formal models (or leave their development to others), while others in this community refer to the theory they embrace as ‘Construction Grammar’ (CxG).

[1] For comments and valuable discussions, we are grateful to Bill Croft, Chuck Fillmore, Adele Goldberg, Stefan Müller, and Steve Wechsler. We also thank the people mentioned in footnote 8 below.

[2] The nature of these representations has changed considerably over the years. Seminal works include Chomsky 1965, 1973, 1977, 1981, and 1995.

On the Loci of Agreement: Inversion Constructions in Mapudungun Mark

On the Loci of Agreement: Inversion Constructions in Mapudungun. Mark C. Baker, Rutgers University.

1. Introduction: Ordinary and Unusual Aspects of Mapudungun Agreement

“Languages are all the same, but not boringly so.” I think this is my own maxim, not one of the late great Kenneth Hale’s. But it is nevertheless something that he taught me, by example, if not by explicit precept. Ken Hale believed passionately in a substantive notion of Universal Grammar that underlies all languages. But this did not blind him to the details—even the idiosyncrasies—of less-studied “local” languages. On the contrary, I believe it stimulated his famous zeal for those details and idiosyncrasies. It is against the backdrop of what is universal that idiosyncrasies become interesting and meaningful. Conversely, it is often the fact that quite different, language-particular constructions obey the same abstract constraints that provides the most striking evidence that those constraints are universal features of human language. It is in this Halean spirit that I present this study of agreement on verbs in Mapudungun, a language of uncertain genealogy spoken in the Andes of central Chile and adjoining areas of Argentina.[1] Many features of agreement in Mapudungun look

[1] This research was made possible thanks to a trip to Argentina in October 2000, to visit at the Universidad de Comahue in General Roca, Argentina. This gave me a chance to supplement my knowledge of Mapudungun from secondary sources by consulting with Argentine experts on this language, and by interviewing members of the Mapuche community in General Roca and Junin de los Andes, Argentina.

Verbnet Guidelines

VerbNet Annotation Guidelines

1. Why Verbs?
2. VerbNet: A Verb Class Lexical Resource
3. VerbNet Contents
   a. The Hierarchy
   b. Semantic Role Labels and Selectional Restrictions
   c. Syntactic Frames
   d. Semantic Predicates
4. Annotation Guidelines
   a. Does the Instance Fit the Class?
   b. Annotating Verbs Represented in Multiple Classes
   c. Things that look like verbs but aren’t
      i. Nouns
      ii. Adjectives
   d. Auxiliaries
   e. Light Verbs
   f. Figurative Uses of Verbs

1 Why Verbs?

Computational verb lexicons are key to supporting NLP systems aimed at semantic interpretation. Verbs express the semantics of an event being described as well as the relational information among participants in that event, and project the syntactic structures that encode that information. Verbs are also highly variable, displaying a rich range of semantic and syntactic behavior. Verb classifications help NLP systems to deal with this complexity by organizing verbs into groups that share core semantic and syntactic properties. VerbNet (Kipper et al., 2008) is one such lexicon, which identifies semantic roles and syntactic patterns characteristic of the verbs in each class and makes explicit the connections between the syntactic patterns and the underlying semantic relations that can be inferred for all members of the class. Each syntactic frame in a class has a corresponding semantic representation that details the semantic relations between event participants across the course of the event. In the following sections, each component of VerbNet is identified and explained.

VerbNet: A Verb Class Lexical Resource

VerbNet is a lexicon of approximately 5800 English verbs, and groups verbs according to shared syntactic behaviors, thereby revealing generalizations of verb behavior.

Stylistic Inversion in French: Equality and Inequality In

Subject Inversion in French: Equality and Inequality in LFG. Annie Zaenen and Ronald M. Kaplan, Palo Alto Research Center, {Kaplan|Zaenen}@parc.com

Abstract: We reanalyze the data presented in Bonami, Godard and Marandin (1999), Bonami & Godard (2001) and Marandin (2001) in an LFG framework and show that the facts about Stylistic Inversion fall out of the LFG treatment of equality. This treatment, however, necessitates a different approach to object-control and object-raising constructions. We treat these as cases of subsumption and suggest that subsumption, not equality, might be the default way of modeling this type of relation across languages.

1. Subjects in object position in French[1]

It is by now well known that French exhibits a number of constructions in which the subject follows rather than precedes the verb it is a dependent of. Examples of this are given in (1) through (5).[2]

(1) Comment va Madame? “How is Madame doing?”
(2) Voici le texte qu’a écrit Paul. (BG&M) “Here is the text that Paul wrote.”
(3) Sur la place se dresse la cathédrale. (B&G) “On the square stands the cathedral.”
(4) Je voudrais que vienne Marie. (M) “I wished that Marie would come.”
(5) Alors sont entrés trois hommes. (M) “Then came in three men.”

These various cases, however, do not all illustrate the same phenomenon. In this paper we will restrict our attention to what has been called Stylistic Inversion (Kayne, 1973), which we will contrast with what Marandin (2001) has called Unaccusative Inversion. Stylistic Inversion is illustrated above with the examples (1) and (2) and arguably (3) (see Bonami, Godard, and Marandin, 1999).

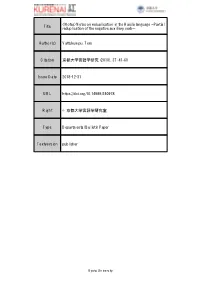

Title <Notes>Notes on Reduplication in the Haisla Language --Partial

Title: <Notes> Notes on reduplication in the Haisla language: Partial reduplication of the negative auxiliary verb
Author(s): Vattukumpu, Tero
Citation: 京都大学言語学研究 (2018), 37: 41-60
Issue Date: 2018-12-31
URL: https://doi.org/10.14989/240978
Right: © 京都大学言語学研究室
Type: Departmental Bulletin Paper
Textversion: publisher
Kyoto University

京都大学言語学研究 (Kyoto University Linguistic Research) 37 (2018), 41–60

Notes on reduplication in the Haisla language: Partial reduplication of the negative auxiliary verb. Tero Vattukumpu.

Abstract: In this paper I will point out that, according to my data, a plural form of the negative auxiliary verb formed by means of partial reduplication exists in the Haisla language, contrary to a description of the topic in a previous study. The plural number of the subject can, and in many cases must, be indicated by using a plural form of at least one of the components of the predicate, i.e. either an auxiliary verb (if there is one), the semantic head of the predicate or both. Therefore, I have examined which combinations of the singular and plural forms of the negative auxiliary verb and different semantic heads of the predicate are judged to be acceptable by native speakers of Haisla. My data suggests that, at least at this point, it seems to be impossible to make convincing generalizations about any patterns according to which the acceptability of different combinations could be determined.

Keywords: Haisla, partial reduplication, negative auxiliary verb, plural, root extension

1 Introduction

The purpose of this paper is to introduce some preliminary notes about reduplication in the Haisla language from a morphosyntactic point of view as a first step towards a better understanding and a more comprehensive analysis of all the possible patterns of reduplication in the language.

Style and Sociolinguistic Variation Edited by Penelope Eckert and John R

The study of sociolinguistic variation examines the relation between social identity and ways of speaking. The analysis of style in speech is central to this field because it varies not only between speakers, but in individual speakers as they move from one style to another. Studying these variations in language not only reveals a great deal about speakers’ strategies with respect to variables such as social class, gender, ethnicity and age, it also affords us the opportunity to observe linguistic change in progress.

The volume brings together a team of leading experts from a range of disciplines to create a broad perspective on the study of style and variation. Beginning with an introduction to the broad theoretical issues, the book goes on to discuss key approaches to stylistic variation in spoken language, including such issues as attention paid to speech, audience design, identity construction, the corpus study of register, genre, distinctiveness and the anthropological study of style.

Rigorous and engaging, this book will become the standard work on stylistic variation. It will be welcomed by students and academics in sociolinguistics, English language, dialectology, anthropology and sociology.

PENELOPE ECKERT is Professor of Linguistics, Courtesy Professor in Anthropology, and co-Chair of the Program in Feminist Studies at Stanford University. She has published work in pure ethnography as well as ethnographically based sociolinguistics including Jocks and Burnouts: Social Identity in the High School (1989) and Variation as Social Practices (2000).

JOHN R. RICKFORD is the Martin Luther King, Jr., Centennial Professor of Linguistics at Stanford University. He is also Courtesy Professor in Education, and Director of the Program in African and African American Studies.