Secure Multi-User Content Sharing for Augmented Reality Applications

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration

ORIGINAL RESEARCH published: 14 June 2021 doi: 10.3389/frvir.2021.697367 Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration Allison Jing*, Kieran May, Gun Lee and Mark Billinghurst Empathic Computing Lab, Australian Research Centre for Interactive and Virtual Environment, STEM, The University of South Australia, Mawson Lakes, SA, Australia Edited by: Parinya Punpongsanon, Osaka University, Japan Reviewed by: Naoya Isoyama, Nara Institute of Science and Technology (NAIST), Japan; Thuong Hoang, Deakin University, Australia Gaze is one of the predominant communication cues and can provide valuable implicit information such as intention or focus when performing collaborative tasks. However, little research has been done on how virtual gaze cues combining spatial and temporal characteristics impact real-life physical tasks during face to face collaboration. In this study, we explore the effect of showing joint gaze interaction in an Augmented Reality (AR) interface by evaluating three bi-directional collaborative (BDC) gaze visualisations with three levels of gaze behaviours. Using three independent tasks, we found that all bi-directional collaborative BDC visualisations are rated significantly better at representing joint attention and user intention compared to a non-collaborative (NC) condition, and hence are considered more engaging. The Laser Eye condition, spatially embodied with gaze direction, is perceived significantly more effective as it encourages mutual gaze awareness with a relatively low mental effort in a less constrained workspace. In addition, by offering additional virtual representation that compensates for verbal descriptions and hand pointing, BDC gaze visualisations can encourage more conscious use of gaze cues coupled with deictic references during co-located symmetric collaboration.

A Conceptual Framework to Compare Two Paradigms

A conceptual framework to compare two paradigms of Augmented and Mixed Reality experiences Laura Malinverni, Cristina Valero, Marie-Monique Schaper, Narcis Pares Universitat Pompeu Fabra Barcelona, Spain ABSTRACT Augmented and Mixed Reality mobile technologies are becoming an emerging trend in the development of play and learning experiences for children. This tendency requires a deeper understanding of their specificities to adequately inform design. To this end, we ran a study with 36 elementary school children to compare two AR/MR interaction paradigms for mobile technologies: (1) the consolidated “Window-on-the-World” (WoW), and (2) the emerging “World-as-Support” (WaS). By analyzing children's understanding and use of space while playing an AR/MR mystery game, and analyzing the collaboration that emerges among them, we show that the two paradigms scaffold children’s attention differently during the [...] invisible visible and adding new layers of meaning to our physical world. These features, in the context of Child-Computer Interaction, open the path for emerging possibilities related to creating novel relations of meaning between the digital and the physical, between what can be directly seen and what needs to be discovered, between our physical surroundings and our imagination. This potential has attracted both research and industry, which are increasingly designing mobile-based AR/MR technologies to support a wide variety of play and learning experiences. This emerging trend has shaped AR/MR as one of the leading emerging technologies of 2017 [16], hence requiring practitioners to face novel ways of thinking about mobile technologies and designing for them.

Violent Victimization in Cyberspace: an Analysis of Place, Conduct, Perception, and Law

Violent Victimization in Cyberspace: An Analysis of Place, Conduct, Perception, and Law by Hilary Kim Morden B.A. (Hons), University of the Fraser Valley, 2010 Thesis submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts IN THE SCHOOL OF CRIMINOLOGY FACULTY OF ARTS AND SOCIAL SCIENCES © Hilary Kim Morden 2012 SIMON FRASER UNIVERSITY Summer 2012 All rights reserved. However, in accordance with the Copyright Act of Canada, this work may be reproduced, without authorization, under the conditions for “Fair Dealing.” Therefore, limited reproduction of this work for the purposes of private study, research, criticism, review and news reporting is likely to be in accordance with the law, particularly if cited appropriately. Approval Name: Hilary Kim Morden Degree: Master of Arts (School of Criminology) Title of Thesis: Violent Victimization in Cyberspace: An Analysis of Place, Conduct, Perception, and Law Examining Committee: Chair: Dr. William Glackman, Associate Director Graduate Programs Dr. Brian Burtch Senior Supervisor Professor, School of Criminology Dr. Sara Smyth Supervisor Assistant Professor, School of Criminology Dr. Gregory Urbas External Examiner Senior Lecturer, Department of Law Australian National University Date Defended/Approved: July 13, 2012 Partial Copyright Licence Abstract The anonymity, affordability, and accessibility of the Internet can shelter individuals who perpetrate violent acts online. In Canada, some of these acts are prosecuted under existing criminal law statutes (e.g., cyber-stalking, under harassment, s. 264, and cyber-bullying, under intimidation, s. 423[1]). However, it is unclear whether victims of other online behaviours such as cyber-rape and organized griefing have any established legal recourse.

Advanced Displays and Techniques for Telepresence

Advanced Displays and Techniques for Telepresence Henry Fuchs UNC Chapel Hill ARPA-E Telepresence Workshop April 26, 2016 Surgical Consultation Telepresence, Andrei State (UNC) 1994 COAUTHORS for papers in this talk: Young-Woon Cha, Rohan Chabra, Nate Dierk, Mingsong Dou, Wil Garrett, Gentaro Hirota, Kurtis Keller, Doug Lanman, Mark Livingston, David Luebke, Andrew Maimone, Federico Menozzi, Etta Pisano, MD, Kishore Rathinavel, Andrei State, Eric Wallen, MD, Greg Welch, Mary Whitton, Xubo Yang Support gratefully acknowledged: CISCO, Microsoft Research, NIH, NVIDIA, NSF Awards IIS-CHS-1423059, HCC-CGV-1319567, II-1405847 (“Seeing the Future: Ubiquitous Computing in EyeGlasses”), and the BeingThere Int’l Research Centre, a collaboration of ETH Zurich, NTU Singapore, UNC Chapel Hill and Singapore National Research Foundation, Media Development Authority, and Interactive Digital Media Program Office. Video Teleconferencing vs Telepresence • Video Teleconferencing – Conventional 2D video capture and display – Single camera, single display at each site is common configuration for Skype, Google Hangout, etc. • Telepresence – Provides illusion of presence in the remote or combined local&remote space – Provides proper stereo views from the precise location of the user – Stereo views change appropriately as user moves – Provides proper eye contact and eye gaze cues among all the participants Cisco TelePresence 3000 Three distant rooms combined into a single space with wall-sized 3D displays Telepresence Component Technologies • Acquisition (cameras) • 3D reconstruction Cisco

New Realities: Risks in the Virtual World

Emerging Risk Report 2018 Technology New realities: Risks in the virtual world Lloyd’s disclaimer This report has been co-produced by Lloyd's and Amelia Kallman for general information purposes only. While care has been taken in gathering the data and preparing the report Lloyd's does not make any representations or warranties as to its accuracy or completeness and expressly excludes to the maximum extent permitted by law all those that might otherwise be implied. Lloyd's accepts no responsibility or liability for any loss or damage of any nature occasioned to any person as a result of acting or refraining from acting as a result of, or in reliance on, any statement, fact, figure or expression of opinion or belief contained in this report. This report does not constitute advice of any kind. © Lloyd’s 2018 All rights reserved About the author Amelia Kallman is a leading London futurist, speaker, and author. As an innovation and technology communicator, Amelia regularly writes, consults, and speaks on the impact of new technologies on the future of business and our lives. She is an expert on the emerging risks of The New Realities (VR-AR-MR), and also specialises in the future of retail. Coming from a theatrical background, Amelia started her tech career by chance in 2013 at a creative technology agency where she worked her way up to become their Global Head of Innovation. She opened, operated and curated innovation lounges in both London and Dubai, working with start-ups and corporate clients to develop connections and future-proof strategies. Today she continues to discover and bring attention to cutting-edge start-ups, regularly curating events for WIRED UK.

IO2 A2 Analysis and Synthesis of the Literature on Learning Design

Using Mobile Augmented Reality Games to develop key competencies through learning about sustainable development IO2_A2 Analysis and synthesis of the literature on learning design frameworks and guidelines of MARG University of Groningen Team 1 WHAT IS AUGMENTED REALITY? The ways in which the term “Augmented Reality” (AR) has been defined differ among researchers in computer sciences and educational technology. A commonly accepted definition of augmented reality defines it as a system that has three main features: a) it combines real and virtual objects; b) it provides opportunities for real-time interaction; and, c) it provides accurate registration of three-dimensional virtual and real objects (Azuma, 1997). Klopfer and Squire (2008) define augmented reality as “a situation in which a real world context is dynamically overlaid with coherent location or context sensitive virtual information” (p. 205). According to Carmigniani and Furht (2011), augmented reality is defined as a direct or indirect real-time view of the actual natural environment which is enhanced by adding virtual information created by computer. Other researchers, such as Milgram, Takemura, Utsumi and Kishino (1994), argued that augmented reality can be considered to lie on a “Reality-Virtuality Continuum” between the real environment and virtual environment (see Figure 1). The continuum comprises Augmented Reality (AR) and Augmented Virtuality (AV) in between, where AR is closer to the real world and AV is closer to the virtual environment. Figure 1. Reality-Virtuality Continuum (Milgram et al., 1994, p. 283). Wu, Lee, Chang, and Liang (2013) discussed how the notion of augmented reality is not limited to any type of technology and could be reconsidered from a broad view nowadays.

A Sensor-Based Interactive Digital Installation System

A SENSOR-BASED INTERACTIVE DIGITAL INSTALLATION SYSTEM FOR VIRTUAL PAINTING USING MAX/MSP/JITTER A Thesis by ANNA GRACIELA ARENAS Submitted to the Office of Graduate Studies of Texas A&M University in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE December 2008 Major Subject: Visualization Sciences Approved by: Chair of Committee, Karen Hillier Committee Members, Carol Lafayette Jeff Morris Yauger Williams Head of Department, Tim McLaughlin ABSTRACT A Sensor-Based Interactive Digital Installation System for Virtual Painting Using MAX/MSP/Jitter. (December 2008) Anna Graciela Arenas, B.S., Texas A&M University Chair of Advisory Committee: Prof. Karen Hillier Interactive art is rapidly becoming a part of cosmopolitan society through public displays, video games, and art exhibits. It is a means of exploring the connections between our physical bodies and the virtual world. However, a sense of disconnection often exists between the users and technology because users are driving actions within an environment from which they are physically separated. This research involves the creation of a custom interactive, immersive, and real-time video-based mark-making installation as public art. Using a variety of input devices including video cameras, sensors, and special lighting, a painterly mark-making experience is contemporized, enabling the participant to immerse himself in a world he helps create.

Co-Creation of Public Open Places. Practice - Reflection Learning Co-Creation of Public Open Places

Series CULTURE & TERRITORY 04 CO-CREATION OF PUBLIC OPEN PLACES. Carlos SMANIOTTO COSTA, Universidade Lusófona - Interdisciplinary Research Centre for Education and Development / CeiED, Lisbon, Portugal. Monika MAČIULIENÈ, Mykolas Romeris University, Social Technologies LAB, Vilnius, Lithuania. Marluci MENEZES, Laboratório Nacional de Engenharia Civil - LNEC, Lisbon, Portugal. C3Places - using ICT for Co-Creation of inclusive public Places is a project funded under the scheme of the ERA-NET Cofund Smart Urban Futures / Call joint research programme (ENSUF), JPI Urban Europe, https://jpi-urbaneurope.eu/project/c3places. C3Places aims at increasing the quality of public open spaces (e.g. squares, parks, green spaces) as community service, reflecting the needs of different social groups through ICTs. The notion of C3Places is based on the understanding that public open spaces have many different forms and features, and collectively add crucial value to the experience and liveability of urban areas. Understanding public open spaces can be done from a variety of perspectives. For simplicity’s sake, and because it best captures what people care most about, C3Places considers the “public” dimension to be a crucial feature of an urban space. Public spaces are critical for cultural identity, as they offer places for interactions among generations and ethnicities. Even in the digital era, people still need contact with nature and other people to develop different life skills, values and attitudes, to be [...] Other titles in the series: Zammit, Antoine & Kenna, Therese (Eds.) (2017). Enhancing Places through Technology, ISBN 978-989-757-055-1. Smaniotto Costa, Carlos & Ioannidis, Konstantinos (2017). The Making of the Mediated Public Space. Essays on Emerging Urban Phenomena, ISBN

Mobile Edutainment in the City

IADIS International Conference Mobile Learning 2011 MOBILE EDUTAINMENT IN THE CITY Alexiei Dingli and Dylan Seychell University of Malta ABSTRACT Touring around a City can sometimes be frustrating rather than an enjoyable experience. The scope of the Virtual Mobile City Guide (VMCG) is to create a mobile application which aims to provide the user with tools normally used by tourists while travelling and provides them with factual information about the city. The VMCG is a mash up of different APIs implemented in the Android platform which together with an information infrastructure provides the user with information about different attractions and guidance around the city in question. While providing the user with the traditional map view by making use of the Google maps API, the VMCG also employs the Wikitude® API to provide the user with an innovative approach to navigating through cities. This view uses augmented reality to indicate the location of attractions and displays information in the same augmented reality. The VMCG also has a built in recommendation engine which suggests attractions to the user depending on the attractions which the user is visiting during the tour and tailor information in order to cater for a learning experience while the user travels around the city in question. KEYWORDS Mobile Technology, Tourism, Android, Location Based Service, Augmented Reality, Educational 1. INTRODUCTION A visitor in a city engaged in a learning experience requires different forms of guidance and assistance, whether or not the city is known to the person and according to the learning quest undertaken.

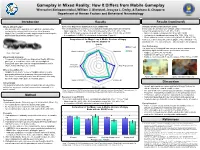

Gameplay in Mixed Reality: How It Differs from Mobile Gameplay

Gameplay in Mixed Reality: How It Differs from Mobile Gameplay Weerachet Sinlapanuntakul, William J. Shelstad, Jessyca L. Derby, & Barbara S. Chaparro Department of Human Factors and Behavioral Neurobiology Introduction What is Mixed Reality? • Mixed Reality (MR) expands a user’s physical environment by overlaying the real world with interactive virtual elements • Magic Leap 1 is an MR wearable spatial computer that brings the physical and digital worlds together (Figure 1). Results Game User Experience Satisfaction Scale (GUESS-18) The overall satisfaction scores (out of 56) indicated a statistically significant difference between: • Magic Leap (M = 42.31, SD = 5.61) and mobile device (M = 38.31, SD = 5.49) • With α = 0.05, Magic Leap was rated with higher satisfaction, t(15) = 3.09, p = 0.007. • A comparison of GUESS-18 subscales is shown below (Figure 2). [Figure: Comparison of the Magic Leap & Mobile Versions of Angry Birds with the GUESS-18; series: Magic Leap, Mobile; subscales shown include Usability, Visual Aesthetics, Narratives] Results (continued) Simulator Sickness Questionnaire (SSQ) In this study, the 20-minute use of Magic Leap resulted in concerning symptoms (M = 16.83, SD = 15.51): • Nausea is considered minimal (M = 9.54, SD = 12.07) • Oculomotor is considered concerning (M = 17.53, SD = 16.31) • Disorientation is considered concerning (M = 16.53, SD = 17.02) Total scores can be associated with negligible (< 5), minimal (5 – 10), significant (10 – 15), concerning (15 – 20), and bad (> 20) symptoms. User Performance The stars (out of 3) collected from each level played represented a statistically significant difference in performance outcomes: Figure 1.

Characterization of Multi-User Augmented Reality Over Cellular Networks

Characterization of Multi-User Augmented Reality over Cellular Networks Kittipat Apicharttrisorn∗, Bharath Balasubramanian†, Jiasi Chen∗, Rajarajan Sivaraj‡, Yi-Zhen Tsai∗, Rittwik Jana†, Srikanth Krishnamurthy∗, Tuyen Tran†, and Yu Zhou† ∗University of California, Riverside, California, USA †AT&T Labs Research, Bedminster, New Jersey, USA ‡AT&T Labs Research, San Ramon, California, USA Abstract—Augmented reality (AR) apps where multiple users interact within the same physical space are gaining in popularity (e.g., shared AR mode in Pokemon Go, virtual graffiti in Google’s Just a Line). However, multi-user AR apps running over the cellular network can experience very high end-to-end latencies (measured at 12.5 s median on a public LTE network). To characterize and understand the root causes of this problem, we perform a first-of-its-kind measurement study on both public LTE and industry LTE testbed for two popular multi-user AR applications, yielding several insights: (1) The radio access network (RAN) accounts for a significant fraction [...] For example, Fig. 1 shows the CDF of the AR latencies of the Google CloudAnchor AR app [3], running on a 4G LTE production network of a Tier-I US cellular carrier at different locations and times of day (details in §IV). The results show a median 3.9 s and 12.5 s of aggregate radio access [Fig. 1: CDF versus Latency (s); series: End-to-End, Aggregate RAN]

The OpenXR Specification

The OpenXR Specification Copyright (c) 2017-2021, The Khronos Group Inc. Version 1.0.19, Tue, 24 Aug 2021 16:39:15 +0000: from git ref release-1.0.19 commit: 808fdcd5fbf02c9f4b801126489b73d902495ad9 Table of Contents 1. Introduction; 1.1. What is OpenXR?; 1.2. The Programmer’s View of OpenXR; 1.3. The Implementor’s View of OpenXR; 1.4. Our View of OpenXR; 1.5. Filing Bug Reports; 1.6. Document Conventions; 2. Fundamentals; 2.1. API Version Numbers and Semantics; 2.2. String Encoding; 2.3. Threading Behavior; 2.4. Multiprocessing Behavior; 2.5. Runtime; 2.6. Extensions; 2.7. API Layers; 2.8. Return Codes; 2.9. Handles; 2.10. Object Handle Types; 2.11. Buffer Size Parameters; 2.12. Time; 2.13. Duration; 2.14. Prediction Time Limits; 2.15. Colors; 2.16. Coordinate System; 2.17. Common Object Types; 2.18. Angles; 2.19. Boolean Values; 2.20. Events; 2.21. System resource lifetime; 3. API Initialization; 3.1. Exported Functions; 3.2. Function Pointers; 4. Instance; 4.1. API Layers and Extensions; 4.2. Instance Lifecycle; 4.3. Instance Information; 4.4. Platform-Specific Instance Creation; 4.5. Instance Enumerated Type String Functions; 5. System; 5.1. Form Factors; 5.2. Getting the XrSystemId; 5.3. System Properties; 6. Path Tree and Semantic Paths.